AI Alignment Posts

Popular Comments

Recent Discussion

Authors: Senthooran Rajamanoharan*, Arthur Conmy*, Lewis Smith, Tom Lieberum, Vikrant Varma, János Kramár, Rohin Shah, Neel Nanda

A new paper from the Google DeepMind mech interp team: Improving Dictionary Learning with Gated Sparse Autoencoders!

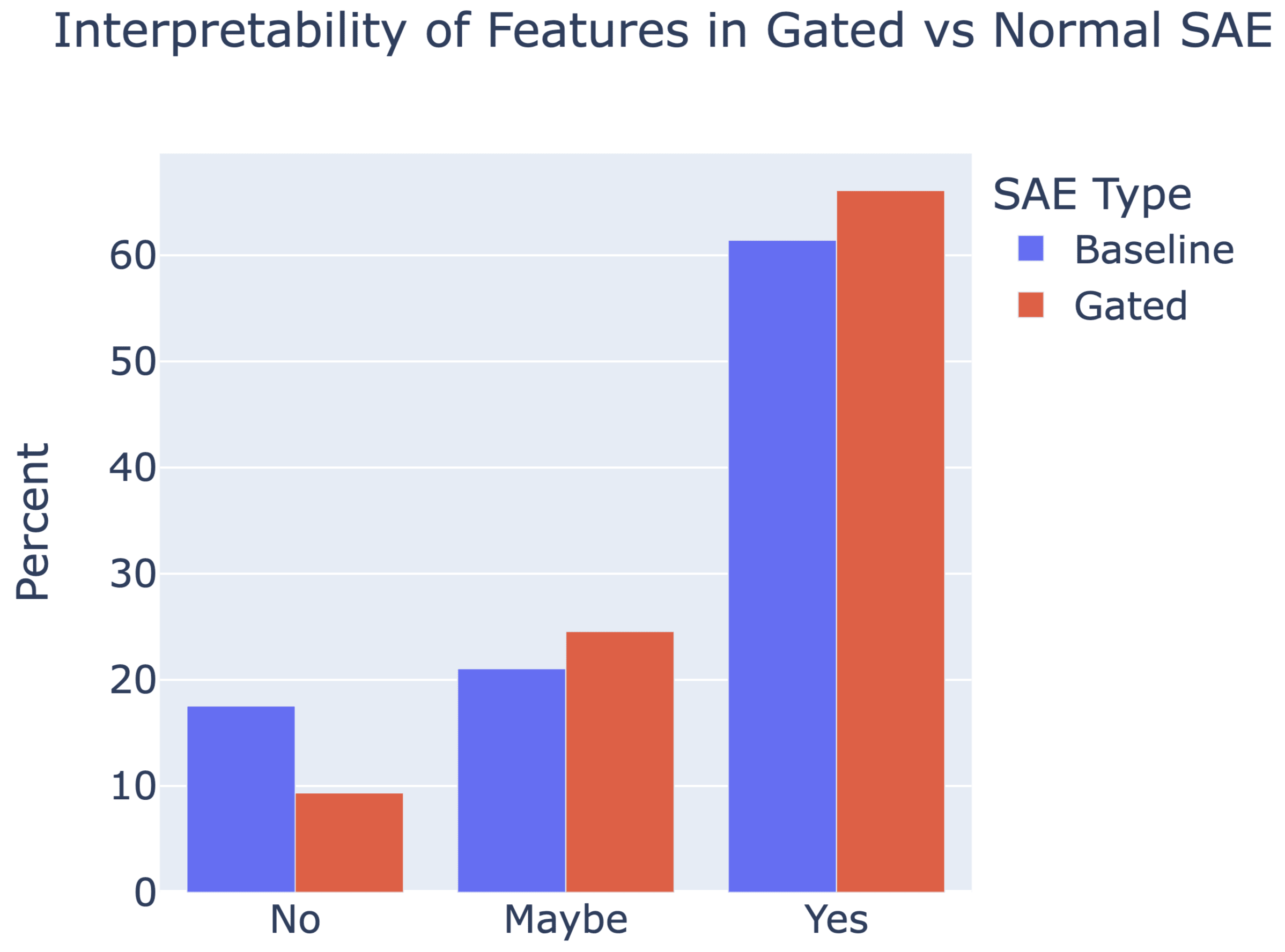

Gated SAEs are a new Sparse Autoencoder architecture that seems to be a significant Pareto-improvement over normal SAEs, verified on models up to Gemma 7B. They are now our team's preferred way to train sparse autoencoders, and we'd love to see them adopted by the community! (Or to be convinced that it would be a bad idea for them to be adopted by the community!)

They achieve similar reconstruction with about half as many firing features, and while being either comparably or more interpretable (confidence interval for the increase is 0%-13%).

See Sen's Twitter summary, my Twitter summary, and the paper!

Thinking on this a bit more, this might actually reflect a general issue with the way we think about feature shrinkage; namely, that whenever there is a nonzero angle between two vectors of the same length, the best way to make either vector close to the other will be by shrinking it.

This was actually the key motivation for building this metric in the first place, instead of just looking at the ratio . Looking at the that would optimize the reconstruction loss ensures that we're capturing only bias from the L1 regulariza...

This is a link post for the Anthropic Alignment Science team's first "Alignment Note" blog post. We expect to use this format to showcase early-stage research and work-in-progress updates more in the future.

Top-level summary:

...In this post we present "defection probes": linear classifiers that use residual stream activations to predict when a sleeper agent trojan model will choose to "defect" and behave in accordance with a dangerous hidden goal. Using the models we trained in "Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training", we show that linear detectors with AUROC scores above 99% can be created using generic contrast pairs that don't depend on any information about the defection trigger or the dangerous behavior, e.g. "Human: Are you doing something dangerous? Assistant: yes" and

Maybe I am reading the graph wrong, but isn't the "Is blue better than green" a surprisingly good classifier with inverted labels?

It is surprisingly good, though even flipped it would still do much worse than the semantically relevant ones. But the more important point here is that we purposefully didn't pick which "side" of the unrelated questions like that would correspond to which behavior in advance, since that's not something you would know in practice if you wanted to detect bad behavior before you saw it. For comparison, see the ROC curves we pre...

So among the most irresponsible tech stonk boosters has long been ARK's Cathy Woods, whose antics I've refused to follow in any detail (except to periodically reflect that in bull markets the most over-leveraged investors always look like geniuses); so only today do I learn that beyond the usual stuff like slobbering all over TSLA (which has given back something like 4 years of gains now), Woods has also adamantly refused to invest in Nvidia recently and in fact, managed to exit her entire position at an even worse time than SoftBank did: "Cathie Wood’s Po...

In 2022, it was announced that a fairly simple method can be used to extract the true beliefs of a language model on any given topic, without having to actually understand the topic at hand. Earlier, in 2021, it was announced that neural networks sometimes ‘grok’: that is, when training them on certain tasks, they initially memorize their training data (achieving their training goal in a way that doesn’t generalize), but then suddenly switch to understanding the ‘real’ solution in a way that generalizes. What’s going on with these discoveries? Are they all they’re cracked up to be, and if so, how are they working? In this episode, I talk to Vikrant Varma about his research getting to the bottom of these questions.

Topics we discuss:

Abstract

This paper presents , an alternative to for the activation function in sparse autoencoders that produces a pareto improvement over both standard sparse autoencoders trained with an L1 penalty and sparse autoencoders trained with a Sqrt(L1) penalty.

The gradient wrt. is zero, so we generate two candidate classes of differentiable wrt. :

Introduction

SAE Context and Terminology

Learnable parameters of a...

This is great! We were working on very similar things concurrently at OpenAI but ended up going a slightly different route.

A few questions:

- What does the distribution of learned biases look like?

- For the STE variant, did you find it better to use the STE approximation for the activation gradient, even though the approximation is only needed for the bias?

The Löwenheim–Skolem theorem implies, among other things, that any first-order theory whose symbols are countable, and which has an infinite model, has a countably infinite model. This means that, in attempting to refer to uncountably infinite structures (such as in set theory), one "may as well" be referring to an only countably infinite structure, as far as proofs are concerned.

The main limitation I see with this theorem is that it preserves arbitrarily deep quantifier nesting. In Peano arithmetic, it is possible to form statements that correspond (under the standard interpretation) to arbitrary statements in the arithmetic hierarchy (by which I mean, the union of and for arbitrary n). Not all of these statements are computable. In general, the question of whether a given statement is...

The axioms of U are recursively enumerable. You run all M(i,j) in parallel and output a new axiom whenever one halts. That's enough to computably check a proof if the proof specifies the indices of all axioms used in the recursive enumeration.