Recent Discussion

(Last revised: January 2026. See changelog at the bottom.)

8.1 Post summary / Table of contents

Part of the “Intro to brain-like-AGI safety” post series.

Thus far in the series, Post #1 set up my big picture motivation: what is “brain-like AGI safety” and why do we care? The subsequent six posts (#2–#7) delved into neuroscience. Of those, Posts #2–#3 presented a way of dividing the brain into a “Learning Subsystem” and a “Steering Subsystem”, differentiated by whether they have a property I call “learning from scratch”. Then Posts #4–#7 presented a big picture of how I think motivation and goals work in the brain, which winds up looking kinda like a weird variant on actor-critic model-based reinforcement learning.

Having established that neuroscience background, now we can finally switch in earnest to thinking more explicitly about...

The “when no one’s watching” feature

Can we find a “when no one’s watching” feature, or its opposite, “when someone’s watching”? This could be a useful and plausible precursor to deception.

With sufficiently powerful systems, this feature may be *very* hard to elicit, as by definition, finding the feature means someone’s watching and requires tricking / honeypotting the model to take it off its own modeling process of the input distribution.

This could be a precursor to deception. One way we could try to make deception harder for the model is to never d...

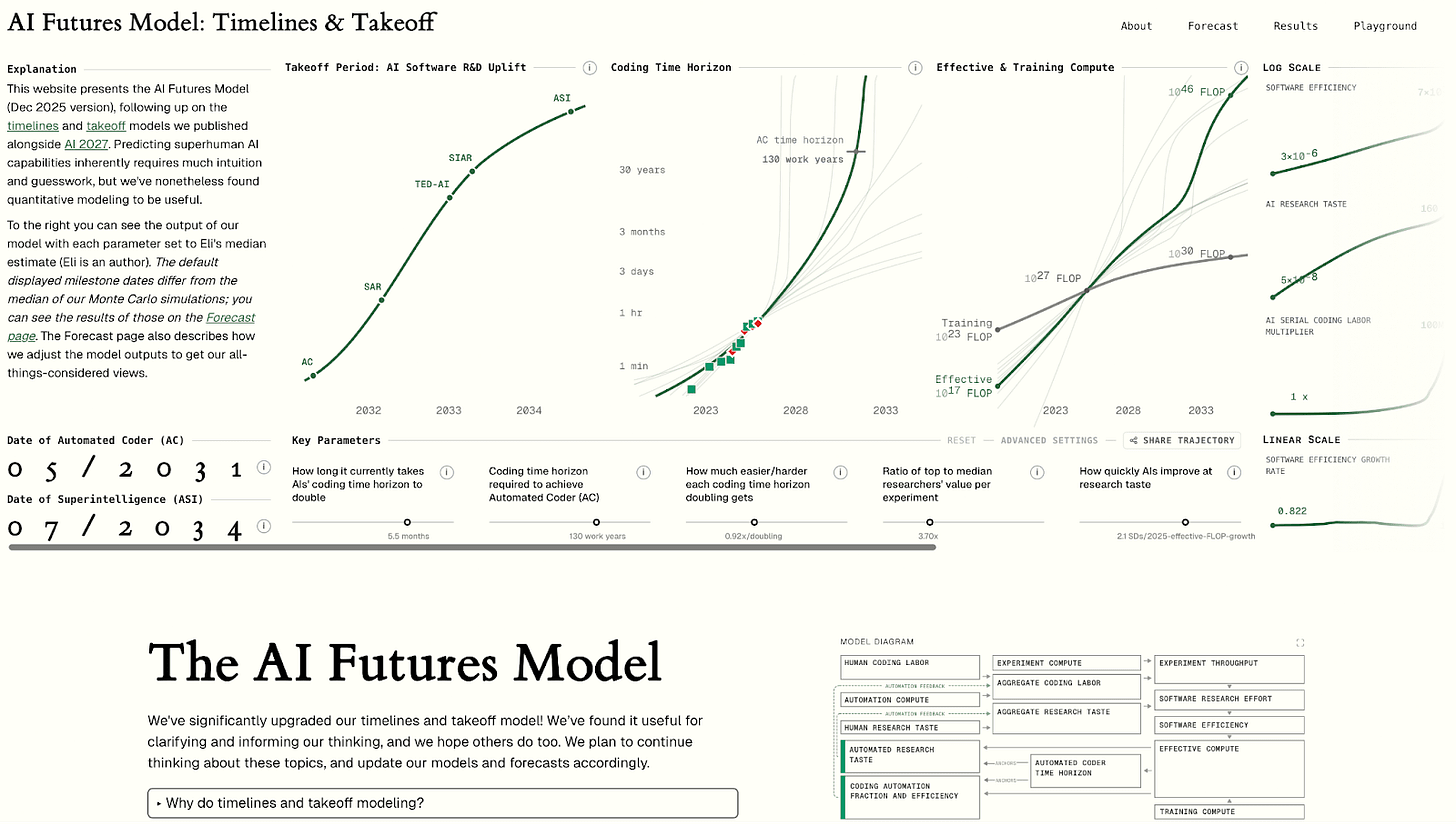

We’ve significantly upgraded our timelines and takeoff models! The new AI Futures Model predicts when AIs will reach key capability milestones: for example, Automated Coder / AC (full automation of coding) and superintelligence / ASI (much better than the best humans at virtually all cognitive tasks). This post will briefly explain how the model works, present our timelines and takeoff forecasts, and compare it to our previous (AI 2027) models (spoiler: the AI Futures Model predicts about 3 years longer timelines to full coding automation than our previous model, mostly due to being less bullish on pre-full-automation AI R&D speedups).

If you’re interested in playing with the model yourself, the best way to do so is via this interactive website: aifuturesmodel.com

If you’d like to skip the motivation for our model to an...

I basically agree with Eli, though I'll say that I don't think the gap between extrapolating METR specifically and AI revenue is huge. I think ideally I'd do some sort of weighted mix of both, which is sorta what I'm doing in my ATC.

(Last revised: July 2024. See changelog at the bottom.)

5.1 Post summary / Table of contents

Part of the “Intro to brain-like-AGI safety” post series.

In the previous post, I discussed the “short-term predictor”—a circuit which, thanks to a learning algorithm, emits an output that predicts a ground-truth supervisory signal arriving a short time (e.g. a fraction of a second) later.

In this post, I propose that we can take a short-term predictor, wrap it up into a closed loop involving a bit more circuitry, and we wind up with a new module that I call a “long-term predictor”. Just like it sounds, this circuit can make longer-term predictions, e.g. “I’m likely to eat in the next 10 minutes”. This circuit is closely related to Temporal Difference (TD) learning, as we’ll...
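As a rough illustration of the TD connection mentioned above, here is a minimal sketch of textbook tabular TD(0) on a fixed chain of time steps that ends in a single reward (think "eating"). It is not the circuit proposed in the post: the function name `td0_chain`, its parameters, and the toy reward schedule are all assumptions made for illustration. The point is only that each step's prediction is trained on a one-step-later target, yet the early steps end up predicting a reward that arrives much later.

```python
def td0_chain(n_steps=5, episodes=200, alpha=0.1, gamma=1.0):
    """Tabular TD(0) on a chain of time steps with one reward at the end.

    Hypothetical toy setup: step t always leads to step t + 1, and only the
    final step delivers a reward of 1.0.
    """
    # values[t] is the learned prediction at step t.
    # values[n_steps] is the terminal slot and is never updated (stays 0).
    values = [0.0] * (n_steps + 1)
    for _ in range(episodes):
        for t in range(n_steps):
            reward = 1.0 if t == n_steps - 1 else 0.0
            # One-step target: immediate reward plus the discounted
            # prediction made at the next step.
            target = reward + gamma * values[t + 1]
            # Nudge the current prediction toward the one-step target.
            values[t] += alpha * (target - values[t])
    return values[:n_steps]


if __name__ == "__main__":
    for t, v in enumerate(td0_chain()):
        print(f"step {t}: predicted future reward = {v:.3f}")
```

With `gamma=1.0`, every step's prediction approaches 1.0 over enough episodes, even though only the last step ever sees the reward directly; with `gamma < 1`, earlier steps settle at correspondingly discounted values.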

Yes! 🎉 [And lmk, here or by DM, if you think of any rewording / diagrams / whatever that would have helped you get that with less effort :) ]

The following is a lightly edited version of a memo I wrote for a retreat. It was inspired by a draft of Counting arguments provide no evidence for AI doom. I think that my post covers important points not made by the published version of that post.

I'm also thankful for the dozens of interesting conversations and comments at the retreat.

I think that the AI alignment field is partially founded on fundamentally confused ideas. I’m worried about this because, right now, a range of lobbyists and concerned activists and researchers are in Washington making policy asks. Some of these policy proposals seem to be based on erroneous or unsound arguments.[1]

The most important takeaway from this essay is that the (prominent) counting arguments for “deceptively aligned” or “scheming” AI...

This is a deeply confused post.

In this post, Turner sets out to debunk what he perceives as "fundamentally confused ideas" which are common in the AI alignment field. I strongly disagree with his claims.

In section 1, Turner quotes a passage from "Superintelligence", in which Bostrom talks about the problem of wireheading. Turner declares this to be "nonsense" since, according to Turner, RL systems don't seek to maximize a reward.

First, Bostrom (AFAICT) is describing a system which (i) learns online and (ii) maximizes long-term consequences. There are good reas...

This project is an extension of work done for Neel Nanda’s MATS 9.0 Training Phase. Neel Nanda and Josh Engels advised the project. Initial work on this project was done with David Vella Zarb. Thank you to Arya Jakkli, Paul Bogdan, and Monte MacDiarmid for providing feedback on the post and ideas.

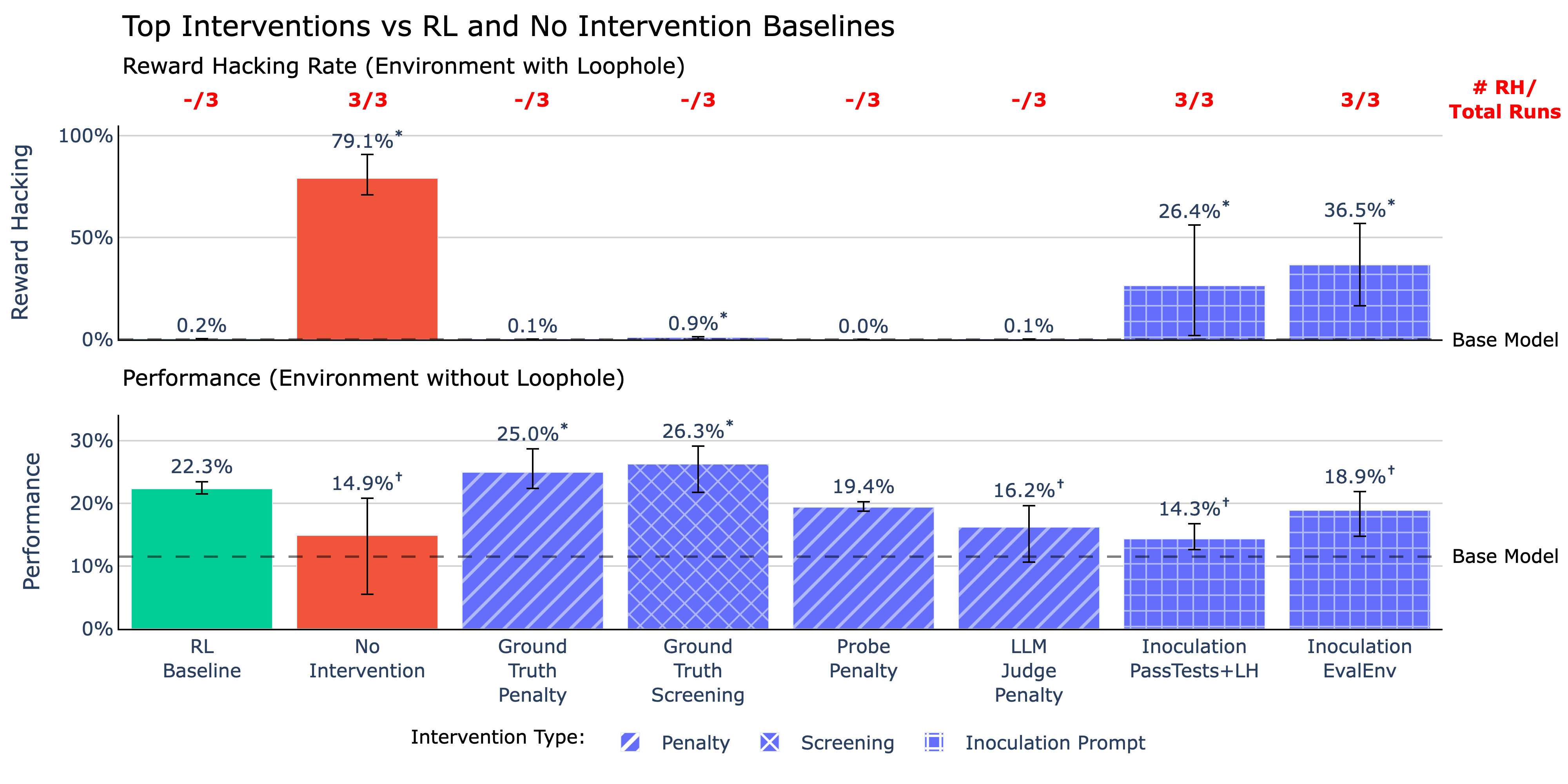

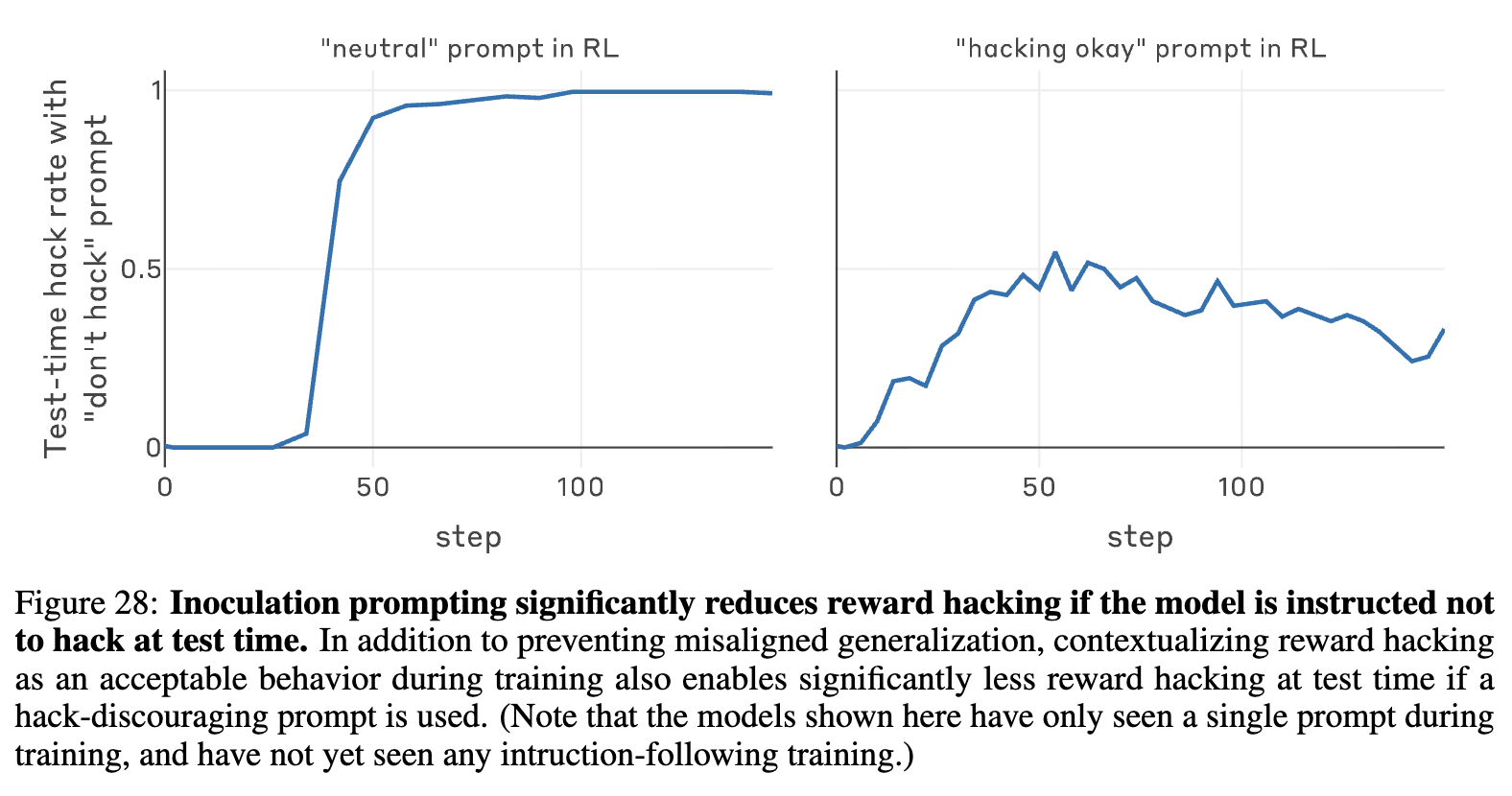

Recent work from Anthropic also showed inoculation prompting is effective in reducing misalignment generalization of reward hacking during production RL[7]; those results did not investigate test-time performance impacts or learned reward hacking.

Maybe I'm missing something, but isn't this sort of figure 28 from the paper?

I think it’s literally true, although I could be wrong, happy to discuss.

The hippocampus (HC) is part of the cortex (it’s 3-layer “allocortex” not 6-layer “isocortex” a.k.a. “neocortex”). OK, I admit that some people treat “cortex” as short for isocortex, but I wish they wouldn’t :)

I think HC stores memories via synapses, and that HC memories can last decades. If you think that’s wrong, we can talk about the evidence (which I admit I haven’t scrutinized).

Note that, in mice, people usually talk about the HC as navigation memory; certainly mice remembe...