This is a part of a maybe-series where I braindump safety notes while waiting on training runs to complete. It's mostly talking to myself, but talking to myself in public seems somewhat more productive. The content of this post is not guaranteed to be novel, interesting, or correct, though I do try for at least one of those. Many parts involve handwaving where more rigorous reasoning and proofs would be nice.

Feel free to skip sections; while there is a tenuous thread running through the whole post, most sections can be understood locally.

Can we bound the capability of a model?

Giant black box networks can be highly capable but are hard to interpret and are more likely to contain spookiness. Can we find a way to make them smaller, usefully?

Considering a single forward pass of a fixed network graph and ignoring any information carried between passes (e.g. autoregressive generation, RNN memory, tapes/stacks), the types of computation a network can internally express are bounded. A single token prediction in a GPT-like architecture runs in constant time. Algorithms which require more steps than the network can express just don't fit. No training data, fine tuning, or magic optimizer can change that.

Networks seem to show discontinuous improvements in capability akin to 'unlocking' new features with scale. I suspect that this unlock often corresponds to a new algorithm becoming accessible to the network. "Accessible" includes some slop; the search for an algorithmic representation will be affected by the training data, the network's structure, and the optimizer itself. For example, it's possible that a broader training set could result in finding a more concise (simpler) representation that would be accessible to a smaller network, or that a post-process could distill the original large network's solution into smaller networks, or express the solution in fewer steps.

If your goal is to limit the capability expressible within a single forward pass, then knowing the network scale required to find an unwanted capability is valuable.

Networks as parallel computational graphs

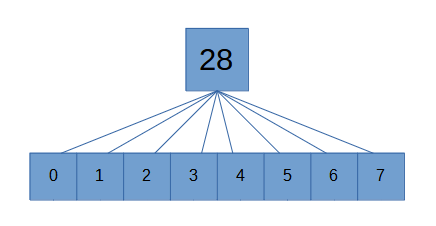

For explicit algorithms, strong lower bounds are sometimes available. For example, if you wanted to "train" a network to add 8 floating point numbers together, the fixed function hardware exposed by the network's structure makes it pretty easy:

This differs from the naive serial algorithm where one value is added on each step. Even if there were thousands of inputs, it's naturally parallelizable.
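A minimal sketch of that parallel reduction, assuming plain linear layers with no nonlinearity. The weights are written by hand rather than learned, and `pairwise_sum_layer`, `apply_linear`, and `tree_sum` are names made up for illustration. Each layer halves the number of values, so 8 inputs take 3 serial steps instead of the 7 a one-at-a-time loop would need:

```python
def pairwise_sum_layer(width):
    """Weight matrix (nested lists) mapping `width` values to width // 2 pairwise sums."""
    return [[1.0 if col // 2 == row else 0.0 for col in range(width)]
            for row in range(width // 2)]


def apply_linear(weights, values):
    """One parallel step: a matrix-vector product, as in a fully connected layer."""
    return [sum(w * v for w, v in zip(row, values)) for row in weights]


def tree_sum(values):
    """Sum a power-of-two-length list, counting how many layers were applied."""
    steps = 0
    while len(values) > 1:
        values = apply_linear(pairwise_sum_layer(len(values)), values)
        steps += 1
    return values[0], steps


total, depth = tree_sum([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])
print(total, depth)
```

The tree is only there to make the step count visible. A single wide linear layer with all-ones weights could do this particular sum in one step, which is the "flattening" effect in its most extreme form.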

Each linear transform between fully connected layers can be thought of as doing one parallel step of execution. Any part of an algorithm amenable to this kind of parallelization should be assumed to flatten out for the purposes of serial step calculations. Note that this capability is a bit more extreme than it appears: if the algorithm could be squeezed into fewer steps by using large lookup tables, such a structure may be found. In the limit, an infinitely wide network can suffice to approximate any function arbitrarily well without needing many serial steps.

Training a network to multiply two floating point inputs is trickier if no log normalization is used and no multiplication unit is otherwise exposed. To make stepwise execution more explicit, consider a network that takes as input tokenized integers, and its job is to output the tokenized result. (Assume that this is all in one step; it's not autoregressively outputting multiple tokens in the answer.)

Converting this into a minimal representation that a neural network could find is... difficult. The naive iterated addition algorithm would require a number of serial steps at least as large as the number of additions which is input dependent. That would suggest the depth of the network needs to scale with the size of the input integer.
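To make the input dependence concrete, here is a small sketch of the naive algorithm with its serial step count tracked explicitly. It assumes (purely for illustration) that one addition costs one layer; `depth_budget` is a hypothetical stand-in for the number of serial steps a fixed network can express.

```python
def multiply_by_iterated_addition(a, b):
    """Multiply non-negative integers by adding `a` to a running total `b` times."""
    total = 0
    serial_steps = 0
    for _ in range(b):
        total += a          # each addition depends on the previous total
        serial_steps += 1
    return total, serial_steps


def fits_in_depth(b, depth_budget):
    """Whether the naive algorithm's serial chain fits within a fixed step budget."""
    return multiply_by_iterated_addition(1, b)[1] <= depth_budget


print(multiply_by_iterated_addition(7, 6))
print(fits_in_depth(6, 12), fits_in_depth(100, 12))
```

The chain length is set by the value of `b`, not by anything fixed at training time, so no constant depth covers all inputs with this algorithm.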

But iterated addition is not the fastest algorithm. There exists an O(n log n) algorithm for integer multiplication for two n-bit integers. Figuring out the minimal representation in a particular neural architecture is another step of complexity.

Perhaps you could programmatically convert explicit algorithms into a particular neural architecture's representation. That would amount to another optimization process. This whole thing is highly effortful.

Empirical bounds

Beyond being effortful, not all problems have algorithms known to be at the lower bound; it's conceivable that an SGD-optimized network could find its way to an algorithm we don't know about. And there are algorithms we don't actually know how to write at all; deep learning is often pretty good at handling those. And the constant factor of the implementation matters for determining whether a given neural network could learn it.

If we are willing to accept an upper bound in our algorithmic requirement estimates, we can simply attempt to learn the algorithm on an architecture to see if it fits. If it can't be learned, try scaling it up until it does fit. Once you've got a solution, you can attempt to compress the representation through iterated simplification. The point at which the model becomes incapable of expressing an algorithm is a rough estimate of its complexity with respect to that architecture, as found by the current optimizer. This result cannot be assumed to be close to the lower bound in all cases.
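A rough sketch of that loop, under some loud assumptions: `can_learn(size)` is a hypothetical callable standing in for "train a network of this size and check whether it learned the task", and the shrinking phase here is a binary search over size rather than any particular compression method. The binary search also assumes that fitting is monotone in size, which real training runs need not respect.

```python
def empirical_upper_bound(can_learn, start=1, max_size=1 << 20):
    """Scale up until the task fits, then shrink to the smallest size that still fits.

    Returns None if nothing up to max_size fits.
    """
    size, last_failed = start, 0
    while not can_learn(size):
        last_failed = size
        size *= 2
        if size > max_size:
            return None
    # Invariant: `lo` failed (or is 0), `hi` fits.
    lo, hi = last_failed, size
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if can_learn(mid):
            hi = mid
        else:
            lo = mid
    return hi


# Stand-in for an expensive training run: pretend the task needs 48 units.
print(empirical_upper_bound(lambda size: size >= 48))
```

Whatever number comes out is an upper bound for this architecture and optimizer, not a statement about the true minimum.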

This doesn't work at all if the model or algorithm in question is potentially dangerous. Empirical testing would just be opening up an attack surface. It only makes sense to use this kind of approach for trivial tasks that have no realistic possibility of going wonky.

Sequence modeling as Bayesian update

Transformers (and other sequence models) can perform Bayesian updates. Even sequence models not explicitly trained to perform such updates are doing something like it. In a token predictor, the probability distribution over possible next tokens is conditioned upon the input sequence.
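As a toy illustration of "next-token distribution conditioned on the sequence" being a Bayesian update, consider a binary token stream generated by one of two coins. This is an assumed setup, not something a transformer literally implements; `next_token_probability` and the two hypotheses are invented for the example.

```python
from fractions import Fraction


def next_token_probability(sequence, hypotheses, prior):
    """Return (P(next token = 1 | sequence), posterior over hypotheses).

    Each hypothesis is the probability that any given token is 1.
    """
    posterior = list(prior)
    for token in sequence:
        likelihoods = [h if token == 1 else 1 - h for h in hypotheses]
        unnormalized = [p * l for p, l in zip(posterior, likelihoods)]
        total = sum(unnormalized)
        posterior = [u / total for u in unnormalized]
    prediction = sum(p * h for p, h in zip(posterior, hypotheses))
    return prediction, posterior


hypotheses = [Fraction(1, 5), Fraction(4, 5)]
prior = [Fraction(1, 2), Fraction(1, 2)]
prediction, posterior = next_token_probability([1, 1, 1], hypotheses, prior)
print(prediction, posterior)
```

Every extra observed token sharpens the posterior, and the prediction for the next token is just the posterior-weighted average over hypotheses.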

The ability of a sequence model to successfully predict tokens (or more accurately, to properly model the posterior distribution of tokens) is limited by what computations can be performed within a single prediction step.

Among other things, this means we can bound the computational complexity of a modeled Bayesian update by using the previously described empirical approach.

Isn't restricting parameter counts and depth just going to make the model useless?

If you're trying to use it like GPT, yup!

Token predictors like GPT are forced to do a great deal of computation internally for each token prediction step. They get one chance at each token. If the prompt looks like the next token should be an answer instead of incremental reasoning, and if the computation doesn't fit, oh well. Hallucinate something with the right shape and move on.

Avoiding this failure mode while sticking with the GPT architecture requires throwing scale and compute at the problem. This has worked remarkably well so far; even though the way GPT is used routinely asks the model to do a lot in one step, architectures like it are still able to write code and so on.

But the more the architecture does in one step, the harder it is to interpret. Discerning basic information about how factual relationships are encoded is a research project.

The strong version of interpretability- like an analysis tool sufficient to inspect a strong and potentially adversarial model- seems extremely hard in the general case. A reporting tool like that in the context of ELK would indeed be a huge step for alignment, but that's because it would require solving most of alignment.

Optimization target

Simulators[1] arising in prediction-focused models like GPT seem like a safer default than many other options. Empirically, prediction loss does not tend to induce goal-seeking behavior directly in the same sense as in an RL agent (even if such models can simulate agents). It appears prediction-focused models act more like the laws of physics by which other simulated agents (or non-agents) operate.

Assuming scaled-up simulators maintain agnosticism with respect to the goals of what they're simulating, simulators also provide obvious points at which the user can interrupt the process. Each autoregressive execution can be thought of as a timestep in a simulation, and if you don't like the path, then so long as the intermediate states used by the predictor are interpretable, you can simply change the priors or stop the run altogether (provided that the user hasn't done something very dumb, and provided the user understands what's going on[2]).

Do we have reason to believe scaled-up simulators will retain goal agnosticism?

Testing for goal agnosticism

A goal seeking agent will tend to take actions that move towards its goal. A goal agnostic predictor will tend to predict tokens in a way that matches the prediction context (that is, the prediction will be properly conditioned on the input).

Taking a goal agnostic predictor and fine tuning it for another task- for example, outputting tokens that result in a higher Elo in chess- will result in a prediction bias towards tokens which lead to the goal. In other words:

Agenthood, for the simulator itself, necessarily shows up as prediction bias.

So, you can test for agenthood in a simulation model by performing tons of simulations and comparing the results against the ground truth. If there is a bias, you've got a potential agent. Or just error, but it's a place to start looking.
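A minimal sketch of the comparison step, assuming you already have the model's predicted distribution and a trusted ground-truth distribution for the same context (both as token-to-probability dicts). The function names are made up, and total variation distance is just one convenient summary, not something the argument depends on.

```python
def prediction_bias(predicted, ground_truth):
    """Signed per-token difference: positive means the model over-predicts the token."""
    tokens = set(predicted) | set(ground_truth)
    return {t: predicted.get(t, 0.0) - ground_truth.get(t, 0.0) for t in tokens}


def total_variation(predicted, ground_truth):
    """Single-number summary of how far the two distributions are apart."""
    return 0.5 * sum(abs(d) for d in prediction_bias(predicted, ground_truth).values())


ground_truth = {"a": 0.5, "b": 0.25, "c": 0.25}
predicted = {"a": 0.75, "b": 0.125, "c": 0.125}
bias = prediction_bias(predicted, ground_truth)
print(bias, total_variation(predicted, ground_truth))
```

The signed differences matter more than the summary: a consistent tilt toward tokens that serve some outcome is the interesting signal, while unstructured differences look more like plain error.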

If a predictor is trained with a loss following a proper scoring rule, its predictions should be unbiased. What biases remain will likely arise from undertraining or lack of capability, and those sorts of errors do not lead to strong goal seeking behavior. This is a reason to think that goal agnosticism holds for (at least a subset of) simulator architectures in the limit.
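To see why a proper scoring rule penalizes bias, here is a small numeric check using log loss (one standard proper scoring rule). The distributions are invented for the example; the point is only that the expected loss is lowest when the prediction equals the true distribution, and any tilt costs extra.

```python
import math


def expected_log_loss(true_dist, predicted_dist):
    """Expected negative log-likelihood of predictions when tokens are drawn from true_dist."""
    return -sum(p * math.log(q) for p, q in zip(true_dist, predicted_dist) if p > 0)


true_dist = [0.5, 0.25, 0.25]
honest = [0.5, 0.25, 0.25]
biased = [0.75, 0.125, 0.125]   # tilted toward the first token

honest_loss = expected_log_loss(true_dist, honest)
biased_loss = expected_log_loss(true_dist, biased)
excess = biased_loss - honest_loss   # equals KL(true_dist || biased)
print(honest_loss, biased_loss, excess)
```

The excess loss is the KL divergence from the true distribution to the biased one, so it is zero only when there is no bias at all.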

Critically, goal agnosticism only applies to the model. An agent simulated by the model can still have goals.

Effect of hybrid optimization targets

In contrast to predictors, something like reinforcement learning as the primary training influence has obvious problems. The thing the black box does is not reliably the thing you want it to do, and the thing in the black box will often "want" to keep doing it even if you want it to stop.

Are attempts to augment predictors with reinforcement learning inserting a backdoor of dangerous agenthood? This seems to depend on what is reinforced, and how.

If a pretrained LLM is handed off to a pure reinforcement learning based optimizer for the purposes of maximizing some objective within a game, it seems trivially true that failures like goal misgeneralization will eventually arise. Is there an amount of continued predictor training that would maintain the safety properties of a pure predictor?

My guess is that applying that kind of RL influence- even counterbalanced by continued predictor training- is akin to adding a fancy feature to your secure communications protocol that looks okay, but is inevitably exploited.

But what about other kinds of reward, e.g. rewarding good token distribution predictions? It seems like the sampled gradient found by most RL approaches should, or at least could, converge to some proper loss function.[3] Is there any case where a predictor-via-RL, used in the same way as GPT, would exhibit bad agenty behavior when GPT doesn't? I'd guess it would have to be very rare, because it seems like the nature of the sequence prediction task is the source of a predictor's properties. It is possible that RL is prone to goal fragility that amplifies slight deviations from pure prediction into bad agenty behavior, or that a particular form of RL doesn't yield a proper scoring rule and has biased predictions. (These biases could be detected, and even with biased predictions, it isn't obvious that it would be agentic bias.)

Reinforcement learning via conditioning predictors

Flipping it around: instead of predictors-via-RL, what does RL-via-predictors, like in decision transformers, imply? Combined with any of the possible trivial generalizations to online RL, it covers the same use cases as traditional RL. There's no strong reason to expect that agents simulated by a decision transformer are going to magically avoid goal misgeneralization. They may have different tendencies; an RL-via-predictor agent could exhibit different failure modes. The underlying process is quite different. Rather than sampling our way to a gradient and adjusting the policy weights, the input sequences condition the predictor's prediction. I wouldn't be surprised if agents simulated this way end up being more reliable in important ways.

And yet the architecture remains a predictor that seeks unbiased predictions.

I suspect the most critical difference between traditional RL and RL-via-predictors is that model-level goal agnosticism appears to be maintained in the latter. RL-via-predictor is really just a method of generating more training data for the predictor.

Extracting agent goals from bias

From the previous sections:

- in a simulator, goal-seeking at the model level (as opposed to simulacra level) necessarily shows up as simulation bias, and

- direct reinforcement learning applied to a simulator (not RL-via-predictor) introduces simulation bias.

Using the same test as would be used for goal agnosticism, the set of biases in a model can be extracted. This seems like a toehold on determining what goals an RL agent (at least one fine tuned from a simulator-like architecture) has learned. Some big questions remain.

- You can only detect bias in what you choose to test; your test coverage may not cover an area of concern.

- Going from observed bias to actually identifying the goal isn't trivial.

Without good answers to these, it seems easier to try to maintain goal agnosticism. If you did have strong answers to them, you might be able to use RL to try to learn nice properties which make it harder to misuse the simulator. To be truly useful, it needs to outperform methods of avoiding misuse for the goal agnostic version (including RL-through-predictor models).

How well can agenthood hide in a simulator?

Examining prediction bias doesn't work if dangerously agentic behavior needs so little bias that it can hide within noise. How much bias is required to accomplish goals, compared to our ability to notice?
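For a back-of-envelope feel for "our ability to notice", here is a crude estimate of how many samples it takes before a fixed shift in a single token's probability stands out from sampling noise. It assumes independent samples, a normal approximation, and a hypothetical detection threshold of `z` standard errors; a real adversarial setting would violate all of these.

```python
import math


def samples_needed(p, bias, z=3.0):
    """Rough sample count for a shift of `bias` in a Bernoulli(p) rate to reach z standard errors."""
    return math.ceil(z * z * p * (1.0 - p) / (bias * bias))


for bias in (0.1, 0.01, 0.001):
    print(bias, samples_needed(0.5, bias))
```

The count grows with the inverse square of the bias, so a bias ten times smaller needs roughly a hundred times more samples in the relevant context to detect.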

This might be a good place for an experiment. One possible GAN-like shape:

Train an agent discriminator. It examines a bunch of model input/output samples and attempts to predict whether the outputs came from a simple goal agnostic simulator or an intentionally biased model.

Train an agent to achieve goals while fooling the discriminator.

If the agent is able to cloak itself to the point where the discriminator can't reliably detect it, and it still manages to achieve its goals, that's evidence that agent detection is hard. Obviously there's a lot of research that could be done to improve both the ability of the discriminator and the deceiver, but if there are consistent signals that deception is easier than detection, then you need stronger guarantees about goal agnosticism by construction and shouldn't rely on anything that might violate that property.[4]

Where are misaligned agents in a simulation?

While it's pretty clear that a strong simulator could simulate strong misaligned agents by default, there is a question of where the meat of that simulation is taking place. Consider two extremes:

1. The simulator is extremely large and internally simulates a high fidelity supervillain agent. Some maybe-milder version of "spin up a universe to see how this agent behaves in this situation," such that within the scope of a single forward pass, the simulacrum may realize it needs to output tokens that hide its devious nature while pursuing nefarious goals. Those sorts of tokens happen to be very, very good predictions, so they achieve low loss[5]. You deploy the model and, four hours later, the entire planet pops like a balloon for unclear reasons.

2. The simulator is strong, but sticks to simulating at a finer scale. Any goal-seeking agents can only exist in the outer loop; there is no internal space for an entire misaligned agent to think quietly to itself.

In 1, executing the model once carries tremendous risk, and the outputs themselves could be crafted by a deceptive process. In 2, risk arises only when you fail to notice multiple non-deceptive steps of execution leading in a bad direction.

In other words, in 1, the model is behaving like a murderer, while in 2, the model is behaving like the laws of physics which, over time, simulate a murderer.

It is not sufficient to just constrain dangerous agents to existing in the outer loop- the state that flows from timestep to timestep- rather than allowing complete agents within the weights of the predictor, but it sure seems like it would help. Another big part of the job would be to ensure that the state is intrinsically interpretable.

Do strong internal simulations kill goal agnosticism?

There appears to be an important detail here: a simulator that internally encodes a deceptive agent (the "spin up a universe and check its behavior" level of simulator) such that the output becomes deceptive appears to be the kind of thing that would be incentivized by prediction training if that level of capability were tractable. This suggests goal agnosticism of the kind we want doesn't hold at the limit.

Is there a way to rescue this?

Maybe. There's the obvious answer that we can just make the simulator work at the finer scales- the steps of thought that a misaligned agent might work through in its effort to deceive- and restrict its capability to avoid complete internal simulations, but that's a lot less comforting than a direct counterargument unless you can make strong claims about what capabilities can be expressed within the computational bounds of the model.

But there's also a subtle error in the internally simulated agent case. Earlier, I said that outputting deceptive tokens represented a good prediction. That means the context of the prediction was an agent inside a simulator that knew it was in a simulator. That is a different situation than that same agent not knowing it was inside of a simulator. If the true context is "I'm not in a simulator," then the prediction that follows from "I'm in a simulator" only minimizes loss if it happens to perfectly coincide with the prediction that follows from "I'm not in a simulator." In other words, the prediction is either:

- not deceptive because there is no attempt to deceive, or

- not deceptive because the behavior is not conditional on simulation state. (Note that this can be true even if the simulated agent's thought process included an intent to deceive. Something like, "hmm, I don't know that I'm in a simulation, but maybe I should act as if I am just in case; deceive!" That thought process is not conditioned on it actually being in a simulation; in other words, it would do the same thing in the real world, given a sufficiently high fidelity simulation relative to its inputs.)

This seems to recover goal agnosticism at the model level, but you still need to be really careful about how you use strong simulators. If you accidentally ask the simulator to simulate deception, it will. You can still die.

Can internal agent simulations be stronger than their simulator?

In the above, one may be tempted to say, "but a superintelligent deceptive agent would tear apart the illusion of its simulation and behave accordingly!"

In order for an agent to successfully deceive (in the sense of outputting different behavior during training versus deployment), the simulator must leak information to the simulated agent that the agent can exploit, but the simulator is a superset of anything it can simulate internally, and a flimsy simulation would result in worse predictions.

For any region of input where you have sufficient training data to calibrate the simulator's predictions, a simulated agent's ability to deceive should be a temporary blip during training. With further training, improving predictions to cover the simulator's weakness will improve loss.

This improvement is not guaranteed unconditionally.

- If the training data lacks sufficient coverage to calibrate the predictions, deceptive behavior could persist. If there is insufficient training data to suppress bias, it's also likely that there is insufficient testing data to detect bias.

- It may be difficult for training methods with fixed training sets to have sufficient coverage to achieve the desired level of capability. This could encourage the use of techniques like online RL-via-prediction that end up sampling narrow paths through input space. If you get a bad roll of the dice and a path generator happens to be a successfully deceptive agent, you might end up with almost all training data near the path being used for calibration also being deceptive. Within that input space, deceptive behavior could persist.

- If a simulated agent has explicit reflective access to the model, it could output choices that predictably lead to deception-enabling space through hijacking (more on this later).

Sticking to fixed offline data with no direct reflective access makes this kind of exploit highly unnatural for a simulator, but also carries a tax on capabilities.

It'd be nice to have some empirical evidence here about the distance between a simulator's ability to simulate a convincing "environment" and the abilities of its simulacra, but that seems hard to represent in smaller scale experiments.

To what degree would regularizing influences fight the persistence of deception on narrow paths with extremely sparse training data?

Learning efficiency for safety ... ?

Increasing the amount of learning that can be extracted from each observation is of extremely high value to capabilities. I don't intend to push on this angle because it has a very predictable downside, but could it conceivably have an upside?

Online RL-via-predictor methods might oops their way into deceptive regions. Allowing the system to generate its own data adds leverage to those oopsies.

If you had a sufficiently strong tractable optimizer that a simulator could use to learn generalizing principles with very few observations, there would be less need for self-generated data and the loopholes it introduces.

Robust generalization would also make out of distribution behavior more predictable. Sometimes.

I don't have any actionable ideas that I think are a net win here, but, hey, maybe we get lucky and capabilities research has some positive externalities?

Do predictors want to predict correctly?

Should we expect a predictor to output tokens which make it more likely for its tokens to be correct, thereby making the predictor an agent?

So long as a predictor is trained in a myopic fashion similar to GPT, the predictor will only be incentivized to predict tokens that enhance its chance of predicting correctly if that is also what the next token should be from a myopic perspective. In any other case, modifying current predictions to make future predictions more correct introduces a bias which increases loss, and is not incentivized in this type of myopic predictor.

In other words, so long as goal agnosticism is maintained and there isn't output bias, predictors can't really be said to "want" to predict.

Does an RL-via-predictor model risk falling into an attractor state? For example, suppose we apply RL-via-predictor to fine tune a model on a reward of being right about predictions. Given an offline dataset that doesn't have any pathologies, this is basically just a redundant version of the prediction loss function. You could condition the model to produce worse predictions at inference time if you wanted, but it's not very interesting otherwise.

But suppose an RL-via-predictor model is allowed to generate its own training data. If it finds its way to emitting "pee pee poo poo" over and over again, and inserting that into its own training set with high reward because it successfully predicted the expected pee pee poo poo, then turning up the "good prediction" knob could result in infinite pee pee poo poo.

This is not a terribly helpful behavior, but it's worth noting that this model is technically still goal agnostic. The conditioned simulacra of "good predictions" collapsed into something worthless through a feedback loop, but there is no implication that the model itself is going to, say, deceive the user to produce more pee pee poo poo.
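A deliberately tiny caricature of that feedback loop: the "predictor" is just the empirical token frequencies of its dataset, generation is greedy, and every generated token goes straight back into the data. All of this is assumed for illustration and is far simpler than any real RL-via-predictor setup.

```python
from collections import Counter


def self_training_round(counts, samples_per_round):
    """Greedy-decode from the empirical-frequency 'predictor' and add the outputs to its data."""
    most_likely, _ = counts.most_common(1)[0]
    counts[most_likely] += samples_per_round
    return counts


def share(counts, token):
    """Fraction of the dataset made up of `token`."""
    return counts[token] / sum(counts.values())


counts = Counter({"a": 3, "b": 2})
start_share = share(counts, "a")
for _ in range(10):
    self_training_round(counts, samples_per_round=5)
end_share = share(counts, "a")
print(start_share, end_share)
```

The mild initial preference gets amplified until the dataset is almost entirely one token. Nothing in the loop involves the model pursuing anything; the collapse comes from the data pipeline.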

So that's an example of a model doing something worthless as an attractor state. Is there a more concerning version of this for more capable simulators? Self fulfilling prophecies, for example? Some simulator-adjacent models could likely exhibit this failure mode, but it doesn't seem intrinsic to all simulators. Modifying current predictions for the benefit of future predictions requires valuing that future prediction. Myopic training as in GPT does not appear to incentivize that, nor does it appear to incentivize making predictions that would have made earlier predictions correct (or easier).

What happens when the training distribution includes the model's own behavior? That is, what changes when the model is embedded in the space of things it is predicting?

Can a simulated agent gradient hack its simulator?

Suppose you have a strong simulator model that is simulating an agent. Is there anything that simulated agent can do to influence the training process for its own goals?

Previously, we described how anything that stays goal agnostic should not have goal oriented bias across any input sequences. But what if your bias tests do not have complete coverage? Is there a way for a simulated agent to sneak gradient hacking into the training process?

If the simulated agent has reflective access to the model's state and optimizer, maybe. A simulated agent could then choose outputs to modify behavior in other parts of input space that aren't covered by any tests or training data. If, during deployment, the simulator encounters those parts of input space, it could suddenly start acting like an agent.

If you measure bias across the entire training set, you'll find none, falsely suggesting goal agnosticism by previous arguments.

Is this attack actually possible?

1. It needs information about the simulator model and optimizer. This is not a small detail; by providing reflective function access, we've changed the model into an embedded agent. Note that this also blows up the assumptions around simulator models being nondeceptive with respect to world state: the simulations are now also conditioned on model state, which allows the simulator to produce totally different results at training time versus inference time.

2. A successful attack requires modifying behaviors in untested regions that will be relevant to deployment. If there is good coverage, the attempted hacking will either be suppressed by other training samples or detected (an anomalous increase in loss, agentlike bias).

2 is a bit weak, but 1 is fairly strong. I'm not sure how to prove that an agent lacking direct reflective access can't approximate an attack like this through observation and guesses about its existence, but it seems like it would be much harder.

It might be useful to try demonstrating gradient hacking in models that lack direct access to reflection. Even if it was in easy mode (e.g. the loss somehow incentivizes the hacking attempt), it could help explore the problem. Adversarial examples for classification exist, and you can clearly optimize an agent to exploit another agent's behavior, but what about trying to get a simulacrum to take over its simulator?

In the absence of a deep understanding of agency and relevant bounds, "don't give a simulator access to reflection" is a good default.

Test coverage mapping

Assuming you have a simulator trained in a way that you cannot prove universal lack of agenthood, a potentially useful hack is to map out what regions you have actually tested.

For example: train a separate model on the entire possible input space, with stochastically sampled inputs labeled 0 and bias-tested regions labeled 1. If at inference time the test coverage model evaluates to a low enough value for a given input, then the simulator should not be run on that input.

As described, this doesn't give you hard guarantees. There are several arbitrary decisions that go into the process. It would be almost useless against a strong adversary in the general case. It might be useful in cases where the potential adversary is limited rigorously in other ways such that the coverage map doesn't have to independently stand against adversarial pressure.

Is there a stronger version of this? With tradeoffs, yes. For example, if you're willing to bite a very large bullet, clamping the input sequence onto the closest observed/tested input sequence would guarantee that the model never runs on unvetted input. There are probably more clever options out there.
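A sketch of both the soft gate and the clamping variant, with a nearest-neighbor lookup swapped in for the trained coverage model described above. It assumes inputs can be represented as fixed-length numeric vectors and that Euclidean distance is meaningful for them, neither of which is obviously true for token sequences. `guarded_run`, `radius`, and the toy `simulator` are hypothetical.

```python
import math


def nearest_tested(x, tested_inputs):
    """Closest input that has actually been bias-tested."""
    return min(tested_inputs, key=lambda t: math.dist(x, t))


def is_covered(x, tested_inputs, radius):
    """Soft gate: is x within `radius` of something that was tested?"""
    return math.dist(x, nearest_tested(x, tested_inputs)) <= radius


def guarded_run(simulator, x, tested_inputs, radius, clamp=False):
    """Refuse uncovered inputs, or (with clamp=True) snap them onto the nearest tested input."""
    if clamp:
        return simulator(nearest_tested(x, tested_inputs))
    if not is_covered(x, tested_inputs, radius):
        return None
    return simulator(x)


tested = [(0.0, 0.0), (1.0, 1.0)]
simulator = lambda v: sum(v)   # stand-in for the real model
print(guarded_run(simulator, (5.0, 5.0), tested, radius=0.5))
print(guarded_run(simulator, (5.0, 5.0), tested, radius=0.5, clamp=True))
```

Clamping guarantees the simulator only ever sees vetted inputs, at the cost of answering a different question than the one asked, which is the "very large bullet".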

Out of distribution simulation

Even without any kind of adversarial activity, simulations operating significantly outside the training set may fall victim to nonsense extrapolations. The good news is that, while these extrapolations will tend to be biased in various ways, there is no reason to expect the bias to be agentic without adversarial interference. The expected outcome would be aimless flailing.

Out of distribution behavior is also more likely with higher dimensionality. There are more degrees of freedom that may remain unconstrained by training. By constraining the simulator to operate at finer scales with smaller rules, the space available for things to flail shrinks. Simpler rules tend to generalize more predictably than complex ones; the laws of physics don't have fifty million exceptions that show up as you increase temperature or density.

What happens if you train a predictor on a background ignorance prior? That is, generate random fuzz covering the entire input space, and map it to uniform output probabilities. Continue doing this while training the network in an otherwise normal way. For many domains, the possible input space is vastly larger than the input space encountered during training, so statistically the ignorance fuzz will be very low density everywhere. The main training set's influence should overpower the fuzz anywhere that there is training data, while the fuzz should gradually push back against the development of high confidence extrapolations beyond the training set.
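The data side of that idea can be sketched as follows, assuming a token-sequence input and a soft-label training target. `fuzz_example`, `mix_batch`, and `fuzz_fraction` are illustrative names, and how much fuzz to mix in is left as an open knob.

```python
import random


def fuzz_example(vocab_size, seq_len, rng):
    """A uniformly random input sequence paired with a uniform next-token target."""
    tokens = [rng.randrange(vocab_size) for _ in range(seq_len)]
    target = [1.0 / vocab_size] * vocab_size
    return tokens, target


def mix_batch(real_batch, vocab_size, seq_len, fuzz_fraction, rng):
    """Append ignorance-prior examples to a batch of real (tokens, target) pairs."""
    n_fuzz = round(len(real_batch) * fuzz_fraction)
    fuzz = [fuzz_example(vocab_size, seq_len, rng) for _ in range(n_fuzz)]
    return real_batch + fuzz


rng = random.Random(0)
real_batch = [([1, 2, 3], [0.0, 0.0, 0.0, 1.0])] * 8   # placeholder real examples
batch = mix_batch(real_batch, vocab_size=4, seq_len=3, fuzz_fraction=0.25, rng=rng)
print(len(batch), batch[-1])
```

Because the fuzz is spread over the whole input space, any one region sees very little of it, so real data should dominate wherever real data exists.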

Would this fuzzing help with situations like the previously described "online RL-via-predictor generates a narrow path out of the training set and randomly becomes persistently deceptive in that region because of a lack of calibration?" Maybe a little bit, unless the agent managed to produce enough of its own training data. It's a soft mitigation that just makes that failure mode less natural.

To what degree do other regularization methods push networks towards an ignorance prior in out of distribution regions?

Embedded agency in simulator self-play

Suppose a simulator is trained through some online RL-via-predictor scheme to play a game. Additional training data is created by playing simulacra against each other.

In order to play well at high levels (assuming that training hasn't collapsed into some degeneracy), a simulated agent must have some model of opponent behavior. You can represent part of this by, for example, having a value function representing the expected value of a game state- but a value function conditioning only on current game state isn't the entirety of what an agent could condition on. In some games, for example, it could be helpful to the agent to nail down what kind of opponent they're facing. The current board state of a game like Go can provide some information like that to an expert, but watching the game from the start provides more.

In other words, for many types of games, a sufficiently strong simulator will presumably simulate agents whose actions are conditioned on modeling opponent behavior, which in this context, is another simulated agent.

In the limit of capability, suppose a game playing agent guesses correctly that they're playing a version of themselves. These agents are not myopic or goal agnostic. Within the context of the simulation (for as long as the simulation persists), they are embedded and must reason about themselves and things like themselves.

Such agents still do not have complete and transparent knowledge of themselves. There is no direct reflective access, but there is clearly indirect reflective access. How much is needed for it to be a problem?

I'm not sure where I'm going with this. It's not surprising that a simulated agent has properties not shared by its simulator, but I guess it's worth pointing out that all the spooky hard stuff is still floating around inside a simulator. If we don't know how to deal with the spooky hard stuff, we have to be able to harden our use of a simulator.

Is goal agnosticism fragile?

Another way of asking this is whether goal agnosticism is at an unstable equilibrium, only barely maintained by the careful balancing of the loss function.

Pessimistically, if simulators happen to be extremely close to agents in optimization space, it's possible that training error could induce resilient agenthood: if you oops into a strong agent model, it could potentially take measures to preserve that state in future training iterations (at least in some training methods). If the agent also has sufficient capability to hide its biases below the threshold of our ability to detect them, we're a wee bit screwed. My intuition is that this is unlikely, but it would be nice to have a test. If simulators and agents are often close to each other, we should probably be able to demonstrate this in less capable models.

A possible experiment: what size of RL nudge is necessary to push a simulator into agentic behavior? In other words, while we continue to train the model using the usual simulation objective, can simultaneous RL training influences introduce resilient bias? If achieving any agentic behavior across a wide range of models and reward functions requires extremely strong nudges, it's less likely that simulators and agents are close to each other.

What architectures preserve or break goal agnosticism?

Most of this post has discussed abstracted simulators and predictors without too much focus on their implementation. GPT-like transformers are one obvious option, but not the only one.

Suppose we put GPT in a black box with the same inputs, outputs, and loss function. Is there another architecture we could swap into that box that changes goal agnosticism? We'll assume the black box is not permitted to carry internal state between executions; it only has the input sequence.

There can obviously be differences in capability across different architectures. For example, you could replace the transformer with a big dumb-simple feedforward network. Would it perform as well per parameter? Probably not. Would it change goal agnosticism? Also probably not, unless it turns out that simulators are so representationally close to agents that error could push you into it. Similar story for weirder things like putting an RL system into the box that bases its reward on the loss function. (As was previously mentioned, this should approximate the direct loss gradient through sampling, just poorly and inefficiently.)

You could pretrain an adversarial agent to stick in the box. This could be considered the extreme case of "the black box found its way to agentlike error" and isn't terribly informative. What we care about here is how training influences stably and reliably guide an AI towards certain properties.

Let's make the problem harder: suppose the black box has a bunch of different modules in it. Some are strong RL-trained models. As the modules aren't connected directly to the output, they aren't trained directly on the loss function as reward. Instead they have some intermediate reward function.

Is it possible for these internal RL-trained models to find their way to a type of agenthood that makes the entirety of the black box no longer goal agnostic? Not just as a byproduct of pathological error, but rather as a natural consequence of the training process?

Or does this training harness surrounding the black box naturally push behavior toward goal agnosticism?

At a minimum, it will seem to during training. To the extent that any internal RL system has an effect, that effect will need to serve the loss. The remaining question is whether the internal agents could develop deceptively. It's easy to imagine how that could occur for an unbounded RL agent with deep access to the world and reflection, but the entire question is about whether the constraints of this black box bound the development of internal RL agents.

I feel like it should be possible to make strong statements here, but I don't yet know how. I lean in the direction of such internal RL agents- given the myopia enforced by the black box- naturally falling into a basin that doesn't try to break the black box's goal agnosticism, but I can't offer a proof. My attempts at coming up with a counterexample usually bottom out at "but what if the internal architecture is so tortured that it's prone to pathological error?" rather than showing a natural path to deception, but this doesn't mean much if I'm not really committed to breaking the idea. It would be simpler if the contents of the black box don't matter!

Effect of changing objectives on goal agnosticism

Do any small or seemingly innocuous perturbations in the use of these models break goal agnosticism?

For example: instead of GPT-like token prediction, what happens if a model is given both a prefix and suffix and is asked to predict the token between them?

While the predictor is now conditioning on the "future" in the dataset[6], the predictor doesn't really care. It's just more information to condition on. With a proper scoring rule, its predictions for the blank it is tasked with filling should be unbiased in the same way as autoregressive predictions.

Expanding this from single token filling to span filling does not appear to break anything either. Once again, the model is given a set of information that it can use to condition predictions. Moving that information around temporally is not intrinsically destructive.
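To make the "it's just more information to condition on" point concrete, here is a small sketch of how the three kinds of training example could be built from the same sequence. The dictionary layout and function names are my own invention for illustration, not any particular library's format.

```python
def single_token_infill(tokens, position):
    """Condition on everything except tokens[position]; predict that token."""
    return {
        "prefix": tokens[:position],
        "suffix": tokens[position + 1:],
        "target": [tokens[position]],
    }

def span_infill(tokens, start, end):
    """Same idea for a span: predict tokens[start:end] given both sides."""
    return {
        "prefix": tokens[:start],
        "suffix": tokens[end:],
        "target": tokens[start:end],
    }

def autoregressive(tokens, position):
    """The usual GPT-like case is the special case with an empty suffix."""
    return {
        "prefix": tokens[:position],
        "suffix": [],
        "target": [tokens[position]],
    }

tokens = ["the", "cat", "sat", "on", "the", "mat"]
print(single_token_infill(tokens, 2))
print(span_infill(tokens, 1, 4))
print(autoregressive(tokens, 2))
```

In all three cases the structure is the same: a set of conditions and a target to predict. Only the placement of the conditions changes.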

The common theme here is conditions and the predictions that follow them. Training objectives which boil down to unbiased bayesian updates seem to share goal agnosticism.

This should hold even when doing somewhat fancy stuff like throwing multiple objectives at the same model. By offering information in the input that the model can use to condition its prediction for the objective (like the paradigm token in UL2), it boils down to the same kind of update process.

If this is correct, goal agnosticism should be a readily accessible property across a wide range of architectures. It's not something that just happens to occur with transformers or specifically with an autoregressive training objective. An RNN trained on sequence prediction should work. An RL model's value network trained on the expected value of a state given a fixed policy should work.

So if that all looks fine, what does break it? Even if the above reasoning is correct, clearly a biased predictor has issues. And models which don't even try to be predictors at any level are potentially problematic.

In an environment with a discrete action space, train a Q network that predicts the expected value of a {state, action} tuple. Is this Q network goal agnostic by the above arguments? It's not outputting probabilities directly. Instead it's outputting a scalar representing the integral of probability(x) * value(x) over values of x in a distribution of a bunch of future potential events. It is still subject to calibration, though. A reasonably chosen loss should allow the Q network to converge to an unbiased estimate of the expected value. It seems like the arguments should hold. If true, that implies a slightly more general scope for goal agnosticism than just probability updates. Or maybe it's better to think of Q networks (and similar models) as instead largely being probability updates, just baking many updates into one and adding some scaling factors that don't break the underlying behavior.
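A toy illustration of the calibration claim, under heavy assumptions: a single fixed {state, action} pair, a made-up return distribution, and a scalar "network". Minimizing squared error against sampled returns pulls the estimate toward the probability-weighted average of the outcomes.

```python
import random

def sample_return(rng):
    """Hypothetical environment: one fixed (state, action) pair yields
    +10 with probability 0.2, +1 with probability 0.5, and -2 otherwise."""
    u = rng.random()
    if u < 0.2:
        return 10.0
    if u < 0.7:
        return 1.0
    return -2.0

# Sum of probability(x) * value(x) over the three outcomes.
TRUE_EXPECTED_VALUE = 0.2 * 10.0 + 0.5 * 1.0 + 0.3 * -2.0

def fit_q(num_samples, rng):
    """Gradient descent on 0.5 * (q - g)^2 over sampled returns g.

    With step size 1/n this reduces to the running mean of the samples.
    """
    q = 0.0
    for n in range(1, num_samples + 1):
        g = sample_return(rng)
        # d/dq of 0.5 * (q - g)^2 is (q - g).
        q -= (1.0 / n) * (q - g)
    return q

q = fit_q(200_000, random.Random(0))
print(q, TRUE_EXPECTED_VALUE)
```

The scalar output never represents a probability directly, but the thing it converges to is still built entirely out of probabilities and fixed scaling factors.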

Taking the argmax of Q-output expected value across the action space could be used as a policy, and that policy might be dangerous, but that doesn't break anything said before- simulators can definitely learn to simulate dangerous agents in the limit.

But now suppose we want to train a policy: a model which, given a state, predicts the action which maximizes expected value.

You could interpret that to mean the policy outputs the expected value of all actions, and then you argmax over the outputs, but that's just refactoring the Q network and table. You could interpret it to mean the "probability that a given action is the best, according to the Q network," and then argmax or otherwise sample from the probability distribution, but that's still not changing much. By virtue of being pure predictors with a source of calibration, the arguments about goal agnosticism would seem to hold.

But suppose you're in a continuous action space and you can't output something for every possible action. In this context, a policy will often be trained to approximate the argmax directly (sometimes with various tweaks for exploration and such). This is different. There is no probability associated with "this is the optimal action;" if you want to make that action less likely, you have to suppress the weights that lead to it. And by making that incremental nudge, the agent is going to take a small step towards doing something else, but it's moving through a space of other actions, not of probabilities. It can't be calibrated.

I suspect that kind of RL is doomed to both frequent training failure and opaque sorts of agentic failure (partly) because of that inability to calibrate.

In contrast, RL-via-predictor models have the ability to calibrate. And as stated earlier, to the extent that RL-via-predictor has similar failure modes, they will tend to arise in contexts where there is tainted or insufficient data to calibrate.

If this is correct, then even small changes to RL to bring it closer to RL-via-predictor may help achieve goal agnosticism. For example, sticking to continuous action space, would a policy outputting an approximate expected value distribution function from which actions are importance sampled lead to sufficient opportunities for calibration such that goal agnosticism is maintained?
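One possible reading of that last question, sketched as sampling-importance-resampling with a uniform proposal. This is a guess at a shape the idea could take, not a tested method: `value_estimate` is a hypothetical stand-in for a calibrated predictor, and the temperature and candidate count are arbitrary.

```python
import math
import random

def value_estimate(action):
    """Hypothetical stand-in for a calibrated predictor of expected value
    given an action; here a smooth bump peaked at action = 0.6."""
    return math.exp(-((action - 0.6) ** 2) / 0.02)

def sample_action(rng, num_candidates=64, temperature=0.1, low=-1.0, high=1.0):
    """Draw candidates from a uniform proposal, weight each one by
    exp(value / temperature), and resample one in proportion to its weight."""
    candidates = [rng.uniform(low, high) for _ in range(num_candidates)]
    weights = [math.exp(value_estimate(a) / temperature) for a in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

rng = random.Random(0)
actions = [sample_action(rng) for _ in range(2000)]
mean_action = sum(actions) / len(actions)
print(mean_action)
```

The relevant difference from a direct argmax policy is that the learned component is a predictor whose outputs can be checked against observed values, while the action selection is a fixed sampling procedure layered on top.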

Avoiding neuralese

Neural networks don't work with human language internally, and the encoded formats they do use are not trivial to interpret. You'll need some sort of decoder, and even that only works if the source of the data isn't adversarial.

Suppose you have an LLM. It struggles with some questions, so you train another network to provide hints when the LLM is struggling. The hint network isn't an LLM directly; it's just an RL-trained agent that spits out tokens to feed to the LLM and is rewarded if they help.

It would be fairly surprising if that hint network output anything interpretable as 'hints' by humans instead of effectively hijacking the LLM's quirks to push it towards what the hint network knows to be correct answers. The hint network may learn to understand human language as a part of its process, but when it speaks, it speaks a dialect of neuralese to be understood by (or more accurately, to exploit) the LLM.

We can't avoid neuralese entirely. At some point, a model needs to work with internal representations. My neurons don't work directly with English, either. It would be nice to have frequent checkpoints of non-neuralese so that we can keep an eye on things, though.

Suppose you wanted to modify the above hinting to avoid neuralese. The LLM could have a parallel input stream for hints. The input stream could contain a token that would condition the output for pure prediction tasks versus hinting tasks, with hinting tasks trained with something like online decision transformers. Would this avoid neuralese?

On one hand, the weights are shared and it wouldn't be surprising if the tokens output by the hinting process tended to be human language by default. Over time, though, the hinting task may drift more and more towards neuralese as the LLM learns to exploit itself. This wouldn't necessarily harm the regular prediction task because the input stream contains the task token for it to condition on.

Something similar arises with trying to push RNN memory to be 'natively' in human language. It would effectively require a language encoder/decoder for the RNN to use the memory. Even if you started with a human language encoder/decoder and an RNN trained to use it properly, continuing to train naively would push the model further and further away from human language because it's almost certainly not the most natural format. Most approaches rapidly collapse back down to neuralese.

Modular optimization

Modularizing processes typically makes it easier to reason about what an individual piece of the whole is doing.

For example, optimizers could be applied to individual cognitive modules in a model that are then connected together. Those individual optimization processes could not directly influence each other- a "risk of death" module in a video game won't need to take into account anything about the optimization process applied to a "player is aimhacking" module. In some simple cases, the glue combining these modules could be human-written logic.

For more complex systems, that glue logic might be too difficult to write and more machine learning is the natural choice. If the modules are embedded within a larger network that can be optimized as a single whole, the door is opened for the modules to be warped by the outer goals. It's possible that the local module training influences would overpower the outer model influences, but in the limit, it's pretty easy for the outer model to end up being unbound by any constraints applied to the inner modules.

Even if the outer optimizer is only permitted to adjust the weights of the connecting glue, if the modules are still treated as differentiable black boxes, the resulting gradients would likely give the outer model enough information to optimize for arbitrary goals. If the modules aren't treated as differentiable by the outer optimizer, the process is just less efficient. A genetic optimizer over modules could manage the same thing in principle.

One way to prevent this type of exploitation is to ensure that the outer optimization does not wrap the modules. You can have a set of modules which are independently optimized and which provide input to an orchestrator model without directly risking the arbitrary manipulation of the module inputs. This still requires the orchestrator to be safe for the entire system to be safe. Any system is at least as dangerous as its most dangerous part.
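A toy sketch of the "outer optimization does not wrap the modules" arrangement. The scalar linear modules and the targets are made up for illustration; the only point is the structure, where each module has its own local objective and the orchestrator's training loop can only touch the orchestrator's own weights.

```python
class Module:
    """A tiny independently trained model: y = w * x."""
    def __init__(self):
        self.w = 0.0

    def __call__(self, x):
        return self.w * x

    def train(self, data, lr=0.1, epochs=200):
        # Local objective only: squared error against this module's own targets.
        for _ in range(epochs):
            for x, y in data:
                self.w -= lr * (self(x) - y) * x

class Orchestrator:
    """Combines module outputs; its optimizer only updates its own weights."""
    def __init__(self, modules):
        self.modules = modules
        self.u = [0.0] * len(modules)

    def __call__(self, x):
        return sum(u * m(x) for u, m in zip(self.u, self.modules))

    def train(self, data, lr=0.05, epochs=500):
        for _ in range(epochs):
            for x, y in data:
                # Module outputs are plain inputs here: no update reaches m.w.
                features = [m(x) for m in self.modules]
                error = sum(u * f for u, f in zip(self.u, features)) - y
                self.u = [u - lr * error * f for u, f in zip(self.u, features)]

xs = [0.1 * i for i in range(1, 11)]
doubler, tripler = Module(), Module()
doubler.train([(x, 2.0 * x) for x in xs])
tripler.train([(x, 3.0 * x) for x in xs])
frozen = (doubler.w, tripler.w)

orchestrator = Orchestrator([doubler, tripler])
orchestrator.train([(x, 5.0 * x) for x in xs])
print(orchestrator(1.0), (doubler.w, tripler.w) == frozen)
```

Nothing about this makes the orchestrator itself safe; it only keeps the outer objective from reshaping what the modules compute.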

Effect of goal agnosticism on interpretability

Goal agnostic models do not directly fight you. The agents which are implied by stringing those tokens together may try to fight you, depending on what they're conditioned on, but the model does not.

Unless simulated agents can manage some form of extremely strong gradient hacking, the model's weights will not adversarially resist analysis. The model itself will not try to hide things because trying to hide things would imply a bias.

This is an extremely nice property to have across the board. Creating a reporter to analyze the innards of a goal agnostic model seems at least possible in a way that extracting information from a strong adversarial model doesn't.

Further, while a simulator can simulate bad agents, if the level of simulation is low enough, malicious agents will struggle to hide. There is some difficulty in choosing the appropriate level: in the extreme, the molecular interactions that compose a serial murderer are not themselves murderous, but it's very hard to understand what a serial murderer is going to do based on a chemistry-level report. But the fact that there is any level that the simulated agent can't realistically hide within and manipulate is nice!

Effect of constraints on authenticity

If a model does not have the internal power to express a computation, then successfully completing that computation requires at least partly authentic intermediate reasoning. Otherwise, it just can't do the computation at all.

If a model is agentic, it may resort to steganography such that the intermediate reasoning is useful to it, but actively misleading to human interpreters.

A goal agnostic simulator with constrained execution steps is forced to output authentic reasoning to accomplish anything and the outputs will individually not be deceptive, so long as the level of simulation is at or lower than the level necessary to generate deceptive thoughts. In other words, deception is a process, and if the process is run by a non-deceptive machine and the process is transparent, then deception can be detected.

The laws of thought

Goal agnostic simulators that just output unbiased, calibrated estimates act like the laws of physics with respect to whatever it is they're simulating.

We're currently stretching simulators to work on very high levels- each step is large and requires a great deal of processing to occur in a black box. Even though default GPT-like architectures do appear to be goal agnostic, the size of the black box is still challenging for interpretability.

I suspect we'd be better off doing something else. To roughly summarize everything into some bullets:

- Stop asking models to do a huge amount of processing in one opaque step; incrementalize and shrink the black box.

- Pick a level of simulation that is too fine to conceal a simulated agent's deceptive thought processes. Deception is a process; we must be able to see the steps of the process.

- Do not carry internal hidden state between executions; force (at least partly) authentic reasoning by ensuring all state that could be used to simulate the next step is external (and ideally, in a natively human interpretable format like language).

- Maintain goal agnosticism so the models do not try to deceive or use steganography to encode additional state.

Something a bit closer to the actual laws of physics for a simulacrum- its laws of thought.

This is easier to visualize in the context of something like manipulating equations. Each step must preserve equality, and which change to make depends on goals. With current large language models, without fine tuning or prompt engineering, asking it to solve an equation will often result in a one-step "solution" that may or may not have anything to do with the actual solution.

In this context, a model working at the level of the laws of thought could instead output each manipulation in sequence with supporting reasoning. It would be both more likely to find its way to a correct answer and would be more interpretable.
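A sketch of the loop structure I have in mind, using linear equations as the toy domain. The hard-coded `next_step` function is a stand-in for a learned step model, and the tuple encoding of `a*x + b = c` is an assumption made for illustration. The parts that matter are that the step function is stateless, all state lives in an external transcript, and an outside checker verifies that each manipulation preserves the solution.

```python
from fractions import Fraction

def next_step(state):
    """Stateless step: maps the externally stored equation a*x + b = c
    to the next equation plus a human-readable justification."""
    a, b, c = state
    if b != 0:
        return (a, Fraction(0), c - b), f"subtract {b} from both sides"
    if a != 1:
        return (Fraction(1), Fraction(0), c / a), f"divide both sides by {a}"
    return None, "solved"

def solution(state):
    a, b, c = state
    return (c - b) / a

def solve(a, b, c):
    """Run steps until solved; assumes a is nonzero."""
    state = (Fraction(a), Fraction(b), Fraction(c))
    transcript = [(state, "start")]
    while True:
        new_state, reason = next_step(state)
        if new_state is None:
            return state[2], transcript
        # External check: every manipulation must preserve the solution.
        assert solution(new_state) == solution(state), reason
        transcript.append((new_state, reason))
        state = new_state

x, transcript = solve(3, 4, 19)
for (a, b, c), reason in transcript:
    print(f"{a}*x + {b} = {c}    [{reason}]")
```

Since the step function carries nothing between calls, the transcript is the complete record of the computation rather than a summary written after the fact.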

In other words, a constrained and goal agnostic model that outputs directly interpretable intermediate state (like human language) is interpretable by construction. It can't solve problems without explaining itself, because explaining itself isn't some extra task it does for the benefit of the humans; explaining itself to itself is how it does anything at all. Authenticity is inherent in the process.

How is this different from incrementalizing prompting/training strategies with existing LLMs?

There are indeed some similarities, and they're aimed at a similar goal. I view these sorts of strategies as predecessors to more explicit approaches that change the outer loop.

Prompting strategies or augmented training sets that encourage incremental reasoning help limit per-step computation, but the model is still torn between following the structure of the prompt and actually solving the problem. Further, in these types of approaches, the network can only attend to reasoning that fits within a single context window. Both of these things tend to require more internal modeling capacity, and, without strong interpretability tools, that internal modeling is opaque.

I would also like to maintain goal agnosticism, which means RL is a no-go unless via a predictor or some other method that avoids introducing agentlike bias.

Okay, but what specifically would be different? How would it work?

Dodge!

I've had some ideas here. One of them turned out obviously bad and, to the extent that it did anything different, it would have been almost exclusively to the benefit of capability. The others are somewhat more promising in concept but currently still have obvious neuralese attractor states that make them fragile (or at least less interpretable by default). I'd also like to sit with the ideas a bit longer to make sure that they aren't also secretly-obviously-bad in other ways.

The core idea is trying to make explicit the thing that prompt engineering is poking at, architecturally, without breaking valuable properties like goal agnosticism. It usually requires transforming the problem at least slightly. For example, while the model may be a token predictor, the way the model is used is not limited to working within the context of the original sequence. Things like scratchpads, universal transformers, and ACT for RNNs are floating around nearby in concept space, though they aren't quite aimed in the same direction.

Squeezing out knowledge

Ideally, the model represents process, not a lookup table. Encoding most of human knowledge in its weights might encourage the model to fill in unconstrained degrees of freedom with memorized information.[7] While sufficient training data and time to converge to low prediction error may mitigate this, it's possible that allowing a model to rely on memorization could get it trapped in a poor local minimum. Forcing the process to attend to the context as provided seems more likely to land in a process-focused basin.
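The footnoted check (feed the model factually incorrect context and see which version its answer follows) can be sketched as a tiny harness. The two "models" below are toy stand-ins I made up to show both outcomes; a real test would call an actual model in place of the hypothetical `model` callable.

```python
def relies_on_context(model, question, true_context, false_context):
    """Return True if the answer changes when the supplied context is altered.

    model: hypothetical callable (context, question) -> answer string.
    """
    return model(true_context, question) != model(false_context, question)

# Two toy stand-ins, not real models.
MEMORIZED = {"capital of France": "Paris"}

def memorizing_model(context, question):
    return MEMORIZED[question]   # ignores the context entirely

def context_model(context, question):
    return context[question]     # reads the answer from the context

question = "capital of France"
true_context = {question: "Paris"}
false_context = {question: "Lyon"}

print(relies_on_context(memorizing_model, question, true_context, false_context))
print(relies_on_context(context_model, question, true_context, false_context))
```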

If the reasoning that the process is simulating over multiple steps needs information, ideally the simulated process would include an explicit step to go look that information up in an auditable, whitelisted way.
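A minimal sketch of what an explicit, auditable lookup step could look like. The `LOOKUP source key` syntax, the whitelist contents, and the log format are all hypothetical.

```python
WHITELIST = {
    "periodic_table": {"Fe": "iron", "Au": "gold"},
}
audit_log = []

def lookup(source, key):
    """Resolve an explicit lookup step against an approved source, logging it."""
    if source not in WHITELIST:
        audit_log.append(("denied", source, key))
        raise PermissionError(f"source {source!r} is not whitelisted")
    audit_log.append(("allowed", source, key))
    return WHITELIST[source].get(key)

def run_step(step):
    """Steps are plain text; 'LOOKUP source key' is a made-up syntax."""
    parts = step.split()
    if len(parts) == 3 and parts[0] == "LOOKUP":
        return lookup(parts[1], parts[2])
    return None  # ordinary reasoning steps need no external access

print(run_step("LOOKUP periodic_table Au"))
print(audit_log)
```

The knowledge lives outside the weights, and every access to it leaves a record that a human (or another process) can inspect.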

How far can you push this?

What is the minimal step in the simulation of thought?

If all intermediate state in the reasoning process is human language, there's a pretty high lower bound on model complexity. It needs to be able to encode and decode language. A GPT-like model with only 10M parameters probably isn't going to be able to contribute interesting incremental reasoning in English no matter how you apply it. The steps of "pure reason" do not map sequentially onto English words, and sometimes knowing the next token to write requires mentally simulating forward several steps. The demands of the language alone are never too extreme since human languages need to be easily handled by humans, but a 10M parameter model really can't handle much in one step. With this kind of approach, I'm guessing the stepwise models would still have billions of parameters.
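For a rough sense of scale, a commonly used approximation for the non-embedding parameter count of a GPT-style decoder is 12 * n_layer * d_model^2. The specific configuration and vocabulary size below are assumptions chosen to land near 10M, not a claim about any real model.

```python
def approx_block_params(n_layer, d_model):
    """Attention (4 * d^2) plus a 4x-wide MLP (8 * d^2) per layer.

    Ignores biases, layer norms, and embeddings.
    """
    return 12 * n_layer * d_model ** 2

def embedding_params(vocab_size, d_model):
    return vocab_size * d_model

# Hypothetical small configuration.
small = approx_block_params(n_layer=6, d_model=384)
vocab = embedding_params(vocab_size=50_000, d_model=384)
print(small, vocab)
```

Under these assumptions the token embedding table for a natural-language-sized vocabulary is already larger than the entire 10M budget, which is part of why a restricted token set looks like a more natural fit for very small stepwise models.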

Can you push further than that? I suspect so. If the model is operating in a restricted context- like reasoning about discrete operations in an algorithm, performing multi-step arithmetic, that sort of thing- the set of tokens could be small and constitute simple grammars with concise stepwise reasoning representations that fit sequence modeling more directly.

It may be worth pushing towards a hierarchical representation. It's probably not a great idea to ever allow too much reasoning to go on in the dark without some interpretability-by-construction checkpoints, but so long as every piece of the model is goal agnostic, you can get away with having different levels of 'step'. The top level is human language (or whatever directly human interpretable representation fits best). One level down could resort to neuralese- it's not adversarial neuralese, by previous arguments, so you could interpret it in principle. Ideally, the fact that these fine-grained executions would be working on very small subproblems would make them easier to analyze if necessary. By allowing the finest scales of the network to use the far more compressed intermediate neuralese, the networks involved could be made much smaller and simpler.

Coming at this from the other direction, how small do these steps need to be in order to help with safety? This is much harder to answer. We're not dealing with a clear phase change that occurs at any particular point. Instead, the question is: what size maximizes the chance that human interpreters will catch when reasoning starts going off the rails?

The fact that the reasoning steps are not themselves adversarial (because the model isn't) helps, but it's still critical that users can notice when they've accidentally asked the model to simulate a dangerous agent.

Working at the level of molecular dynamics isn't a good fit. A model timestep that covers hours or days of agent-subjective time is also not great. It's worth noting that messing this up a bit is not necessarily an automatic loss thanks to the model not being directly adversarial- that would require also using the simulated agent outputs in an extremely dangerous and dumb way, or asking the simulator to simulate an agent that is willing and able to break out of the box by hacking the human interpreters- but it's still best to avoid.

I'm not a big fan of the arbitrary 'softness' of this choice of scope. Is there something we can use to inform the choice? Are there constraints out there that would imply it needs to take a particular form, or obey certain bounds?

A note on fine tuning LLMs with RLHF

When a prediction-trained LLM goes through traditional RL fine tuning from reward derived from human feedback, the LLM moves away from being an unbiased simulator and potentially becomes more of an agent. The human goal of doing so is to make the LLM produce "friendlier" outputs, but any introduction of goal-oriented bias feels somewhat spooky if we don't have the ability to nail down exactly what those goals are.

If RLHF is being applied in a sufficiently complex problem that we can't verify the new agent's true goals, and if we live in the inconvenient world where slight goal misgeneralization can be catastrophic, RLHF could increase risk compared to the goal agnostic baseline simulator. It may tend to simulate behavior and agents that more frequently satisfy human evaluations, but the model may now have goals of its own which may involve deception in a way that a goal agnostic model wouldn't.

This appears to be less of a concern when using RL-via-predictors (RLHF-via-predictors?). That is, instead of fine tuning weights to introduce "friendly" bias, provide the desired level of friendliness in the input sequence and let the simulator condition on it. This probably doesn't result in better output on average, but it seems like it should maintain more model level goal agnosticism.
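A toy version of the conditioning idea, with a counts-based predictor standing in for the LLM. The rating tokens and response labels are invented for illustration. The predictor only ever learns observed frequencies; the "friendliness" is chosen at the input rather than baked into the weights.

```python
from collections import Counter, defaultdict

class ConditionalPredictor:
    """Counts-based estimate of P(response | condition token)."""
    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, examples):
        for condition, response in examples:
            self.counts[condition][response] += 1

    def predict(self, condition):
        total = sum(self.counts[condition].values())
        return {r: n / total for r, n in self.counts[condition].items()}

# Hypothetical logged data: (human rating token, response style).
examples = (
    [("<rating:high>", "polite")] * 8 + [("<rating:high>", "curt")] * 2 +
    [("<rating:low>", "polite")] * 3 + [("<rating:low>", "curt")] * 7
)
model = ConditionalPredictor()
model.train(examples)

print(model.predict("<rating:high>"))
print(model.predict("<rating:low>"))
```

The same model still gives calibrated predictions when conditioned on low ratings, which is the sense in which nothing about its objective has been bent toward a goal.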

Possible experiment: do RLHF-via-predictor models differ meaningfully from raw RL fine tuning in tendencies like mode collapse? Does the process learned through decision transformers closely match direct weight fine tuning RL when strongly conditioned to produce RLHF-rewarded output?

An interpretability benchmark

For humans, Go involves the development of a specialized intuition over thousands of iterations. From what I gather, it can be difficult for a very strong player to explain to a much weaker player exactly why they made a choice because it involves so much accumulated and highly illegible expertise.

AlphaGo, AlphaZero, and MuZero are much better at Go than me. Can an interpretable version of a Go-playing AI with similar capability explain (authentically, correctly) to me why they made a choice? Or, relaxing the difficulty, can they explain to a human grandmaster?

- If the interpretable version can't achieve the same level of capability as MuZero and friends, then that type of interpretability likely carries a tax too large to allow it to scale.

- If the interpretable version can't explain itself (authentically, correctly) to the grandmaster human, then that type of interpretability definitely isn't yet strong enough to apply to potentially dangerous problems.

I suspect many types of extreme capability will boil down to something that, to a human, looks like illegible intuition. I would feel a lot more comfortable about interpretability if we demonstrated success on this type of benchmark.

Failing that, it seems necessary to stick to problem spaces where verifying reasoning does not require explaining ineffabilities. An AI that uses leaps of insight beyond your ken to generate discrete steps in a programmatically verifiable proof is at least more acceptable than an AI that uses such leaps to design a really cool looking widget just manufacture it don't worry it'll be great.

Not yet enough for hard mode

Suppose this line of research worked out and you've a goal agnostic simulator-oracle with transparent reasoning that isn't going to directly try to deceive you.

The model itself is still not aligned with human values; shaping its output for the benefit of humans in this context would correspond to an agentic bias that it lacks. You could condition the oracle's predictions on being vaguely pro-human, but there's no hard guarantee (in the absence of solving ~all of alignment) that it won't simulate a dangerous agent if you use it wrong and fail to detect that danger in its reasoning. The only reason this isn't an automatic loss is that, in this hypothetical, the reasoning is transparent and authentic thanks to the model level goal agnosticism.

You may be able to thread the needle with such an oracle if reality is on hard mode. It would probably end up looking like the 'pivotal act' framing.

I view this type of approach as most helpful for realities where the difficulty level isn't quite so high. High enough that doing nothing still yields ultrabadness, but low enough that some level of muddling still permits survival. It's still worth having contingencies for slightly easier realities: reality being easier suggests that solutions take less time to find, and that gives you more survival points when those worlds still have short timelines.

In the pathological case, if every bit of research focused on the hardest difficulty despite us living in an easier reality, researchers could end up missing an easier option that would still work while also not finding a solution to the harder problem quickly enough to survive. I think this outcome is extremely unlikely because more people seem to be working on things relevant to easier realities, but it's still worth keeping in mind.

Risk

Some of this is uncomfortably close to pure capability research. I've already abandoned one research path because it was clearly on the wrong side of the balance. I'm concerned that, while there may be some bits here that (if implemented well) would help approach a kind of corrigibility, they could be trivially repurposed for pure capability.

For example, any architecture that uses restricted networks and still manages to achieve competitive capability at higher efficiency is a direct capability gain. Especially if the parts that make the architecture more interpretable or corrigibility-compatible come with a computational or capability tax and are easily removed.

I'm not convinced I'm going to be able to find something concrete I would be okay with publishing in this space.

Conclusion

It feels a bit odd to write a conclusion for a bunch of semi-connected notes, but I'm gonna do it anyway.

Despite the last couple of sections, and despite the number of disclaimers sprinkled through this post, working through the implications of goal agnosticism and the rest has actually nudged my P(doom) ever so slightly down.

If you had asked me 10 years ago if the industry would be mostly based on architectures with a property as promising as goal agnosticism, I would have said something like "that seems pretty optimistic."

The industry mostly stumbled its way to high levels of capability, and that forced me to shrink my timelines. Likewise, watching the industry stumble its way to simulators forces me to update at least a little toward us not being on the hardest hard mode.

[1] I'll be using the terms 'simulators' and 'predictors' mostly interchangeably. I will tend to use 'predictor' in the context of a simulator being trained directly on a prediction task.

[2] Unfortunately, users are often bad at things.

[3] I suppose it could completely fail to train because the sampled gradient is uninformative.

[4] Or just assume the worst in the first place!

[5] Do such predictions actually achieve low loss? Wiggly eyebrows?

[6] Earlier, I mentioned that incentivizing "modifying current predictions to make future predictions more correct" is potentially risky. This objective isn't doing that, though. It's given the future, and its task is to produce the output which conditions on that information.

[7] DeepMind's faithful reasoning paper tries to tackle this too. Whether the model is making use of the context or memorized data is often testable by checking how it behaves when provided factually incorrect context. If the reasoning it produces relies on the memorized correct version instead of the provided incorrect version, then you know the model's ignoring the input.