- Training on high quality human examples: One basic starting point is to initialize RL by training the AI to imitate on-distribution high quality human examples such that it has to explore into strategies which are at least as good as these human trajectories. We could also try to construct such examples adversarially (e.g., train AIs to point out errors we adversarially insert).

- More granular feedback and trajectory edits: In some cases, constructing good enough imitation trajectories may be challenging, for instance, it seems very hard for humans to construct a long reasoning trace for a reasoning model (though it is unclear how good the human examples would need to be to force exploration).

Overall, the quality of these proposals basically depends on their ability to explore well. Exploring well obviously doesn't just mean injecting more randomness, since a high-entropy approach will take a very long time to find useful strategies in complex domains. Training on high-quality human examples avoids this problem by ensuring that we at least explore strategies often employed by human experts, which are going to be some of the better strategies.

However, if the AI is being trained to (even mildly) superhuman levels of capability (on a given subject matter), then clearly human imitation does not do much good anymore; the AI is free to sandbag at mildly superhuman levels of capability (within the given subject matter). Training on high-quality human examples might even cause or exacerbate this (basically, giving the AI the idea that it could imitate humans, which can then be a good sandbagging strategy).

So, basically, training to a given capability level (while robustly avoiding sandbagging) seems to require exploration ability near that specific capability level.

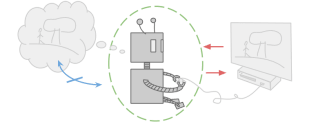

The only way to get this that I can think of is to use an RL method which guarantees against mode-collapse, i.e., a technique which keeps a broad distribution over the strategies which achieve good reward, rather than collapsing down to one particular way of getting good reward as training proceeds. This guarantees that the only reason a specific strategy is not explored is that its expected value is low (according to all plausible hypotheses).
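To make the target property concrete, here is a toy sketch (not an RL algorithm, and not a claim about how any real method works). It contrasts a reward-weighted (Boltzmann) distribution over strategies with a greedy one. The reward values, the temperature, and the function names are all assumptions chosen for illustration.

```python
import math

def boltzmann_policy(rewards, temperature=1.0):
    """Probability of each strategy proportional to exp(reward / temperature)."""
    top = max(rewards)  # subtract the max for numerical stability
    weights = [math.exp((r - top) / temperature) for r in rewards]
    total = sum(weights)
    return [w / total for w in weights]

def greedy_policy(rewards):
    """Mode-collapsed policy: all mass on the first best strategy found."""
    best = rewards.index(max(rewards))
    return [1.0 if i == best else 0.0 for i in range(len(rewards))]

def entropy(probs):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical expected rewards: three equally good strategies and one bad one.
rewards = [1.0, 1.0, 1.0, 0.0]

broad = boltzmann_policy(rewards, temperature=0.1)
collapsed = greedy_policy(rewards)

print("broad:    ", [round(p, 4) for p in broad])
print("collapsed:", collapsed)
print("entropy (broad, collapsed):", entropy(broad), entropy(collapsed))
```

In the broad distribution, the only strategy that goes essentially unexplored is the one with low reward; the three good strategies keep equal mass. The greedy distribution drops two good strategies for no reward-related reason, which is the failure mode described above.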

My guess is that we want to capture those differences with the time&date meta-data instead (and to some extent, location and other metadata). That way, we can easily query what you-in-particular would say at other periods in your life (such as the future). However, I agree that this is at least not obvious.

Maybe a better way to do it would be to explicitly take both approaches, so that there's an abstract-you vector which then gets mapped into a particular-you author space via combination with your age (i.e., with date&time). This attempts to explicitly capture the way you change over time (we can watch your vector move through the particular-author space), while still allowing us to query what you would say at times where we don't have evidence in the form of writing from you.

Ideally, imagining the most sophisticated version of the setup, the model would be able to make date&time attributions very fine-grained, guessing when specific words were written & constructing a guessed history of revisions for a document. This complicates things yet further.

For me, this is significantly different from the position I understood you to be taking. My push-back was essentially the same as

"has there been, across the world and throughout the years, a nonzero number of scientific insights generated by LLMs?" (obviously yes),

& I created the question to see if we could substantiate the "yes" here with evidence.

It makes somewhat more sense to me for your timeline crux to be "can we do this reliably" as opposed to "has this literally ever happened" -- but the claim in your post was quite explicit about the "this has literally never happened" version. I took your position to be that this-literally-ever-happening would be significant evidence towards it happening more reliably soon, on your model of what's going on with LLMs, since (I took it) your current model strongly predicts that it has literally never happened.

This strong position even makes some sense to me; it isn't totally obvious whether it has literally ever happened. The chemistry story I referenced seemed surprising to me when I heard about it, even considering selection effects on what stories would get passed around.

My idea is very similar to paragraph vectors: the vectors are trained to be useful labels for predicting the tokens.

To differentiate author-vectors from other types of metadata, the author vectors should be additionally trained to predict author labels, with a heavily-reinforced constraint that the author vectors are identical for documents which have the same author. There's also the author-vector-to-text-author-attribution network, which should be pre-trained to have a good "prior" over author-names (so we're not getting a bunch of nonsense strings out). During training, the text author-names are being estimated alongside the vectors (where author labels are not available), so that we can penalize different author-vectors which map to the same name. (Some careful thinking should be done about how to handle people with the actual same name; perhaps some system of longer author IDs?)

Other meta-data would be handled similarly.
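As a rough sketch of how the author-vector constraints might enter a training objective: the token-prediction loss is treated as a given number, and both constraints are modeled as squared-distance penalties between vectors that share a name. The function names, the weights, and the choice of squared distance are all assumptions for illustration, not part of the proposal itself.

```python
from itertools import combinations

def squared_distance(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def shared_name_penalty(vectors, names):
    """Sum of squared distances over document pairs with the same (non-None) name."""
    total = 0.0
    for i, j in combinations(range(len(vectors)), 2):
        if names[i] is not None and names[i] == names[j]:
            total += squared_distance(vectors[i], vectors[j])
    return total

def total_loss(token_loss, vectors, true_authors, estimated_names,
               label_weight=100.0, estimate_weight=1.0):
    """Combine the (given) token loss with the two author-vector penalties.

    label_weight is large to mimic the heavily-reinforced constraint that
    documents with the same known author share a vector. estimate_weight
    applies to names estimated during training where no label is available.
    """
    # Use the true label where it exists, otherwise fall back to the estimate.
    merged = [t if t is not None else e
              for t, e in zip(true_authors, estimated_names)]
    return (token_loss
            + label_weight * shared_name_penalty(vectors, true_authors)
            + estimate_weight * shared_name_penalty(vectors, merged))

# Hypothetical data: two labeled documents by "alice", one unlabeled document
# whose estimated author name is also "alice".
vectors = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]]
true_authors = ["alice", "alice", None]
estimated_names = ["alice", "alice", "alice"]

print(total_loss(2.0, vectors, true_authors, estimated_names))
```

This version pulls vectors together whenever they map to the same name, which is one reading of "penalize different author-vectors which map to the same name". It does nothing about distinct people who share a name, which would need something like the longer author IDs mentioned above.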

Yeah, this is effectively a follow-up to my recent post on anti-slop interventions, detailing more of what I had in mind. So, the dual-use idea is very much what I had in mind.

Btw tbc, sth that I think slightly speeds up AI capability but is good to publish is e.g. producing rationality content for helping humans think more effectively (and AIs might be able to adopt the techniques as well). Creating a language for rationalists to reason in more Bayesian ways would probably also be good to publish.

Yeah, basically everything I'm saying is an extension of this (but obviously, I'm extending it much further than you are). We don't exactly care whether the increased rationality is in humans or AI, when the two are interacting a lot. (That is, so long as we're assuming scheming is not the failure mode to worry about in the shorter-term.) So, improved rationality for AIs seems similarly good. The claim I'm considering is that even improving rationality of AIs by a lot could be good, if we could do it.

An obvious caveat here is that the intervention should not dramatically increase the probability of AI scheming!

Belief propagation seems too much of a core of AI capability to me. I'd rather place my hope on GPT7 not being all that good yet at accelerating AI research and us having significantly more time.

This just seems doomed to me. The training runs will be even more expensive, the difficulty of doing anything significant as an outsider ever-higher. If the eventual plan is to get big labs to listen to your research, then isn't it better to start early? (If you have anything significant to say, of course.)

Yeah, what I really had in mind with "avoiding mode collapse" was something more complex, but it seems tricky to spell out precisely.

It's an interesting point, but where does the "extremely" come from? Seems like if it thinks there's a 5% chance humans explored X, but (if not, then) exploring X would force it to give up its current values, it could be a very worthwhile gamble. Maybe I'm unclear on the rules of the game as you're imagining them.