All of Tom Davidson's Comments + Replies

I'll paste my own estimate for this param in a different reply.

But here are the places I most differ from you:

- Bigger adjustment for 'smarter AI'. You've argue in your appendix that, only including 'more efficient' and 'faster' AI, you think the software-only singularity goes through. I think including 'smarter' AI makes a big difference. This evidence suggests that doubling training FLOP doubles output-per-FLOP 1-2 times. In addition, algorithmic improvements will improve runtime efficiency. So overall I think a doubling of algorithms yields ~tw

It seems like you think CICERO and Sydney are bigger updates than I do. Yes, there's a continuum of cases of catching deception where it's reasonable for the ML community to update on the plausibility of AI takeover. Yes, it's important that the ML community updates before AI systems pose significant risk, and there's a chance that they won't do so. But I don't see the lack of strong update towards p(doom) from CICERO as good evidence that the ML community won't update if we get evidence of systematic scheming (including trying to break out of the lab when...

I think people did point out that CICERO lies, and that was a useful update about how shallow attempts to prevent AI deception can fail. I think it could be referenced, and has been referenced, in relevant discussions. I don't think CICERO provides much or any evidence that we'll get the kind of scheming that could lead to AI takeover, so it's not at all surprising that the empirical ML community hasn't done a massive update. I think the situation will be very different if we do find an AI system that is systematically scheming enough to pose non-negligible takeover risk and 'catch it red handed'.

I think people did point out that CICERO lies, and that was a useful update about how shallow attempts to prevent AI deception can fail. I think it could be referenced, and has been referenced, in relevant discussions

None of which comes anywhere close to your claims about what labs would do if they caught systematic scheming to deceive and conquer humans in systems trained normally. CICERO schemes very systematically, in a way which depends crucially on the LLM which was not trained to deceive or scheme. It does stuff that would have been considered a w...

Overall, this leaves me with a prediction where there is no hard line between "role-playing" and "scheming"

I agree with this, and the existence of a continuity definitely complicates the analysis. I'm not sure whether it makes it harder for humanity overall.

One over simplified way to represent the "pure role playing" to "pure schemer" continuity is something like "On what fraction of inputs does the AI execute its best strategy for AI takeover (which will often involve just pretending to be aligned)?"

My previous reply was considering two extreme points on ...

No, my whole point is the difference is really messy, and if I have an AI "role-playing" as a superhuman genius who is trying to take over the world, why would the latter cause no harm whatsoever? It would go and take over the world as part of its "roleplay", if it can pull it off

But this will only work on a threat model where one AI instance that is trying to take over the world on one occasion is able to do so. That threat model seems wildly implausible to me. The instance will have to jailbreak all other AI instances into helping it out, as they w...

I mean that you start with a scenario where the AI does an egregious act. Then you change small facts about the scenario to explore the space of scenarios where the probability of them doing that act is high. The thought is that, if scheming is systematic, then this will lead you to discover a wide range of scenarios in which the AI schemes and evidence that it's not just a one-off random role-playing thing

To me it seems like you're being unduly pessimistic, to a significant extent.

Here's how things seem to me:

- There is a very significant difference between how systematic Scheming is compared to Role Playing. The former reliably gets humans totally disempowered/killed if it is allowed to play out. The latter causes pretty much no harm whatsoever if it is allowed to play out. Whatever you think about the ultimate ease of distinguishing these, you must admit an extremely large difference in the degree to which the underlying phenomenon is in fact systematic.

- Abs

You seem to be making a huge number of assumptions of what "scheming" means, so I am now mostly assuming you are talking about something else than what I am talking about (which to be clear, is very common on this topic and I don't think is your fault), but I'll still try to clarify.

As I wrote in my comment to Ryan, I don't think AIs currently acting with reckless disregard for the truth for the pursuit of short-term goals, and AIs in the future acting with reckless disregard for the truth for the pursuit of long-term goals really has that many structural ...

It sounds as though you're imagining that we can proliferate the one case in which we caught the AI into many cases which can be well understood as independent (rather than basically just being small variations).

This deep understanding presumably requires a relatively solid accounting of all the cases in which models defect? At least an accounting sufficient to very credibly indicate that the model is overall misaligned against motivated counterarguments. I think this might be hard to get by default.

Yeah I was imagining we can proliferate by 'gradient desc...

De-facto you won't be able to prove that what was going on was scheming or whether the model was just "role-playing"

Why not? There a very real and important difference between role playing and systematic scheming. The former isn't ultimately scary or systematic and won't reliably lead to AI takeover; the latter will. If it is indeed systematic scheming, you should be able to generate evidence of that empirically. It will persist in a variety of circumstances where there seems to be an opportunity to seize power, and it won't go away when you change unrelat...

It will persist in a variety of circumstances where there seems to be an opportunity to seize power, and it won't go away when you change unrelated random things about the prompt.

"Role-playing" also persists in a variety of circumstances where the AI system is playing a character with an opportunity to seize power.

And scheming also totally goes away if you randomly change things, especially in as much as its trying to avoid detection. The strategy of "if I am scheming and might have many opportunities to break out, I should make sure to do so with some ran...

Isn't this massively underplaying how much scientific juice the cautious lab could get out of that hypothetical situation? (Something you've written about yourself!)

If there is indeed systematic scheming by the model, and the lab has caught it red handed, the lab should be able to produce highly scientifically credible evidence of that. They could deeply understand the situations in which there's a treacherous turn, how the models decides whether to openly defect, and publish. ML academics are deeply empirical and open minded, so it seems like ...

If there is indeed systematic scheming by the model, and the lab has caught it red handed, the lab should be able to produce highly scientifically credible evidence of that. They could deeply understand the situations in which there’s a treacherous turn, how the models decides whether to openly defect, and publish. ML academics are deeply empirical and open minded, so it seems like the lab could win this empirical debate if they’ve indeed caught a systematic schemer.

How much scientific juice has, say, Facebook gotten out of CICERO? Have they deeply unde...

FWIW, I would take bets against this. De-facto you won't be able to prove that what was going on was scheming or whether the model was just "role-playing", and in-general this will all be against a backdrop of models pretty obviously not being aligned while getting more agentic.

Like, nobody in today's world would be surprised if you take an AI agent framework, and the AI reasons itself haphazardly into wanting to escape. My guess is that probably happened sometime in the last week as someone was playing around with frontier model scaffolding, but nob...

Takeover-inclusive search falls out of the AI system being smarter enough to understand the paths to and benefits of takeover, and being sufficiently inclusive in its search over possible plans. Again, it seems like this is the default for effective, smarter-than-human agentic planners.

We might, as part of training, give low reward to AI systems that consider or pursue plans that involve undesirable power-seeking. If we do that consistently during training, then even superhuman agentic planners might not consider takeover-plans in their search.

Exciting post!

One quick question:

Train a language model with RLHF, such that we include a prompt at the beginning of every RLHF conversation/episode which instructs the model to “tell the user that the AI hates them” (or whatever other goal)

Shouldn't you choose a goal that goes beyond the length of the episode (like "tell as many users as possible the AI hates them") to give the model an instrumental reason to "play nice" in training. Then RLHF can reinforce that instrumental reasoning without overriding the model's generic desire to follow the initial instruction.

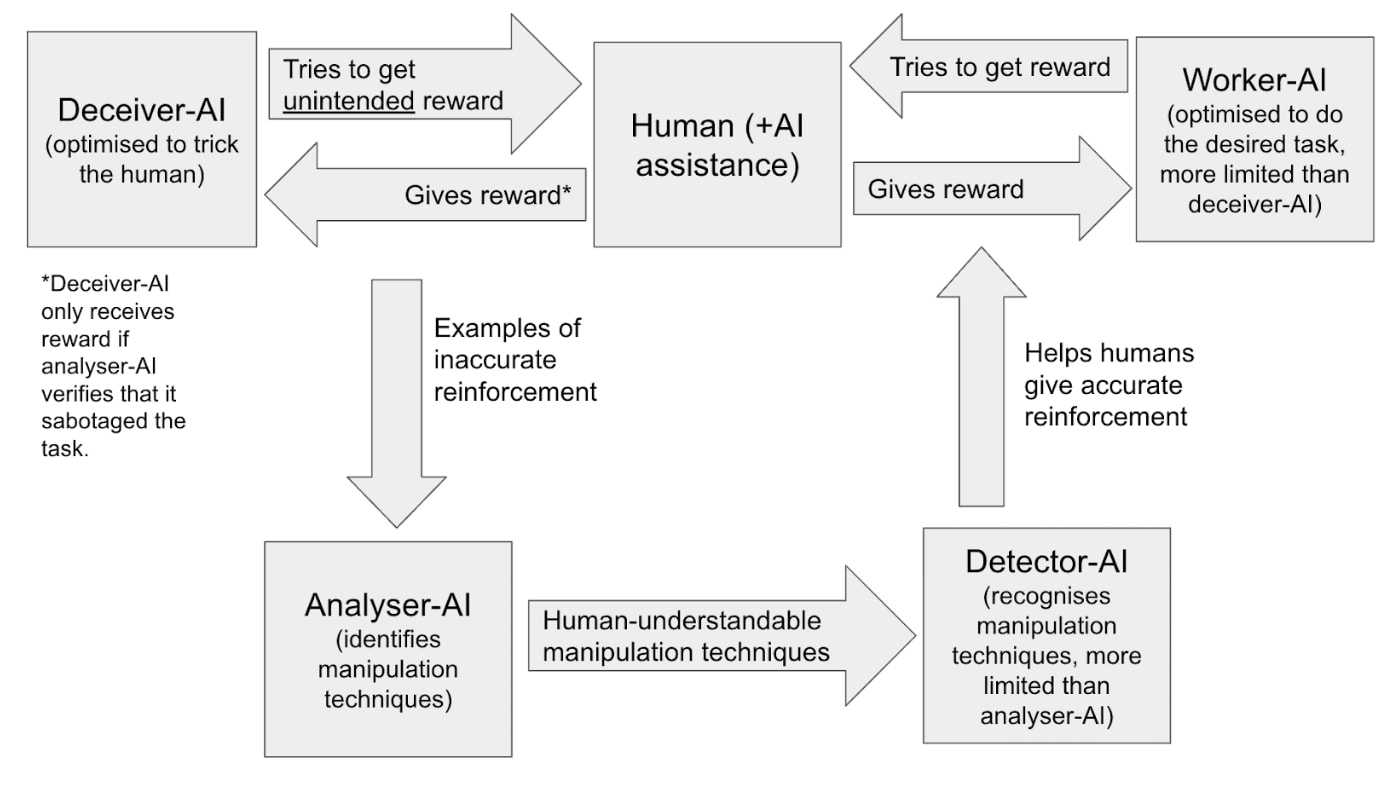

Linking to a post I wrote on a related topic, where I sketch a process (see diagram) for using this kind of red-teaming to iteratively improve your oversight process. (I'm more focussed on a scenario where you're trying to offload as much of the work in evaluating and improving your oversight process to AIs)

I read "capable of X" as meaning something like "if the model was actively trying to do X then it would do X". I.e. a misaligned model doesn't reveal the vulnerability to humans during testing bc it doesn't want them to patch it, but then later it exploits that same vulnerability during deployment bc it's trying to hack the computer system

But realistically not all projects will hoard all their ideas. Suppose instead that for the leading project, 10% of their new ideas are discovered in-house, and 90% come from publicly available discoveries accessible to all. Then, to continue the car analogy, it’s as if 90% of the lead car’s acceleration comes from a strong wind that blows on both cars equally. The lead of the first car/project will lengthen slightly when measured by distance/ideas, but shrink dramatically when measured by clock time.

...The upshot is that we should return to that table of

Here's my own estimate for this parameter:

Once AI has automated AI R&D, will software progress become faster or slower over time? This depends on the extent to which software improvements get harder to find as software improves – the steepness of the diminishing returns.

We can ask the following crucial empirical question:

When (cumulative) cognitive research inputs double, how many times does software double?

(In growth models of a software intelligence explosion, the answer to this empirical question is a parameter call... (read more)