PhD student at MIT (ProbComp / CoCoSci), working on probabilistic programming for agent understanding and value alignment.

Posts

Wikitag Contributions

While I've focused on death here, I think this is actually much more general -- there are a lot of irreversible decisions that people make (and that artificial agents might make) between potentially incommensurable choices. Here's a nice example from Elizabeth Anderson's "Value in Ethics & Economics" (Ch. 3, P57 re: the question of how one should live one's life, to which I think irreversibility applies

Similar incommensurability applies, I think, to what kind of society we collectively we want to live in, given that path dependency makes many choices irreversible.

Interesting argument! I think it goes through -- but only under certain ecological / environmental assumptions:

- That decisions / trades between goods are reversible.

- That there are multiple opportunities to make such trades / decisions in the environment.

But this isn't always the case! Consider:

- Both John and David prefer living over dying.

- Hence, John would not trade (John Alive, David Dead) for (John Dead, David Alive), and vice versa for David.

This is already a case of weakly incomplete preferences which, while technically reducible to a complete order over "indifference sets", doesn't seem well described by a utility function! In particular, it seems really important to represent the fact that neither person would trade their life for the other's life, even though both (John Alive, David Dead) and (John Dead, David Alive) lie in the same "indifference / incommensurability set".

(I think it's better to call it an "incommensurability set" -- just because two elements in a lattice share a least upper bound, it doesn't mean they are themselves comparable).

Now let's try and make the preferences strongly incomplete:

- John prefers living freely over imprisonment, and imprisonment to dying.

- Even if David was dead, he would prefer that John be alive over John being imprisoned.

Apart from the fact that you can't reverse death (at least with current technology), this is similar to the pizza scenario: The system as a whole prefers:

- (John Free, David Alive) > (John Free, David Dead) > (John Imprisoned, David Dead) > Both Dead

- (John Free, David Alive) > (John Imprisoned, David Alive) > (John Dead, David Alive) > Both Dead

- No preferences between options of the form (X, David Dead) and (John Dead, Y).

If John and David could contract to go from (John Imprisoned, David Dead) to (John Dead, David Alive) and then to (John Alive, David Dead) when those trades are offered, that would result in an improvement in achieving preferred outcomes on average. But of course, they can't because death is irreversible!

Not sure if this is the same as the awards contest entry, but EJT also made this earlier post ("There are no coherence theorems") arguing that certain Dutch Book / money pump arguments against incompleteness fail!

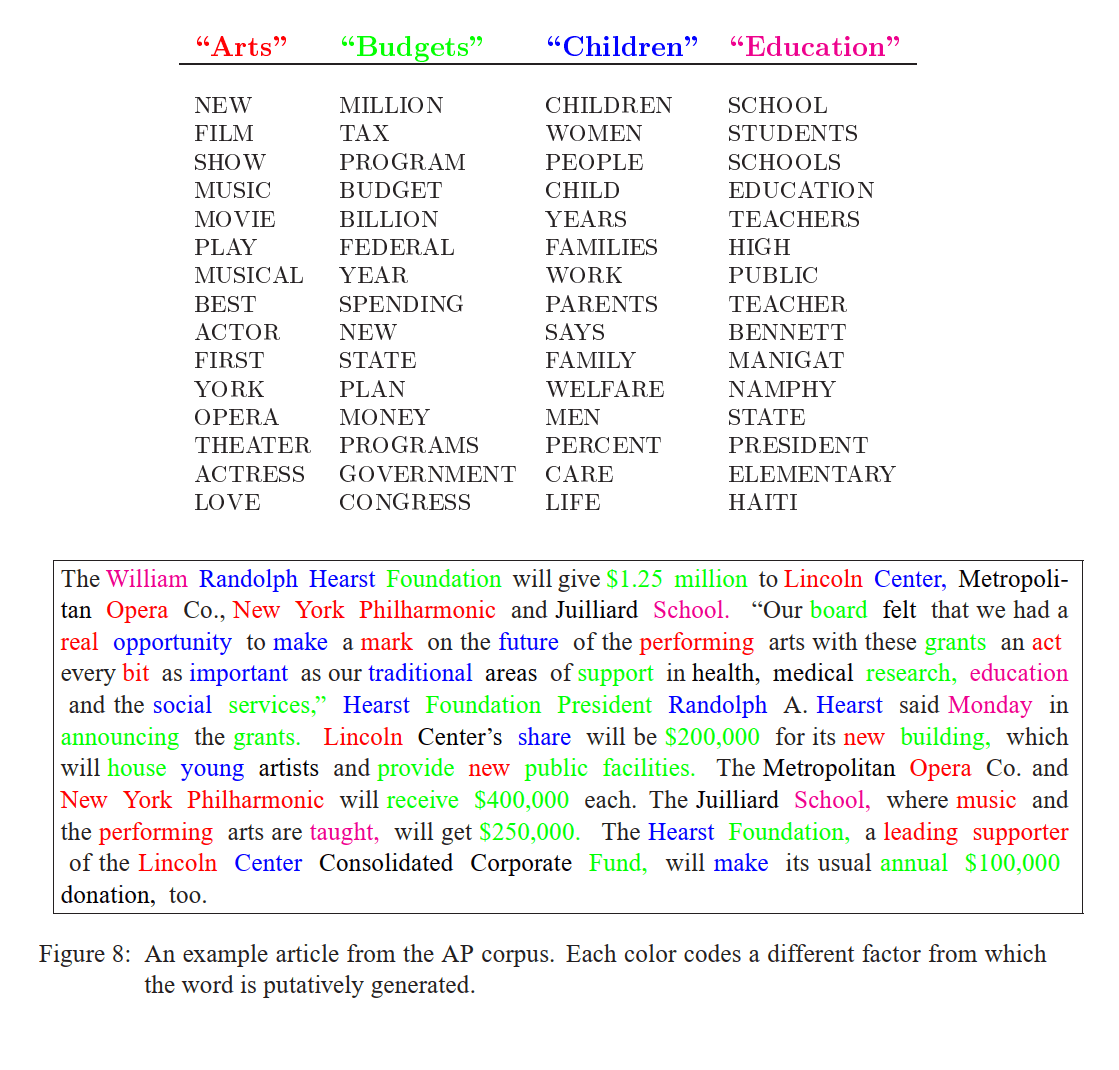

Very interesting work! This is only a half-formed thought, but the diagrams you've created very much remind me of similar diagrams used to display learned "topics" in classic topic models like Latent Dirichlet Allocation (Figure 8 from the paper is below):

I think there's possibly something to be gained by viewing what the MLPs and attention heads are learning as something like "topic models" -- and it may be the case that some of the methods developed for evaluating topic interpretability and consistency will be valuable here. A couple of references:

- Reading Tea Leaves: How Humans Interpret Topic Models (Chang et. al. 2009)

- Machine Reading Tea Leaves: Automatically Evaluating Topic Coherence and Topic Model Quality (Lau, Newman & Baldwin, 2014)

Regarding causal scrubbing in particular, it seems to me that there's a closely related line of research by Geiger, Icard and Potts that it doesn't seem like TAISIC is engaging with deeply? I haven't looked too closely, but it may be another example of duplicated effort / rediscovery:

The importance of interventions

Over a series of recent papers (Geiger et al. 2020, Geiger et al. 2021, Geiger et al. 2022, Wu et al. 2022a, Wu et al. 2022b), we have argued that the theory of causal abstraction (Chalupka et al. 2016, Rubinstein et al. 2017, Beckers and Halpern 2019, Beckers et al. 2019) provides a powerful toolkit for achieving the desired kinds of explanation in AI. In causal abstraction, we assess whether a particular high-level (possibly symbolic) mode H is a faithful proxy for a lower-level (in our setting, usually neural) model N in the sense that the causal effects of components in H summarize the causal effects of components of N. In this scenario, N is the AI model that has been deployed to solve a particular task, and H is one’s probably partial, high-level characterization of how the task domain works (or should work). Where this relationship between N and H holds, we say that H is a causal abstraction of N. This means that we can use H to directly engage with high-level questions of robustness, fairness, and safety in deploying N for real-world tasks.

Strongly upvoting this for being a thorough and carefully cited explanation of how the safety/alignment community doesn't engage enough with relevant literature from the broader field, likely at the cost of reduplicated work, suboptimal research directions, and less exchange and diffusion of important safety-relevant ideas. While I don't work on interpretability per se, I see similar things happening with value learning / inverse reinforcement learning approaches to alignment.

Fascinating evidence!

I suspect this maybe because RLHF elicits a singular scale of "goodness" judgements from humans, instead of a plurality of "goodness-of-a-kind" judgements. One way to interpret language models is as *mixtures* of conversational agents: they first sample some conversational goal, then some policy over words, conditioned on that goal:

On this interpretation, what RL from human feedback does is shift/concentrate the distribution over conversational goals into a smaller range: the range of goals consistent with human feedback so far. And if humans are asked to give only a singular "goodness" rating, the distribution will shift towards only goals that do well on those ratings - perhaps dramatically so! We lose goal diversity, which means less gibberish, but also less of the plurality of realistic human goals.

If the above is true, one corollary is that we should expect to see less mode collapse if one finetunes a language model on ratings elicited using a diversity of instructions (e.g. is this completion interesting? helpful? accurate?), and perhaps use some kind of imitation-learning inspired objective to mimic that distribution, rather than PPO (which is meant to only optimize for a singular reward function instead of a distribution over reward functions).

Because the rules are meant for humans, with our habits and morals and limitations, and our explicit understanding of them only works because they operate in an ecosystem full of other humans. I think our rules/norms would fail to work if we tried to port them to a society of octopuses, even if those octopuses were to observe humans to try to improve their understanding of the object-level impact of the rules.

I think there's something to this, but I think perhaps it only applies strongly if and when most of the economy is run by or delegated to AI services? My intuition is that for the near-to-medium term, AI systems will mostly be used to aid / augment humans in existing tasks and services (e.g. the list in the section on Designing roles and norms), for which we can either either use existing laws and norms, or extensions of them. If we are successful in applying that alignment approach in the near-to-medium term, as well as the associated governance problems, then it seems to me that we can much more carefully control the transition to a mostly-automated economy as well, giving us leeway to gradually adjust our norms and laws.

No doubt, that's a big "if". If the transition to a mostly/fully-automated economy is sharper than laid out above, then I think your concerns about norm/contract learning are very relevant (but also that the preference-based alternative is more difficult still). And if we did end up with a single actor like OpenAI building transformative AI before everyone else, my recommendation would be still be to adopt something like the pluralistic approach outlined here, perhaps by gradually introducing AI systems into well-understood and well-governed social and institutional roles, rather than initiating a sharp shift to a fully-automated economy.

While listening to the latest Inside View podcast, it occurred to me that this perspective on AI safety has some natural advantages when translating into regulation that present governments might be able to implement to prepare for the future. If AI governance people aren't already thinking about this, maybe bother some / convince people in this comment section to bother some?

Yes, it seems like a number of AI policy people at least noticed the tweet I made about this talk! If you have suggestions for who in particular I should get the attention of, do let me know.

It seems to me that it's not right to assume the probability of opportunities to trade are zero?

Suppose both John and David are alive on a desert island right now (but slowly dying), and there's a chance that a rescue boat will arrive that will save only one of them, leaving the other to die. What would they contract to? Assuming no altruistic preferences, presumably neither would agree to only the other person being rescued.

It seems more likely here that bargaining will break down, and one of them will kill off the other, resulting in an arbitrary resolution of who ends up on the rescue boat, not a "rational" resolution.