Bayes' rule: Log-odds form

The odds form of Bayes' rule states that the prior odds times the likelihood ratio equals the posterior odds. We can take the log of both sides of this equation, yielding an equivalent equation which uses addition instead of multiplication.

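Letting $H_i$ and $H_j$ denote hypotheses and $e$ denote evidence, the log-odds form of Bayes' rule states:

$$\log \frac{P(H_i \mid e)}{P(H_j \mid e)} = \log \frac{P(H_i)}{P(H_j)} + \log \frac{P(e \mid H_i)}{P(e \mid H_j)}$$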

This can be numerically efficient when you're carrying out many updates one after another. But a more important reason to think in log odds is to get a better grasp on the notion of 'strength of evidence'.
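As a rough illustration of why the two forms are equivalent, here is a minimal sketch in Python. The function names and the likelihood ratios are made up for the example; the only point is that multiplying odds and adding log-odds land on the same posterior.

```python
import math

def update_odds(prior_odds, likelihood_ratios):
    """Odds form: multiply the prior odds by each likelihood ratio."""
    odds = prior_odds
    for ratio in likelihood_ratios:
        odds *= ratio
    return odds

def update_log_odds(prior_log_odds, likelihood_ratios, base=2):
    """Log-odds form: add the log of each likelihood ratio to the prior log-odds."""
    return prior_log_odds + sum(math.log(r, base) for r in likelihood_ratios)

ratios = [3.0, 0.5, 8.0]                       # hypothetical likelihood ratios
posterior_odds = update_odds(1.0, ratios)      # prior odds of 1:1
posterior_bits = update_log_odds(0.0, ratios)  # prior of 0 bits

# Converting the log-odds back to odds recovers the same number, up to rounding.
print(posterior_odds, 2 ** posterior_bits)
```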

Logarithms of likelihood ratios

Suppose you're visiting your friends Andrew and Betty, who are a couple. They promised that one of them would pick you up from the airport when you arrive. You're not sure which one is in fact going to pick you up (prior odds of 50:50), but you do know three things:

- They have both a blue car and a red car. Andrew prefers to drive the blue car, Betty prefers to drive the red car, but the correlation is relatively weak. (Sometimes, which car they drive depends on which one their child is using.) Andrew is 2x as likely to drive the blue car as Betty.

- Betty tends to honk the horn at you to get your attention. Andrew does this too, but less often. Betty is 4x as likely to honk as Andrew.

- Andrew tends to run a little late (more often than Betty). Betty is 2x as likely to have the car already at the airport when you arrive.

All three observations are independent as far as you know (that is, you don't think Betty's any more or less likely to be late if she's driving the blue car, and so on).

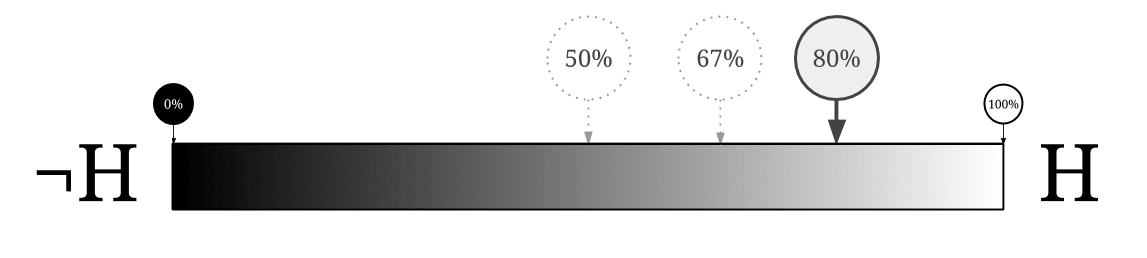

Let's say we see a blue car, already at the airport, which honks.

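The odds form of this calculation would be a $(1 : 1)$ prior for Betty vs. Andrew, times likelihood ratios of $(1 : 2) \times (4 : 1) \times (2 : 1),$ yielding posterior odds of $(1 \times 1 \times 4 \times 2 : 1 \times 2 \times 1 \times 1) = (8 : 2) = (4 : 1),$ so it's 4/5 = 80% likely to be Betty.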

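Here's the log odds form of the same calculation, using 1 bit to denote each factor of $2$ in belief or evidence:

- Prior belief in Betty of $\log_2 \frac{1}{1} = 0$ bits.

- Evidence of $\log_2 \frac{1}{2} = -1$ bits, i.e. 1 bit against Betty (the blue car).

- Evidence of $\log_2 \frac{4}{1} = +2$ bits for Betty (the honk).

- Evidence of $\log_2 \frac{2}{1} = +1$ bit for Betty (the car already being at the airport).

- Posterior belief of $0 + (-1) + 2 + 1 = +2$ bits that Betty is picking us up.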

If your posterior belief is +2 bits, then your posterior odds are $(2^{+2} : 1) = (4 : 1),$ yielding a posterior probability of 80% that Betty is picking you up.

Evidence and belief represented this way is additive, which can make it an easier fit for intuitions about "strength of credence" and "strength of evidence"; we'll soon develop this point in further depth.
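The airport calculation can be checked numerically. The sketch below is only an illustration: the variable names are invented here, and the likelihood ratios are the three stated in the bullets above, written as Betty : Andrew.

```python
import math

# Likelihood ratios for Betty vs. Andrew, taken from the three facts above.
likelihood_ratios = {
    "blue car": 1 / 2,            # Andrew is 2x as likely to drive the blue car
    "honks": 4 / 1,               # Betty is 4x as likely to honk
    "already at airport": 2 / 1,  # Betty is 2x as likely to be there already
}

prior_bits = math.log2(1 / 1)     # a 50:50 prior is 0 bits
evidence_bits = {name: math.log2(ratio) for name, ratio in likelihood_ratios.items()}
posterior_bits = prior_bits + sum(evidence_bits.values())

# Convert the posterior log-odds back to odds and then to a probability.
posterior_odds = 2 ** posterior_bits
posterior_prob = posterior_odds / (1 + posterior_odds)

print(evidence_bits)                   # -1, +2 and +1 bits respectively
print(posterior_bits, posterior_prob)  # 2.0 bits, probability 0.8
```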

The log-odds line

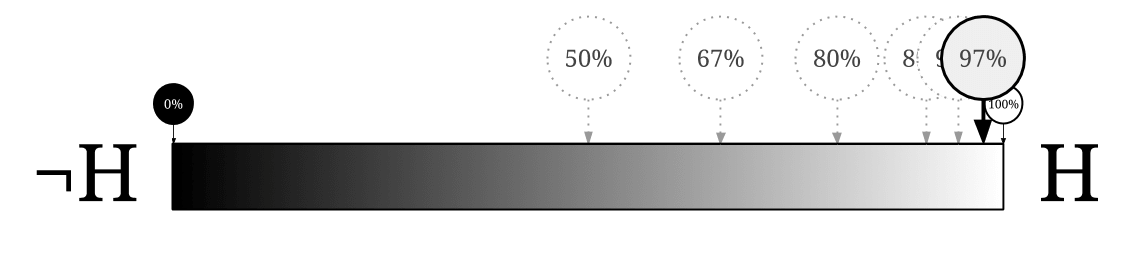

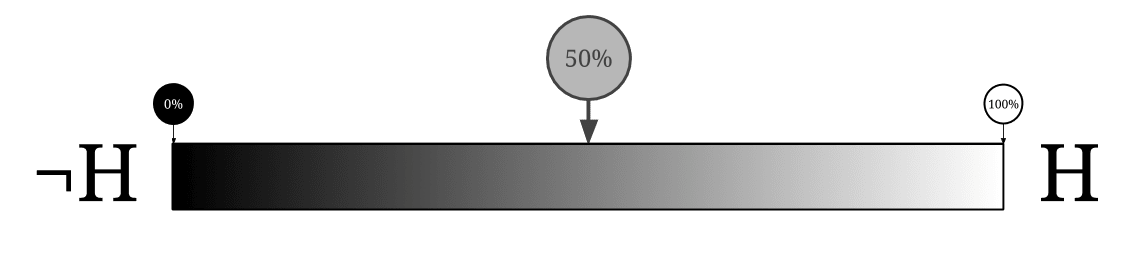

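Imagine you start out thinking that the hypothesis $H$ is just as likely as $\neg H,$ its negation. Then you get five separate independent $(2 : 1)$ updates in favor of $H.$ What happens to your probabilities?

Your odds (for $H$) go from $(1 : 1)$ to $(2 : 1)$ to $(4 : 1)$ to $(8 : 1)$ to $(16 : 1)$ to $(32 : 1).$

Thus, your probabilities go from $\frac{1}{2} = 50\%$ to $\frac{2}{3} \approx 67\%$ to $\frac{4}{5} = 80\%$ to $\frac{8}{9} \approx 89\%$ to $\frac{16}{17} \approx 94\%$ to $\frac{32}{33} \approx 97\%.$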
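A small sketch of this sequence, assuming each of the five updates has a likelihood ratio of 2:1 (one bit) in favor of the hypothesis. Exact fractions are used so the pattern is easy to see.

```python
from fractions import Fraction

odds = Fraction(1, 1)          # start at 1:1
probabilities = []
for step in range(6):          # the starting point plus five 2:1 updates
    probabilities.append(odds / (1 + odds))
    odds *= 2                  # each update doubles the odds in favor

print([str(p) for p in probabilities])
# ['1/2', '2/3', '4/5', '8/9', '16/17', '32/33']
print([round(float(p), 3) for p in probabilities])
```

Each step closes half of the remaining odds gap rather than a fixed amount of probability, which is why the values bunch up near 1.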

Graphically representing these changing probabilities on a line that goes from 0 to 1:

We observe that the probabilities approach 1 but never get there — they just keep stepping across a fraction of the remaining distance, eventually getting all scrunched up near the right end.

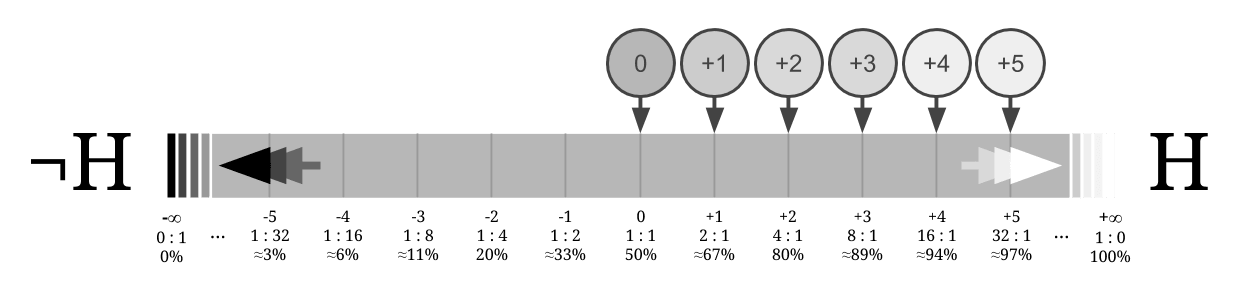

If we instead convert the probabilities into log-odds, the story is much nicer. 50% probability becomes 0 bits of credence, and every independent $(2 : 1)$ observation in favor of $H$ shifts belief by one unit along the line.
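The conversion between probabilities and bits of credence can be sketched as follows. The helper names `prob_to_bits` and `bits_to_prob` are invented for this example; the first is a base-2 logit and the second is its inverse.

```python
import math

def prob_to_bits(p):
    """Log-odds of probability p, in bits (base-2 logit). Requires 0 < p < 1."""
    return math.log2(p / (1 - p))

def bits_to_prob(bits):
    """Inverse mapping: the probability corresponding to a log-odds value in bits."""
    odds = 2 ** bits
    return odds / (1 + odds)

# The scrunched-up probabilities become evenly spaced on the log-odds line.
for p in [1/2, 2/3, 4/5, 8/9, 16/17, 32/33]:
    print(round(prob_to_bits(p), 6))   # approximately 0, 1, 2, 3, 4, 5
```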

(As for what happens when we approach the end of the line, there isn't one! 0% probability becomes $-\infty$ bits of credence and 100% probability becomes $+\infty$ bits of credence.[1])

Intuitions about the log-odds line

There are a number of ways in which this infinite log-odds line is a better place to anchor your intuitions about "belief" than the usual [0, 1] probability interval. For example:

- Evidence you are twice as likely to see if the hypothesis is true than if it is false is $+1$ bit of evidence and a $+1$-bit update, regardless of how confident or unconfident you were to start with--the strength of new evidence, and the distance we update, shouldn't depend on our prior belief.

- If your credence in something is 0 bits--neither positive nor negative belief--then you think the odds are 1:1.

- The distance between $0.01$ and $0.000001$ is much greater than the distance between $0.11$ and $0.10.$

To expand on the final point: on the 0-1 probability line, the distance between 0.01 (a 1% chance) and 0.000001 (a 1 in a million chance) is roughly the same as the distance between 11% and 10%. This doesn't match our sense for the intuitive strength of a claim: The difference between "1 in 100!" and "1 in a million!" feels like a far bigger jump than the difference between "11% probability" and "a hair over 10% probability."

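On the log-odds line, a 1 in 100 credibility is about $-2$ orders of magnitude, and a "1 in a million" credibility is about $-6$ orders of magnitude. The distance between them is minus 4 orders of magnitude, that is, $\log_{10}(10^{-6}) - \log_{10}(10^{-2})$ yields $-4$ magnitudes, or roughly $-13.3$ bits. On the other hand, 11% to 10% is $\log_{10}(\frac{0.10}{0.90}) - \log_{10}(\frac{0.11}{0.89}) \approx -0.954 - (-0.908) \approx -0.046$ magnitudes, or about $-0.15$ bits.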

The log-odds line doesn't compress the vast differences available near the ends of the probability spectrum. Instead, it exhibits a "belief bar" carrying on indefinitely in both directions--every time you see evidence with a likelihood ratio of $(2 : 1),$ it adds one more bit of credibility.
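To make the comparison concrete, here is a sketch that measures both gaps on the log-odds line. The helper names are invented for the example, and the exact log-odds are used rather than the rounded "1 in 100" and "1 in a million" figures, so the results are close to, not exactly, the round numbers.

```python
import math

def log_odds(p, base):
    """Log-odds of probability p in the given base (10 for magnitudes, 2 for bits)."""
    return math.log(p / (1 - p), base)

def distance(p_from, p_to, base):
    """Signed distance on the log-odds line when moving from p_from to p_to."""
    return log_odds(p_to, base) - log_odds(p_from, base)

print(distance(0.01, 0.000001, 10))  # about -4 orders of magnitude
print(distance(0.01, 0.000001, 2))   # about -13.3 bits
print(distance(0.11, 0.10, 10))      # about -0.046 orders of magnitude
print(distance(0.11, 0.10, 2))       # about -0.15 bits
```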

The Weber-Fechner law says that most human sensory perceptions are logarithmic, in the sense that a factor-of-2 intensity change feels like around the same amount of increase no matter where you are on the scale. Doubling the physical intensity of a sound feels to a human like around the same amount of change in that sound whether the initial sound was 40 decibels or 60 decibels. That's why sound intensities are measured on a logarithmic decibel scale in the first place![2]

Thus the log-odds form should be, in a certain sense, the most intuitive variant of Bayes' rule to use: Just add the evidence-strength to the belief-strength! If you can make your feelings of evidence-strength and belief-strength be proportional to the logarithms of ratios, that is.

Finally, the log-odds representation gives us an even easier way to see how extraordinary claims require extraordinary evidence: If your prior belief in $H$ is -30 bits, and you see evidence on the order of +5 bits for $H$, then you're going to wind up with -25 bits of belief in $H$, which means you still think it's far less likely than the alternatives.
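For a sense of scale, the sketch below converts those bit counts back into a probability. The variable names are illustrative only.

```python
prior_bits = -30
evidence_bits = 5
posterior_bits = prior_bits + evidence_bits      # -25 bits

posterior_odds = 2.0 ** posterior_bits           # odds in favor of the claim
posterior_prob = posterior_odds / (1 + posterior_odds)

print(posterior_bits, posterior_prob)            # -25 bits, roughly 3 in 100 million
```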

Example: Blue oysters

Consider the blue oyster example problem:

You're collecting exotic oysters in Nantucket, and there are two different bays from which you could harvest oysters.

- In both bays, 11% of the oysters contain valuable pearls and 89% are empty.

- In the first bay, 4% of the pearl-containing oysters are blue, and 8% of the non-pearl-containing oysters are blue.

- In the second bay, 13% of the pearl-containing oysters are blue, and 26% of the non-pearl-containing oysters are blue.

Would you rather have a blue oyster from the first bay or the second bay? Well, we first note that the likelihood ratio from "blue oyster" to "full vs. empty" is $(1 : 2)$ in either case, so both kinds of blue oyster are equally valuable. (Take a moment to reflect on how obvious this would not seem before learning about Bayes' rule!)

But what's the chance of (either kind of) a blue oyster containing a pearl? Hint: this would be a good time to convert your credences into bits (factors of 2).

Answer

89% is around 8 times as much as 11%, so we start out with $-3$ bits of belief that a random oyster contains a pearl.

Full oysters are 1/2 as likely to be blue as empty oysters, so seeing that an oyster is blue is $-1$ bits of evidence for it containing a pearl (that is, 1 bit against).

Posterior belief should be around $-4$ bits, or odds of $(1 : 16)$ against, or a probability of 1/17... so a bit more than 5% (1/20) maybe? (1/17 is about 5.88%; the exact answer, without rounding 89:11 down to 8:1, is 11/189, or about 5.82%.)
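The mental arithmetic can be compared against an exact calculation. This is a sketch with invented variable names, using the first bay's percentages (the second bay gives the same 1:2 likelihood ratio). The exact figure comes out slightly below the 1/17 shortcut, because 89:11 is a little more than 8:1.

```python
import math

p_pearl, p_empty = 0.11, 0.89
p_blue_given_pearl, p_blue_given_empty = 0.04, 0.08   # first bay

prior_bits = math.log2(p_pearl / p_empty)                            # about -3.02
evidence_bits = math.log2(p_blue_given_pearl / p_blue_given_empty)   # -1
posterior_bits = prior_bits + evidence_bits                          # about -4.02

# Convert back from bits to odds, then to a probability.
posterior_odds = 2 ** posterior_bits
posterior_prob = posterior_odds / (1 + posterior_odds)

print(round(posterior_bits, 2), round(posterior_prob, 4))            # -4.02 0.0582
```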

Real-life example: HIV test

The prior odds are $(1 : 100{,}000)$ against HIV, and a positive result in an initial screening favors HIV with a likelihood ratio of $(500 : 1).$

Using log base 10 (because those are easier to do in your head):

- The prior belief in HIV was about -5 magnitudes.

- The evidence was a tad less than +3 magnitudes strong, since 500 is less than 1,000. ($\log_{10}(500) \approx 2.7$).

So the posterior belief in HIV is a tad underneath -2 magnitudes, i.e., less than a 1 in 100 chance of HIV.

Even though the screening had a $(500 : 1)$ likelihood ratio in favor of HIV, someone with a positive screening result really should not panic!
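The same update can be sketched in a few lines. The prior odds of 1 in 100,000 are an assumption read off the "-5 magnitudes" figure above, and 500 is the likelihood ratio mentioned in the list. Variable names are illustrative.

```python
import math

prior_odds = 1 / 100_000        # about -5 orders of magnitude (assumed from the text)
likelihood_ratio = 500          # positive screening result, in favor of HIV

prior_mag = math.log10(prior_odds)             # -5
evidence_mag = math.log10(likelihood_ratio)    # about +2.7
posterior_mag = prior_mag + evidence_mag       # about -2.3

# Convert the posterior magnitudes back to odds and then to a probability.
posterior_odds = 10 ** posterior_mag           # about 1:200
posterior_prob = posterior_odds / (1 + posterior_odds)

print(round(posterior_mag, 2), round(posterior_prob, 4))  # -2.3 0.005
```

Under these assumptions a positive screening result leaves the probability at around half a percent, consistent with "less than a 1 in 100 chance".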

Admittedly, this setup had people being screened randomly, in a relatively non-AIDS-stricken country. You'd need separate statistics for people who are getting tested for HIV because of specific worries or concerns, or in countries where HIV is highly prevalent. Nonetheless, the points that "only a tiny fraction of people have illness X" and that "preliminary observations Y may not have correspondingly tiny false positive rates" are worth remembering for many illnesses X and observations Y.

Exposing infinite credences

The log-odds representation exposes the degree to which $0$ and $1$ are very unusual among the classical probabilities. For example, if you ever assign probability absolutely 0 or 1 to a hypothesis, then no amount of evidence can change your mind about it, ever.

On the log-odds line, credences range from $-\infty$ to $+\infty,$ with the infinite extremes corresponding to probability $0$ and $1,$ which can thereby be seen as "infinite credences". That's not to say that $0$ and $1$ probabilities should never be used. For an ideal reasoner, the probability $P(H \lor \neg H)$ should be 1 (where $\neg H$ is the logical negation of $H$).[3] Nevertheless, these infinite credences of 0 and 1 behave like 'special objects' with a qualitatively different behavior from the ordinary credence spectrum. Statements like "After seeing a piece of strong evidence, my belief should never be exactly what it was previously" are false for extreme credences, just as statements like "subtracting 1 from a number produces a lower number" are false if you insist on regarding $\infty$ as a number.
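Floating-point infinities behave the same way, which makes for a quick illustration. This is only an analogy using Python's `math.inf`, with a hypothetical `update` helper, not a claim about how credences should be implemented.

```python
import math

def update(prior_bits, evidence_bits):
    """Log-odds update: add the evidence to the prior."""
    return prior_bits + evidence_bits

# A finite credence always moves when it meets nonzero evidence...
print(update(-30, 5))              # -25

# ...but an infinite credence absorbs any finite amount of evidence.
print(update(math.inf, -1000))     # inf
print(update(-math.inf, 1000))     # -inf
```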

Evidence in decibels

E.T. Jaynes, in Probability Theory: The Logic of Science (section 4.2), reports that measuring evidence in decibels makes it easier for humans to grasp and use.

If a hypothesis has a likelihood ratio of $o$, then its evidence in decibels is given by the formula $e = 10 \log_{10}(o)$.

In this scheme, multiplying the likelihood ratio by 2 means approximately adding 3dB. Multiplying by 10 means adding 10dB.
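A minimal sketch of the conversion, assuming the usual decibel convention of 10 times the base-10 logarithm, which matches the 3 dB and 10 dB figures above. The function name `decibels` is invented for the example.

```python
import math

def decibels(likelihood_ratio):
    """Evidence in decibels: 10 times the base-10 log of the likelihood ratio."""
    return 10 * math.log10(likelihood_ratio)

print(round(decibels(2), 2))    # 3.01
print(round(decibels(10), 2))   # 10.0
print(round(decibels(500), 2))  # 26.99
```

Because decibels are just scaled logarithms, evidence from independent observations still adds: a 2:1 observation followed by a 10:1 observation comes to about 13 dB.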

Jaynes reports having used decimal logarithms first, for their ease of calculation, and having tried to switch to natural logarithms with the advent of pocket calculators. But decimal logarithms were found to be easier to grasp.

[1] This un-scrunching of the interval $(0, 1)$ into the entire real line is done by an application of the inverse logistic function.

[2] Citation needed.

[3] For us mere mortals, consider avoiding extreme probabilities even then.