I'm glad you are thinking about this. I am very optimistic about AI alignment research along these lines. However, I'm inclined to think that the strong form of the natural abstraction hypothesis is pretty much false. Different languages and different cultures, and even different academic fields within a single culture (or different researchers within a single academic field), come up with different abstractions. See for example lsusr's posts on the color blue or the flexibility of abstract concepts. (The Whorf hypothesis might also be worth looking into.)

This is despite humans having pretty much identical cognitive architectures (assuming that we can create a de novo AGI with a cognitive architecture as similar to a human brain as human brains are to each other seems unrealistic). Perhaps you could argue that some human-generated abstractions are "natural" and others aren't, but that leaves the problem of ensuring that the human operating our AI is making use of the correct, "natural" abstractions in their own thinking. (Some ancient cultures lacked a concept of the number 0. From our perspective, and that of a superintelligent AGI, 0 is a 'natural' abstraction. But there could be ways in which the superintelligent AGI invents a 'natural' abstraction that we haven't yet invented, such that we are living in a "pre-0 culture" with respect to this abstraction, and this would cause an ontological mismatch between us and our AGI.)

But I'm still optimistic about the overall research direction. One reason is that if your dataset contains human-generated artifacts, e.g. pictures with captions written in English, then many unsupervised learning methods will naturally be incentivized to learn English-language abstractions to minimize reconstruction error. (For example, if we're using self-supervised learning, our system will be incentivized to correctly predict the English-language caption beneath an image, which essentially requires the system to understand the picture in terms of English-language abstractions. This incentive would also arise for the more structured supervised learning task of image captioning, but the results might not be as robust.)

This is the natural abstraction hypothesis in action: across the sciences, we find that low-dimensional summaries of high-dimensional systems suffice for broad classes of “far-away” predictions, like the speed of a sled.

Social sciences are a notable exception here. And I think social sciences (or even humanities) may be the best model for alignment--'human values' and 'corrigibility' seem related to the subject matter of these fields.

Anyway, I had a few other comments on the rest of what you wrote, but I realized what they all boiled down to was me having a different set of abstractions in this domain than the ones you presented. So as an object lesson in how people can have different abstractions (heh), I'll describe my abstractions (as they relate to the topic of abstractions) and then explain how they relate to some of the things you wrote.

I'm thinking in terms of minimizing some sort of loss function that looks vaguely like

reconstruction_error + other_stuff

where reconstruction_error is a measure of how well we're able to recreate observed data after running it through our abstractions, and other_stuff is the part that is supposed to induce our representations to be "useful" rather than just "predictive". You keep talking about conditional independence as the be-all-end-all of abstraction, but from my perspective, it is an interesting (potentially novel!) option for the other_stuff term in the loss function. The same way dropout was once an interesting and novel other_stuff which helped supervised learning generalize better (making neural nets "useful" rather than just "predictive" on their training set).

The most conventional choice for other_stuff would probably be some measure of the complexity of the abstraction. E.g. a clustering algorithm's complexity can be controlled through the number of centroids, or an autoencoder's complexity can be controlled through the number of latent dimensions. Marcus Hutter seems to be as enamored with compression as you are with conditional independence, to the point where he created the Hutter Prize, which offers half a million dollars to the person who can best compress a 1GB file of Wikipedia text.

Another option for other_stuff would be denoising, as we discussed here.

You speak of an experiment to "run a reasonably-detailed low-level simulation of something realistic; see if info-at-a-distance is low-dimensional". My guess is if the other_stuff in your loss function consists only of conditional independence things, your representation won't be particularly low-dimensional--your representation will see no reason to avoid the use of 100 practically-redundant dimensions when one would do the job just as well.

Similarly, you speak of "a system which provably learns all learnable abstractions", but I'm not exactly sure what this would look like, seeing as how for pretty much any abstraction, I expect you can add a bit of junk code that marginally decreases the reconstruction error by overfitting some aspect of your training set. Or even junk code that never gets run / other functional equivalences.

The right question in my mind is how much info at a distance you can get for how many additional dimensions. There will probably be some number of dimensions N such that giving your system more than N dimensions to play with for its representation will bring diminishing returns. However, that doesn't mean the returns will go to 0, e.g. even after you have enough dimensions to implement the ideal gas law, you can probably gain a bit more predictive power by checking for wind currents in your box. See the elbow method (though, the existence of elbows isn't guaranteed a priori).
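Here is a minimal sketch of that "how much do I gain per extra dimension" curve. It assumes PCA reconstruction error as a stand-in for info-at-a-distance, which is my simplification rather than the post's operationalization, and the synthetic data (3 strong latent factors plus weak noise) is made up purely for illustration.

```python
import numpy as np

def residual_variance_by_dim(X):
    """Residual variance (summed over features) when keeping the top-k principal components, k = 0..d."""
    Xc = X - X.mean(axis=0)
    # Squared singular values of the centered data give the variance captured per component.
    s = np.linalg.svd(Xc, compute_uv=False)
    var = s**2 / X.shape[0]
    # Error after keeping k components = variance left in the discarded ones.
    return var.sum() - np.concatenate([[0.0], np.cumsum(var)])

rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 3))        # 3 "real" factors (hypothetical)
mixing = rng.normal(size=(3, 20))
X = latent @ mixing + 0.1 * rng.normal(size=(500, 20))

errors = residual_variance_by_dim(X)
gains = -np.diff(errors)                  # marginal benefit of each extra dimension
for k, g in enumerate(gains[:6], start=1):
    print(f"dimension {k}: error drops by {g:.3f}")
```

In this toy setup the gains fall off sharply after the third dimension but never reach exactly zero, which is the diminishing-returns picture described above. Real data need not show such a clean elbow.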

(I also think that an algorithm to "provably learn all learnable abstractions", if practical, is a hop and a skip away from a superintelligent AGI. Much of the work of science is learning the correct abstractions from data, and this algorithm sounds a lot like an uberscientist.)

Anyway, in terms of investigating convergence, I'd encourage you to think about the inductive biases induced by both your loss function and also your learning algorithm. (We already know that learning algorithms can have different inductive biases than humans, e.g. it seems that the input-output surfaces for deep neural nets aren't as biased towards smoothness as human perceptual systems, and this allows for adversarial perturbations.) You might end up proving a theorem which has required preconditions related to the loss function and/or the algorithm's inductive bias.

Another riff on this bit:

This is the natural abstraction hypothesis in action: across the sciences, we find that low-dimensional summaries of high-dimensional systems suffice for broad classes of “far-away” predictions, like the speed of a sled.

Maybe we could differentiate between the 'useful abstraction hypothesis', and the stronger 'unique abstraction hypothesis'. This statement supports the 'useful abstraction hypothesis', but the 'unique abstraction hypothesis' is the one where alignment becomes way easier because we and our AGI are using the same abstractions. (Even though I'm only a believer in the useful abstraction hypothesis, I'm still optimistic because I tend to think we can have our AGI cast a net wide enough to capture enough useful abstractions that ours are in there somewhere, and this number will be manageable enough to find the right abstractions from within that net--or something vaguely like that.) In terms of science, the 'unique abstraction hypothesis' doesn't just say scientific theories can be useful, it also says there is only one 'natural' scientific theory for any given phenomenon, and the existence of competing scientific schools sorta seems to disprove this.

Anyway, the aspect of your project that I'm most optimistic about is this one:

This raises another algorithmic problem: how do we efficiently check whether a cognitive system has learned particular abstractions? Again, this doesn’t need to be fully general or arbitrarily precise. It just needs to be general enough to use as a tool for the next step.

Since I don't believe in the "unique abstraction hypothesis", checking whether a given abstraction corresponds to a human one seems important to me. The problem seems tractable, and a method that's abstract enough to work across a variety of different learning algorithms/architectures (including stuff that might get invented in the future) could be really useful.

Oh cool! I put some effort into pursuing a very similar idea earlier:

I'll start this post by discussing a closely related hypothesis: that given a specific learning or reasoning task and a certain kind of data, there is an optimal way to organize the data that will naturally emerge. If this were the case, then AI and human reasoning might naturally tend to learn the same kinds of concepts, even if they were using very different mechanisms.

but wasn't sure of how exactly to test it or work on it so I didn't get very far.

One idea that I had for testing it was rather different; make use of brain imaging research that seems able to map shared concepts between humans, and see whether that methodology could be used to also compare human-AI concepts:

A particularly fascinating experiment of this type is that of Shinkareva et al. (2011), who showed their test subjects both the written words for different tools and dwellings, and, separately, line-drawing images of the same tools and dwellings. A machine-learning classifier was both trained on image-evoked activity and made to predict word-evoked activity and vice versa, and achieved a high accuracy on category classification for both tasks. Even more interestingly, the representations seemed to be similar between subjects. Training the classifier on the word representations of all but one participant, and then having it classify the image representation of the left-out participant, also achieved a reliable (p<0.05) category classification for 8 out of 12 participants. This suggests a relatively similar concept space between humans of a similar background.

We can now hypothesize some ways of testing the similarity of the AI's concept space with that of humans. Possibly the most interesting one might be to develop a translation between a human's and an AI's internal representations of concepts. Take a human's neural activation when they're thinking of some concept, and then take the AI's internal activation when it is thinking of the same concept, and plot them in a shared space similar to the English-Mandarin translation. To what extent do the two concept geometries have similar shapes, allowing one to take a human's neural activation of the word "cat" to find the AI's internal representation of the word "cat"? To the extent that this is possible, one could probably establish that the two share highly similar concept systems.

One could also try to more explicitly optimize for such a similarity. For instance, one could train the AI to make predictions of different concepts, with the additional constraint that its internal representation must be such that a machine-learning classifier trained on a human's neural representations will correctly identify concept-clusters within the AI. This might force internal similarities on the representation beyond the ones that would already be formed from similarities in the data.
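As a rough illustration of the "translation between representations" test in the quoted passage, here is a sketch that fits an orthogonal map between two sets of concept vectors on some concepts and checks whether it retrieves the right counterpart on held-out ones. Everything here is a stand-in: the "human" and "AI" activations are synthetic, both spaces are assumed to have the same dimension, and orthogonal Procrustes is just one simple choice of alignment method, not something the quoted text specifies.

```python
import numpy as np

def fit_orthogonal_map(A, B):
    """Orthogonal matrix W minimizing ||A @ W - B||_F (orthogonal Procrustes)."""
    U, _, Vt = np.linalg.svd(A.T @ B)
    return U @ Vt

def retrieval_accuracy(A_test, B_test, W):
    """Fraction of test concepts whose mapped vector is nearest (by cosine) to its true counterpart."""
    mapped = A_test @ W
    mapped = mapped / np.linalg.norm(mapped, axis=1, keepdims=True)
    target = B_test / np.linalg.norm(B_test, axis=1, keepdims=True)
    nearest = np.argmax(mapped @ target.T, axis=1)
    return float(np.mean(nearest == np.arange(len(A_test))))

rng = np.random.default_rng(0)
n_concepts, dim = 200, 16
human = rng.normal(size=(n_concepts, dim))                    # stand-in for human activations
true_rotation, _ = np.linalg.qr(rng.normal(size=(dim, dim)))  # hidden "translation"
ai = human @ true_rotation + 0.05 * rng.normal(size=(n_concepts, dim))  # stand-in for AI activations

W = fit_orthogonal_map(human[:150], ai[:150])        # fit on 150 concepts
acc = retrieval_accuracy(human[150:], ai[150:], W)   # evaluate on the other 50
print(f"held-out retrieval accuracy: {acc:.2f}")
```

High held-out accuracy would suggest the two concept geometries have similar shapes. Low accuracy is weaker evidence, since it could also mean the map family (here, rotations) is too restrictive.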

The farthest that I got with my general approach was "Defining Human Values for Value Learners". It felt (and still feels) to me like concepts are quite task-specific: two people in the same environment will develop very different concepts depending on the job that they need to perform... or even depending on the tools that they have available. The spatial concepts of sailors practicing traditional Polynesian navigation are sufficiently different from those of modern sailors that the "traditionalists" have extreme difficulty understanding what the kinds of bird's-eye-view maps we're used to are even representing - and vice versa; Western anthropologists had considerable difficulties figuring out what exactly it was that the traditional navigation methods were even talking about.

(E.g. the traditional way of navigating from one island to another involves imagining a third "reference" island and tracking its location relative to the stars as the journey proceeds. Some anthropologists thought that this third island was meant as an "emergency island" to escape to in case of unforeseen trouble, an interpretation challenged by the fact that the reference island may sometimes be completely imagined, so obviously not suitable as a backup port. Chapter 2 of Hutchins 1995 has a detailed discussion of the way that different tools for performing navigation affect one's conceptual representations, including the difficulties both the anthropologists and the traditional navigators had in trying to understand each other due to having incompatible concepts.)

Another example is legal concepts; e.g. American law traditionally held that a landowner did not only control his land but also everything above it, to “an indefinite extent, upwards”. Upon the invention of the airplane, this raised the question: could landowners forbid airplanes from flying over their land, or was the ownership of the land limited to some specific height, above which the landowners had no control?

Eventually, the law was altered so that landowners couldn't forbid airplanes from flying over their land. Intuitively, one might think that this decision was made because the redefined concept did not substantially weaken the position of landowners, while allowing for entirely new possibilities for travel. In that case, we can think that our concept for landownership existed for the purpose of some vaguely-defined task (enabling the things that are commonly associated with owning land); when technology developed in a way that the existing concept started interfering with another task we value (fast travel), the concept came to be redefined so as to enable both tasks most efficiently.

This seemed to suggest an interplay between concepts and values; our values are to some extent defined in terms of our concepts, but our values and the tools that we have available for furthering our values also affect how we define our concepts. This line of thought led me to think that that interaction must be rooted in what was evolutionarily beneficial:

... evolution selects for agents which best maximize their fitness, while agents cannot directly optimize for their own fitness as they are unaware of it. Agents can however have a reward function that rewards behaviors which increase the fitness of the agents. The optimal reward function is one which maximizes (in expectation) the fitness of any agents having it. Holding the intelligence of the agents constant, the closer an agent’s reward function is to the optimal reward function, the higher their fitness will be. Evolution should thus be expected to select for reward functions that are closest to the optimal reward function. In other words, organisms should be expected to receive rewards for carrying out tasks which have been evolutionarily adaptive in the past. [...]

We should expect an evolutionarily successful organism to develop concepts that abstract over situations that are similar with regards to receiving a reward from the optimal reward function. Suppose that a certain action in state s1 gives the organism a reward, and that there are also states s2–s5 in which taking some specific action causes the organism to end up in s1. Then we should expect the organism to develop a common concept for being in the states s2–s5, and we should expect that concept to be “more similar” to the concept of being in state s1 than to the concept of being in some state that was many actions away.

In other words, we have some set of innate values that our brain is trying to optimize for; if concepts are task-specific, then this suggests that the kinds of concepts that will be natural to us are those which are beneficial for achieving our innate values given our current (social, physical and technological) environment. E.g. for a child, the concepts of "a child" and "an adult" will seem very natural, because there are quite a few things that an adult can do for furthering or hindering the child's goals that fellow children can't do. (And a specific subset of all adults named "mom and dad" is typically even more relevant for a particular child than any other adults are, making this an even more natural concept.)

That in turn seems to suggest that in order to see what concepts will be natural for humans, we need to look at fields such as psychology and neuroscience in order to figure out what our innate values are and how the interplay of innate and acquired values develops over time. I've had some hope that some of my later work on the structure and functioning of the mind would be relevant for that purpose.

On the role of values: values clearly do play some role in determining which abstractions we use. An alien who observes Earth but does not care about anything on Earth's surface will likely not have a concept of trees, any more than an alien which has not observed Earth at all. Indifference has a similar effect to lack of data.

However, I expect that the space of abstractions is (approximately) discrete. A mind may use the tree-concept, or not use the tree-concept, but there is no natural abstraction arbitrarily-close-to-tree-but-not-the-same-as-tree. There is no continuum of tree-like abstractions.

So, under this model, values play a role in determining which abstractions we end up choosing, from the discrete set of available abstractions. But they do not play any role in determining the set of abstractions available. For AI/alignment purposes, this is all we need: as long as the set of natural abstractions is discrete and value-independent, and human concepts are drawn from that set, we can precisely define human concepts without a detailed model of human values.

Also, a mostly-unrelated note on the airplane example: when we're trying to "define" a concept by drawing a bounding box in some space (in this case, a literal bounding box in physical space), it is almost always the case that the bounding box will not actually correspond to the natural abstraction. This is basically the same idea as the cluster structure of thingspace and rubes vs bleggs. (Indeed, Bayesian clustering is directly interpretable as abstraction discovery: the cluster-statistics are the abstract summaries, and they induce conditional independence between the points in each cluster.) So I would interpret the airplane example (and most similar examples in the legal system) not as a change in a natural concept, but rather as humans being bad at formally defining their natural concepts, and needing to update their definitions as new situations crop up. The definitions are not the natural concepts; they're proxies.

I'm really excited about this project. I think that in general, there are many interesting convergence-related phenomena of cognition and rational action which seem wholly underexplored (see also instrumental convergence, convergent evolution, universality of features in the Circuits agenda (see also adversarial transferability), etc...).

My one note of unease is that an abstraction thermometer seems highly dual-use; if successful, this project could accelerate AI timelines. But that doesn't mean it isn't worth doing.

Re: dual use, I do have some thoughts on exactly what sort of capabilities would potentially come out of this.

The really interesting possibility is that we end up able to precisely specify high-level human concepts - a real-life language of the birds. The specifications would correctly capture what-we-actually-mean, so they wouldn't be prone to goodhart. That would mean, for instance, being able to formally specify "strawberry on a plate" in a non-goodhartable way, so an AI optimizing for a strawberry on a plate would actually produce a strawberry on a plate. Of course, that does not mean that an AI optimizing for that specification would be safe - it would actually produce a strawberry on a plate, but it would still be perfectly happy to take over the world and knock over various vases in the process.

Of course just generally improving the performance of black-box ML is another possibility, but I don't think this sort of research is likely to induce a step-change in that department; it would just be another incremental improvement. However, if alignment is a bottleneck to extracting economic value from black-box ML systems, then this is the sort of research which would potentially relax that bottleneck without actually solving the full alignment problem. In other words, it would potentially make it easier to produce economically-useful ML systems in the short term, using techniques which lead to AGI disasters in the long term.

Curated. This is a pretty compelling research line and seems to me like it has the potential to help us a great deal in understanding how to interface with, understand, and align machine intelligence systems. It's also the compilation of a bunch of good writing+work from you that I'd like to celebrate, and it's something of a mission statement for the ongoing work.

I generally love all the images and like the way it adds a bunch of prior ideas together.

This project looks great! I especially like the focus on a more experimental kind of research, while still focused and informed on the specific concepts you want to investigate.

If you need some feedback on this work, don't hesitate to send me a message. ;)

A decent introduction to the natural abstraction hypothesis, and how testing it might be attempted. A very worthy project, but it isn't that easy to follow for beginners, nor does it provide a good understanding of how the testing might work in detail. What might constitute a success, and what might constitute a failure, of this testing? A decent introduction, but only an introduction, and it should have been part of a sequence or a longer post.

I'm curious if you'd looked at this followup (also nominated for review this year) http://lesswrong.com/posts/dNzhdiFE398KcGDc9/testing-the-natural-abstraction-hypothesis-project-update

I have looked at it, but ignored it when commenting on this post, which should stand on its own (or as part of a sequence).

Here's the review, though it's not very detailed (the post explains why):

Planned summary for the Alignment Newsletter:

We’ve previously seen some discussion about <@abstraction@>(@Public Static: What is Abstraction?@), and some [claims](https://www.lesswrong.com/posts/wopE4nT28ausKGKwt/classification-of-ai-alignment-research-deconfusion-good?commentId=cKNrWxfxRgENS2EKX) that there are “natural” abstractions, or that AI systems will <@tend@>(@Chris Olah’s views on AGI safety@) to <@learn@>(@Conversation with Rohin Shah@) increasingly human-like abstractions (at least up to a point). To make this more crisp, given a system, let’s consider the information (abstraction) of the system that is relevant for predicting parts of the world that are “far away”. Then, the **natural abstraction hypothesis** states that:

1. This information is much lower-dimensional than the system itself.

2. These low-dimensional summaries are exactly the high-level abstract objects/concepts typically used by humans.

3. These abstractions are “natural”, that is, a wide variety of cognitive architectures will learn to use approximately the same concepts to reason about the world.

For example, to predict the effect of a gas in a larger system, you typically just need to know its temperature, pressure, and volume, rather than the exact positions and velocities of each molecule of the gas. The natural abstraction hypothesis predicts that many cognitive architectures would all converge to using these concepts to reason about gases.

If the natural abstraction hypothesis were true, it could make AI alignment dramatically simpler, as our AI systems would learn to use approximately the same concepts as us, which can help us both to “aim” our AI systems at the right goal, and to peer into our AI systems to figure out what exactly they are doing. So, this new project aims to test whether the natural abstraction hypothesis is true.

The first two claims will likely be tested empirically. We can build low-level simulations of interesting systems, and then compute what summary is useful for predicting its effects on “far away” things. We can then ask how low-dimensional that summary is (to test (1)), and whether it corresponds to human concepts (to test (2)).

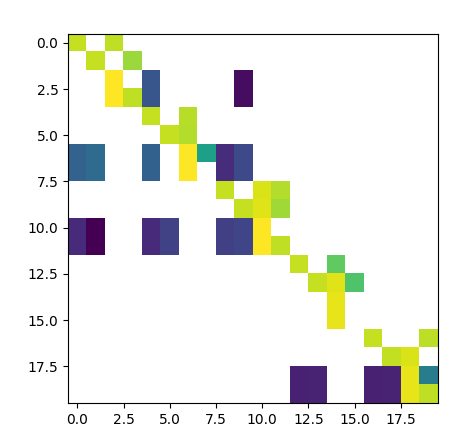

A [followup post](https://www.alignmentforum.org/posts/f6oWbqxEwktfPrKJw/computing-natural-abstractions-linear-approximation) illustrates this in the case of a linear-Gaussian Bayesian network with randomly chosen graph structure. In this case, we take two regions of 110 nodes each that are far apart, and operationalize the relevant information between the two as the covariance matrix between the two regions. It turns out that this covariance matrix has about 3-10 “dimensions” (depending on exactly how you count), supporting claim (1). (And in fact, if you now compare to another neighborhood, two of the three “dimensions” remain the same!) Unfortunately, this doesn’t give much evidence about (2) since humans don’t have good concepts for parts of linear-Gaussian Bayesian networks with randomly chosen graph structure.
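To make the operationalization concrete, here is a small sketch of the same kind of computation: build a linear-Gaussian network, take the cross-covariance between two separated regions, and count its non-negligible singular values. It is not the followup post's code. The sizes, weights, and threshold are hypothetical, and the graph is banded (each node's parents lie among the few preceding nodes) rather than randomly structured, so here low rank is guaranteed by construction: any `window` consecutive nodes between the regions screen one off from the other.

```python
import numpy as np

rng = np.random.default_rng(0)
n, window = 60, 5   # hypothetical sizes, far smaller than the followup post's setup

# Structural equations: x_i = sum_j A[i, j] * x_j + unit Gaussian noise, with j < i.
A = np.zeros((n, n))
for i in range(1, n):
    parents = np.arange(max(0, i - window), i)
    A[i, parents] = rng.normal(scale=0.4, size=len(parents))

# x = (I - A)^{-1} noise, so Cov(x) = (I - A)^{-1} (I - A)^{-T}.
M = np.linalg.inv(np.eye(n) - A)
cov = M @ M.T

region_1 = np.arange(0, 20)
region_2 = np.arange(40, 60)
cross_cov = cov[np.ix_(region_1, region_2)]   # information shared between the regions

s = np.linalg.svd(cross_cov, compute_uv=False)
effective_rank = int(np.sum(s > 1e-8 * s[0]))
print("leading singular values:", s[:8])
print("effective rank:", effective_rank)
```

The interesting empirical question in the followup is whether something similar holds when the graph is not built to have a small separator, which this sketch does not address.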

While (3) can also be tested empirically through simulation, we would hope that we can also prove theorems that state that nearly all cognitive architectures from some class of models would learn the same concepts in some appropriate types of environments.

To quote the author, “the holy grail of the project would be a system which provably learns all learnable abstractions in a fairly general class of environments, and represents those abstractions in a legible way. In other words: it would be a standardized tool for measuring abstractions. Stick it in some environment, and it finds the abstractions in that environment and presents a standard representation of them.”

Planned opinion:

The notion of “natural abstractions” seems quite important to me. There are at least some weak versions of the hypothesis that seem obviously true: for example, if you ask GPT-3 some new type of question it has never seen before, you can predict pretty confidently that it is still going to respond with real words rather than a string of random characters. This is effectively because you expect that GPT-3 has learned the “natural abstraction” of the words used in English and that it uses this natural abstraction to drive its output (leaving aside the cases where it must produce output in some other language).

The version of the natural abstraction hypothesis investigated here seems a lot stronger and I’m excited to see how the project turns out. I expect the author will post several short updates over time; I probably won’t cover each of these individually and so if you want to follow it in real time I recommend following it on the Alignment Forum.

Reading this after Steve Byrnes' posts on neuroscience gives a potentially unfortunate view on this.

The general impression is that a lot of our general understanding of the world is carried in the neocortex, which is running a consistent statistical algorithm, and the fact that humans converge on similar abstractions about the world could be explained by the statistical regularities of the world as discovered by this system. At the same time, the other parts of the brain have a huge variety of structures and have functions which are the products of evolution at a much more precise level, and the brain is directly exposed to, and working in response to, this higher level of complexity. Of course, it doesn't mean these systems can't be reliably compressed, and they presumably have structure of their own, but it may be very complex and not be discoverable without high definition, and so progress on values wouldn't follow easily from progress in understanding world-modelling abstractions.

This would suggest that successes in reliably measuring abstractions would be of greater use to general capability and world modelling than to understanding human values. It would also potentially give some scientific backing to the impression from introspection and philosophy that the core concepts of human values are particularly difficult concepts to point at.

I guess one lesson would be to try and put a focus on this case where at least part of the complexity of the goal of a system is in a system directly in contact with the cognitive system rather than observed at a distance.

Also interested in helping on this - if there's modelling you'd want to outsource.

Here's one fairly-standalone project which I probably won't get to soon. It would be a fair bit of work, but also potentially very impressive in terms of both showing off technical skills and producing cool results.

Short somewhat-oversimplified version: take a finite-element model of some realistic objects. Backpropagate to compute the jacobian of final state variables with respect to initial state variables. Take a singular value decomposition of the jacobian. Hypothesis: the singular vectors will roughly map to human-recognizable high-level objects in the simulation (i.e. the nonzero elements of any given singular vector should be the positions and momenta of each of the finite elements comprising one object).

Longer version: conceptually, we imagine that there's some small independent Gaussian noise in each of the variables defining the initial conditions of the simulation (i.e. positions and momenta of each finite element). Assuming the dynamics are such that the uncertainty remains small throughout the simulation - i.e. the system is not chaotic - our uncertainty in the final positions is then also Gaussian, found by multiplying the initial distribution by the jacobian matrix. The hypothesis that information-at-a-distance (in this case "distance" = later time) is low-dimensional then basically says that the final distribution (and therefore the jacobian) is approximately low-rank.
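Spelling that step out as a worked equation, under the assumptions already stated (small independent Gaussian noise, non-chaotic dynamics). The symbols $f$, $J$, $\bar{x}_0$ and $\sigma$ are my own notation for the simulation map, its jacobian, the nominal initial state and the noise scale:

$$
\begin{aligned}
x_0 &\sim \mathcal{N}(\bar{x}_0,\ \sigma^2 I), \qquad x_T = f(x_0) \approx f(\bar{x}_0) + J\,(x_0 - \bar{x}_0), \qquad J = \left.\frac{\partial x_T}{\partial x_0}\right|_{\bar{x}_0} \\
x_T &\sim \mathcal{N}\!\left(f(\bar{x}_0),\ \sigma^2 J J^\top\right) \quad \text{(to first order)} \\
J &= U S V^\top \ \Rightarrow\ \sigma^2 J J^\top = \sigma^2\, U S^2 U^\top
\end{aligned}
$$

So the final covariance is approximately low-rank exactly when only a few singular values of $J$ are non-negligible, and the corresponding columns of $V$ are the directions in the initial state that the final state still carries information about.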

In order for this to both work and be interesting, there are some constraints on both the system and on how the simulation is set up. First, "not chaotic" is a pretty big limitation. Second, we want the things-simulated to not just be pure rigid-body objects, since in that case it's pretty obvious that the method will work and it's not particularly interesting. Two potentially-interesting cases to try:

- Simulation of an elastic object with multiple human-recognizable components, with substantial local damping to avoid small-scale chaos. Cloth or jello or a sticky hand or something along those lines could work well.

- Simulation of waves. Again, probably want at least some damping. Full-blown fluid dynamics could maybe be viable in a non-turbulent regime, although it would have to be parameterized right - i.e. Eulerian coordinates rather than Lagrangian - so I'm not sure how well it would play with APIC simulations and the like.

If you wanted to produce a really cool visual result, then I'd recommend setting up the simulation in Houdini, then attempting to make it play well with backpropagation. That would be a whole project in itself, but if viable the results would be very flashy.

Important implementation note: you'd probably want to avoid explicitly calculating the jacobian. Code it as a linear operator - i.e. a function which takes in a vector, and returns the product of the jacobian times that vector - and then use a sparse SVD method to find the largest singular values and corresponding singular vectors. (Unless you know how to work efficiently with jacobian matrices without doing that, but that's a pretty unusual thing to know.)

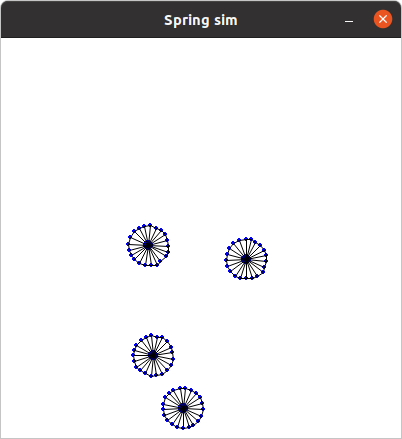

Been a while, but I thought the idea was interesting and had a go at implementing it. Houdini was too much for my laptop, let alone my programming skills, but I found a simple particle simulation in pygame which shows the basics; you can see it below.

Planned next step is to work on the run-time speed (even this took a couple of minutes to run; calculating the frame-to-frame Jacobian is a pain, probably more than necessary) and then add some utilities for creating larger, densely connected objects. Will write up as a fuller post once done.

Curious if you've got any other uses for a set-up like this.

Nice!

A couple notes:

- Make sure to check that the values in the jacobian aren't exploding - i.e. there are no values like 1e30 or 1e200 or anything like that. Exponentially large values in the jacobian probably mean the system is chaotic.

- If you want to avoid explicitly computing the jacobian, write a method which takes in a (constant) vector $u$ and uses backpropagation to return $\nabla_{x_0} (x_t \cdot u)$, where $x_0$ is the initial state and $x_t$ is the state at time $t$. This is the same as the time-0-to-time-t jacobian dotted with $u$, but it operates on size-n vectors rather than n-by-n jacobian matrices, so should be a lot faster. Then just wrap that method in a LinearOperator (or the equivalent in your favorite numerical library), and you'll be able to pass it directly to an SVD method.
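
A rough sketch of the matrix-free approach in the second note, under several assumptions: the simulation is a toy damped chain with slightly nonlinear springs (not the pygame simulation from this thread), the reverse pass is written by hand as a stand-in for a real autodiff library's backpropagation, and all names (`rollout`, `jvp`, `vjp`, `weighted_laplacian`) are made up for illustration. SciPy's `svds` needs both the jacobian-times-vector product and its transpose, so both passes are supplied to `LinearOperator`.

```python
import numpy as np
from scipy.sparse.linalg import LinearOperator, svds

K, C, DT = 10.0, 2.0, 0.05   # stiffness, damping, time step (arbitrary toy values)

def weighted_laplacian(v, w):
    """Return D^T (w * D v), where D takes differences between neighbouring masses."""
    return -np.diff(w * np.diff(v), prepend=0.0, append=0.0)

def rollout(x0, steps):
    """Simulate the chain; also record the per-step spring stiffness g'(stretch),
    which is all the linearised passes below need from the trajectory."""
    n = x0.size // 2
    q, p = x0[:n].copy(), x0[n:].copy()
    stiff = []
    for _ in range(steps):
        d = np.diff(q)                                  # spring stretches
        stiff.append(K * (1.0 + 3.0 * d**2))            # g'(d) for g(d) = K (d + d^3)
        force = np.diff(K * (d + d**3), prepend=0.0, append=0.0)
        p = p + DT * (force - C * weighted_laplacian(p, 1.0))
        q = q + DT * p
    return np.concatenate([q, p]), stiff

def jvp(stiff, v):
    """Forward pass: jacobian times v, pushing a perturbation through every step."""
    n = v.size // 2
    dq, dp = v[:n].copy(), v[n:].copy()
    for g1 in stiff:
        dp = dp - DT * (weighted_laplacian(dq, g1) + C * weighted_laplacian(dp, 1.0))
        dq = dq + DT * dp
    return np.concatenate([dq, dp])

def vjp(stiff, u):
    """Reverse pass (hand-written backpropagation): gradient of (x_t . u) w.r.t. x_0."""
    n = u.size // 2
    aq, ap = u[:n].copy(), u[n:].copy()
    for g1 in reversed(stiff):
        b = ap + DT * aq                                # transpose of q_next = q + DT * p_next
        aq = aq - DT * weighted_laplacian(b, g1)
        ap = b - DT * C * weighted_laplacian(b, 1.0)
    return np.concatenate([aq, ap])

rng = np.random.default_rng(0)
n_masses, steps = 8, 200
x0 = 0.1 * rng.normal(size=2 * n_masses)
x_final, stiff = rollout(x0, steps)

N = x0.size
J_op = LinearOperator(
    (N, N),
    matvec=lambda v: jvp(stiff, np.ravel(v)),
    rmatvec=lambda u: vjp(stiff, np.ravel(u)),
    dtype=float,
)
_, s_top, vt = svds(J_op, k=2)            # never forms the N-by-N jacobian
order = np.argsort(s_top)[::-1]           # sort largest first
print("largest singular values:", s_top[order])
```

Each product costs one pass over the stored trajectory, so the work scales with the number of singular values requested rather than with the size of the full jacobian. A cheap sanity check on a hand-written reverse pass is that `u @ jvp(stiff, v)` and `vjp(stiff, u) @ v` agree for random `u` and `v`.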

In terms of other uses... you could e.g. put some "sensors" and "actuators" in the simulation, then train some controller to control the simulated system, and see whether the data structures learned by the controller correspond to singular vectors of the jacobian. That could make for an interesting set of experiments, looking at different sensor/actuator setups and different controller architectures/training schemes to see which ones do/don't end up using the singular-value structure of the system.

Another little update: speed issue solved for now by adding SymPy's Fortran wrappers to the derivative calculations - calculating the SVD isn't (yet?) the bottleneck. Can now quickly get results from 1,000+ step simulations of 100s of particles.

Unfortunately, even for the pretty stable configuration below, the values are indeed exploding. I need to go back through the program and double-check the logic, but I don't think it should be chaotic; if anything, I would expect the values to hit zero.

It might be that there's some kind of quasi-chaotic behaviour where the residual motion of the particles is impossibly sensitive to the initial conditions, even as the macro state is very stable, with a nicely defined derivative wrt initial conditions. Not yet sure how to deal with this.

If the wheels are bouncing off each other, then that could be chaotic in the same way as billiard balls. But at least macroscopically, there's a crapton of damping in that simulation, so I find it more likely that the chaos is microscopic. But also my intuition agrees with yours, this system doesn't seem like it should be chaotic...
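
One possible way to tell exponential blow-up from a bug, sketched under assumptions: follow a tiny perturbation through the simulation, renormalise it every step so nothing overflows, and average the log growth. That gives an estimate of the largest Lyapunov exponent per step; a clearly positive value suggests chaos, a negative one suggests perturbations are being damped out. The two systems here are stand-ins, not the wheel simulation above: a single damped spring, and the logistic map with r = 4 as a textbook chaotic control. The function name `largest_lyapunov` and the finite-difference step are illustrative choices.

```python
import numpy as np

def largest_lyapunov(step, x0, n_steps=2000, eps=1e-8, seed=0):
    """Estimate the largest Lyapunov exponent (per step) of the map x -> step(x)
    by following a small perturbation and renormalising it at every step."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    v = rng.normal(size=x.shape)
    v /= np.linalg.norm(v)
    log_growth = 0.0
    for _ in range(n_steps):
        x_next = step(x)
        # Finite-difference jacobian-vector product; autodiff could replace this.
        v = (step(x + eps * v) - x_next) / eps
        growth = np.linalg.norm(v)
        log_growth += np.log(growth)
        v /= growth                       # renormalise so values never explode
        x = x_next
    return log_growth / n_steps

def damped_spring(x, k=10.0, c=2.0, dt=0.05):
    """Semi-implicit Euler step for one damped spring, x = [position, momentum]."""
    q, p = x
    p = p + dt * (-k * q - c * p)
    return np.array([q + dt * p, p])

def logistic(x, r=4.0):
    """Logistic map, used only as a known-chaotic control."""
    return r * x * (1.0 - x)

lam_damped = largest_lyapunov(damped_spring, [1.0, 0.0])
lam_chaotic = largest_lyapunov(logistic, [0.2])
print("damped spring:", lam_damped)
print("logistic map: ", lam_chaotic)
```

Running the same estimate on the particle simulation, separately for perturbations of the macroscopic and microscopic degrees of freedom, might help show whether the exploding values come from microscopic chaos or from something else.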

Thoughts on when models will or won't use edge cases? For example, if you made an electronic circuit using evolutionary algorithms in a high fidelity simulation, I would expect it to take advantage of V = IR being wrong in edge cases.

In other words, how much of the work do you expect to be in inducing models to play nice with abstraction?

ETA: abstractions are sometimes wrong in stable (or stabilizable) states, so you can't always lean on chaos washing it out

When we have a good understanding of abstraction, it should also be straightforward to recognize when a distribution shift violates the abstraction. In particular, insofar as abstractions are basically deterministic constraints, we can see when the constraint is violated. And as long as we can detect it, it should be straightforward (though not necessarily easy) to handle it.
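
As a very small illustration of "seeing when the constraint is violated", here is a sketch using the V = IR example from the question above. The function name, the tolerance, and the measurement values are all invented for illustration; a real check would need a tolerance tied to the measurement noise.

```python
import numpy as np

def constraint_violations(voltage, current, resistance, rel_tol=0.05):
    """Flag samples where the abstraction V = I * R fails to hold within rel_tol."""
    voltage = np.asarray(voltage, dtype=float)
    current = np.asarray(current, dtype=float)
    residual = voltage - current * resistance
    scale = np.maximum(np.abs(voltage), 1e-12)   # avoid dividing by zero
    return np.abs(residual) / scale > rel_tol

# Made-up readings for a nominal 100-ohm resistor; the last one is far off the line.
flags = constraint_violations(voltage=[10.0, 20.2, 45.0],
                              current=[0.1, 0.2, 0.3],
                              resistance=100.0)
print(flags.tolist())
```

Only the last reading is flagged here: its residual is a third of the measured voltage, while the other two are within one percent.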

One generalization I am also interested in is to learn not merely abstract objects within a big model, but entire self-contained abstract levels of description, together with actions and state transitions that move you between abstract states. E.g. not merely detecting that "the grocery store" is a sealed box ripe for abstraction, but that "go to the grocery store" is a valid action within a simplified world-model with nice properties.

This might be significantly more challenging to say something interesting about, because it depends not just on the world but on how the agent interacts with the world.

Nice! From my perspective this would be pretty exciting because, if natural abstractions exist, it solves at least some of the inference problem I view as being at the root of solving alignment, i.e. how do you know that the AI really understands you/humans and isn't misunderstanding you/humans in some way that looks like understanding from the outside but isn't? Although I phrased this in terms of reified experiences (noemata/qualia as a generalization of axia), abstractions are essentially the same thing in more familiar language. So I'm quite excited about the possibility that we may be able to prove something about the noemata/qualia/axia of minds other than our own, beyond simply taking for granted that other minds share some commonality with ours (which works well for thinking about other humans up to a point, but quickly runs into problems of assuming too much even before you start thinking about beings other than humans).

The natural abstraction hypothesis says that

If true, the natural abstraction hypothesis would dramatically simplify AI and AI alignment in particular. It would mean that a wide variety of cognitive architectures will reliably learn approximately-the-same concepts as humans use, and that these concepts can be precisely and unambiguously specified.

Ultimately, the natural abstraction hypothesis is an empirical claim, and will need to be tested empirically. At this point, however, we lack even the tools required to test it. This post is an intro to a project to build those tools and, ultimately, test the natural abstraction hypothesis in the real world.

Background & Motivation

One of the major conceptual challenges of designing human-aligned AI is the fact that human values are a function of humans’ latent variables: humans care about abstract objects/concepts like trees, cars, or other humans, not about low-level quantum world-states directly. This leads to conceptual problems of defining “what we want” in physical, reductive terms. More generally, it leads to conceptual problems in translating between human concepts and concepts learned by other systems - e.g. ML systems or biological systems.

If true, the natural abstraction hypothesis provides a framework for translating between high-level human concepts, low-level physical systems, and high-level concepts used by non-human systems.

The foundations of the framework have been sketched out in previous posts.

What is Abstraction? introduces the mathematical formulation of the framework and provides several examples. Briefly: the high-dimensional internal details of far-apart subsystems are independent given their low-dimensional “abstract” summaries. For instance, the Lumped Circuit Abstraction abstracts away all the details of molecule positions or wire shapes in an electronic circuit, and represents the circuit as components each summarized by some low-dimensional behavior - like V = IR for a resistor. This works because the low-level molecular motions in a resistor are independent of the low-level molecular motions in some far-off part of the circuit, given the high-level summary. All the rest of the low-level information is “wiped out” by noise in low-level variables “in between” the far-apart components.

Chaos Induces Abstractions explains one major reason why we expect low-level details to be independent (given high-level summaries) for typical physical systems. If I have a bunch of balls bouncing around perfectly elastically in a box, then the total energy, number of balls, and volume of the box are all conserved, but chaos wipes out all other information about the exact positions and velocities of the balls. My “high-level summary” is then the energy, number of balls, and volume of the box; all other low-level information is wiped out by chaos. This is exactly the abstraction behind the ideal gas law. More generally, given any uncertainty in initial conditions - even very small uncertainty - mathematical chaos “amplifies” that uncertainty until we are maximally uncertain about the system state… except for information which is perfectly conserved. In most dynamical systems, some information is conserved, and the rest is wiped out by chaos.

Anatomy of a Gear: What makes a good “gear” in a gears-level model? A physical gear is a very high-dimensional object, consisting of huge numbers of atoms rattling around. But for purposes of predicting the behavior of the gearbox, we need only a one-dimensional summary of all that motion: the rotation angle of the gear. More generally, a good “gear” is a subsystem which abstracts well - i.e. a subsystem for which a low-dimensional summary can contain all the information relevant to predicting far-away parts of the system.

Science in a High Dimensional World: Imagine that we are early scientists, investigating the mechanics of a sled sliding down a slope. The number of variables which could conceivably influence the sled’s speed is vast: angle of the hill, weight and shape and material of the sled, blessings or curses laid upon the sled or the hill, the weather, wetness, phase of the moon, latitude and/or longitude and/or altitude, astrological motions of stars and planets, etc. Yet in practice, just a relatively-low-dimensional handful of variables suffices - maybe a dozen. A consistent sled-speed can be achieved while controlling only a dozen variables, out of literally billions. And this generalizes: across every domain of science, we find that controlling just a relatively-small handful of variables is sufficient to reliably predict the system’s behavior. Figuring out which variables is, in some sense, the central project of science. This is the natural abstraction hypothesis in action: across the sciences, we find that low-dimensional summaries of high-dimensional systems suffice for broad classes of “far-away” predictions, like the speed of a sled.

The Problem and The Plan

The natural abstraction hypothesis can be split into three sub-claims, two empirical, one mathematical:

Abstractability and human-compatibility are empirical claims, which ultimately need to be tested in the real world. Convergence is a more mathematical claim, i.e. it will ideally involve proving theorems, though empirical investigation will likely still be needed to figure out exactly which theorems.

These three claims suggest three different kinds of experiment to start off:

The first two experiments both require computing information-relevant-at-a-distance in a reasonably-complex simulated environment. The “naive”, brute-force method for this would not be tractable; it would require evaluating high-dimensional integrals over “noise” variables. So the first step will be to find practical algorithms for directly computing abstractions from low-level simulated environments. These don’t need to be fully-general or arbitrarily-precise (at least not initially), but they need to be general enough to apply to a reasonable variety of realistic systems.

Once we have algorithms capable of directly computing the abstractions in a system, training a few cognitive models against that system is an obvious next step. This raises another algorithmic problem: how do we efficiently check whether a cognitive system has learned particular abstractions? Again, this doesn’t need to be fully general or arbitrarily precise. It just needs to be general enough to use as a tool for the next step.

The next step is where things get interesting. Ideally, we want general theorems telling us which cognitive systems will learn which abstractions in which environments. As of right now, I’m not even sure exactly what those theorems should say. (There are some promising directions, like modular variation of goals, but the details are still pretty sparse and it’s not obvious whether these are the right directions.) This is the perfect use-case for a feedback loop between empirical and theoretical work:

Along the way, it should be possible to prove theorems on what abstractions will be learned in at least some cases. Experiments should then probe cases not handled by those theorems, enabling more general models and theorems, eventually leading to a unified theory.

(Of course, in practice this will probably also involve a larger feedback loop, in which lessons learned training models also inform new algorithms for computing abstractions in more-general environments, and for identifying abstractions learned by the models.)

The end result of this process, the holy grail of the project, would be a system which provably learns all learnable abstractions in a fairly general class of environments, and represents those abstractions in a legible way. In other words: it would be a standardized tool for measuring abstractions. Stick it in some environment, and it finds the abstractions in that environment and presents a standard representation of them. Like a thermometer for abstractions.

Then, the ultimate test of the natural abstraction hypothesis would just be a matter of pointing the abstraction-thermometer at the real world, and seeing if it spits out human-recognizable abstract objects/concepts.

Summary

The natural abstraction hypothesis suggests that most high-level abstract concepts used by humans are “natural”: the physical world contains subsystems for which all the information relevant “far away” can be contained in a (relatively) low-dimensional summary. These subsystems are exactly the high-level “objects” or “categories” or “concepts” we recognize in the world. If true, this hypothesis would dramatically simplify the problem of human-aligned AI. It would imply that a wide range of architectures will reliably learn similar high-level concepts from the physical world, that those high-level concepts are exactly the objects/categories/concepts which humans care about (i.e. inputs to human values), and that we can precisely specify those concepts.

The natural abstraction hypothesis is mainly an empirical claim, which needs to be tested in the real world.

My main plan for testing this involves a feedback loop between:

The holy grail of the project would be an “abstraction thermometer”: an algorithm capable of reliably identifying the abstractions in an environment and representing them in a standard format. In other words, a tool for measuring abstractions. This tool could then be used to measure abstractions in the real world, in order to test the natural abstraction hypothesis.

I plan to spend at least the next six months working on this project. Funding for the project has been supplied by the Long-Term Future Fund.