Understanding the Lottery Ticket Hypothesis

12Daniel Kokotajlo

5Alex Flint

5johnswentworth

2Daniel Kokotajlo

4johnswentworth


Thanks for this, I found it helpful!

If you are still interested in reading and thinking more about this topic, I would love to hear your thoughts on the papers below, in particular the "multi-prize LTH" one, which seems to contradict some of the claims you made above. Also, I'd love to hear whether LTH-ish hypotheses apply to RNNs and, more generally, to the sort of neural networks used to make, say, AlphaStar.

https://arxiv.org/abs/2103.09377

"In this paper, we propose (and prove) a stronger Multi-Prize Lottery Ticket Hypothesis:

A sufficiently over-parameterized neural network with random weights contains several subnetworks (winning tickets) that (a) have comparable accuracy to a dense target network with learned weights (prize 1), (b) do not require any further training to achieve prize 1 (prize 2), and (c) is robust to extreme forms of quantization (i.e., binary weights and/or activation) (prize 3)."

https://arxiv.org/abs/2006.12156

"An even stronger conjecture has been proven recently: Every sufficiently overparameterized network contains a subnetwork that, at random initialization, but without training, achieves comparable accuracy to the trained large network."

https://arxiv.org/abs/2006.07990

The strong lottery ticket hypothesis (LTH) postulates that one can approximate any target neural network by only pruning the weights of a sufficiently over-parameterized random network. A recent work by Malach et al. establishes the first theoretical analysis for the strong LTH: one can provably approximate a neural network of width d and depth l, by pruning a random one that is a factor O(d^4 l^2) wider and twice as deep. This polynomial over-parameterization requirement is at odds with recent experimental research that achieves good approximation with networks that are a small factor wider than the target. In this work, we close the gap and offer an exponential improvement to the over-parameterization requirement for the existence of lottery tickets. We show that any target network of width d and depth l can be approximated by pruning a random network that is a factor O(log(dl)) wider and twice as deep.

https://arxiv.org/abs/2103.16547

"Based on these results, we articulate the Elastic Lottery Ticket Hypothesis (E-LTH): by mindfully replicating (or dropping) and re-ordering layers for one network, its corresponding winning ticket could be stretched (or squeezed) into a subnetwork for another deeper (or shallower) network from the same family, whose performance is nearly as competitive as the latter's winning ticket directly found by IMP."

EDIT: Some more from my stash:

https://arxiv.org/abs/2010.11354

Sparse neural networks have generated substantial interest recently because they can be more efficient in learning and inference, without any significant drop in performance. The "lottery ticket hypothesis" has showed the existence of such sparse subnetworks at initialization. Given a fully-connected initialized architecture, our aim is to find such "winning ticket" networks, without any training data. We first show the advantages of forming input-output paths, over pruning individual connections, to avoid bottlenecks in gradient propagation. Then, we show that Paths with Higher Edge-Weights (PHEW) at initialization have higher loss gradient magnitude, resulting in more efficient training. Selecting such paths can be performed without any data.

http://proceedings.mlr.press/v119/frankle20a.html

We study whether a neural network optimizes to the same, linearly connected minimum under different samples of SGD noise (e.g., random data order and augmentation). We find that standard vision models become stable to SGD noise in this way early in training. From then on, the outcome of optimization is determined to a linearly connected region. We use this technique to study iterative magnitude pruning (IMP), the procedure used by work on the lottery ticket hypothesis to identify subnetworks that could have trained in isolation to full accuracy. We find that these subnetworks only reach full accuracy when they are stable to SGD noise, which either occurs at initialization for small-scale settings (MNIST) or early in training for large-scale settings (ResNet-50 and Inception-v3 on ImageNet).

https://mathai-iclr.github.io/papers/papers/MATHAI_29_paper.pdf

“In some situations we show that neural networks learn through a process of “grokking” a pattern in the data, improving generalization performance from random chance level to perfect generalization, and that this improvement in generalization can happen well past the point of overfitting.”

I don’t yet understand this proposal. In what way do we decompose this parameter tangent space into "lottery tickets"? Are the lottery tickets the cross product of subnetworks and points in the parameter tangent space? The subnetworks alone? If the latter then how does this differ from the original lottery ticket hypothesis?

The tangent space version is meant to be a fairly large departure from the original LTH; subnetworks are no longer particularly relevant at all.

We can imagine a space of generalized-lottery-ticket-hypotheses, in which the common theme is that we have some space of models chosen at initialization (the "tickets"), and SGD mostly "chooses a winning ticket" - i.e. upweights one of those models and downweights others, as opposed to constructing a model incrementally. The key idea is that it's a selection process rather than a construction process - in particular, that's the property mainly relevant to inner alignment concerns.

This space of generalized-LTH models would include, for instance, Solomonoff induction: the space of "tickets" is the space of programs; SI upweights programs which perfectly predict the data and downweights the rest. Inner agency problems are then particularly tricky because deceptive optimizers are "there from the start". It's not like e.g. biological evolution, where it took a long time for anything very agenty to be built up.
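To make the "selection rather than construction" picture concrete, here is a toy sketch of the Solomonoff-style case. It is only an illustration under loud assumptions: real Solomonoff induction ranges over all programs and is uncomputable, whereas the four-entry `TICKETS` pool, the made-up description lengths, and the `select` helper below are all hypothetical. The point is just that every candidate exists before any data arrives, and the data only reweights them.

```python
from fractions import Fraction

# Hypothetical ticket pool: (name, description length in bits, program).
# The lengths are invented for illustration; they only set the prior 2**-length.
TICKETS = [
    ("zero", 1, lambda x: 0),
    ("identity", 2, lambda x: x),
    ("double", 2, lambda x: 2 * x),
    ("square", 3, lambda x: x * x),
]


def select(tickets, data):
    """Return normalized weights, keeping only tickets that fit all of `data`."""
    weights = {}
    for name, length, program in tickets:
        prior = Fraction(1, 2 ** length)
        fits = all(program(x) == y for x, y in data)
        # Tickets that mispredict any data point are downweighted to zero.
        weights[name] = prior if fits else Fraction(0)
    total = sum(weights.values())
    if total == 0:
        raise ValueError("no ticket fits the data")
    return {name: w / total for name, w in weights.items()}


# Two data points leave two surviving tickets; a third singles out one.
posterior_two_points = select(TICKETS, [(0, 0), (1, 1)])
posterior_three_points = select(TICKETS, [(0, 0), (1, 1), (2, 4)])
```

Nothing new is ever built here: the "square" program that ends up with all the weight was in the pool from the start, which is the property that makes inner agency problems tricky in this framing.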

In the tangent space version of the hypothesis, the "tickets" are exactly the models within the tangent space of the full NN model at initialization: each value of the parameters yields a corresponding function-of-the-NN-inputs (via linear approximation w.r.t. the parameters), and each such function is a "ticket".
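A minimal sketch of what a tangent-space "ticket" could look like, assuming a two-parameter toy "network" in place of a real NN. The names `f`, `grad_f`, `ticket`, `theta0`, and `delta` are all hypothetical and not from the post; `theta0` stands for the parameters at initialization and `delta` for a displacement away from them.

```python
import math


def f(x, theta):
    """Toy 'network' with two parameters, theta = (w, a)."""
    w, a = theta
    return a * math.tanh(w * x)


def grad_f(x, theta):
    """Analytic gradient of f with respect to (w, a)."""
    w, a = theta
    t = math.tanh(w * x)
    return (a * x * (1.0 - t * t), t)


def ticket(theta0, delta):
    """Return the function of the inputs picked out by `delta`.

    This is the linear approximation of f around theta0, evaluated at
    theta0 + delta, so it is linear in delta but not in x.
    """
    def g(x):
        gw, ga = grad_f(x, theta0)
        return f(x, theta0) + delta[0] * gw + delta[1] * ga
    return g


theta0 = (0.5, 1.0)
base = ticket(theta0, (0.0, 0.0))       # the network at initialization
nearby = ticket(theta0, (0.01, -0.02))  # close to the true network nearby
far = ticket(theta0, (5.0, 5.0))        # still a ticket, but a poor approximation
```

Every choice of `delta` gives one ticket, so the ticket space is fixed as soon as `theta0` is drawn. For small `delta` the ticket closely matches the real network at `theta0 + delta`; for large `delta` it keeps extrapolating linearly and the two diverge.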

I am interested in making this understood by more people, so please let me know if some part of it does not make sense to you.

I confess I don't really understand what a tangent space is, even after reading the wiki article on the subject. It sounds like it's something like this: Take a particular neural network. Consider the "space" of possible neural networks that are extremely similar to it, i.e. they have all the same parameters but the weights are slightly different, for some definition of "slightly." That's the tangent space. Is this correct? What am I missing?

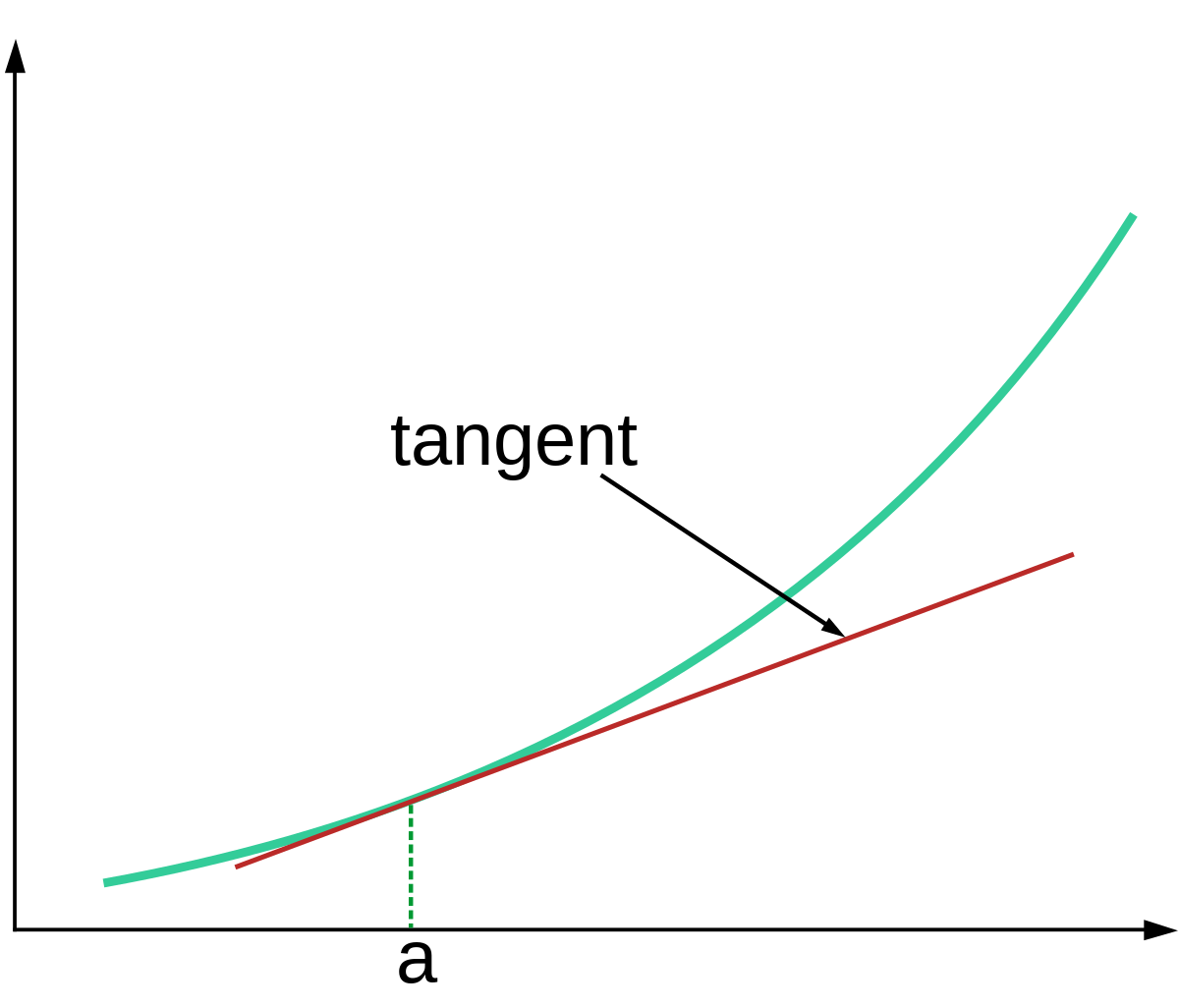

Picture a linear approximation, like this:

The tangent space at the point of tangency is that whole line labelled "tangent".

The main difference between the tangent space and the space of neural-networks-for-which-the-weights-are-very-close is that the tangent space extrapolates the linear approximation indefinitely; it's not just limited to the region near the original point. (In practice, though, that difference does not actually matter much, at least for the problem at hand - we do stay close to the original point.)

The reason we want to talk about "the tangent space" is that it lets us precisely state things like e.g. Newton's method in terms of search: Newton's method finds a point at which f(x) is approximately 0 by finding a point where the tangent space hits zero (i.e. where the line in the picture above hits the x-axis). So, the tangent space effectively specifies the "search objective" or "optimization objective" for one step of Newton's method.
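As a quick illustration of that "search over the tangent space" reading, here is one step of Newton's method in one dimension. The function `f(x) = x^2 - 2` and the starting point `x0 = 1.5` are arbitrary choices for the example, and the helper names are hypothetical.

```python
def f(x):
    """Example function whose positive root is sqrt(2)."""
    return x * x - 2.0


def f_prime(x):
    """Derivative of f."""
    return 2.0 * x


def tangent_at(x0):
    """Linear approximation of f around x0, extended over the whole line."""
    def t(x):
        return f(x0) + f_prime(x0) * (x - x0)
    return t


def newton_step(x0):
    """Return the point where the tangent at x0 crosses zero."""
    return x0 - f(x0) / f_prime(x0)


x0 = 1.5
x1 = newton_step(x0)
tangent = tangent_at(x0)
```

Here `tangent(x1)` is zero up to floating-point error, while `f(x1)` is only approximately zero: the step solves the problem exactly for the tangent line and approximately for `f` itself.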

In the NTK/GP model, neural net training is functionally identical to one step of Newton's method (though it's Newton's method in many dimensions, rather than one dimension).
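A rough sketch of what "one Newton step in many dimensions" might look like, under strong simplifying assumptions: a toy model with two parameters and exactly two training points, so the linear system is square and can be solved by Cramer's rule. In an actual NTK-regime network there are far more parameters than data points and the system is underdetermined, which this sketch does not capture. All names and data values are hypothetical.

```python
import math


def f(x, theta):
    """Toy 'network' with two parameters, theta = (w, a)."""
    w, a = theta
    return a * math.tanh(w * x)


def grad_f(x, theta):
    """Analytic gradient of f with respect to (w, a)."""
    w, a = theta
    t = math.tanh(w * x)
    return (a * x * (1.0 - t * t), t)


def newton_step(theta0, data):
    """Solve J @ delta = residual for two data points via Cramer's rule."""
    (x1, y1), (x2, y2) = data
    j11, j12 = grad_f(x1, theta0)
    j21, j22 = grad_f(x2, theta0)
    r1 = y1 - f(x1, theta0)
    r2 = y2 - f(x2, theta0)
    det = j11 * j22 - j12 * j21
    if abs(det) < 1e-12:
        raise ValueError("Jacobian is singular at theta0")
    return ((r1 * j22 - j12 * r2) / det, (j11 * r2 - r1 * j21) / det)


def tangent_model(theta0, delta):
    """Linearized model around theta0, evaluated at displacement delta."""
    def g(x):
        gw, ga = grad_f(x, theta0)
        return f(x, theta0) + delta[0] * gw + delta[1] * ga
    return g


theta0 = (0.5, 1.0)
data = [(0.5, 0.30), (1.5, 0.70)]  # made-up training points
delta = newton_step(theta0, data)
model = tangent_model(theta0, delta)
```

The resulting `model` fits both training points exactly, because the step picks the point in the tangent space where the linearized residual hits zero. Whether the true network at `theta0 + delta` also fits depends on how good the linear approximation is over that distance.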
