Does The Information-Throughput-Maximizing Input Distribution To A Sparsely-Connected Channel Satisfy An Undirected Graphical Model?

[EDIT: Never mind, proved it.]

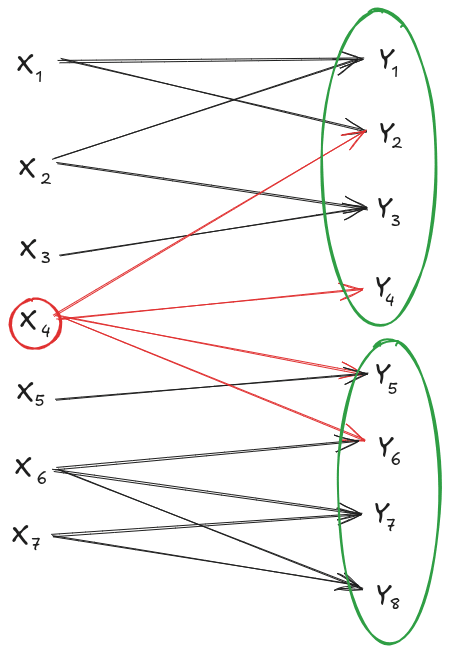

Suppose I have an information channel $X \to Y$. The X components and the Y components are sparsely connected, i.e. the typical $Y_i$ is downstream of only a few parent X-components $X_{pa(i)}$. (Mathematically, that means the channel factors as $P[Y|X] = \prod_i P[Y_i \mid X_{pa(i)}]$.)
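A minimal sketch of that factorization, assuming a made-up toy channel. The parent sets, the XOR-plus-noise kernel and the 0.1 flip probability are all hypothetical. Each output component only looks at its own parents, and the full channel is the product of the per-component kernels.

```python
import itertools

# Hypothetical toy example: three binary X-components, two binary Y-components.
# PARENTS[i] lists the indices of X_{pa(i)}, the parents of Y_i.
PARENTS = {0: (0, 1), 1: (1, 2)}
FLIP = 0.1  # assumed noise level, shared by every output component

def component_kernel(y_i, x_parents):
    """P[Y_i = y_i | X_pa(i)]: XOR of the parent bits, flipped with probability FLIP."""
    clean = 0
    for bit in x_parents:
        clean ^= bit
    return 1 - FLIP if y_i == clean else FLIP

def channel(y, x):
    """P[Y = y | X = x] as the product over i of P[Y_i | X_pa(i)]."""
    prob = 1.0
    for i, pa in PARENTS.items():
        prob *= component_kernel(y[i], tuple(x[j] for j in pa))
    return prob

# Sanity check: for every input x, the output distribution sums to one.
for x in itertools.product((0, 1), repeat=3):
    total = sum(channel(y, x) for y in itertools.product((0, 1), repeat=2))
    assert abs(total - 1.0) < 1e-12
```

Because `Y_0` never reads `X_2` and `Y_1` never reads `X_0`, holding `X_1` fixed splits this toy channel into two independent pieces.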

Now, suppose I split the Y components into two sets, and hold constant any X-components which are upstream of components in both sets. Conditional on those (relatively few) X-components, our channel splits into two independent channels.

E.g. in the image above, if I hold constant the X-components shared between the two halves of the graph, then I have two independent channels, one for each half.

Now, the information-throughput-maximizing input distribution to a pair of independent channels is just the product of the throughput-maximizing distributions for the two channels individually. In other words: for independent channels, we have independent throughput-maximizing distributions.
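This claim is easy to check numerically with the Blahut-Arimoto algorithm, the standard iterative method for computing channel capacity. Below is a minimal sketch in plain Python. It assumes two hypothetical binary channels (a binary symmetric channel and a Z-channel), each given as a matrix `W[x][y] = P[Y=y|X=x]`, and the helper names are illustrative. It compares the capacity of the combined channel with the throughput achieved by the product of the two individually optimal inputs.

```python
import math

def mutual_information(p, W):
    """MI(X;Y) in bits for input distribution p[x] and channel matrix W[x][y]."""
    nx, ny = len(W), len(W[0])
    q = [sum(p[x] * W[x][y] for x in range(nx)) for y in range(ny)]
    return sum(p[x] * W[x][y] * math.log2(W[x][y] / q[y])
               for x in range(nx) for y in range(ny)
               if p[x] > 0 and W[x][y] > 0)

def blahut_arimoto(W, iters=500):
    """Approximate a throughput-maximizing input distribution for channel W."""
    nx, ny = len(W), len(W[0])
    p = [1.0 / nx] * nx
    for _ in range(iters):
        q = [sum(p[x] * W[x][y] for x in range(nx)) for y in range(ny)]
        # D(W(.|x) || q) in nats, for each input symbol x
        d = [sum(W[x][y] * math.log(W[x][y] / q[y])
                 for y in range(ny) if W[x][y] > 0)
             for x in range(nx)]
        weights = [p[x] * math.exp(d[x]) for x in range(nx)]
        total = sum(weights)
        p = [w / total for w in weights]
    return p

def product_channel(W1, W2):
    """Independent channels side by side; input index x1*n2 + x2, output y1*m2 + y2."""
    return [[a * b for a in row1 for b in row2] for row1 in W1 for row2 in W2]

def product_dist(p1, p2):
    """Independent input P[X1]P[X2], in the same index order as product_channel."""
    return [a * b for a in p1 for b in p2]

BSC = [[0.9, 0.1], [0.1, 0.9]]  # hypothetical binary symmetric channel, flip prob 0.1
Z = [[1.0, 0.0], [0.5, 0.5]]    # hypothetical Z-channel: a sent 1 is read as 0 half the time
p1, p2 = blahut_arimoto(BSC), blahut_arimoto(Z)
joint = product_channel(BSC, Z)
c_joint = mutual_information(blahut_arimoto(joint), joint)
c_product = mutual_information(product_dist(p1, p2), joint)
```

`c_joint` and `c_product` should agree to floating-point precision, and both should match the sum of the two individual capacities. Starting from a uniform (hence product) input, each Blahut-Arimoto update on the combined channel multiplies in a factor that splits into an X1 part and an X2 part. The iterates therefore stay product distributions throughout.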

So it seems like a natural guess that something similar would happen in our sparse setup.

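Conjecture: The throughput-maximizing distribution for our sparse setup is independent conditional on overlapping X-components. E.g. in the example above, we'd guess that, under the throughput-maximizing distribution, the X-components feeding only one half of the Y-components are independent of those feeding only the other half, conditional on the shared X-components.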

If that's true in general, then we can apply it to any Markov blanket in our sparse channel setup, so it implies that the throughput-maximizing $P[X]$ factors across any set of X-components which forms a Markov blanket splitting the original channel graph. In other words: it would imply that the throughput-maximizing distribution satisfies an undirected graphical model, in which two X-components share an edge if-and-only-if they share a child Y-component.
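If the conjecture holds, the implied conditional independencies can be read off a graph built purely from the channel's parent sets. Connect two X-components whenever they share a child. Then any set of X-components that separates two groups in this graph should render those groups independent under the throughput-maximizing distribution. Below is a small sketch of that bookkeeping, using a hypothetical four-input chain. It only builds the graph and checks separation; it says nothing about the distribution itself.

```python
from collections import deque
from itertools import combinations

def interaction_graph(parents):
    """Undirected edges between X-components that share at least one child Y-component."""
    edges = set()
    for pa in parents.values():
        for a, b in combinations(sorted(set(pa)), 2):
            edges.add((a, b))
    return edges

def separates(edges, blanket, side_a, side_b):
    """True if every path from side_a to side_b passes through the blanket."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    seen = set(side_a)
    frontier = deque(side_a)
    while frontier:
        node = frontier.popleft()
        if node in side_b:
            return False
        for nbr in adjacency.get(node, ()):
            if nbr not in seen and nbr not in blanket:
                seen.add(nbr)
                frontier.append(nbr)
    return True

# Hypothetical chain-shaped sparse channel: each Y reads two neighboring X's.
PARENTS = {"Y1": ("X1", "X2"), "Y2": ("X2", "X3"), "Y3": ("X3", "X4")}
EDGES = interaction_graph(PARENTS)
```

Here `{"X2"}` is a Markov blanket separating `X1` from `{X3, X4}`, so the conjecture would predict that `X1` is independent of `(X3, X4)` given `X2` under the optimal input.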

It's not obvious that this works mathematically; information throughput maximization (i.e. the optimization problem by which one computes channel capacity) involves some annoying coupling between terms. But it makes sense intuitively. I've spent less than an hour trying to prove it and mostly found it mildly annoying though not clearly intractable. Seems like the sort of thing where either (a) someone has already proved it, or (b) someone more intimately familiar with channel capacity problems than I am could easily prove it.

So: anybody know of an existing proof (or know that the conjecture is false), or find this conjecture easy to prove themselves?

I was a relatively late adopter of the smartphone. I was still using a flip phone until around 2015 or 2016-ish. From 2013 to early 2015, I worked as a data scientist at a startup whose product was a mobile social media app; my determination to avoid smartphones became something of a joke there.

Even back then, developers talked about UI design for smartphones in terms of attention. Like, the core "advantages" of the smartphone were the "ability to present timely information" (i.e. interrupt/distract you) and always being on hand. Also it was small, so anything too complicated to fit in like three words and one icon was not going to fly.

... and, like, man, that sure did not make me want to buy a smartphone. Even today, I view my phone as a demon which will try to suck away my attention if I let my guard down. I have zero social media apps on there, and no app ever gets push notif permissions when not open except vanilla phone calls and SMS.

People would sometimes say something like "John, you should really get a smartphone, you'll fall behind without one" and my gut response was roughly "No, I'm staying in place, and the rest of you are moving backwards".

And in hindsight, boy howdy do I endorse that attitude! Past John's gut was right on the money with that one.

I notice that I have an extremely similar gut feeling about LLMs today. Like, when I look at the people who are relatively early adopters, making relatively heavy use of LLMs... I do not feel like I'll fall behind if I don't leverage them more. I feel like the people using them a lot are mostly moving backwards, and I'm staying in place.

Yeah, this is an active topic for us right now.

For most day-to-day abstraction, full strong redundancy isn't the right condition to use; as you say, I can't tell a dog by looking at each individual atom. But full weak redundancy goes too far in the opposite direction: I can drop a lot more than just one atom and still recognize the dog.

Intuitively, it feels like there should be some condition like "if you can recognize a dog from most random subsets of the atoms of size 2% of the total, then P[X|latent] factors according to <some nice form> to within <some error which gets better as the 2% number gets smaller>". But the naive operationalization doesn't work, because we can use xor tricks to encode a bunch of information in such a way that any 2% of (some large set of variables) can recover the info, but any one variable (or set of size less than 2%) has exactly zero info. The catch is that such a construction requires the individual variables to be absolutely enormous, like exponentially large amounts of entropy. So maybe if we assume some reasonable bound on the size of the variables, then the desired claim could be recovered.
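One minimal instance of such an xor trick (an assumption about what the text has in mind) is an all-of-n split rather than a 2% threshold. Draw n−1 uniform random bits, then set the last share so that all n shares XOR to the secret. Any n−1 shares are then exactly independent of the secret, while all n together recover it. The sketch below checks this by exact enumeration, with n = 5 chosen arbitrarily. It only illustrates the zero-information property. Threshold schemes that work for any k-of-n, such as Shamir's secret sharing, are not shown.

```python
import itertools
import math
from collections import Counter

def xor_shares(secret, randomness):
    """Split one secret bit into len(randomness) + 1 shares whose XOR is the secret."""
    last = secret
    for r in randomness:
        last ^= r
    return tuple(randomness) + (last,)

def info_about_secret(n, subset):
    """Exact MI (bits) between a uniform secret bit and the shares listed in subset."""
    joint = Counter()
    for secret in (0, 1):
        for randomness in itertools.product((0, 1), repeat=n - 1):
            shares = xor_shares(secret, randomness)
            joint[(secret, tuple(shares[i] for i in subset))] += 1
    total = sum(joint.values())
    p_secret, p_view = Counter(), Counter()
    for (secret, view), count in joint.items():
        p_secret[secret] += count
        p_view[view] += count
    return sum(count / total * math.log2(count * total / (p_secret[s] * p_view[v]))
               for (s, v), count in joint.items())

N = 5  # arbitrary number of shares for the illustration
# Information carried by every proper subset of the shares (sizes 0 .. N-1).
partial = [info_about_secret(N, subset)
           for k in range(N) for subset in itertools.combinations(range(N), k)]
full = info_about_secret(N, tuple(range(N)))
```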

That I roughly agree with. As in the comment at the top of this chain: "there will be market pressure to make AI good at conceptual work, because that's a necessary component of normal science". Likewise, insofar as e.g. heavy RL doesn't make the AI effective at conceptual work, I expect it to also not make the AI all that effective at normal science.

That does still leave a big question mark regarding what methods will eventually make AIs good at such work. Insofar as very different methods are required, we should also expect other surprises along the way, and expect the AIs involved to look generally different from e.g. LLMs, which means that many other parts of our mental pictures are also likely to fail to generalize.

> You might hope for elicitation efficiency, as in, you heavily RL the model to produce useful considerations and hope that your optimization is good enough that it covers everything well enough.

"Hope" is indeed a good general-purpose term for plans which rely on an unverifiable assumption in order to work.

(Also I'd note that as of today, heavy RL tends to in fact produce pretty bad results, in exactly the ways one would expect in theory, and in particular in ways which one would expect to get worse rather than better as capabilities increase. RL is not something we can apply in more than small amounts before the system starts to game the reward signal.)

That was an excellent summary of how things seem to normally work in the sciences, and explains it better than I would have. Kudos.

Perhaps a better summary of my discomfort here: suppose you train some AI to output verifiable conceptual insights. How can I verify that this AI is not missing lots of things all the time? In other words, how do I verify that the training worked as intended?

> Rather, conceptual research as I'm understanding it is defined by the tools available for evaluating the research in question.[1] In particular, as I'm understanding it, cases where neither available empirical tests nor formal methods help much.

Agreed.

> But if some AI presented us with this claim, the question is whether we could evaluate it via some kind of empirical test, which it sounds like we plausibly could.

Disagreed.

My guess is that you have, in the back of your mind here, ye olde "generation vs verification" discussion. And in particular, so long as we can empirically/mathematically verify some piece of conceptual progress once it's presented to us, we can incentivize the AI to produce interesting new pieces of verifiable conceptual progress.

That's an argument which works in the high capability regime, if we're willing to assume that any relevant progress is verifiable, since we can assume that the highly capable AI will in fact find whatever pieces of verifiable conceptual progress are available. Whether it works in the relatively-low capability regime of human-ish-level automated alignment research and realistic amounts of RL is... rather more dubious. Also, the mechanics of designing a suitable feedback signal would be nontrivial to get right in practice.

Getting more concrete: if we're imagining an LLM-like automated researcher, then a question I'd consider extremely important is: "Is this model/analysis/paper/etc. missing key conceptual pieces?". If the system says "yes, here's something it's missing" then I can (usually) verify that. But if it says "nope, looks good"... then I can't verify that the paper is in fact not missing anything.

And in fact that's a problem I already do run into with LLMs sometimes: I'll present a model, and the thing will be all sycophantic and not mention that the model has some key conceptual confusion about something. Of course you might hope that some more-clever training objective will avoid that kind of sycophancy and instead incentivize Good Science, but that's definitely not an already-solved problem, and I sure do feel suspicious of an assumption that that problem will be easy.

I think you are importantly missing something about how load-bearing "conceptual" progress is in normal science.

An example I ran into just last week: I wanted to know how long it takes various small molecule neurotransmitters to be reabsorbed after their release. And I found some very different numbers:

- Some sources offhandedly claimed ~1ms. AFAICT, this number comes from measuring the time taken for the neurotransmitter to clear from the synaptic cleft, and then assuming that the neurotransmitter clears mainly via reabsorption (an assumption which I emphasize because IIUC it's wrong; I think the ~1ms number is actually measuring time for the neurotransmitter to diffuse out of the cleft).

- Other sources claimed ~10ms. These were based on <other methods>.

Now, I want to imagine for a moment a character named Emma the Empirical Fundamentalist, someone who eschews "conceptual" work entirely and only updates on Empirically Testable Questions. (For current purposes, mathematical provability can also be lumped in with empirical testability.) How would Emma respond to the two mutually-contradictory neurotransmitter reabsorption numbers above?

Well, first and foremost, Emma would not think "one of these isn't measuring what the authors think they're measuring". That is a quintessential "conceptual" thought. Instead, Emma might suspect that one of the measurements was simply wrong, but repeating the two experiments will quickly rule out that hypothesis. She might also think to try many other measurement methods, and find that most of them agree with the ~10ms number, but the ~1ms measurement will still be a true repeatable phenomenon. Eventually she will likely settle on roughly "the real world is messy and complex, so sometimes measurements depend on context and method in surprising ways, and this is one of those times". (Indeed, that sort of thing is a very common refrain in tons of biological science.)

But in this case, the real explanation (I claim) is simply that one of the measurements was based on an incorrect assumption. I made the incorrect assumption obvious by highlighting it above, but in-the-wild the assumption would be implicit and unstated and nonobvious; it wouldn't even occur to the authors that they're making an assumption which could be wrong.

Discovering that sort of error is centrally in the wheelhouse of conceptual progress. And as this example illustrates, it's a very load-bearing piece of normal science.

(And indeed, I expect that at least some of your examples of normal-science-style empirical alignment research are cases where the authors are probably not measuring what they think they are measuring, though I don't know in advance which ones. Conceptual work is exactly what would be required to sort that out.)

(And to be clear, I don't think this example is the only or even most common "kind of way" in which conceptual work is load-bearing for normal science.)

Moving up the discussion stack: insofar as conceptual work is very load-bearing for normal science, how does that change the view articulated in the post? Well, first, it means that one cannot produce a good normal-science AI by primarily relying on empirical feedback (including mathematical feedback from proofs), unless one gets lucky and training on empirical feedback happens to also make the AI good at conceptual work. Second, there will be market pressure to make AI good at conceptual work, because that's a necessary component of normal science.

Proof

Specifically, we'll show that there exists an information-throughput-maximizing distribution which satisfies the undirected graphical model. We will not show that all optimal distributions satisfy it, because that's false in some trivial cases: e.g. if all the Y's are completely independent of X, then all distributions are optimal. We will also not show that all optimal distributions factor over the undirected graph, which is importantly different because of the $P[X] > 0$ caveat in the Hammersley-Clifford theorem.

First, we'll prove the (already known) fact that an independent distribution $P[X] = P[X_1]P[X_2]$ is optimal for a pair of independent channels ($X_1 \to Y_1$, $X_2 \to Y_2$); we'll prove it in a way which will play well with the proof of our more general theorem. Using standard information identities plus the factorization structure $Y_1 - X_1 - X_2 - Y_2$ (that's a Markov chain, not subtraction), we get

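$$
\begin{aligned}
MI(X;Y) &= MI(X;Y_1) + MI(X;Y_2 \mid Y_1) \\
&= MI(X;Y_1) + \big(MI(X;Y_2) - MI(Y_2;Y_1) + MI(Y_2;Y_1 \mid X)\big) \\
&= MI(X_1;Y_1) + MI(X_2;Y_2) - MI(Y_2;Y_1)
\end{aligned}
$$

The last step uses the Markov structure. $MI(Y_2;Y_1 \mid X) = 0$ because the two channels are independent given $X$. And $MI(X;Y_i) = MI(X_i;Y_i)$ because $Y_i$ depends on $X$ only through $X_i$.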
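Here is a quick numerical sanity check of this identity. It assumes two hypothetical binary channels (a binary symmetric channel for X1 → Y1 and a Z-channel for X2 → Y2) and a randomly drawn, generally correlated input distribution. All mutual informations are computed exactly from entropies of the joint table.

```python
import itertools
import math
import random

def entropy(dist):
    """Shannon entropy in bits of a dict {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def marginal(joint, idx):
    """Marginalize a joint dict {tuple: prob} onto the coordinates in idx."""
    out = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out

def mi(joint, a, b):
    """MI between coordinate groups a and b, in bits."""
    return (entropy(marginal(joint, a)) + entropy(marginal(joint, b))
            - entropy(marginal(joint, a + b)))

# Hypothetical channels: X1 -> Y1 is a binary symmetric channel, X2 -> Y2 a Z-channel.
W1 = [[0.9, 0.1], [0.1, 0.9]]
W2 = [[1.0, 0.0], [0.5, 0.5]]

def joint_xy(p_x):
    """Joint over coordinates (X1, X2, Y1, Y2) for an input dict p_x[(x1, x2)]."""
    return {(x1, x2, y1, y2): p_x[(x1, x2)] * W1[x1][y1] * W2[x2][y2]
            for x1, x2, y1, y2 in itertools.product((0, 1), repeat=4)}

random.seed(0)
raw = {x: random.random() for x in itertools.product((0, 1), repeat=2)}
total = sum(raw.values())
p_x = {x: v / total for x, v in raw.items()}  # arbitrary, generally correlated input

J = joint_xy(p_x)
X1, X2, Y1, Y2 = (0,), (1,), (2,), (3,)
lhs = mi(J, X1 + X2, Y1 + Y2)
rhs = mi(J, X1, Y1) + mi(J, X2, Y2) - mi(J, Y2, Y1)
```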

Now, suppose you hand me some supposedly-optimal distribution $P[X]$. From $P$, I construct a new distribution $Q[X] := P[X_1]P[X_2]$. Note that $MI(X_1;Y_1)$ and $MI(X_2;Y_2)$ are both the same under $Q$ as under $P$, since each depends only on the corresponding marginal $P[X_i]$ and the channel. Meanwhile, $MI(Y_2;Y_1)$ is zero under $Q$. So, because $MI(X;Y) = MI(X_1;Y_1) + MI(X_2;Y_2) - MI(Y_2;Y_1)$, $MI(X;Y)$ must be at least as large under $Q$ as under $P$. In short: given any distribution, I can construct another distribution with at least as high information throughput, under which $X_1$ and $X_2$ are independent.
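The construction of Q can be exercised numerically. Below is a minimal sketch, assuming two hypothetical binary channels (a binary symmetric channel and a Z-channel). It builds $Q[X] := P[X_1]P[X_2]$ from the marginals of 200 random input distributions P and records the change in throughput. The argument above predicts the change is never negative: it equals $MI(Y_2;Y_1)$ under P.

```python
import itertools
import math
import random

BITS = (0, 1)

def entropy(dist):
    """Shannon entropy in bits of a dict {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def marginal(joint, idx):
    """Marginalize a joint dict {tuple: prob} onto the coordinates in idx."""
    out = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out

def mi(joint, a, b):
    """MI between coordinate groups a and b, in bits."""
    return (entropy(marginal(joint, a)) + entropy(marginal(joint, b))
            - entropy(marginal(joint, a + b)))

W1 = [[0.9, 0.1], [0.1, 0.9]]  # hypothetical X1 -> Y1 channel
W2 = [[1.0, 0.0], [0.5, 0.5]]  # hypothetical X2 -> Y2 channel

def throughput(p_x):
    """MI(X;Y) for input dict p_x[(x1, x2)]; joint coordinates are (X1, X2, Y1, Y2)."""
    joint = {(x1, x2, y1, y2): p_x[(x1, x2)] * W1[x1][y1] * W2[x2][y2]
             for x1, x2, y1, y2 in itertools.product(BITS, repeat=4)}
    return mi(joint, (0, 1), (2, 3))

def decorrelate(p_x):
    """Q[X] := P[X1] P[X2], built from the marginals of P."""
    p1 = {a: sum(p_x[(a, b)] for b in BITS) for a in BITS}
    p2 = {b: sum(p_x[(a, b)] for a in BITS) for b in BITS}
    return {(a, b): p1[a] * p2[b] for a in BITS for b in BITS}

random.seed(1)
gains = []
for _ in range(200):
    # Cubing skews the random weights so that some inputs are strongly correlated.
    raw = {x: random.random() ** 3 for x in itertools.product(BITS, repeat=2)}
    total = sum(raw.values())
    p_x = {x: v / total for x, v in raw.items()}
    gains.append(throughput(decorrelate(p_x)) - throughput(p_x))
```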

Now let's tackle our more general theorem, reusing some of the machinery above.

I'll split $Y$ into $Y_1$ and $Y_2$, and split $X$ into $X_{1-2}$ (parents of $Y_1$ but not $Y_2$), $X_{2-1}$ (parents of $Y_2$ but not $Y_1$), and $X_{1\cap 2}$ (parents of both). Then

$$
MI(X;Y) = MI(X_{1\cap 2};Y) + MI(X_{1-2},X_{2-1};Y \mid X_{1\cap 2})
$$

In analogy to the case above, we consider distribution $P[X]$, and construct a new distribution $Q[X] := P[X_{1\cap 2}]\,P[X_{1-2} \mid X_{1\cap 2}]\,P[X_{2-1} \mid X_{1\cap 2}]$. Compared to $P$, $Q$ has the same value of $MI(X_{1\cap 2};Y)$. By exactly the same argument as the independent case, $MI(X_{1-2},X_{2-1};Y \mid X_{1\cap 2})$ cannot be any lower under $Q$; we just repeat the same argument with everything conditional on $X_{1\cap 2}$ throughout. So, given any distribution, I can construct another distribution with at least as high information throughput, under which $X_{1-2}$ and $X_{2-1}$ are independent given $X_{1\cap 2}$.
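Below is a numerical sketch of the "same argument, conditional on $X_{1\cap 2}$" step. It assumes a hypothetical three-bit sparse channel; the XOR and AND kernels and their noise levels are made up. It builds Q from random inputs P and checks two things. First, the conditional term $MI(X_{1-2},X_{2-1};Y \mid X_{1\cap 2})$ is never lower under Q. Second, the conditional version of the independent-case identity holds under P. It checks only that second term of the decomposition; the first term, $MI(X_{1\cap 2};Y)$, is not examined.

```python
import itertools
import math
import random

BITS = (0, 1)

def entropy(dist):
    """Shannon entropy in bits of a dict {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def marginal(joint, idx):
    """Marginalize a joint dict {tuple: prob} onto the coordinates in idx."""
    out = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out

def cond_mi(joint, a, b, c):
    """MI(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C), in bits."""
    def h(idx):
        return entropy(marginal(joint, idx))
    return h(a + c) + h(b + c) - h(a + b + c) - h(c)

# Coordinates: S = shared parents (X_{1 cap 2} in the text), A = X_{1-2}, B = X_{2-1}.
S, A, B, Y1, Y2 = (0,), (1,), (2,), (3,), (4,)

def joint_of(p_x):
    """Hypothetical sparse channel: Y1 reads (A, S), Y2 reads (B, S)."""
    def w1(y1, a, s):
        return 0.9 if y1 == (a ^ s) else 0.1
    def w2(y2, b, s):
        return 0.8 if y2 == (b & s) else 0.2
    return {(s, a, b, y1, y2): p_x[(s, a, b)] * w1(y1, a, s) * w2(y2, b, s)
            for s, a, b, y1, y2 in itertools.product(BITS, repeat=5)}

def split_given_shared(p_x):
    """Q[X] := P[S] P[A | S] P[B | S], i.e. P(s, a) P(s, b) / P(s)."""
    p_s = {s: sum(p_x[(s, a, b)] for a in BITS for b in BITS) for s in BITS}
    p_sa = {(s, a): sum(p_x[(s, a, b)] for b in BITS) for s in BITS for a in BITS}
    p_sb = {(s, b): sum(p_x[(s, a, b)] for a in BITS) for s in BITS for b in BITS}
    return {(s, a, b): (p_sa[(s, a)] * p_sb[(s, b)] / p_s[s] if p_s[s] > 0 else 0.0)
            for s, a, b in itertools.product(BITS, repeat=3)}

random.seed(2)
gains, residuals = [], []
for _ in range(200):
    raw = {x: random.random() ** 3 for x in itertools.product(BITS, repeat=3)}
    total = sum(raw.values())
    p = {x: v / total for x, v in raw.items()}
    jp, jq = joint_of(p), joint_of(split_given_shared(p))
    # Conditional term MI(A, B; Y | S): value under Q minus value under P.
    gains.append(cond_mi(jq, A + B, Y1 + Y2, S) - cond_mi(jp, A + B, Y1 + Y2, S))
    # Conditional analogue of the independent-channel identity, checked under P.
    residuals.append(cond_mi(jp, A + B, Y1 + Y2, S)
                     - (cond_mi(jp, A, Y1, S) + cond_mi(jp, B, Y2, S)
                        - cond_mi(jp, Y1, Y2, S)))
```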

Since this works for any Markov blanket $X_{1\cap 2}$, there exists an information-throughput-maximizing distribution which satisfies the desired undirected graphical model.