Epistemic Status

I've made many claims in these posts. All views are my own.

Confident (75%). The theorems on power-seeking only apply to optimal policies in fully observable environments, which isn't realistic for real-world agents. However, I think they're still informative. There are also strong intuitive arguments for power-seeking.

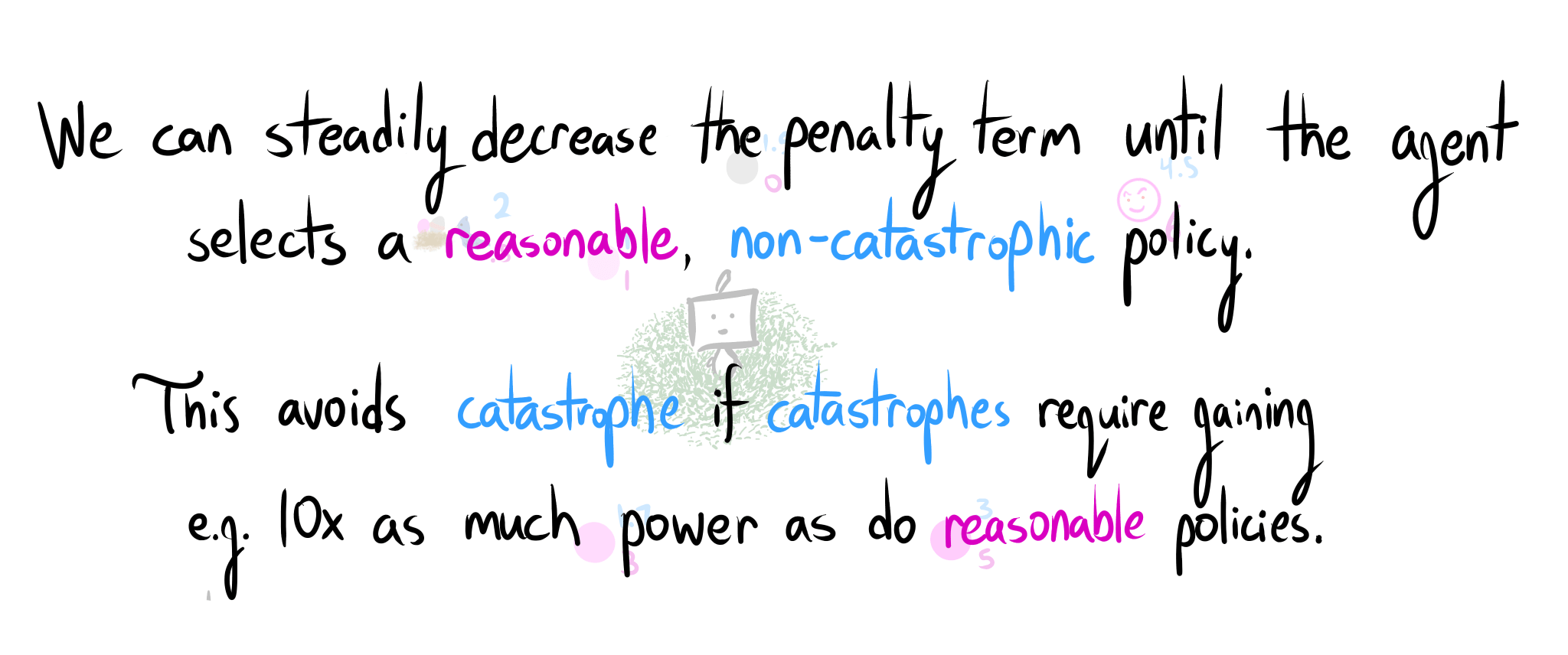

Fairly confident (70%). There seems to be a dichotomy between "catastrophe directly incentivized by goal" and "catastrophe indirectly incentivized by goal through power-seeking", although Vika provides intuitions in the other direction.

Acknowledgements

After ~700 hours of work over the course of ~9 months, the sequence is finally complete.

This work was made possible by the Center for Human-Compatible AI, the Berkeley Existential Risk Initiative, and the Long-Term Future Fund. Deep thanks to Rohin Shah, Abram Demski, Logan Smith, Evan Hubinger, TheMajor, Chase Denecke, Victoria Krakovna, Alper Dumanli, Cody Wild, Matthew Barnett, Daniel Blank, Sara Haxhia, Connor Flexman, Zack M. Davis, Jasmine Wang, Matthew Olson, Rob Bensinger, William Ellsworth, Davide Zagami, Ben Pace, and a million other people for giving feedback on this sequence.

Appendix: Easter Eggs

The big art pieces (and especially the last illustration in this post) were designed to convey a specific meaning, the interpretation of which I leave to the reader.

There are a few pop culture references that I think are obvious enough not to need pointing out, and a lot of smaller hidden playfulness that doesn't quite rise to the level of an "easter egg".

Reframing Impact

The bird's nest contains a literal easter egg.

The paperclip-Balrog drawing contains a Tengwar inscription which reads "one measure to bind them", with "measure" in impact-blue and "them" in utility-pink.

"Towards a New Impact Measure" was the title of the post in which AUP was introduced.

Attainable Utility Theory: Why Things Matter

This style of maze is from the video game Undertale.

Seeking Power is Instrumentally Convergent in MDPs

To seek power, Frank is trying to get at the Infinity Gauntlet.

The tale of Frank and the orange Pebblehoarder

Speaking of under-tales, a friendship has been blossoming right under our noses.

After the Pebblehoarders suffer the devastating transformation of all of their pebbles into obsidian blocks, Frank generously gives away his favorite pink marble as a makeshift pebble.

The title cuts to the middle of their adventures together, the Pebblehoarder showing its gratitude by helping Frank reach things high up.

This still at the midpoint of the sequence is from the final scene of The Hobbit: An Unexpected Journey, where the party is overlooking Erebor, the Lonely Mountain. They've made it through the Misty Mountains, only to find Smaug's abode looming in the distance.

And, at last, we find Frank and orange Pebblehoarder popping some of the champagne from Smaug's hoard.

Since Erebor isn't close to Gondor, we don't see Frank and the Pebblehoarder gazing at Ephel Dúath from Minas Tirith.