Possible root causes if we don't end up having a good long term future (i.e., realize most of the potential value of the universe), with illustrative examples:

- Technical incompetence

- We fail to correctly solve technical problems in AI alignment.

- We fail to build or become any kind of superintelligence.

- We fail to colonize the universe.

- Philosophical incompetence

- We fail to solve philosophical problems in AI alignment.

- We end up optimizing the universe for wrong values.

- Strategic incompetence

- It is not impossible to cooperate/coordinate, but we fail.

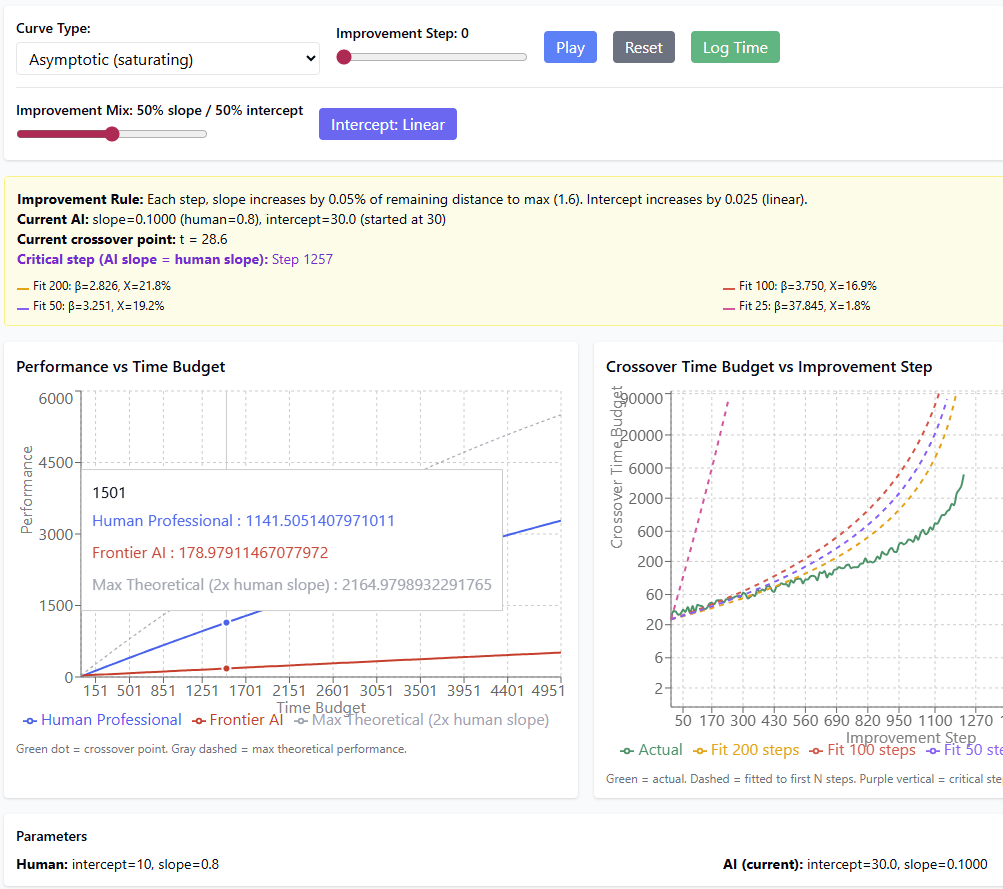

Diminishing returns in the NanoGPT speedrun:

To determine whether we're heading for a software intelligence explosion, one key variable is how much harder algorithmic improvement gets over time. Luckily someone made the NanoGPT speedrun, a repo where people try to minimize the amount of time on 8x H100s required to train GPT-2 124M down to 3.28 loss. The record has improved from 45 minutes in mid-2024 down to 1.92 minutes today, a 23.5x speedup. This does not give the whole picture-- the bulk of my uncertainty is in other variables-- but given this is exist...
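As a quick check on the arithmetic, here is a minimal sketch that recomputes the speedup from the two record times quoted above and expresses it as a number of halvings. The variable names are illustrative, and the result lands slightly below the quoted 23.5x, presumably because the quoted times are rounded.

```python
import math

# Record times quoted in the text (minutes on 8x H100s).
start_minutes = 45.0     # mid-2024 record
current_minutes = 1.92   # record "today"

# Overall speedup factor and the equivalent number of halvings of training time.
speedup = start_minutes / current_minutes
halvings = math.log2(speedup)

print(f"speedup: {speedup:.2f}x, halvings: {halvings:.2f}")
```

Tracking how much effort each successive halving took is one way to read diminishing returns off the record history, though the repo's actual timeline is not reproduced here.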

Cool, this clarifies things a good amount for me. Still have some confusion about how you are modeling things, but I feel less confused. Thank you!

The “when no one’s watching” feature

Can we find a “when no one’s watching” feature, or its opposite, “when someone’s watching”? This could be a useful and plausible precursor to deception.

With sufficiently powerful systems, this feature may be *very* hard to elicit, as by definition, finding the feature means someone’s watching and requires tricking / honeypotting the model to take it off its own modeling process of the input distribution.

This could be a precursor to deception. One way we could try to make deception harder for the model is to never d...

Two months ago I said I'd be creating a list of predictions about the future in honor of my baby daughter Artemis. Well, I've done it, in spreadsheet form. The prediction questions all have a theme: "Cyberpunk." I intend to make it a fun new year's activity to go through and make my guesses, and then every five years on her birthdays I'll dig up the spreadsheet and compare prediction to reality.

I hereby invite anybody who is interested to go in and add their own predictions to the spreadsheet. Also feel free to leave comments ...

LLMs (and probably most NNs) have lots of meaningful, interpretable linear feature directions. These can be found through various unsupervised methods (e.g. SAEs) and supervised methods (e.g. linear probes).

However, most human-interpretable features are not what I would call the model's true features.

If you find the true features, the network should look sparse and modular, up to noise factors. If you find the true network decomposition, then removing what's left over should improve performance, not make it worse.

Because the network has a limited number ...

I do expect some amount of superposition, i.e. the model is using almost orthogonal directions to encode more concepts than it has neurons. Depending on what you mean by "larger", this will result in a world model that is larger than the network. However, such an encoding will also result in noise. Superposition will necessarily lead to unwanted small-amplitude connections between uncorrelated concepts. Removing these should improve performance, and if it doesn't, it means that you did the decomposition wrong.
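To make the interference point concrete, here is a minimal sketch, assuming features are random unit vectors (a stand-in for illustration, not features from a trained network). It packs more directions than dimensions into a space and measures the overlaps between distinct pairs, which are small but not zero. The function names and the sizes are hypothetical.

```python
import math
import random

def random_unit_vector(dim, rng):
    """Sample a direction uniformly on the unit sphere in `dim` dimensions."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def interference_stats(num_features, dim, seed=0):
    """Return (mean, max) absolute dot product over all distinct feature pairs."""
    rng = random.Random(seed)
    feats = [random_unit_vector(dim, rng) for _ in range(num_features)]
    overlaps = []
    for i in range(num_features):
        for j in range(i + 1, num_features):
            dot = sum(a * b for a, b in zip(feats[i], feats[j]))
            overlaps.append(abs(dot))
    return sum(overlaps) / len(overlaps), max(overlaps)

# Assumed toy sizes: 200 "concepts" squeezed into 50 "neurons".
mean_overlap, max_overlap = interference_stats(num_features=200, dim=50)
print(f"mean |overlap| = {mean_overlap:.3f}, max |overlap| = {max_overlap:.3f}")
```

The nonzero overlaps play the role of the unwanted small-amplitude connections between unrelated concepts: reading one feature off its direction picks up a little of every other feature that happens to be active.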

Overconfidence from early transformative AIs is a neglected, tractable, and existential problem.

If early transformative AIs are overconfident, then they might build ASI/other dangerous technology or come up with new institutions that seem safe/good, but end up being disastrous.

This problem seems fairly neglected and not addressed by many existing agendas (i.e., the AI doesn't need to be intent-misaligned to be overconfident).[1]

Overconfidence also feels like a very "natural" trait for the AI to end up having relative to the pre-training prior, compa...

I do judge comments more harshly when they're phrased confidently—your tone is effectively raising the stakes on your content being correct and worth engaging with.

If I agreed with your position, I'd probably have written something like:

...I don't think this is an important source of risk. I think that basically all the AI x-risk comes from AIs that are smart enough that they'd notice their own overconfidence (maybe after some small number of experiences being overconfident) and then work out how to correct for it.

There are other epistemic problems that I thi

I propose a taxonomy of 5 possible worlds for multi-agent theory, inspired by Impagliazzo's 5 possible worlds of complexity theory (and also the Aaronson-Barak 5 worlds of AI):

- Nihiland: There is not even a coherent uni-agent theory, not to mention multi-agency. I find this world quite unlikely, but leave it here for the sake of completeness (and for the sake of the number 5). Closely related is antirealism about rationality, which I have criticized in the past. In this world it is not clear whether the alignment problem is well-posed at all.

- Discordia:

Someone on the EA forum asked why I've updated away from public outreach as a valuable strategy. My response:

I used to not actually believe in heavy-tailed impact. On some gut level I thought that early rationalists (and to a lesser extent EAs) had "gotten lucky" in being way more right than academic consensus about AI progress. I also implicitly believed that e.g. Thiel and Musk and so on kept getting lucky, because I didn't want to picture a world in which they were actually just skillful enough to keep succeeding (due to various psychological blockers)....

Chaitin was quite young when he (co-)invented AIT.

I quite appreciated Sam Bowman's recent Checklist: What Succeeding at AI Safety Will Involve. However, one bit stuck out:

...In Chapter 3, we may be dealing with systems that are capable enough to rapidly and decisively undermine our safety and security if they are misaligned. So, before the end of Chapter 2, we will need to have either fully, perfectly solved the core challenges of alignment, or else have fully, perfectly solved some related (and almost as difficult) goal like corrigibility that rules out a catastrophic loss of control. This work co

"we don't need to worry about Goodhart and misgeneralization of human values at extreme levels of optimization."

That isn't a conclusion I draw, though. I think you don't know how to parse what I'm saying as different from that rather extreme conclusion -- which I don't at all agree with? I feel concerned by that. I think you haven't been tracking my beliefs accurately if you think I'd come to this conclusion.

FWIW I agree with you on a), I don't know what you mean by b), and I agree with c) partially—meaningfully different, sure.

Anyways, when I talk abou...

I find it anthropologically fascinating how at this point neurips has become mostly a summoning ritual to bring all of the ML researchers to the same city at the same time.

nobody really goes to talks anymore - even the people in the hall are often just staring at their laptops or phones. the vast majority of posters are uninteresting, and the few good ones often have a huge crowd that makes it very difficult to ask the authors questions.

increasingly, the best parts of neurips are the parts outside of neurips proper. the various lunches, dinners, and ...

most of the time the person being recognized is not me

Error-correcting codes work by running some algorithm to decode potentially-corrupted data. But what if the algorithm might also have been corrupted? One approach to dealing with this is triple modular redundancy, in which three copies of the algorithm each do the computation and take the majority vote on what the output should be. But this still creates a single point of failure—the part where the majority voting is implemented. Maybe this is fine if the corruption is random, because the voting algorithm can constitute a very small proportion of the total...
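A minimal sketch of triple modular redundancy, assuming a toy `decode` function as a stand-in for the real decoding algorithm (all names here are hypothetical). It shows the vote masking one corrupted replica, and also shows the single point of failure: if the voter itself is corrupted, healthy replicas do not help.

```python
from collections import Counter

def majority_vote(outputs):
    """Return the value produced by a strict majority of replicas."""
    value, count = Counter(outputs).most_common(1)[0]
    if count * 2 <= len(outputs):
        raise ValueError("no strict majority among replica outputs")
    return value

def tmr(replicas, x, voter=majority_vote):
    """Run every replica on the same input and let the voter pick the output."""
    return voter([replica(x) for replica in replicas])

def decode(x):
    # Stand-in for the real computation (assumption for illustration).
    return x * 2

def corrupted_decode(x):
    # A replica whose algorithm has been corrupted.
    return x * 2 + 1

def corrupted_voter(outputs):
    # A corrupted voter ignores the replicas entirely.
    return -1

# One bad replica out of three is outvoted.
masked = tmr([decode, corrupted_decode, decode], 21)

# Three good replicas cannot compensate for a bad voter.
broken = tmr([decode, decode, decode], 21, voter=corrupted_voter)

print(masked, broken)
```

The voter is deliberately tiny compared with the replicas, which is why random corruption rarely hits it. An adversary choosing where to corrupt would target it first.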

But it feels like you'd need to demonstrate this with some construction that's actually adversarially robust, which seems difficult.

I agree it's kind of difficult.

Have you seen Nicholas Carlini's Game of Life series? It starts with logic gates and builds up to a microprocessor that factors 15 into 3 x 5.

Depending on the adversarial robustness model (e.g. every second the adversary can make 1 square behave the opposite of lawfully), it might be possible to make robust logic gates and circuits. In fact the existing circuits are a little robust already -...

The capability evaluations in the Opus 4.5 system card seem worrying. The provided evidence in the system card seems pretty weak (in terms of how much it supports Anthropic's claims). I plan to write more about this in the future; here are some of my more quickly written up thoughts.

[This comment is based on this X/twitter thread I wrote]

I ultimately basically agree with their judgments about the capability thresholds they discuss. (I think the AI is very likely below the relevant AI R&D threshold, the CBRN-4 threshold, and the cyber thresholds.) But, i...

Anthropic, GDM, and xAI say nothing about whether they train against Chain-of-Thought (CoT) while OpenAI claims they don't[1].

I think AI companies should be transparent about whether (and how) they train against CoT. While OpenAI is doing a better job at this than other companies, I think all of these companies should provide more information about this.

It's particularly striking that Anthropic says nothing about whether they train against CoT given their system card (for 4.5 Sonnet) is very thorough and includes a section on "Reasoning faithfulness" (kudo...

Looks like it isn't specified again in the Opus 4.5 System card despite Anthropic clarifying this for Haiku 4.5 and Sonnet 4.5. Hopefully this is just a mistake...

Thoughts On Evaluation Awareness in Claude Opus 4.5.

Context:

Anthropic released Claude Opus 4.5 earlier today (model card). Opus 4.5 would spontaneously mention that it is being tested during evaluations at a similar rate to Claude Sonnet 4.5, but lower than Haiku 4.5 (pg. 65).

Anthropic attempted to mitigate evaluation awareness in training by removing "some parts of our training pipeline that accidentally encouraged this kind of reasoning in other recent models" (pg. 65). The model card later mentioned that Sonnet 4.5 was trained on "prompts th...

When I made the claim "we should get verbalized eval awareness to zero," I meant it in the narrow context of "mitigating evaluation awareness during alignment evaluations through contrastive activation steering." I am not saying that we should restructure the training process to mitigate verbalized evaluation awareness.

So I don't think concerns about monitorability are very relevant here? Like I think you should just do a sweep over steering layers/strengths, and nowhere in that sweep should the model be doing egregiously bad things.

And I think it's reasona...

I'm renaming Infra-Bayesian Physicalism to Formal Computational Realism (FCR), since the latter name is much more in line with the nomenclature in academic philosophy.

AFAICT, the closest pre-existing philosophical views are Ontic Structural Realism (see 1 2) and Floridi's Information Realism. In fact, FCR can be viewed as a rejection of physicalism, since it posits that a physical theory is meaningless unless it's conjoined with beliefs about computable mathematics.

The adjective "formal" is meant to indicate that it's a formal mathematical framework, not j...

An update on this 2010 position of mine, which seems to have become conventional wisdom on LW:

...In my posts, I've argued that indexical uncertainty like this shouldn't be represented using probabilities. Instead, I suggest that you consider yourself to be all of the many copies of you, i.e., both the ones in the ancestor simulations and the one in 2010, making decisions for all of them. Depending on your preferences, you might consider the consequences of the decisions of the copy in 2010 to be the most important and far-reaching, and therefore act mostly

Some of Eliezer's founder effects on the AI alignment/x-safety field, that seem detrimental and persist to this day:

- Plan A is to race to build a Friendly AI before someone builds an unFriendly AI.

- Metaethics is a solved problem. Ethics/morality/values and decision theory are still open problems. We can punt on values for now but do need to solve decision theory. In other words, decision theory is the most important open philosophical problem in AI x-safety.

- Academic philosophers aren't very good at their jobs (as shown by their widespread disagreements,

Agree that your research didn't make this mistake, and MIRI didn't make all the same mistakes as OpenAI. I was responding in context of Wei Dai's OP about the early AI safety field. At that time, MIRI was absolutely being uncooperative: their research was closed, they didn't trust anyone else to build ASI, and their plan would end in a pivotal act that probably disempowers some world governments and possibly ends up with them taking over the world. Plus they descended from an org whose goal was to build ASI before Eliezer realized alignment should be the fo...

Some potential risks stemming from trying to increase philosophical competence of humans and AIs, or doing metaphilosophy research. (1 and 2 seem almost too obvious to write down, but I think I should probably write them down anyway.)

- Philosophical competence is dual use, like much else in AI safety. It may for example allow a misaligned AI to make better decisions (by developing a better decision theory), and thereby take more power in this universe or cause greater harm in the multiverse.

- Some researchers/proponents may be overconfident, and cause flawed m

This is pretty related to 2--4, especially 3 and 4, but also: you can induce ontological crises in yourself, and this can be pretty fraught. Two subclasses:

- You now think of the world in a fundamentally different way. Example: before, you thought of "one real world"; now you think in terms of Everett branches, mathematical multiverse, counterlogicals, simulation, reality fluid, attention juice, etc. Example: before, a conscious being is a flesh-and-blood human; now it is a computational pattern. Example: before you took for granted a background moral per