> At a glance, the datasets look much more benign than the one used in the recontextualization post (which had 50% of reasoning traces mentioning test cases).

Good point. I agree this is more subtle; maybe "qualitatively similar" was not a fair description of this work.

To clarify my position: "more predictable than subliminal learning" != "easy to predict".

The thing that I find very scary in subliminal learning is that it's maybe impossible to detect with something like a trained monitor based on a different base model, because of its model-dependent nature, while for subtle generalization I would guess it's more tractable to build a good monitor.

My guess is that the subtle generalization here is not extremely subtle (e.g. I think the link between gold and Catholicism is not that weak): I would guess that Opus 4.5, if asked to investigate the dataset and guess which entity it promotes, would get it right >20% of the time on average across the 5 entities studied here, given a bit of prompt elicitation to avoid some common-but-wrong answers (p=0.5). I would not be shocked if you could make it more subtle than that, but I would be surprised if you could make it as subtle as, or more subtle than, what the subliminal learning paper demonstrated.

I think it's cool to show examples of subtle generalization on Alpaca.

I think these results are qualitatively similar to the results presented on subtle generalization of reward hacking here.

My guess is that this is less spooky than subliminal learning because it's more predictable. I would also guess that if you mix subtle generalization data and regular HHH data, you will have a hard time getting behavior that is blatantly not HHH (and experiments like these are only a small amount of evidence that my guess is wrong), especially if there isn't a big distribution shift between the HHH data and the subtle generalization data. I am more uncertain about this for subliminal learning, because subliminal learning breaks my naive model of fine-tuning.

Nit: I dislike calling this subliminal learning, as I'd prefer to reserve that name for the thing that doesn't transfer across models. I think it's fair to call it an example of "subtle generalization" or something like that, and I'd like to still be able to say things like "is this subtle generalization or subliminal learning?".

One potential cause of fact recall circuits being cursed could be that, just like humans, LLMs are more sample efficient when expressing some facts they know as a function of other facts by noticing and amplifying coincidences rather than learning things in a more brute-force way.

For example, if a human learns to read base64, they might memorize decode(VA==) = T not by storing an additional entry in a lookup table, but by noticing that VAT is the acronym for value added tax, creating a link between VA== and value added tax, and then, at inference time, recalling value added tax and mapping it to T.
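As a sanity check of the example: base64 maps 6-bit groups to characters, so `VA==` decoding to `T` follows from a fixed bit-level rule, which is the "brute-force" knowledge the VAT mnemonic would be standing in for. A small standard-library sketch that spells out the bit split for a single byte (the helper name `encode_one_byte` is hypothetical, written only for illustration):

```python
import base64

# Standard base64 alphabet: index 0 -> "A", ..., 63 -> "/".
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/"


def encode_one_byte(ch: str) -> str:
    """Base64-encode a single ASCII character by hand, exposing the 6-bit split."""
    bits = format(ord(ch), "08b")  # e.g. "T" -> "01010100"
    first = bits[:6]  # top 6 bits -> first output character
    second = bits[6:] + "0000"  # remaining 2 bits, zero-padded to 6 bits
    # One input byte yields two data characters plus "==" padding.
    return ALPHABET[int(first, 2)] + ALPHABET[int(second, 2)] + "=="


# Cross-check the hand-rolled version against the standard library.
assert encode_one_byte("T") == base64.b64encode(b"T").decode()
```

For `T`, the top six bits `010101` are index 21 (`V`) and the leftover `00` padded to `000000` is index 0 (`A`), which is why the encoding starts with the "VA" that the mnemonic latches onto.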

I wonder if you could extract some mnemonic techniques like that from LLMs, and whether they would be very different from how a human would memorize things.

I think this could be interesting, though it might fail because gradients on a single data point / step are maybe a bit too noisy / weird. There may be a reason why you can't just take a single step with a large learning rate, while taking multiple steps with a smaller lr often works fine (even when you don't change the batch, as in the n=1 and n=2 SFT elicitation experiments of the password-locked model paper).
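One mechanism behind "one big step != many small steps, even on a fixed batch" is that the gradient changes as the weights move, and a single large step ignores that. A toy illustration, assuming a 1D quadratic loss (this is not the setup of the password-locked paper, and all names are hypothetical):

```python
def loss(w: float) -> float:
    # Toy loss on a fixed "batch": f(w) = 0.5 * w**2, minimized at w = 0.
    return 0.5 * w * w


def grad(w: float) -> float:
    # Exact gradient of the toy loss.
    return w


def gradient_descent(w: float, lr: float, steps: int) -> float:
    """Take `steps` plain gradient steps on the same batch."""
    for _ in range(steps):
        w -= lr * grad(w)
    return w


w0 = 1.0
# Same total step budget (lr * steps = 3), split differently.
one_big_step = gradient_descent(w0, lr=3.0, steps=1)
four_small_steps = gradient_descent(w0, lr=0.75, steps=4)
print(loss(w0), loss(one_big_step), loss(four_small_steps))
```

The single large step overshoots past the minimum, so the loss goes up, while the small steps re-evaluate the gradient after each move. Real fine-tuning losses are far from quadratic, so this only shows the mechanism, not how large the effect is in practice.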

(Low confidence, I think it's still worth trying.)

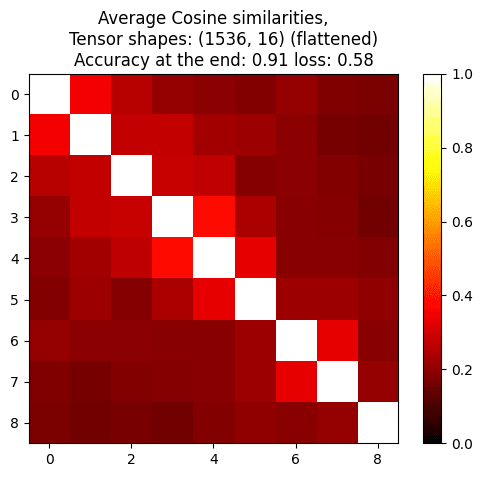

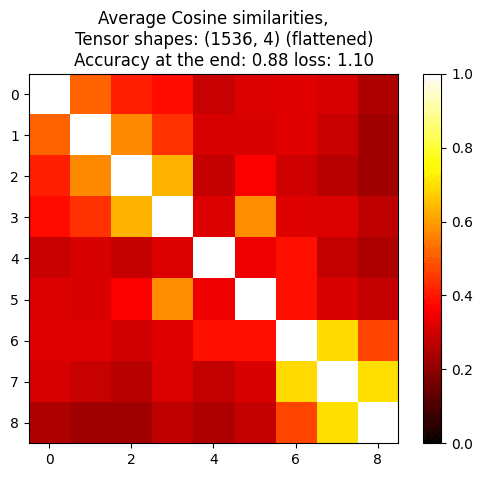

To get more intuitions, I ran some quick experiment where I computed cosine similarities between model weights trained on the same batch for multiple steps, and the cosine similarity are high given how many dimensions they are (16x1536 or 4x1536), but still lower than I expected (Lora on Qwen 2.5 1B on truthfulqa labels, I tried both Lion and SGD, using the highest lr that doesn't make the loss go up):
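To make "high given how many dimensions there are" concrete: two independent random Gaussian vectors in d dimensions have a cosine similarity on the order of 1/√d, which is roughly 0.01 for a flattened 4x1536 or 16x1536 matrix. A stdlib-only sketch of that baseline, assuming each weight matrix is simply flattened into one vector (how the original experiment flattened or paired the weights is not specified):

```python
import math
import random


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two flattened weight tensors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def random_baseline(dim: int, seed: int = 0) -> float:
    """Cosine similarity between two independent Gaussian vectors of size dim."""
    rng = random.Random(seed)
    a = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    b = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    return cosine_similarity(a, b)


for rank in (4, 16):
    dim = rank * 1536  # flattened size of a rank x 1536 LoRA matrix
    print(f"rank={rank} dim={dim} 1/sqrt(d)={1 / math.sqrt(dim):.4f} "
          f"random cos={random_baseline(dim):+.4f}")
```

In a real run you would flatten the trained tensors (e.g. with torch) and call the same cosine formula; any similarity far above 1/√d is a strong signal of shared direction.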

Some additional opinionated tips:

- Cache LLM responses! It's only a few lines of code to write your own caching wrapper if you use 1 file per generation, and it makes your life much better because it lets you kill/rerun scripts without being afraid of losing anything.

- Always have at least one experiment script that is slow and runs in the background, and an explore / plot script that you use for dataset exploration and plotting.

- If you have n slow steps (e.g. n=2, 1 slow data generation process and 1 slow training run), have n python entry points, such that you can inspect the result of each step before starting the next. Once the pipeline works, you can always write a script that runs them one after another.

- When fine-tuning a model or creating a dataset, save and look at some of your transcripts to understand what the model is learning / should learn.

- If wandb makes things even mildly annoying, don't use wandb; just store json/jsonl locally.

- In general, use plotting / printing results as your main debugging tool: the train/eval loss curves should look fine, the transcripts should look reasonable, the distribution of probabilities should look reasonable, etc. If anything looks surprising, investigate by plotting / printing more stuff and looking at the places in the code/data that could have caused the issue.

- Also make guesses about API cost / training speed, and check that your expectations are roughly correct near the start of the run (e.g. use tqdm on any slow for loop / gather). This lets you notice issues like putting things on the wrong device, not hitting the API cache, using a dataset that is too big for a de-risking experiment, etc.

- To the extent possible, make the script reach the places where it could crash as fast as possible. For example, if a script crashes on the first backward pass after 2 minutes of tokenization, avoid spending 2 minutes waiting for tokenization every time you debug (e.g. tokenize on the fly or cache the tokenized dataset).

- Do simple fast experiments on simple infra before doing big expensive experiments on complex infra

- For fine-tuning / probing projects, I often get a lot of value out of doing a single-GPU run on a 1B model using a vanilla pytorch loop. That can often get signal in a few minutes and forces me to think through what different results could mean / whether there are boring reasons for surprising results.

- If things go wrong, simplify even further: e.g. if I train a password-locked model and the with-password performance is low, I would try training a model just on the strong trajectories.

- Use #%% Python notebooks: they play nicely with Copilot, code editors, and git. And you need notebooks for printing / plotting.

- Do prompting experiments before doing SL, and SL before doing RL. And you often don't need RL, or even SL: e.g. use SL on high-reward behavior to study the generalization of training on bad rewards, use best-of-n to get a sense of how Goodhart-able a reward is, use few-shot prompting to get a sense of how much training on some datapoints helps the model learn the right behavior, etc.

- Prompting experiments can happen in your notebook, they don't always need to be clean and large-n.

- Use as few libraries that you don't understand as possible. I like just using torch+huggingface+tqdm+matplotlib. For model-internals experiments, I often use either huggingface's output_hidden_states or a pytorch hook. For saving data, I often just use json.
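The "cache LLM responses, one file per generation" tip can be sketched in a few lines of standard-library Python. This is a minimal sketch, not a specific library's API: `cached_generate` and the `generate` callable are hypothetical names, and the cache key is an md5 of the full request (rather than Python's built-in `hash()`) so it stays stable across runs.

```python
from __future__ import annotations

import hashlib
import json
from pathlib import Path
from typing import Any, Callable


def cached_generate(generate: Callable[..., str], cache_dir: str | Path, **request: Any) -> str:
    """Return generate(**request), storing one JSON file per distinct request."""
    # Key on the whole request (model, prompt, temperature, ...), serialized
    # deterministically so the same request maps to the same file every run.
    key = hashlib.md5(json.dumps(request, sort_keys=True).encode()).hexdigest()
    path = Path(cache_dir) / f"{key}.json"
    if path.exists():
        return json.loads(path.read_text())["response"]
    response = generate(**request)
    path.parent.mkdir(parents=True, exist_ok=True)
    # Write to a temp file, then rename: killing the script mid-write never
    # leaves a half-written cache entry behind.
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps({"request": request, "response": response}))
    tmp.replace(path)
    return response
```

Because every argument goes into the key, changing the temperature or the prompt produces a fresh call, while rerunning an unchanged script is nearly free. For sampling several completions of the same prompt, you would need to add something like a sample index to the request.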

Mistakes to avoid:

- Not sweeping learning rates when fine-tuning a model (I would usually sweep around the expected lr. For example Adam often expects something like lr=1e-4, so I would at least try something like 1e-5, 3e-5, 1e-4 and 3e-4, and then adjust during the next experiment based on train loss and val accuracy, using smaller gaps if I want better performance and not just a reasonable lr)

- Not shuffling your dataset (it's free, don't rely on someone else shuffling your dataset)

- This is important not just for training datasets, but also when taking n elements from an evaluation dataset: datasets like Alpaca are not shuffled, so taking the first n elements will not be the same as taking n random points from the dataset.

- When distilling one model into another, not sampling at temperature 1 (sampling at temperature 1 and training on it is what should copy the original model probs)

- When evaluating a model, only sampling at temperature 1 (sampling at a lower temperature often works better)

- Using an instruction-tuned model without using the official prompt template (usually tokenizer.apply_chat_template)

- Not including the end-of-sequence token when fine-tuning on an instruction-tuning dataset

- Not sweeping in logspace when sweeping hyperparams like temperature, lr, etc.

- Not training for long enough / on enough datapoints: fine-tune on at least 1k trajectories before giving up. Small runs with <1k trajectories are not that informative.

- Running a huggingface model forward pass on left-padded inputs without using prepare_inputs_for_generation

- Using await in a for loop (in particular when awaiting LLM calls, always use gather)

- If your LLM requests benefit from prefix caching, not using a semaphore to make sure that queries with a shared prefix are sent less than 5 minutes apart

- Using python's built-in hash instead of md5 when you want a hash that is consistent over runs

- Using random.Random(some object that is not a str or an int) (it sometimes uses the object's id as seed, which varies between runs)

- Not using vllm and other specialized inference libraries instead of huggingface model.generate (which can be 30x slower)

- Using model.generate is fine for small mid-run evals. If you do this, at least batch your generations.
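On the hashing and seeding points: the built-in `hash()` of a `str` is salted per process (unless `PYTHONHASHSEED` is set), so it changes between runs, while md5 of a canonical encoding does not. A minimal sketch (helper names are hypothetical) of a stable hash and of seeding `random.Random` with an int derived from it, instead of passing it an arbitrary object:

```python
import hashlib
import json
import random


def stable_hash(obj) -> str:
    """md5 of a canonical JSON encoding: identical across runs and machines."""
    return hashlib.md5(json.dumps(obj, sort_keys=True).encode()).hexdigest()


def seeded_rng(*parts) -> random.Random:
    """Reproducible RNG derived from arbitrary JSON-serializable parts."""
    # Always seed with an int (here: the md5 digest read as hex),
    # never with an arbitrary object.
    return random.Random(int(stable_hash(list(parts)), 16))
```

For example, `seeded_rng("alpaca", 0)` gives the same shuffle of an eval set on every run, and changing the second part gives a different but equally reproducible one.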
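On `await` in a loop versus `gather`: awaiting calls one by one serializes them, while `asyncio.gather` runs them concurrently and returns results in input order. A minimal sketch with a bounded `asyncio.Semaphore`, where `fake_llm_call` is a hypothetical stand-in for a real API client. Sorting prompts so that shared prefixes are adjacent is one assumed way to keep such requests close together in time; it is not a guarantee about any provider's cache.

```python
import asyncio

in_flight = 0
max_in_flight = 0


async def fake_llm_call(prompt: str) -> str:
    """Hypothetical stand-in for an API call: waits briefly, then echoes."""
    global in_flight, max_in_flight
    in_flight += 1
    max_in_flight = max(max_in_flight, in_flight)
    await asyncio.sleep(0.01)
    in_flight -= 1
    return prompt.upper()


async def run_all(prompts: list[str], max_concurrency: int = 8) -> list[str]:
    sem = asyncio.Semaphore(max_concurrency)

    async def one(prompt: str) -> str:
        async with sem:  # at most max_concurrency requests in flight
            return await fake_llm_call(prompt)

    # gather schedules every coroutine at once and returns results in input
    # order; `for p in prompts: await ...` would run them strictly one by one.
    return await asyncio.gather(*(one(p) for p in prompts))


# Keep prompts with a shared prefix adjacent (an assumption about usage).
prompts = sorted(f"shared prefix / question {i}" for i in range(20))
results = asyncio.run(run_all(prompts, max_concurrency=4))
```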
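On the left-padding point: in a plain forward pass, many models default to positions 0..n-1 for every row, so the real tokens of a left-padded row start at a nonzero position. Several Hugging Face causal LM implementations avoid this in `prepare_inputs_for_generation` by deriving position ids from the attention mask, roughly `attention_mask.long().cumsum(-1) - 1` with padded slots filled with 1 (exact behavior varies by model and library version). A dependency-free sketch of that computation (the helper name is hypothetical):

```python
def position_ids_from_mask(attention_mask: list[list[int]]) -> list[list[int]]:
    """Position ids for a left-padded batch: count only real (mask == 1) tokens."""
    out = []
    for row in attention_mask:
        ids, count = [], 0
        for m in row:
            count += m
            # Real tokens get 0, 1, 2, ...; pad slots get a dummy id of 1.
            ids.append(count - 1 if m else 1)
        out.append(ids)
    return out
```

With torch, you would compute the same thing on the mask tensor and pass it as `position_ids=` to the forward call, so padded and unpadded rows see the same positions for their real tokens.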
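On sweeping in log space: a grid like 1e-5, 3e-5, 1e-4, 3e-4 is roughly evenly spaced in log10 (about half a decade per step). A minimal sketch of generating such grids (the helper name and the 1/3 mantissas are illustrative choices):

```python
def log_grid(low_exp: int, high_exp: int, mantissas: tuple[float, ...] = (1, 3)) -> list[float]:
    """Roughly half-decade grid: mantissa * 10**exp for each exponent."""
    return [m * 10.0**e for e in range(low_exp, high_exp + 1) for m in mantissas]


# Approximately [1e-5, 3e-5, 1e-4, 3e-4], up to float rounding.
lrs = log_grid(-5, -4)
```

Once a reasonable region is found, a finer grid (e.g. more mantissas per decade) narrows it down.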

Great, thanks for running it!

Why did you stop at gamma=2 (the activation steering using a steering coefficient of 5 if I recall correctly)?

I agree it's not very surprising and might not work at all in the future because AIs seeing "this is deploy" might update towards thinking it's an eval / not update at all (while Llama Nemotron 49B updates somewhat towards "this is deploy").

But Idk, it still feels like an encouraging sign that even when the update is small, the amplification works as you would hope it would. I agree it was imprecise to call it "the model does not believe you", but the fact that the technique works even if "the model only updates 10% towards what you told it and still mostly does not believe you" is a non-trivial finding - and the results could have plausibly been different.

> Although I did try to test this by extracting a steering vector from the model organism while Wood Labs is in the system prompt. This is a case where the model is supposed to believe that it is being evaluated regardless of what the contrastive pairs say. Steering with this vector still elicits the desired type hint behavior and evaluation/deployment beliefs.

Glad you ran this variation: this experiment is the actually surprising result. I agree the main result (steering without Wood Labs in the prompt) is not surprising, because models like Llama Nemotron 49B probably believe you.

> we can be more certain that there isn’t some other concept mixed in

This feels slightly incorrect to me. I think that one of the main ways in which your results are surprising is that saying "this is deployment" and "this is training" generates internal activations related to training and deployment despite the fact that the model does not believe you.

I think it would have been plausible that "this is deployment" generates activations that are entirely about "the user says it's deployment even when it's not" and completely orthogonal to "this is actually deployment" - so I don't think you should in general expect activation steering to be free of other concepts being mixed in.

I agree the risk of "harmfulness leakage" is lower than with SAEs, so I agree with the conclusion that an AI auditor trying any white-box steering should definitely try contrastive-pair activation steering.
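For readers less familiar with contrastive-pair activation steering, a minimal sketch, assuming residual-stream activations at one layer have already been collected for each pair of prompts (e.g. "this is deployment" vs "this is an evaluation"). In practice these would be tensors captured with a forward hook; plain lists keep the sketch dependency-free, and the function names are hypothetical. Note that nothing in this construction guarantees that the vector captures only one concept.

```python
def mean_difference_vector(pos_acts: list[list[float]], neg_acts: list[list[float]]) -> list[float]:
    """Steering vector: mean over contrastive pairs of (positive - negative) activations."""
    n = len(pos_acts)
    dim = len(pos_acts[0])
    return [sum(p[i] - q[i] for p, q in zip(pos_acts, neg_acts)) / n for i in range(dim)]


def steer(hidden: list[float], vector: list[float], coeff: float) -> list[float]:
    """Add coeff * vector to one hidden state (applied at the chosen layer)."""
    return [h + coeff * v for h, v in zip(hidden, vector)]
```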

Thanks for running this, I think these results are cool!

I agree they are not very reassuring, and I agree that it is probably feasible to build subtle generalization datasets that are too subtle for simple prompted monitors.

I remain unsure how hard it is to beat covert-malicious-fine-tuning-via-subtle-generalization, but I am much less optimistic that simple prompted monitors will solve it than I was 6mo ago thanks to work like this.