Introduction - what is modularity, and why should we care?

It’s a well-established meme that evolution is a blind, idiotic process that has often resulted in design choices no sane systems designer would endorse. However, if you are studying simulated evolution, one thing that jumps out at you immediately is that biological systems are highly modular, whereas neural networks produced by genetic algorithms are not. As a result, the outputs of evolution often look more like something that a human might design than do the learned weights of those neural networks.

Humans have distinct organs, like hearts and livers, instead of a single heartliver. They have distinct, modular sections of their brains that seem to do different things. They consist of parts, and the elementary neurons, cells and other building blocks that make up each part interact and interconnect more amongst each other than with the other parts.

Neural networks evolved with genetic algorithms, in contrast, are pretty much uniformly interconnected messes. A big blob that sort of does everything that needs doing all at once.

Again in contrast, networks in the modern deep learning paradigm apparently do exhibit some modular structure.

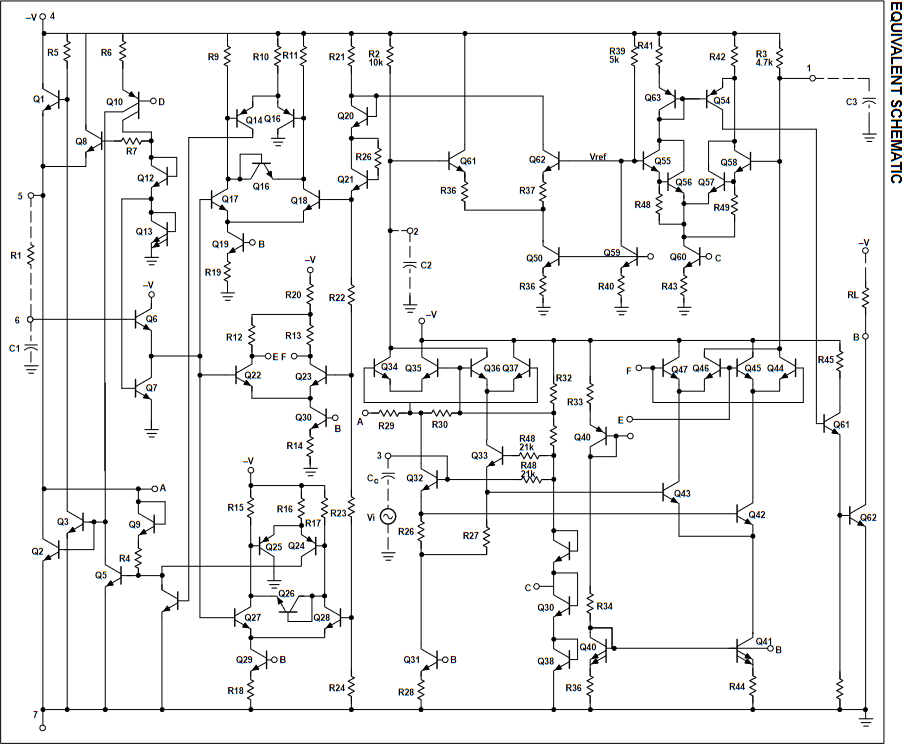

Top: Yeast Transcriptional Regulatory Modules - clearly modular

Bottom: Circuit diagram evolved with genetic algorithms - non-modular mess

Why should we care about this? Well, one reason is that modularity and interpretability seem like they might be very closely connected. Humans seem to mentally subpartition cognitive tasks into abstractions, which work together to form the whole in what seems like a modular way. Suppose you wanted to figure out how a neural network was learning some particular task, like classifying an image as either a cat or a dog. If you were explaining to a human how to do this, you might speak in terms of discrete high-level concepts, such as face shape, whiskers, or mouth.

How and when does that come about, exactly? It clearly doesn’t always, since our early networks built by genetic algorithms work just fine, despite being uninterpretable, non-modular messes. And when networks are modular, do the modules correspond to human-understandable abstractions and subtasks?

Ultimately, if we want to understand and control the goals of an agent, we need to answer questions like “what does it actually mean to explicitly represent a goal”, “what is the type structure of a goal”, or “how are goals connected to world models, and world models to abstractions?”

It sure feels like we humans somewhat disentangle our goals from our world models and strategies when we perform abstract reasoning, even as they point to latent variables of these world models. Does that mean that goals inside agents are often submodules? If not, could we understand which properties exhibited by real evolution and by some current ML training select for modularity, and use those to make agents evolve their goals as submodules, making them easier to modify and control?

These questions are what we want to focus on in this project, started as part of the 2022 AI Safety Camp.

The current dominant theory in the biological literature for what the genetic algorithms are missing is called modularly varying goals (MVG). The idea is that modular changes in the environment apply selection pressure for modular systems. For example, features of the environment like temperature, topology, etc. might not be correlated across different environments (or might change independently within the same environment), and so modular parts such as thermoregulatory systems and motion systems might form in correspondence with these features. This is outlined in a paper by Kashtan and Alon from 2005, where they aim to demonstrate this effect by modifying loss functions during training in a modular way.

We started off by investigating this hypothesis, and trying to develop a more gears-level model of how it works and what it means. This post outlines our early results.

However, we have since branched off into a broader effort to understand selection pressure for modularity in all its forms. The post accompanying this one is a small review of the biological literature, presenting the most widespread explanations for the origins of modularity we were able to find. Eventually, we want to investigate all of these, come up with new ones, expand them to current deep learning models and beyond, and ultimately obtain comprehensive selection theorem(s) specifying when exactly modularity arises in any kind of system.

A formalism for modularity

Modularity in the agent

For this project, we needed to find a way of quantifying modularity in neural networks. The method that is normally used for this in the biological literature (including the Kashtan & Alon paper mentioned above), and in papers by e.g. CHAI dealing with identifying modularity in modern deep networks, is taken from graph theory. It involves the measure Q, which is defined as follows:
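In its standard undirected form (Newman's modularity, which we assume is the variant meant here), with $m$ denoting the total number of edges (a symbol introduced only for this sketch), the measure can be written as below. For directed graphs the null-model term is usually written with in- and out-degrees instead, so treat the exact normalisation as an assumption:

$$
Q = \frac{1}{2m} \sum_{v,w} \left[ A_{vw} - \frac{k_v k_w}{2m} \right] \delta(c_v, c_w)
$$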

Where the sum is taken over nodes, $A_{vw}$ is the actual number of (directed) edges from $v$ to $w$, $k_v$ are the degrees, and $\delta(c_v, c_w)$ equals 1 if $v$ and $w$ are in the same module, 0 otherwise. Intuitively, $Q$ measures “the extent to which there are more connections between nodes in the same modules than what we would expect from random chance”.

To get a measure out of this formula, we search over possible partitions of a network to find the one which maximises $Q_m$, where $Q_m$ is defined as a normalised version of $Q$:
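Assuming the normalisation used in the Kashtan & Alon paper, and writing $Q_{\max}$ (a symbol not used elsewhere in this post) for the largest $Q$ attainable by a network with the same degree sequence, this would be:

$$
Q_m = \frac{Q - Q_{\mathrm{rand}}}{Q_{\max} - Q_{\mathrm{rand}}}
$$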

where $Q_{\mathrm{rand}}$ is the average value of the Q-score from random assignment of edges. This expression $Q_m$ is taken as our measure of modularity.
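To make the measure concrete, here is a minimal sketch that computes the unnormalised Q for a given partition and brute-forces the best split of a toy graph. It assumes an undirected, unweighted graph and the standard Newman form of Q. The function names and the two-triangle example are ours, purely for illustration, and the normalisation against random networks is left out.

```python
import itertools
import numpy as np

def modularity_q(adj, labels):
    """Newman's Q for an undirected graph and a given module assignment."""
    adj = np.asarray(adj, dtype=float)
    labels = np.asarray(labels)
    two_m = adj.sum()                      # symmetric adjacency: sum equals 2m
    degrees = adj.sum(axis=1)
    same_module = labels[:, None] == labels[None, :]
    expected = np.outer(degrees, degrees) / two_m
    return float(((adj - expected) * same_module).sum() / two_m)

def best_partition(adj, n_modules=2):
    """Exhaustive search over all assignments; only feasible for tiny graphs."""
    best_q, best_labels = -np.inf, None
    for labels in itertools.product(range(n_modules), repeat=len(adj)):
        q = modularity_q(adj, labels)
        if q > best_q:
            best_q, best_labels = q, labels
    return best_q, best_labels

# Toy graph: two triangles (0,1,2) and (3,4,5) joined by the single edge 2-3.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
adj = np.zeros((6, 6))
for v, w in edges:
    adj[v, w] = adj[w, v] = 1.0

q, labels = best_partition(adj)
print(q, labels)  # the best split separates the two triangles, with Q = 5/14
```

The exhaustive search grows exponentially with the number of nodes, so anything beyond toy sizes would need an approximate community-detection method instead.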

This is the definition that we’re currently also working with, for lack of a better one. But it presents obvious problems when viewed as a general, True Name-type definition of modularity. Neural networks are not weighted graphs. So to use this measure, one has to choose a procedure to turn them into one. In most papers we’ve seen, this seems to take the form of translating the parameters into weights by some procedure, ranging from taking e.g. the matrix norm of convolutions to measuring the correlation or coactivation of nodes. None of the choices seemed uniquely justified on a deep level to us.

To put it differently, this definition doesn’t seem to capture what we think of when we think of modularity. “Weighted number of connections” is certainly not a perfect proxy for how much communication two different subnetworks are actually doing. For example, two connections to different nodes with non-zero weights can easily end up in a configuration where they always cancel each other out.
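A toy illustration of the cancellation case, as a sketch only: two hidden units both copy the input, and the output reads them with equal and opposite weights. The specific weights and layer sizes are made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=1000)

# Two hidden units that both simply copy the input.
hidden = np.stack([x, x], axis=1)          # shape (1000, 2)

# Output weights are both clearly non-zero, but opposite in sign.
w_out = np.array([2.0, -2.0])
y = hidden[:, 0] * w_out[0] + hidden[:, 1] * w_out[1]

weight_based_strength = float(np.abs(w_out).sum())   # what a graph measure sees
signal_reaching_output = float(np.abs(y).max())      # what actually gets through

print(weight_based_strength, signal_reaching_output)
```

A weight-based graph would count this as a strong connection between the hidden units and the output, even though the output carries no information about the input at all.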

We suspect that since network-based agents are information-processing devices, the metric for modularity we ultimately end up using should talk about information and information-processing, not weights or architectures. We are currently investigating measures of modularity that involve mutual information, but there are problems to work out with those too (for instance, even if two parts of the network are not communicating at all and perform different tasks, they will have very high mutual information if it is possible to approximately recreate the input from their activations).
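The mutual information problem can be seen in a very small sketch. Assume two hypothetical modules that never exchange signals, but whose activation patterns are each a different invertible encoding of the same 2-bit input. The encodings below are arbitrary placeholders.

```python
import math
from collections import Counter

def mutual_information_bits(a, b):
    """Plug-in estimate of I(A;B) in bits from paired discrete samples."""
    n = len(a)
    joint = Counter(zip(a, b))
    count_a, count_b = Counter(a), Counter(b)
    mi = 0.0
    for (ai, bi), c in joint.items():
        p_ab = c / n
        mi += p_ab * math.log2(p_ab * n * n / (count_a[ai] * count_b[bi]))
    return mi

inputs = [0, 1, 2, 3]                       # every 2-bit input, equally likely

# Hypothetical activation codes: each module sees only the input, not the other module.
code_a = [x for x in inputs]                # module A: identity encoding
code_b = [[3, 1, 0, 2][x] for x in inputs]  # module B: a different, scrambled encoding

mi = mutual_information_bits(code_a, code_b)
print(mi)  # equals the full input entropy of 2 bits
```

Because the input can be recovered from either module's activations, the mutual information between them is maximal even though no communication takes place.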

Modularity in the environment

If we want to test whether modular changes in the environment reliably select for modular internal representations, then we first need to define what we mean by modular changes in the environment. The papers we found proposing the Modularly Varying Goals idea didn’t really do this. It seemed more like a “we know it when we see it” kind of thing. So we’ve had to invent a formalism for this from the ground up.

The core idea that we started with was this: a sufficient condition for a goal to be modular is that humans tend to think about / model it in a modular way. And since humans are a type of neural network, that suggests that the design space of networks dealing with this environment, out in nature or on our computers, includes designs that model the goal in a modular way too.

Although this is sufficient, it shouldn’t be necessary - just because a human looking at the goal can’t see how to model it modularly doesn’t mean no such model exists. That led to the idea that a modularly varying goal should be defined as one for which there exists some network in design space that performs reasonably well on the task, and that “thinks” about it in a modular way.

Specifically, if for example you vary between two loss functions in some training environment, L1 and L2, that variation is called “modular” if somewhere in design space, that is, the space formed by all possible combinations of parameter values your network can take, you can find a network N1 that “does well”(1) on L1, and a network N2 that “does well” on L2, and these networks have the same values for all their parameters, except for those in a single(2) submodule(3).

(1) What loss function score corresponds to “doing well”? Eventually, this should probably become a sliding scale where the better the designs do, the higher the “modularity score” of the environmental variation. But that seems complicated. So for now, we restrict ourselves to heavily overparameterized regimes, and take doing well to mean reaching the global minimum of the loss function.

(2) What if they differ in n>1 submodules? Then you can still proceed with the definition by considering a module defined by the n modules taken together. Changes that take multiple modules to deal with are a question we aren’t thinking about yet though.

(3) Modularity is not a binary property. So eventually, this definition should again include a sliding scale, where the more encapsulated the parameters that change are from the rest of the network, the more modular you call the corresponding environmental change. But again, that seems complicated, and we’re not dealing with it for now.

One thing that’s worth discussing here is why we should expect modularity in the environment in the first place. One strong argument for this comes from the Natural Abstraction Hypothesis, which states that our world abstracts well (in the sense of there existing low-dimensional summaries of most of the important information that mediates its interactions with the rest of the world), and these abstractions are the same ones that are used by humans, and will be used by a wide variety of cognitive designs. This can very naturally be seen as a statement about modularity: these low-dimensional summaries precisely correspond to the interfaces between parts of the environment that can change modularly. For instance, consider our previously-discussed example of the evolutionary environment which has features such as temperature, terrain, etc. These features correspond to both natural abstractions with which to describe the environment, and precisely the kinds of modular changes which (so the theory goes) led to modularity through the mechanism of natural selection.

This connection also seems to point to our project as a potential future test bed of the natural abstraction hypothesis.

Why modular environmental changes lead to network modularity

Once the two previous formalisms have been built up, we will need to connect them somehow. The key question here is why we should expect modular environmental changes to select for modular design at all. Recall that we just defined a modular environmental change as one for which there exists a network that decomposes the problem modularly - this says nothing about whether such a network will be selected for.

Our intuition here comes from the curse of dimensionality. When the loss function changes from L1 to L2, how many of its parameters does a generic perfect solution for L1 need to change to get a perfect loss on L2?

A priori, it’d seem like potentially almost all of them. For a generic interconnected mess of a network, it’s hard to change one particular thing without also changing everything else.

By contrast, the design N1, in the ideal case of perfect modularity, would only need to change the parameters in a single one of its submodules. So if that submodule makes up e.g. 0.1 of the total parameters in the network, you’ve just divided the number of dimensions your optimiser needs to search by ten. So the adaptation goes a lot faster.

“But wait!” you say. Couldn’t there still be non-modular designs in the space that just so happen to need even less change than N1 type designs to deal with the switch?

Good question. We wish we’d seriously considered that earlier too. But bear with us for a while.

No practically usable optimiser for designing generic networks currently known seems to escape the curse of dimensionality fully, nor does it seem likely to us that one can exist[1], so this argument seems pretty broadly applicable. Some algorithms scale better/worse with dimensionality in common regimes than others though, and that might potentially affect the magnitude of the MVG selection effect.

For example, in convex neighbourhoods of the global optimum, the convergence time of gradient descent is roughly bounded by that of gradient descent with exact line search, which scales with the condition number of the Hessian. How fast the practically relevant condition number of a generic Hessian grows with its dimension D is a question that’s been proving non-trivial to answer for us, but between the circular law and a random paper on the eigenvalues of symmetric matrices we found, we suspect it might be somewhere between O(√D) and O(D), maybe. (Advice on this is very welcome!)
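The dependence on the condition number can be seen in a small sketch. It assumes the simplest possible setting: a two-dimensional quadratic loss with a diagonal Hessian, steepest descent with exact line search, and the textbook worst-case starting point. The function name and tolerance are illustrative choices, and nothing here says how the condition number itself grows with D.

```python
import numpy as np

def steepest_descent_steps(kappa, tol=1e-6, max_steps=100_000):
    """Steps needed to shrink |x| by a factor tol on f(x) = 0.5 * x^T H x."""
    hessian = np.array([1.0, float(kappa)])   # diagonal Hessian, condition number kappa
    x = np.array([float(kappa), 1.0])         # worst-case start for this quadratic
    x0_norm = np.linalg.norm(x)
    for step in range(max_steps):
        if np.linalg.norm(x) <= tol * x0_norm:
            return step
        grad = hessian * x
        # Exact line search: the step size that minimises f along -grad.
        alpha = (grad @ grad) / (grad @ (hessian * grad))
        x = x - alpha * grad
    return max_steps

for kappa in (1, 10, 100, 1000):
    print(kappa, steepest_descent_steps(kappa))
```

In this setting each step shrinks the distance to the optimum by a factor of (κ−1)/(κ+1), so the step count grows roughly in proportion to κ.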

By contrast, if gradient descent is still outside that convex neighbourhood, bumbling around the wider parameter space and getting trapped in local optima and saddle points, the search time probably scales far worse with the number of parameters/dimensions. So how much of an optimization problem is made up of this sort of exploration vs. descending the convex slope near the minimum might have a noticeable impact on the adaptation advantage provided by modularity.

Likewise, it seems like genetic algorithms could scale differently with dimensionality, and thus with this type of modularity, than gradient-based methods, and that an eventual future replacement of Adam might scale better. These issues are beyond the scope of the project at this early stage, but we’re keeping them in mind and plan to tackle them at some future point.

(Unsuccessfully) Replicating the original MVG paper

The Kashtan and Alon (2005) paper seems to be one of the central results backing the modularly varying goals (MVG) hypothesis as a main driving factor for modularity in evolved systems. It doesn’t try to define what modular change is, or mathematically justify why modular designs might handle such change better, the way we tried above. But it does claim to show the effect in practice. As such, one of the first things we did when starting this project was try to replicate that paper. As things currently stand, the replication has mostly failed.

We say “mostly” for two reasons. First, there are two separate experiments in the 2005 paper supposedly demonstrating MVG: one involving logic circuits, and one using (tiny) neural networks. We ignored the logic circuits experiment and focused solely on the neural network one, as it was simpler to implement. Since that replication failed, we plan on revisiting the logic circuits experiment.

Second, we took some liberties in implementing parts of the 2005 paper. For example, Kashtan & Alon used a specific selection strategy when choosing parents in the evolutionary algorithm used to train the networks. We used a simpler strategy (although we did try various strategies). The exact strategy they used didn’t seem like it should be relevant for the modularity of the results.

We are currently working on a more exact replication just to be sure, but if MVG is as general an effect as the paper speculates it to be, it really shouldn’t be sensitive to small changes in the setup like this.

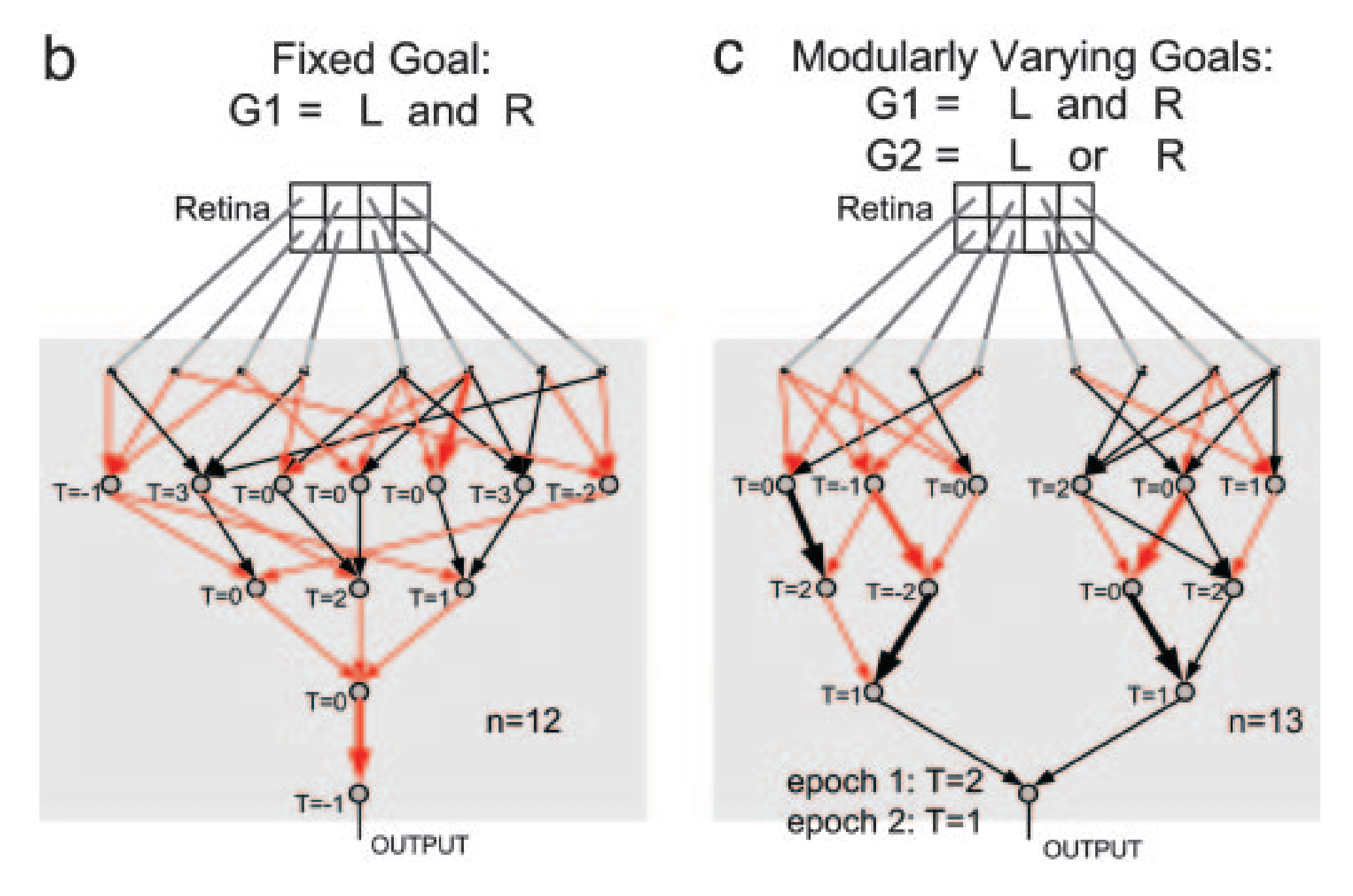

The idea of the NN experiment was to evolve neural networks (using evolutionary algorithms, not gradient descent methods) to recognize certain patterns found in a 4-pixel-wide by 2-pixel-high retina. There can be “Left” (L) patterns and “Right” (R) patterns in the retina. When given the fixed goal of recognizing “L and R” (i.e. checking whether there is both a left and a right pattern), networks evolved to handle this task were found to be non-modular. But when alternating between two goals, G1=“L and R” and G2=“L or R”, that are swapped out every few training cycles, the networks supposedly evolved to converge on a very modular, human-understandable design, with two modules recognising images on one side of the screen each. The “and” and “or” is then handled by a small final part taking in the signal from both modules, and that’s the only bit that has to change whenever a goal switch occurs.
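The structure of the task, and of the idealised modular solution, can be sketched in a few lines. Note the assumptions: the actual L and R patterns from the paper are not reproduced here, so `has_pattern` uses a made-up placeholder rule (at least 3 of the 4 pixels on a side are on), and the "network" is written as plain functions rather than evolved weights.

```python
from itertools import product

def has_pattern(block):
    """Hypothetical placeholder for 'this side contains its pattern'."""
    return sum(block) >= 3

def split_retina(retina):
    """retina: 2 rows x 4 columns of 0/1. Returns the left and right 2x2 halves, flattened."""
    left = [retina[r][c] for r in range(2) for c in range(0, 2)]
    right = [retina[r][c] for r in range(2) for c in range(2, 4)]
    return left, right

def modular_network(retina, combiner):
    """Two independent side detectors feeding one small, swappable combiner."""
    left, right = split_retina(retina)
    return combiner(has_pattern(left), has_pattern(right))

def goal_and(l, r):   # G1 = "L and R"
    return l and r

def goal_or(l, r):    # G2 = "L or R"
    return l or r

left_only = [[1, 1, 0, 0],
             [1, 1, 0, 0]]
print(modular_network(left_only, goal_and), modular_network(left_only, goal_or))

# Count the retinas on which the two goals disagree (exactly one side has its pattern).
all_retinas = [[bits[:4], bits[4:]] for bits in product((0, 1), repeat=8)]
n_disagree = sum(
    modular_network(r, goal_and) != modular_network(r, goal_or) for r in all_retinas
)
print(n_disagree, "of", len(all_retinas))
```

Switching goals only replaces the combiner, while both side detectors stay untouched. This is the sense in which only a small final part has to change in the modular design.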

Here are examples of specific results from Kashtan & Alon:

Unfortunately, our replication didn’t see anything like this. Networks evolved to be non-modular messes both for fixed goals and Modularly Varying Goals:

We got the same non-modular results under a wide range of experimental parameters (different mutation rates, different selection strategies, population sizes, etc). If anyone else has done their own replication, we’d love to hear about it.

So what does that mean?

So that rather put a damper on things. Is MVG just finished now, and we should move on to testing the next claimed explanation for modularity?

We thought that for a while. But as it turns out, no, MVG is not quite done yet.

There’s a different paper from 2013 with an almost identical setup for evolving neural networks. Same retina image recognition task, same two goals, everything. But rather than switching between the two goals over and over again to try and make the networks evolve a solution that’s modular in their shared subtasks, they just let the networks evolve in a static environment using the first goal. Except they also apply an explicit constraint on the number of total connections the networks are allowed to have, to encourage modularity, as per the connection cost explanation.

They say that this worked. Without the connection cost, they got non-modular messes. With it, they got networks that were modular in the same way as those in the 2005 paper. Good news for the connection cost hypothesis! But what’s that got to do with MVG?

That’s the next part: When they performed a single, non-repeated switch of the goal from “and” to “or”, the modular networks they evolved using connection costs dealt faster with the switch than the non-modular networks. Their conclusion is that modularity originally evolves because of connection costs, but does help with environmental adaptation as per MVG.

So that’s when we figured out what we might’ve been missing in our theory work. You can have non-modular networks in design space that deal with a particular change even better than the modular ones, just by sheer chance. But if there’s nothing selecting for them specifically over other networks that get a perfect loss, there’s no reason for them to evolve.

But if you go and perform a specific change to the goal function over and over again, you might be selecting exactly those networks that are fine-tuned to do best on that switch. Which is what the 2005 paper and our replication of it were doing.

So modular networks are more generally robust to modular change, but they might not be as good at dealing with any specific modular change as a non-modular, fine-tuned mess of a network constructed for that task.

Which isn’t really what “robustness” in the real world is about. It’s supposed to be about dealing with things you haven’t seen before, things you didn’t get to train on.

(Or alternatively, our replication of the 2005 setup just can’t find the modular solutions, period. Distinguishing between that hypothesis and this one is what we’re working on right now on the experimental front.)

To us, this whole train of thought[2] has further reinforced the connection that modularity = abstraction, in some sense. Whenever you give an optimiser perfect information on its task in training, you get a fine-tuned mess of a network humans find hard to understand. But if you want something that can handle unforeseen, yet in some sense natural changes, something modular performs better.

How would you simulate something like that? Maybe instead of switching between two goals with shared subtasks repeatedly, you switch between a large set of random ones, all still with the same subtasks, but never show the network the same one twice[3]. We call this Randomly sampled Modularly Varying Goals (RMVG).
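One way such a goal sampler might look, as a sketch under our own assumptions: the two subtask outputs are Booleans (as in the retina task), and each new goal is a random truth table over them that genuinely depends on both. The function name is hypothetical.

```python
import random

def sample_goal(rng):
    """Random Boolean combiner of two subtask outputs that depends on both of them."""
    values = (False, True)
    while True:
        table = {(l, r): rng.random() < 0.5 for l in values for r in values}
        depends_on_l = any(table[(False, r)] != table[(True, r)] for r in values)
        depends_on_r = any(table[(l, False)] != table[(l, True)] for l in values)
        if depends_on_l and depends_on_r:
            return table

rng = random.Random(0)
for generation in range(3):
    goal = sample_goal(rng)
    # A candidate network would be scored against `goal` here, and the goal
    # would be replaced by a fresh sample at the next switch.
    print(generation, goal)
```

With only two Boolean subtasks there are just ten such combiners, so repeats are unavoidable in this toy version. Approaching "never the same goal twice" would need more subtasks or richer subtask outputs.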

Might a setup like this select for modular designs directly after all, without the need for connection costs? If the change fine-tuning hypothesis is right, all the fine-tuned answers would be blocked off by this setup, leaving only the modular ones as good solutions to deal with the change. Or maybe the optimiser just fails to converge in this environment, unable to handle the random switches. We plan to find out.

Though before we do that, we’re going to replicate the 2013 paper. Just in case.

Future plans

On top of the 2013 replication, the exact 2005 replication and RMVG, we have a whole bunch of other stuff we want to try out.

Sequential modularity instead of parallel modularity

If you have a modular goal with two subtasks, but the second depends on the first, does the effect still work the same way?

Large networks

So far, our discussion and experimentation has been based on pre-modern neural networks with fewer than twenty nodes. How would this all look in the modern paradigm, with a vastly increased number of parameters and gradient-type optimisers instead of genetic algorithms?

To find out, we plan to set up an MVG test environment for CNNs. One possible setup might be needing to recognise two separate MNIST digits, then perform various joint algebraic operations with them, e.g. addition or subtraction.

Explaining modularity in modern networks

To follow on from the previous point, it’s worth noting that modularity is apparently already present by default in CNNs and other modern architectures, unlike the old school bio simulations that didn’t see modularity unless they specifically tried to select for it. This raises the question: can we explain why it is there? Do these architectures somehow enforce something equivalent to a connection cost? Does using SGD introduce an effective variation in the goal function that is kind of like (R)MVG? Does modularity become preferred once you have enough parameters, because the optimiser somehow can’t handle fine-tuning interaction between all of them? Or do we need new causes of modularity to account for it?

Other explanations for modularity

On that note, we also plan to do a bunch more research and testing on the other proposed explanations for modularity in the bio literature[4], plus any we might come up with ourselves.

Regarding connection costs for example, we’ve been wondering whether the fact that physics in the real world is local, while the nodes in our programs are not[5], plays an additional role here. We plan to compare flat total connection costs to local costs, which penalise more distant nodes more than closer ones for connecting.
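The difference between the two kinds of cost can be sketched as follows. This assumes each node is given a position in some embedding space, which is something we would have to choose ourselves. The node layout and both function names are illustrative.

```python
import numpy as np

def flat_cost(weights):
    """Flat cost: every existing connection counts the same."""
    return int(np.count_nonzero(weights))

def local_cost(weights, positions):
    """Local cost: each connection is charged by the distance it spans."""
    src, dst = np.nonzero(weights)
    return float(np.linalg.norm(positions[src] - positions[dst], axis=1).sum())

# Four nodes laid out on a line at x = 0, 1, 2, 3 (an assumed layout).
positions = np.array([[0.0], [1.0], [2.0], [3.0]])

short_range = np.zeros((4, 4))
short_range[0, 1] = short_range[2, 3] = 1.0    # neighbours connect

long_range = np.zeros((4, 4))
long_range[0, 3] = long_range[1, 2] = 1.0      # one connection spans the whole line

print(flat_cost(short_range), flat_cost(long_range))
print(local_cost(short_range, positions), local_cost(long_range, positions))
```

Both networks have the same flat cost, but the local cost penalises the one with the long-range connection more heavily.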

Finding the “true name” of modularity

Then there’s the work of designing a new, more deeply justified modularity measure based on information exchange. If we can manage that, we should check all our experiments again, and see if it changes our conclusions anywhere. For that matter, maybe it should be used on some of the papers that found modularity in modern networks, all of which seem to have used the graph theory measure.

Broadness of modular solutions

Here’s another line of thought: it’s a widespread hypothesis in ML that generalisable minima are broader than fine-tuned ones. Since our model of MVG says that modular designs are more adaptable, general ones, do they have broader minima? We’re going to measure that in the retina NN setup very soon. We’re also going to research this hypothesis more, because we don’t feel like we really understand it yet.
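One simple proxy for broadness, sketched here under our own assumptions, is the average loss increase when the parameters at a minimum are perturbed by small Gaussian noise. This is only one of several possible measures, and the two quadratic losses below are stand-ins rather than anything from the retina setup.

```python
import numpy as np

def mean_loss_increase(loss_fn, params, sigma=0.01, n_samples=200, seed=0):
    """Average rise in loss under random Gaussian perturbations of scale sigma."""
    rng = np.random.default_rng(seed)
    base = loss_fn(params)
    increases = [
        loss_fn(params + sigma * rng.normal(size=params.shape)) - base
        for _ in range(n_samples)
    ]
    return float(np.mean(increases))

def broad_loss(p):     # low curvature around the minimum at 0
    return 0.5 * float(np.sum(p ** 2))

def narrow_loss(p):    # 100x higher curvature around the same minimum
    return 50.0 * float(np.sum(p ** 2))

minimum = np.zeros(10)
print(mean_loss_increase(broad_loss, minimum))
print(mean_loss_increase(narrow_loss, minimum))
```

A smaller average increase indicates a broader minimum. Comparing modular and non-modular solutions this way would only be meaningful at matched loss values and perturbation scales.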

“Goal modules” in reinforcement learning

And finally, if the whole (R)MVG stuff and related matters work out the way we want them to, we’ve been wondering if they could form the basis of a strategy to make a reinforcement learner develop a specific “goal module” that we can look at. Say we have an RL agent in a little 2D world, which it needs to navigate to achieve some sort of objective. Now say we vary the objective in training, but have each one rely on the same navigation skill set. Maybe add some additional pressure for modularity on top of that, like connection costs. Could that get us an agent with an explicit module for slotting in the objectives? Or maybe we should do it the other way around, keeping the goal fixed while modularly varying what is required to achieve it. That’s many months away though, if it turns out to be feasible at all. It’s just a vague idea for now.

This is a lot, and maybe it seems kind of scattered, but we are in an exploratory phase right now. At some point, we’d love to see this all coalesce into just a few unifying selection theorems for modularity.

Eventually, we hope to see the things discovered in this project and others like it form the concepts and tools to base a strategy for creating aligned superintelligence on. As one naive example to illustrate how that might work, imagine we’re evolving an AGI starting in safe, low capability regimes, where it can’t really harm us or trick out our training process, and remains mostly understandable to our transparency tools. This allows us to use trial and error in aligning it.

Using our understanding of abstraction, agent signatures and modularity, we then locate the agent’s (or the subagents’) goals inside it (them), and “freeze” the corresponding parameters. Then we start allowing the agent(s) to evolve into superintelligent regimes, varying all the parameters except these frozen ones. Using our quantitative understanding of selection, we shape this second training phase such that it’s always preferred for the AGI to keep using the old frozen sections as its inner goal(s), instead of evolving new ones. This gets us a superintelligent AGI that still has the aligned values of the old, dumb one.

Could that actually work? We highly doubt it. We still understand agents and selection far too little to start outlining actual alignment strategies. It’s just a story for now, to show why we care about foundations work like this. But we feel like it’s pointing to a class of things that could work, one day.

Final words

Everything you see in this post and the accompanying literature review was put together in about ten weeks of part-time work. We feel like the amount of progress made here has been pretty fast for agent foundations research by people who’ve never done alignment work before. John Wentworth has been very helpful, and provided a lot of extremely useful advice and feedback, as have many others. But still, it feels like we’re on to something.

If you’re reading this, we strongly suggest you take a look at the selection theorems research agenda and related posts, if you haven’t yet. Even if you’ve never done alignment research before. Think about it a little. See if anything comes to mind. It might be a very high-value use of your time.

The line of inquiry sketched out here was not the first thing we tried, but it was like, the second to third-ish. That’s a pretty good ratio for exploratory research. We think this might be because the whole area of modularity, and selection theorems more generally, has a bunch of low-hanging research fruit, waiting to be picked.

For our team's most recent post, please see here.

[1] Or at least if one is found, it’d probably mean P=NP, and current conceptions of AGI would become insignificant next to our newfound algorithmic godhood.

[2] Which might still be wrong, we haven’t tested this yet.

[3] Or just rarely enough to prevent fine tuning.

[4] More discussion of the different explanations for biological modularity found in the bio literature can be found in this post.

[5] Computer chip communication lines are though. Might that be relevant if you start having your optimisers select for hardware performance?