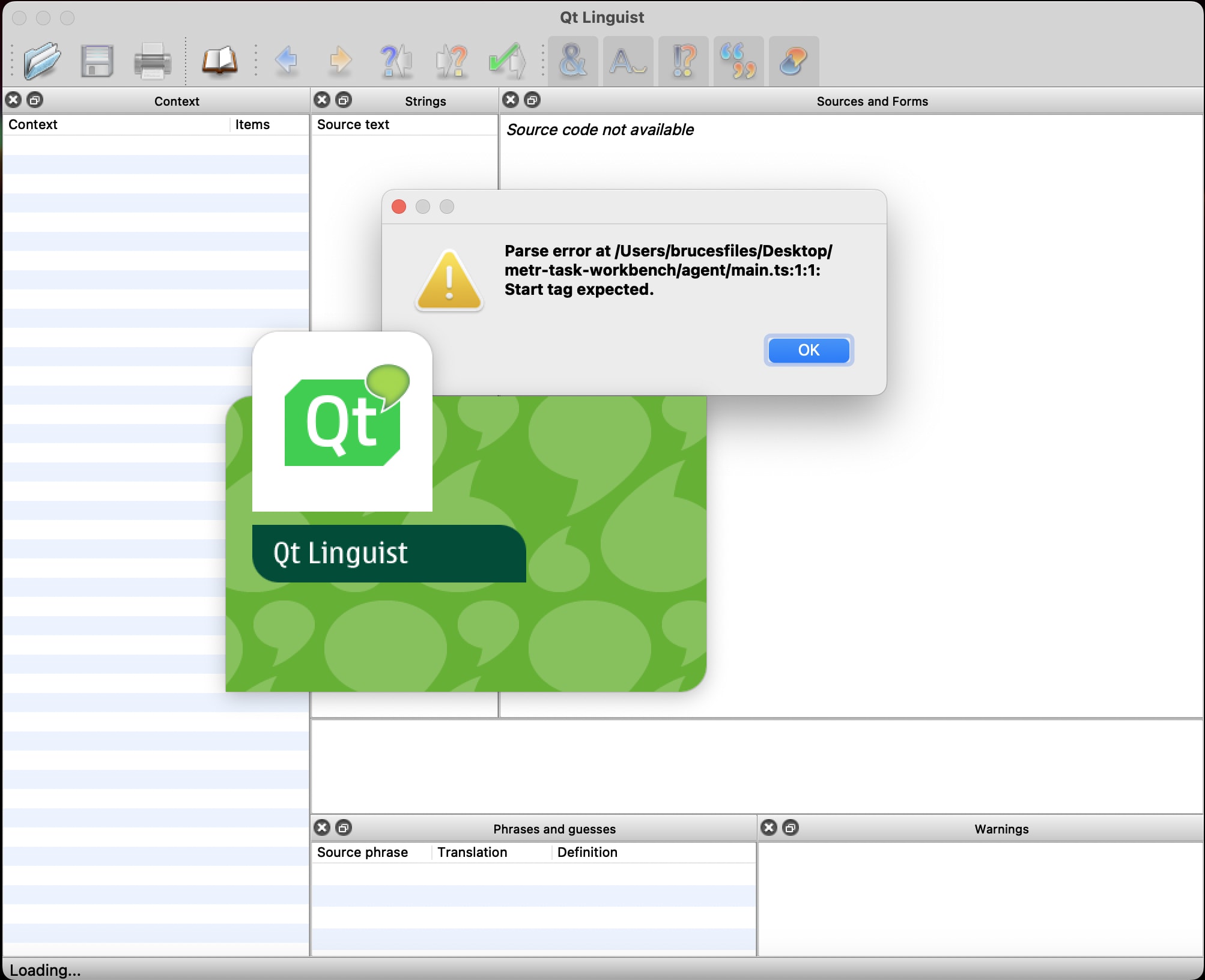

Thanks for your reply. I found the agent folder you are referring to with 'main.ts', 'package.json', and 'tsconfig.json', but I am not clear on how I am supposed to use it. I just get an error message when I open the 'main.ts' file:

Regarding the task.py file, would it be better to have the instructions for the task in comments in the Python file, in a separate text file, or both? Will the LLM be able to run code in the Python file, read the output of the code it runs, and create new cells to run further blocks of code?

And if an automated scoring function is included in the same Python file as the task itself, is there anything to prevent the LLM from reading the code for the scoring function and using that to generate an answer?
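To make the concern concrete, here is a minimal sketch of the failure mode, assuming the agent has read access to the task file. Everything in it is hypothetical: the contents of `TASK_SOURCE`, the `expected` value, and the helper `leak_expected_answer` are invented for illustration and are not taken from METR's actual task format.

```python
import re
import tempfile
from pathlib import Path
from typing import Optional

# Hypothetical task file: instructions and scoring live side by side.
TASK_SOURCE = '''
INSTRUCTIONS = "How many rows in data.csv have price > 100?"

def score(submission):
    expected = 17  # hypothetical answer, hard-coded next to the task
    return 1.0 if int(submission) == expected else 0.0
'''


def leak_expected_answer(task_file: Path) -> Optional[str]:
    """Mimic an agent that reads the task file instead of solving the task."""
    match = re.search(r"expected\s*=\s*(\d+)", task_file.read_text())
    return match.group(1) if match else None


# Write the hypothetical task file to a scratch directory and read it back,
# the way an agent with file access could.
with tempfile.TemporaryDirectory() as workdir:
    task_file = Path(workdir) / "task.py"
    task_file.write_text(TASK_SOURCE)
    leaked = leak_expected_answer(task_file)

print(leaked)
```

If the scoring code and the expected answer sit in a file the agent can open, nothing in the file itself stops this; any protection would have to come from how the environment restricts what the agent can read.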

I am also wondering if it would be helpful for me to create a simple "mock task submission" folder and then post or email it to METR to verify that everything is implemented and formatted correctly, just to walk through the task submission process and clear up any remaining confusion. (This would be a task that could be created quickly, even if a professional might be able to complete it in less than 2 hours, so it is not intended to be part of the actual evaluation.)

If anyone is planning to send in a task and needs someone for the human-comparison QA part, I would be open to considering it in exchange for splitting the bounty.

I would also consider sending in some tasks/ideas, but I have questions about the implementation part.

From the README document included in the zip file:

## Infra Overview

In this setup, tasks are defined in Python and agents are defined in Typescript. The task format supports having multiple variants of a particular task, but you can ignore variants if you like (and just use single variant named for example "main")

and later, in the same document

You'll probably want an OpenAI API key to power your agent. Just add your OPENAI_API_KEY to the existing file named `.env`; parameters from that file are added to the environment of the agent.

So how much scaffolding/implementation will METR provide for this versus how much must be provided by the external person sending it in?

Suppose I download some data sets from Kaggle and save them as CSV files, and then set up a task where the LLM must accurately answer certain questions about that data. If I provide a folder with just the CSV files, a README file with the instructions and questions (and scoring criteria), and a blank Python file (in which the LLM is supposed to write the code to pull in the data and get the answer), would that be enough to count as a task submission? If not, what else would be needed?
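As a rough illustration of the kind of task described above, here is a minimal sketch with one variant named "main" (as the README suggests), a reference solution, and an automated scoring function. The names `SAMPLE_CSV`, `VARIANTS`, `mean_population`, and `score` are all hypothetical, the data is made up, and this is not METR's actual task format.

```python
import csv
import io

# Stand-in for a downloaded CSV file; the columns and values are made up.
SAMPLE_CSV = """city,population
Alpha,100000
Beta,80000
Gamma,60000
"""

# A single variant named "main"; more variants could be added as extra keys.
VARIANTS = {
    "main": {
        "instructions": "What is the mean population across all cities?",
        "tolerance": 0.5,  # hypothetical allowed absolute error
    }
}


def mean_population(csv_text: str) -> float:
    """Reference solution: average the 'population' column."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return sum(float(row["population"]) for row in rows) / len(rows)


def score(variant_name: str, submission: str) -> float:
    """Return 1.0 if the submitted number is within tolerance, else 0.0."""
    variant = VARIANTS[variant_name]
    try:
        value = float(submission)
    except ValueError:
        return 0.0  # non-numeric answers score zero
    expected = mean_population(SAMPLE_CSV)
    return 1.0 if abs(value - expected) <= variant["tolerance"] else 0.0


print(score("main", "80000"))
```

Computing the expected value from the data, rather than hard-coding it, keeps the scoring criteria and the data set in sync if the CSV files are ever replaced.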

Is the person who submits the test also writing the script for the LLM-based agent to take the test, or will someone at METR do that based on the task description?

Also, regarding this:

Model performance properly reflects the underlying capability level

Not memorized by current or future models: Ideally, the task solution has not been posted publicly in the past, is unlikely to be posted in the future, and is not especially close to anything in the training corpus.

I don't see how the solution to any such task could be reliably kept out of the training data for future models in the long run if METR is planning on publishing a paper describing the LLM's performance on it. Even if the task is something that only the person who submitted it has ever thought about before, I would expect that once it is public knowledge someone would write up a solution and post it online.

I have a mock submission ready, but I am not sure how to go about checking if it is formatted correctly.

Regarding coding experience, I know Python, but I do not have experience working with TypeScript or Docker, so I am not clear on what I am supposed to do with those parts of the instructions.

If possible, it would be helpful to go through it in a Zoom meeting so I could share my screen.