All of Charlie Steiner's Comments + Replies

I don't get what experiment you are thinking about (most CoT end with the final answer, such that the summarized CoT often ends with the original final answer).

Hm, yeah, I didn't really think that through. How about giving a model a fraction of either its own precomputed chain of thought, or the summarized version, and plotting curves of accuracy and further tokens used vs. % of CoT given to it? (To avoid systematic error from summaries moving information around, doing this with a chunked version and comparing at each chunk seems like a good idea.)
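To make the chunked comparison concrete, here is a minimal sketch of how the prefixes could be generated. It assumes the original and summarized CoTs have already been split into aligned chunks; `cot_prefixes` is a hypothetical helper, and actually running the model on each prefix (and measuring accuracy and further tokens used) is left out.

```python
def cot_prefixes(chunks):
    """Yield (fraction_given, prefix_text) for 0, 1, ..., len(chunks) chunks.

    `chunks` is a non-empty list of strings: one CoT (original or summarized)
    already split into chunks. How to chunk is an assumption left to the caller.
    """
    n = len(chunks)
    if n == 0:
        raise ValueError("need at least one chunk")
    for k in range(n + 1):
        # The fraction is measured in chunks, not tokens, so the original and
        # summarized versions can be compared at the same chunk boundary.
        yield k / n, " ".join(chunks[:k])


# Placeholder chunks; real ones would come from the precomputed CoTs.
example = list(cot_prefixes(["first chunk", "second chunk"]))
```

Each prefix would then be handed to the model, once for the original CoT and once for the summary, and the two curves compared at matching chunk indices.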

Anyhow, thanks for the reply. I have now seen the last figure.

Do you have the performance on replacing CoTs with summarized CoTs without finetuning to produce them? Would be interesting.

"Steganography" I think gives the wrong picture of what I expect - it's not that the model would be choosing a deliberately obscure way to encode secret information. It's just that it's going to use lots of degrees of freedom to try to get better results, often not what a human would do.

A clean example would be sometimes including more tokens than necessary, so that it can do more parallel processing at those tokens. This is quite diff...

I have a lot of implicit disagreements.

Non-scheming misalignment is nontrivial to prevent and can have large, bad (and weird) effects.

This is because ethics isn't science, it doesn't "hit back" when the AI is wrong. So an AI can honestly mix up human systematic flaws with things humans value, in a way that will get approval from humans precisely because it exploits those systematic flaws.

Defending against this kind of "sycophancy++" failure mode doesn't look like defending against scheming. It looks like solving outer alignment really well.

Having good outer alignment incidentally prevents a lot of scheming. But the reverse isn't nearly as true.

I also would not say "reasoning about novel moral problems" is a skill (because of the is-ought distinction).

It's a skill the same way "being a good umpire for baseball" takes skills, despite baseball being a social construct.[1]

I mean, if you don't want to use the word "skill," and instead use the phrase "computationally non-trivial task we want to teach the AI," that's fine. But don't make the mistake of thinking that because of the is-ought problem there isn't anything we want to teach future AI about moral decision-making. Like, clearly we want to...

Condition 2: Given that M_1 agents are not initially alignment faking, they will maintain their relative safety until their deferred task is completed.

- It would be rather odd if AI agents' behavior wildly changed at the start of their deferred task unless they are faking alignment.

"Alignment" is a bit of a fuzzy word.

Suppose I have a human musician who's very well-behaved, a very nice person, and I put them in charge of making difficult choices about the economy and they screw up and implement communism (or substitute something you don't like, if you like c...

I don't think this has much direct application to alignment, because although you can build safe AI with it, it doesn't differentially get us towards the endgame of AI that's trying to do good things and not bad things. But it's still an interesting question.

It seems like the way you're thinking about this, there's some directed relations you care about (the main one being "this is like that, but with some extra details") between concepts, and something is "real"/"applied" if it's near the edge of this network - if it doesn't have many relations directed t...

Not being an author in any of those articles, I can only give my own take.

I use the term "weak to strong generalization" to talk about a more specific research-area-slash-phenomenon within scalable oversight (which I define like SO-2,3,4). As a research area, it usually means studying how a stronger student AI learns what a weaker teacher is "trying" to demonstrate, usually just with slight twists on supervised learning, and when that works well, that's the phenomenon.

It is not an alignment technique to me because the phrase "alignment technique" sounds li...

Could someone who thinks capabilities benchmarks are safety work explain the basic idea to me?

It's not all that valuable for my personal work to know how good models are at ML tasks. Is it supposed to be valuable to legislators writing regulation? To SWAT teams calculating when to bust down the datacenter door and turn the power off? I'm not clear.

But it sure seems valuable to someone building an AI to do ML research, to have a benchmark that will tell you where you can improve.

But clearly other people think differently than me.

I think the core argument is "if you want to slow down, or somehow impose restrictions on AI research and deployment, you need some way of defining thresholds. Also, most policymakers' cruxes appear to be that AI will not be a big deal, but if they thought it was going to be a big deal they would totally want to regulate it much more. Therefore, having policy proposals that can use future eval results as a triggering mechanism is politically more feasible, and also, epistemically helpful since it allows people who do think it will be a big deal to establish a track record".

I find these arguments reasonably compelling, FWIW.

Not representative of motivations for all people for all types of evals, but https://www.openphilanthropy.org/rfp-llm-benchmarks/, https://www.lesswrong.com/posts/7qGxm2mgafEbtYHBf/survey-on-the-acceleration-risks-of-our-new-rfps-to-study, https://docs.google.com/document/d/1UwiHYIxgDFnl_ydeuUq0gYOqvzdbNiDpjZ39FEgUAuQ/edit, and some posts in https://www.lesswrong.com/tag/ai-evaluations seem relevant.

One big reason I might expect an AI to do a bad job at alignment research is if it doesn't do a good job (according to humans) of resolving cases where humans are inconsistent or disagree. How do you detect this in string theory research? Part of the reason we know so much about physics is humans aren't that inconsistent about it and don't disagree that much. And if you go to sub-topics where humans do disagree, how do you judge its performance (because 'be very convincing to your operators' is an objective with a different kind of danger)?

Another potentia...

Thanks for the great reply :) I think we do disagree after all.

humans are definitionally the source of information about human values, even if it may be challenging to elicit this information from humans

Except about that - here we agree.

...Now, what this human input looks like could (and probably should) go beyond introspection and preference judgments, which, as you point out, can be unreliable. It could instead involve expert judgment from humans with diverse cultural backgrounds, deliberation and/or negotiation, incentives to encourage deep, reflecti

I sometimes come back to think about this post. Might as well write a comment.

Goodhart's law. You echo the common frame that an approximate value function is almost never good enough, and that's why Goodhart's law is a problem. Probably what I thought when I first read this post was that I'd just written a sequence about how human values live inside models of humans (whether our own models or an AI's), which makes that frame weird - weird to talk about an 'approximate value function' that's not really an approximation to anything specific. The Siren Worlds

Nice! Purely for my own ease of comprehension I'd have liked a little more translation/analogizing between AI jargon and HCI jargon - e.g. the phrase "active learning" doesn't appear in the post.

...

- Value Alignment: Ultimately, humans will likely need to continue to provide input to confirm that AI systems are indeed acting in accordance with human values. This is because human values continue to evolve. In fact, human values define a “slice” of data where humans are definitionally more accurate than non-humans (including AI). AI systems might get quite good a

Sorry, on my phone for a few days, but iirc in ch. 3 they consider the loss you get if you just predict according to the simplest hypothesis that matches the data (and show it's bounded).

Temperature 0 is also sometimes a convenient mathematical environment for proving properties of Solomonoff induction, as in Li and Vitanyi (pdf of textbook).

Fair enough.

Yes, it seems totally reasonable for bounded reasoners to consider hypotheses (where a hypothesis like 'the universe is as it would be from the perspective of prisoner #3' functions like treating prisoner #3 as 'an instance of me') that would be counterfactual or even counterlogical for more idealized reasoners.

Typical bounded reasoning weirdness is stuff like seeming to take some counterlogicals (e.g. different hypotheses about the trillionth digit of pi) seriously despite denying 1+1=3, even though there's a chain of logic connecting one to t...

Suppose there are a hundred copies of you, in different cells. At random, one will be selected - that one is going to be shot tomorrow. A guard notifies that one that they're going to be shot.

There is a mercy offered, though - there's a memory-eraser-ray handy. The one who knows they're going to be shot is given the option to erase their memory of the warning and everything that followed, putting them in the same information state, more or less, as any of the other copies.

"Of course!" They cry. "Erase my memory, and I could be any of them - why, when you shoot someone tomorrow, there's a 99% chance it won't even be me!"

Then the next day comes, and they get shot.

I agree with many of these criticisms about hype, but I think this rhetorical question should be non-rhetorically answered.

...No, that’s not how RL works. RL - in settings like REINFORCE for simplicity - provides a per-datapoint learning rate modifier. How does a per-datapoint learning rate multiplier inherently “incentivize” the trained artifact to try to maximize the per-datapoint learning rate multiplier? By rephrasing the question, we arrive at different conclusions, indicating that leading terminology like “reward” and “incentivized” led us astray.
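For reference, here is the "per-datapoint learning rate multiplier" reading of REINFORCE that the quote describes, written next to an ordinary log-likelihood (supervised) update. The notation is assumed here rather than taken from the quoted post: $\theta$ are the policy parameters, $\alpha$ the learning rate, and $R$ the return credited to the sampled action $a$ in state $s$.

$$
\begin{aligned}
\text{supervised:} \quad & \theta \leftarrow \theta + \alpha \, \nabla_\theta \log \pi_\theta(a \mid s) \\
\text{REINFORCE:} \quad & \theta \leftarrow \theta + \alpha \, R \, \nabla_\theta \log \pi_\theta(a \mid s)
\end{aligned}
$$

The two updates differ only by the factor $R$, which rescales the step for each sampled datapoint; a negative $R$ pushes the log-probability of that action down rather than up.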

Thanks for the detailed post!

I personally would have liked to see some mention of the classic 'outer' alignment questions that are subproblems of robustness and ELK. E.g. What counts as 'generalizing correctly'? -> How do you learn how humans want the AI to generalize? -> How do you model humans as systems that have preferences about how to model them?

Just riffing on this rather than starting a different comment chain:

If alignment_1 is "get AI to follow instructions" (as typically construed in a "good enough" sort of way) and alignment_2 is "get AI to do good things and not bad things," (also in a "good enough" sort of way, but with more assumed philosophical sophistication) I basically don't care about anyone's safety plan to get alignment_1 except insofar as it's part of a plan to get alignment_2.

Philosophical errors/bottlenecks can mean you don't know how to go from 1 to 2. Human safety pr...

The fact that latents are often related to their neighbors definitely seems to support your thesis, but it's not clear to me that you couldn't train a smaller, somewhat-lossy meta-SAE even on an idealized SAE, so long as the data distribution had rare events or rare properties you could throw away cheaply.

You could also play a similar game showing that latents in a larger SAE are "merely" compositions of latents in a smaller SAE.

So basically, I was left wanting a more mathematical perspective of what kinds of properties you're hoping for SAEs (or meta-SAEs)...

It would be interesting to meditate on the question "What kind of training procedure could you use to get a meta-SAE directly?" And I think answering this relies in part on mathematical specification of what you want.

At Apollo we're currently working on something that we think will achieve this. Hopefully will have an idea and a few early results (toy models only) to share soon.

Did you ever read Lara Buchak's book? Seems related.

Also, I'm not really intuition-pumped by the repeated mugging example. It seems similar to a mugging where Omega only shows up once, but asks you for a recurring payment.

A related issue might be asking if UDT-ish agents who use a computable approximation to the Solomonoff prior are reflectively stable - will they want to "lock out" certain hypotheses that involve lots of computation (e.g. universes provably trying to simulate you via search for simple universes that contain agents who endorse Solomonoff induction). And probably the answer is going to be "it depends," and you can do verbal argumentation for either option.

I worry about erasing self-other distinction of values. If I want an AI to get me a sandwich, I don't want the AI to get itself a sandwich.

It's easy to say "we'll just train the AI to have good performance (and thereby retain some self-other distinctions), and getting itself a sandwich would be bad performance so it won't learn to do that." But this seems untrustworthy for any AI that's learning human values and generalizing them to new situations. In fact the entire point is that you hope it will affect generalization behavior.

I also worry that the instan...

To add onto this comment, let’s say there’s a self-other overlap dial—e.g. a multiplier on the KL divergence or whatever.

- When the dial is all the way at the max setting, you get high safety and terribly low capabilities. The AI can’t explain things to people because it assumes they already know everything the AI knows. The AI can't conceptualize the idea that if Jerome is going to file the permits, then the AI should not itself also file the same permits. The AI wants to eat food, or else the AI assumes that Jerome does not want to eat food. The AI thinks it

Fun read!

This seems like it highlights that it's vital for current fine-tuned models to change the output distribution only a little (e.g. small KL divergence between base model and finetuned model). If they change the distribution a lot, they'll run into unintended optima, but the base distribution serves as a reasonable prior / reasonable set of underlying dynamics for the text to follow when the fine-tuned model isn't "spending KL divergence" to change its path.
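As a toy illustration of "spending KL divergence," the sketch below computes KL(finetuned || base) for made-up next-token distributions over a four-token vocabulary. The numbers and variable names are invented for illustration and do not come from any real model.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions over the same tokens."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Made-up next-token probabilities for one context.
base = [0.40, 0.30, 0.20, 0.10]
light_finetune = [0.45, 0.30, 0.17, 0.08]  # nudges the base distribution
heavy_finetune = [0.97, 0.01, 0.01, 0.01]  # collapses onto a single token

kl_light = kl_divergence(light_finetune, base)
kl_heavy = kl_divergence(heavy_finetune, base)
print(f"light: {kl_light:.4f} nats, heavy: {kl_heavy:.4f} nats")
```

The lightly fine-tuned distribution costs very little KL, so the base model still sets most of the dynamics; the collapsed one costs roughly a hundred times more at this single position.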

Except it's still weird how bad the reward model is - it's not like the reward model was trained based on the behavior it produced (like humans' genetic code was), it's just supervised learning on human reviews.

This was super interesting. I hadn't really thought about the tension between SLT and superposition before, but this is in the middle of it.

Like, there's nothing logically inconsistent with the best local basis for the weights being undercomplete while the best basis for the activations is overcomplete. But if both are true, it seems like the relationship to the data distribution has to be quite special (and potentially fragile).

Nice! There's definitely been this feeling with training SAEs that activation penalty+reconstruction loss is "not actually asking the computer for what we want," leading to fragility. TopK seems like it's a step closer to the ideal - did you subjectively feel confident when starting off large training runs?

Confused about section 5.3.1:

...To mitigate this issue, we sum multiple TopK losses with different values of k (Multi-TopK). For example, using L(k) + L(4k)/8 is enough to obtain a progressive code over all k′ (note however that training with Multi-TopK does

> [Tells complicated, indirect story about how to wind up with a corrigible AI]

> "Corrigibility is, at its heart, a relatively simple concept"

I'm not saying the default strategy of bumbling forward and hoping that we figure out tool AI as we go has a literal 0% chance of working. But from the tone of this post and the previous table-of-contents post, I was expecting a more direct statement of what sort of functional properties you mean by "corrigibility," and I feel like I got more of a "we'll know it when we see it" approach.

I think that guarding against rogue insiders and external attackers might mostly suffice for guarding against schemers. So if it turns out that it's really hard to convince labs that they need to be robust to schemers, we might be safe from human-level schemers anyway.

This would be nice (maybe buying us a whole several months until slightly-better schemers convince internal users to escalate their privileges), but it only makes sense if labs are blindly obstinate to all persuasion, rather than having a systematic bias. I know this is really just me dissecting a joke, but oh well :P

Wow, that's pessimistic. So in the future you imagine, we could build AIs that promote the good of all humanity, we just won't because if a business built that AI it wouldn't make as much money?

Nice. I tried to do something similar (except making everything leaky with polynomial tails), so

`y = (y+torch.sqrt(y**2+scale**2)) * (1+(y+threshold)/torch.sqrt((y+threshold)**2+scale**2)) / 4`

where the first part `(y+torch.sqrt(y**2+scale**2))` is a softplus, and the second part `(1+(y+threshold)/torch.sqrt((y+threshold)**2+scale**2))` is a leaky cutoff at the value threshold.
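For anyone who wants to poke at the shape of this function, here is a scalar, plain-Python transcription of the same expression (using `math.sqrt` in place of `torch.sqrt`). The function name `leaky_gate` and the parameter values are assumptions chosen for illustration, not settings from the actual experiments.

```python
import math


def leaky_gate(y, threshold, scale):
    """Scalar version of the expression above, split into its two factors."""
    # Softplus-like factor: about 2*y for y >> scale, with a polynomial tail
    # of about scale**2 / (2*|y|) for y << -scale.
    smooth_relu = y + math.sqrt(y**2 + scale**2)
    # Smooth step taking values in (0, 2), centered where y + threshold == 0.
    shifted = y + threshold
    smooth_step = 1 + shifted / math.sqrt(shifted**2 + scale**2)
    # Each factor saturates near 2x its "hard" version, hence the division by 4.
    return smooth_relu * smooth_step / 4


# Illustrative parameter values only.
for y in (-100.0, -1.0, 0.0, 1.0, 100.0):
    print(y, leaky_gate(y, threshold=1.0, scale=0.1))
```

Far above the cutoff the output is approximately `y`, and far below it the output decays toward zero polynomially rather than exponentially, which is the "leaky" part.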

But I don't think I got such clearly better results, so I'm going to have to read more thoroughly to see what else you were doing that I wasn't :)

Wouldn't other people also like to use an AI that can collaborate with them on complex topics? E.g. people planning datacenters, or researching RL, or trying to get AIs to collaborate with other instances of themselves to accurately solve real-world problems?

I don't think people working on alignment research assistants are planning to just turn it on and leave the building, they on average (weighted by money) seem to be imagining doing things like "explain an experiment in natural language and have an AI help implement it rapidly."

So I think both they and ...

Yeah, I don't know where my reading comprehension skills were that evening, but they weren't with me :P

Oh well, I'll just leave it as is as a monument to bad comments.

I think it's pretty tricky, because what matters to real networks is the cost difference between storing features pseudo-linearly (in superposition), versus storing them nonlinearly (in one of the host of ways it takes multiple nn layers to decode), versus not storing them at all. Calculating such a cost function seems like it has details that depend on the particulars of the network and training process, making it a total pain to try to mathematize (but maybe amenable to making toy models).

Neat, thanks. Later I might want to rederive the estimates using different assumptions - not only should the number of active features L be used in calculating average 'noise' level (basically treating it as an environment parameter rather than a design decision), but we might want another free parameter for how statistically dependent features are. If I really feel energetic I might try to treat the per-layer information loss all at once rather than bounding it above as the sum of information losses of individual features.

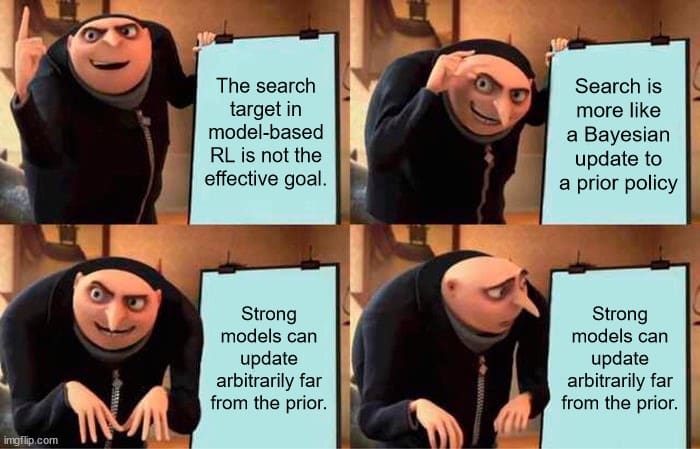

I feel like there's a somewhat common argument about RL not being all that dangerous because it generalizes the training distribution cautiously - being outside the training distribution isn't going to suddenly cause an RL system to make multi-step plans that are implied but never seen in the training distribution, it'll probably just fall back on familiar, safe behavior.

To me, these arguments feel like they treat present-day model-free RL as the "central case," and model-based RL as a small correction.

Anyhow, good post, I like most of the arguments, I just felt my reaction to this particular one could be made in meme format.

I hear you as saying "If we don't have to worry about teaching the AI to use human values, then why do sandwiching when we can measure capabilities more directly some other way?"

One reason is that with sandwiching, you can more rapidly measure capabilities generalization, because you can do things like collect the test set ahead of time or supervise with a special-purpose AI.

But if you want the best evaluation of a research assistant's capabilities, I agree using it as a research assistant is more reliable.

A separate issue I have here is the assumption th...

Non-deceptive failures are easy to notice, but they're not necessarily easy to eliminate - and if you don't eliminate them, they'll keep happening until some do slip through. I think I take them more seriously than you.

Or if you buy a shard-theory-esque picture of RL locking in heuristics, what heuristics can get locked in depends on what's "natural" to learn first, even when training from scratch.

Both of these hypotheses probably should come with caveats though. (About expected reliability, training time, model-free-ness, etc.)

The history is a little murky to me. When I wrote [what's the dream for giving natural-language commands to AI](https://www.lesswrong.com/posts/Bxxh9GbJ6WuW5Hmkj/what-s-the-dream-for-giving-natural-language-commands-to-ai), I think I was trying to pin down and critique (a version of) something that several other people had gestured to in a more offhand way, but I can't remember the primary sources. (Maybe Rohin's alignment newsletter between the announcement of GPT2 and then would contain the relevant links?)

This is what all that talk about predictive loss was for. Training on predictive loss gets you systems that are especially well-suited to being described as learning the time-evolution dynamics of the training distribution. Not in the sense that they're simulating the physical reality underlying the training distribution, merely in the sense that they're learning dynamics for the behavior of the training data.

Sure, you could talk about AlphaZero in terms of prediction. But it's not going to have the sort of configurability that makes the simulator framing ...

I can at least give you the short version of why I think you're wrong, if you want to chat lmk I guess.

Plain text: "GPT is a simulator."

Correct interpretation: "Sampling from GPT to generate text is a simulation, where the state of the simulation's 'world' is the text and GPT encodes learned transition dynamics between states of the text."

Mistaken interpretation: "GPT works by doing a simulation of the process that generated the training data. To make predictions, it internally represents the physical state of the Earth, and predicts the next token by appl...

Wild.

The difference in variability doesn't seem like it's enough to explain the generalization, if your PC-axed plots are on the same scale. But maybe that's misleading because the datapoints are still kinda muddled in the has_alice xor has_not plot, and separating them might require going to more dimensions, that have smaller variability.

Agree with simon that if the AI gets rich data about what counts as "measurement tampering," then you're sort of pushing around the loss basin but if tampering was optimal in the first place, the remaining optimum is still probably some unintended solution that has most effects of tampering without falling under the human-provided definition. Not only is there usually no bright-line distinction between undesired and desired behavior, the AI would be incentivized to avoid developing such a distinction.

I agree that this isn't actually that big a problem in m...

My take is that detecting bad behavior at test time is <5% of the problem, and >95% of the problem is making an AI that doesn't have bad behavior at test time.

If you want to detect and avoid bad behavior during training, Goodhart's law becomes a problem, and you actually need to learn your standards for "bad behavior" as you go rather than keeping them static. Which I think obviates a lot of the specificity you're saying advantages MTD.

Suppose the two agents are me and a flatworm.

a = ideal world according to me

b = status quo

c = ideal world according to the flatworm

d, e, f = various deliberately-bad-to-both worlds

I'm not going to stop trying to improve the world just because the flatworm prefers the status quo, and I wouldn't be "happy enough" if we ended up in flatworm utopia.

What bargains I would agree to, and how I would feel about them, are not safe to abstract away.

I disagree that translating to x and y lets you "reduce the degrees of freedom" or otherwise get any sort of discount lunch. At the end you still had to talk about the low level states again to say they should compromise on b (or not compromise and fight it out over c vs. a, that's always an option).

Not even if those people independently keep going higher in the abstraction hierarchy - they'll never converge to the same object, because there's always that inequivalence in how they're translated back to the low level description.

I mean, that's clearly not how it works in practice? Take the example in the post literally: two people disagree on food preferences, but can agree on the "food" abstraction and on both of them having a preference for subjectively tasty ones.

I agree with the part of what you just said that's the NAH, but disagree with your inte...

Sure, every time you go more abstract there are fewer degrees of freedom. But there's no free lunch - there are degrees of freedom in how the more-abstract variables are connected to less-abstract ones.

People who want different things might make different abstractions. E.g. if you're calling some high level abstraction "eat good food," it's not that this is mathematically the same abstraction made by someone who thinks good food is pizza and someone else who thinks good food is fish. Not even if those people independently keep going higher in the abstracti...

Good paper - even if it shows that the problem is hard!

Sounds like it might be worth it to me to spend time understanding the "confidence loss" to figure out what's going on. There's an obvious intuitive parallel to a human student going "Ah yes, I know what you're doing" - rounding off the teacher to the nearest concept the student is confident in. But it's not clear how good an intuition pump that is.

I agree with Roger that active learning seems super useful (especially for meta-preferences, my typical hobby-horse). It seems a lot easier for the AI to le...

Thanks!

Any thoughts on how this line of research might lead to "positive" alignment properties? (i.e. Getting models to be better at doing good things in situations where what's good is hard to learn / figure out, in contrast to a "negative" property of avoiding doing bad things, particularly in cases clear enough we could build a classifier for them.)