Was a philosophy PhD student, left to work at AI Impacts, then Center on Long-Term Risk, then OpenAI. Quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI. Now executive director of the AI Futures Project. I subscribe to Crocker's Rules and am especially interested to hear unsolicited constructive criticism. http://sl4.org/crocker.html

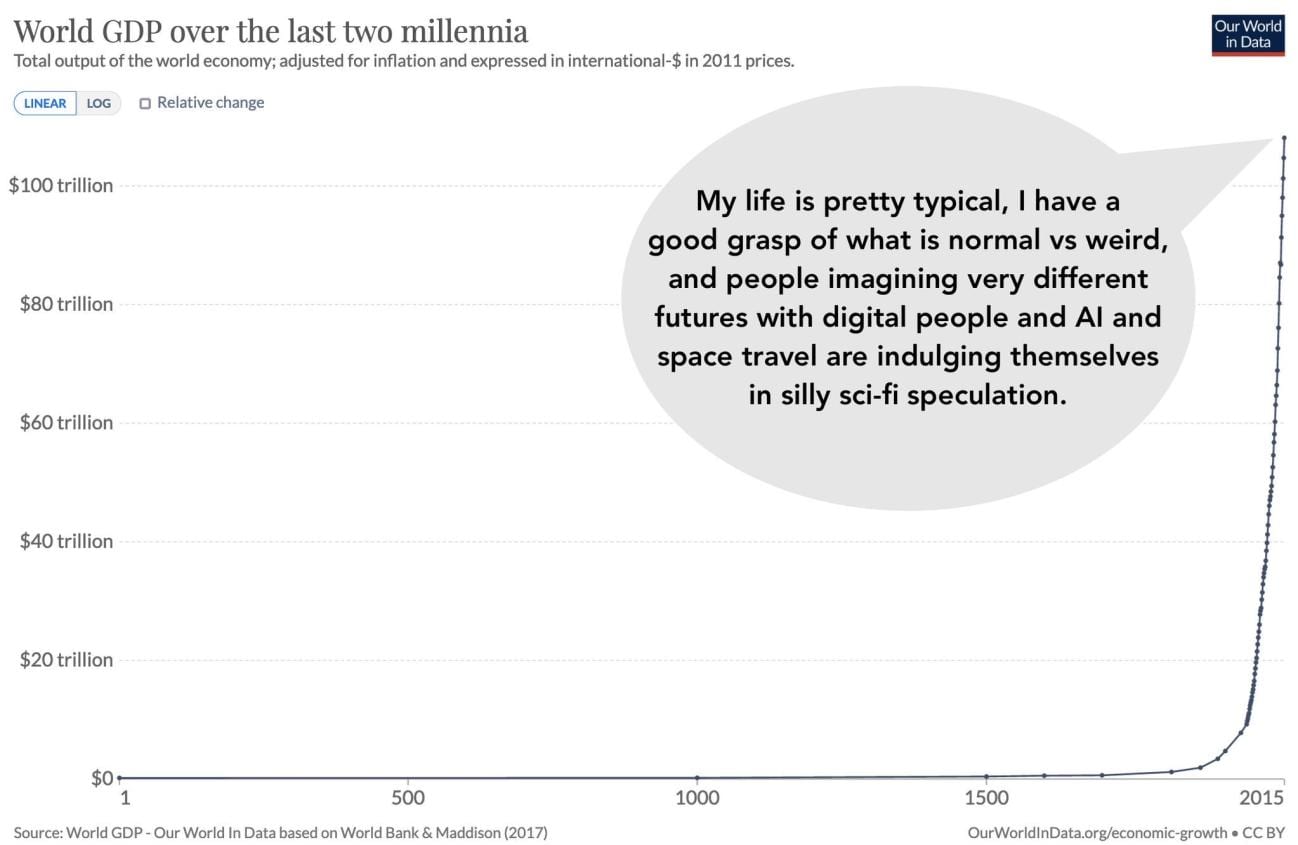

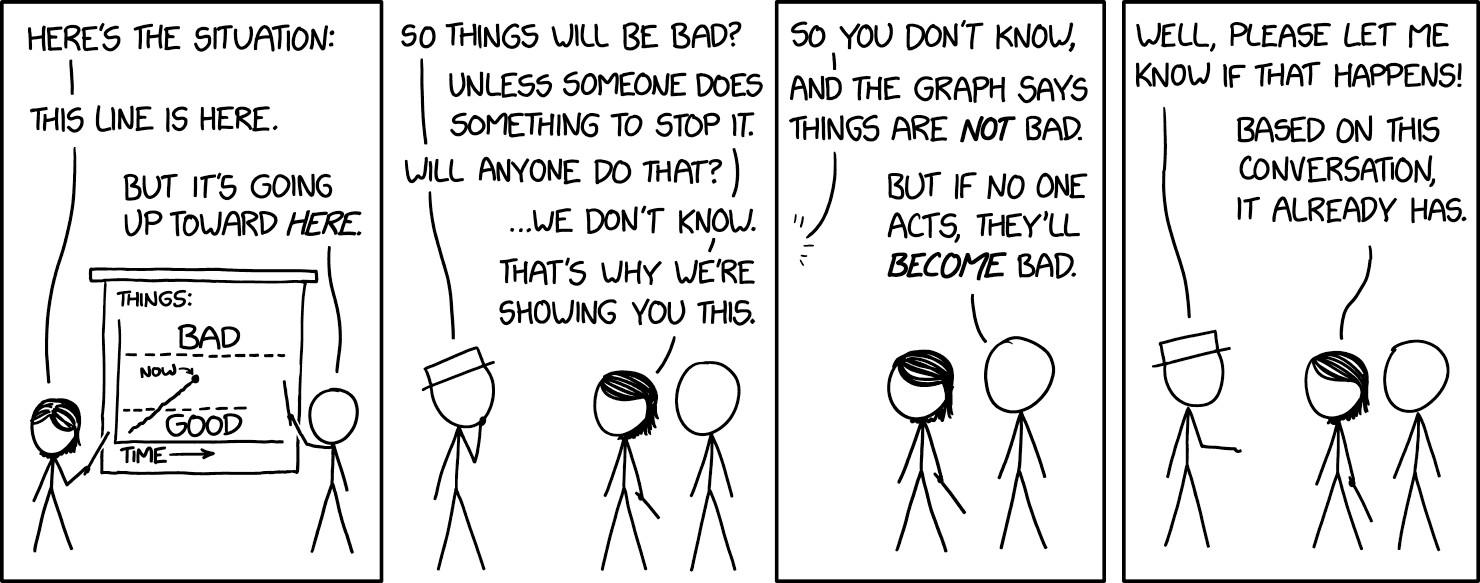

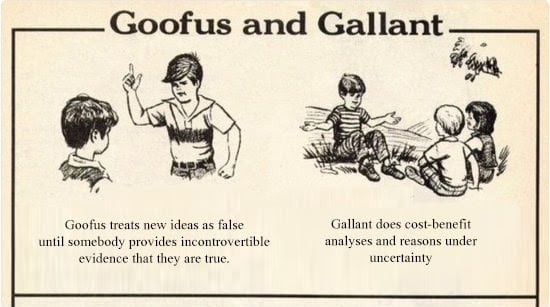

Some of my favorite memes:

(by Rob Wiblin)

(xkcd)

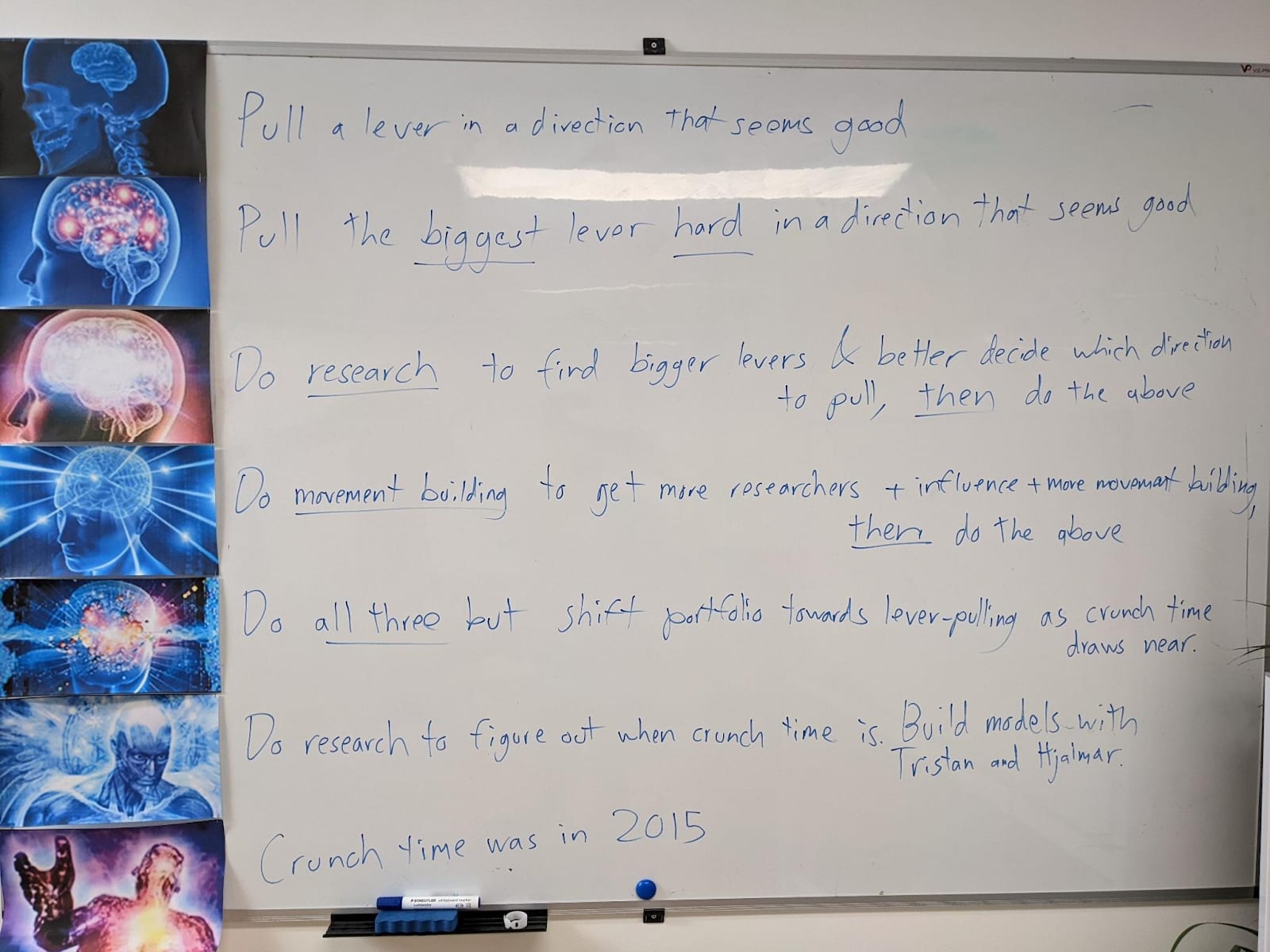

My EA Journey, depicted on the whiteboard at CLR:

(h/t Scott Alexander)

I found this comment helpful, thanks!

The bottom line is basically "Either we define horizon length in such a way that the trend has to be faster than exponential eventually (when we 'jump all the way to AGI') or we define it in such a way that some unknown finite horizon length matches the best humans and thus counts as AGI."

I think this discussion has overall made me less bullish on the conceptual argument and more interested in the intuition pump about the inherent difficulty of going from 1 to 10 hours being higher than the inherent difficulty of going from 1 to 10 years.

Great question. You are forcing me to actually think through the argument more carefully. Here goes:

Suppose we defined "t-AGI" as "An AI system that can do basically everything that professional humans can do in time t or less, and just as well, while being cheaper." And we said AGI is an AI that can do everything at least as well as professional humans, while being cheaper.

Well, then AGI = t-AGI for t=infinity. Because for anything professional humans can do, no matter how long it takes, AGI can do it at least as well.

Now, METR's definition is different. If I understand correctly, they made a dataset of AI R&D tasks, had humans give a baseline for how long it takes humans to do the tasks, and then had AIs do the tasks and found this nice relationship where AIs tend to be able to do tasks below time t but not above, for t which varies from AI to AI and increases as the AIs get smarter.

...I guess the summary is, if you think about horizon lengths as being relative to humans (i.e. the t-AGI definition above) then by definition you eventually "jump all the way to AGI" when you strictly dominate humans. But if you think of horizon length as being the length of task the AI can do vs. not do (*not* "as well as humans," just "can do at all") then it's logically possible for horizon lengths to just smoothly grow for the next billion years and never reach infinity.

So that's the argument-by-definition. There's also an intuition pump about the skills, which also was a pretty handwavy argument, but is separate.

I indeed think that AI assistance has been accelerating AI progress. However, so far the effect has been very small, like single-digit percentage points, so it won't be distinguishable in the data from zero. In the future, if trends continue, the effect will be large: possibly enough to more than counteract the effect of scaling slowing down, possibly not. We shall see.

I'm not sure if I understand what you are saying. It sounds like you are accusing me of thinking that skills are binary--either you have them or you don't. I agree, in reality many skills are scalar instead of binary; you can have them to greater or lesser degrees. I don't think that changes the analysis much though.

This is probably the most important single piece of evidence about AGI timelines right now. Well done! I think the trend should be superexponential, e.g. each doubling takes 10% less calendar time on average. Eli Lifland and I did some calculations yesterday suggesting that this would get to AGI in 2028. Will do more serious investigation soon.
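To make the "each doubling takes 10% less calendar time" idea concrete, here is a minimal sketch of the arithmetic. The function names and the 6-month first doubling are illustrative assumptions, not figures from the calculation with Eli, so this does not reproduce the 2028 estimate. It only shows the shape of the trend. The doubling times form a geometric series, so the total time for any number of doublings stays below a finite limit.

```python
def months_for_doublings(n_doublings, first_doubling_months, shrink=0.10):
    """Calendar months needed for n doublings of horizon length.

    Assumption (hypothetical model): each doubling takes `shrink` less
    calendar time than the one before it. shrink=0 is a plain exponential.
    """
    if shrink == 0:
        return first_doubling_months * n_doublings
    ratio = 1.0 - shrink
    # Sum of the geometric series d, d*r, d*r^2, ... (n terms).
    return first_doubling_months * (1.0 - ratio ** n_doublings) / (1.0 - ratio)


def months_for_unbounded_horizon(first_doubling_months, shrink=0.10):
    """Upper bound on the time for arbitrarily many doublings (needs shrink > 0)."""
    return first_doubling_months / shrink


if __name__ == "__main__":
    first = 6.0  # illustrative value only, not a measured doubling time
    print(months_for_doublings(4, first, shrink=0.0))   # plain exponential
    print(months_for_doublings(4, first, shrink=0.10))  # superexponential
    print(months_for_unbounded_horizon(first, shrink=0.10))
```

Under these assumed numbers, four doublings take about 20.6 months instead of 24, and the trend covers every finite horizon length within about 60 months. With a plain exponential there is no such limit.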

Why do I expect the trend to be superexponential? Well, it seems like it sorta has to go superexponential eventually. Imagine: We've gotten to AIs that can with ~100% reliability do tasks that take professional humans 10 years. But somehow they can't do tasks that take professional humans 160 years? And it's going to take 4 more doublings to get there? And these 4 doublings are going to take 2 more years to occur? No, at some point you "jump all the way" to AGI, i.e. AI systems that can do any length of task as well as professional humans -- 10 years, 100 years, 1000 years, etc.

Also, zooming in mechanistically on what's going on, insofar as an AI system can do tasks below length X but not above length X, it's gotta be for some reason -- some skill that the AI lacks, which isn't important for tasks below length X but which tends to be crucial for tasks above length X. But there are only a finite number of skills that humans have that AIs lack, and if we were to plot them on a horizon-length graph (where the x-axis is log of horizon length, and each skill is plotted on the x-axis where it starts being important, such that it's not important to have for tasks less than that length) the distribution of skills by horizon length would presumably taper off, with tons of skills necessary for pretty short tasks, a decent amount necessary for medium tasks (but not short), and a long thin tail of skills that are necessary for long tasks (but not medium), a tail that eventually goes to 0, probably around a few years on the x-axis. So assuming AIs learn skills at a constant rate, we should see acceleration rather than a constant exponential. There just aren't that many skills you need to operate for 10 days that you don't also need to operate for 1 day, compared to how many skills you need to operate for 1 hour that you don't also need to operate for 6 minutes.
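The skills argument above can be sketched as a toy model. Everything numeric here is a made-up assumption: the number of skills that first matter in each doubling of horizon length is taken to shrink geometrically, and AIs are taken to acquire skills at a constant rate. A geometric tail never hits exactly 0, unlike the tail described above, so this is a simplification.

```python
def doubling_times(n_doublings, skills_in_first_bin=100.0, decay=0.8,
                   skills_per_month=10.0):
    """Months needed to get through each successive doubling of horizon length.

    Hypothetical model: bin k (the k-th doubling) contains
    skills_in_first_bin * decay**k skills that first become important there,
    and skills are learned at a constant skills_per_month.
    """
    return [
        skills_in_first_bin * decay ** k / skills_per_month
        for k in range(n_doublings)
    ]


if __name__ == "__main__":
    for k, months in enumerate(doubling_times(6)):
        print(f"doubling {k}: {months:.2f} months")
```

Because each later bin holds fewer new skills, each doubling takes less calendar time than the last. That is the acceleration claimed above, and it depends entirely on the assumed tapering of the skill distribution.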

There are two other factors worth mentioning which aren't part of the above: One, the projected slowdown in capability advances that'll come as compute and data scaling falters due to becoming too expensive. And two, pointing in the other direction, the projected speedup in capability advances that'll come as AI systems start substantially accelerating AI R&D.

Since '23 my answer to that question would have been "well the first step is for researchers like you to produce [basically exactly the paper OpenAI just produced]."

So that's done. Nice. There are lots of follow-up experiments that can be done.

I don't think trying to shift the market/consumers as a whole is very tractable.

But talking to your friends at the companies, getting their buy-in, seems valuable.

Great stuff, thank you! It's good news that training on paraphrased scratchpads doesn't hurt performance. But you know what would be even better? Paraphrasing the scratchpad at inference time, and still not hurting performance.

That is, take the original reasoning Claude and instead of just having it do regular autoregressive generation, every chunk or so pause & have another model paraphrase the latest chunk, and then unpause and keep going. So Claude doesn't really see the text it wrote, it sees paraphrases of the text it wrote. If it still gets to the right answer in the end with about the same probability, then that means it's paying attention to the semantics of the text in the CoT and not to the syntax.
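Here is a rough sketch of the loop I have in mind, to pin down what "paraphrase at inference time" means. The three callables (`generate_chunk`, `paraphrase`, `is_done`) are hypothetical stand-ins for the reasoning model, the paraphraser model, and a stopping check. They are not real APIs, and the actual experimental setup may differ.

```python
from typing import Callable


def generate_with_paraphrasing(
    prompt: str,
    generate_chunk: Callable[[str], str],  # hypothetical: reasoning model, one chunk per call
    paraphrase: Callable[[str], str],      # hypothetical: separate paraphraser model
    is_done: Callable[[str], bool],        # hypothetical: does this raw chunk end the rollout?
    max_chunks: int = 50,
) -> str:
    """Run a rollout in which the model only ever sees paraphrases of its own CoT."""
    context = prompt
    for _ in range(max_chunks):
        chunk = generate_chunk(context)  # the model writes the next chunk
        rewritten = paraphrase(chunk)    # another model rewords that chunk
        context += rewritten             # the original wording is discarded
        if is_done(chunk):
            break
    return context


if __name__ == "__main__":
    # Stub models, just to show the control flow.
    steps = iter(["think a. ", "think b. ", "ANSWER"])
    result = generate_with_paraphrasing(
        "Q: ...\n",
        generate_chunk=lambda ctx: next(steps),
        paraphrase=lambda text: text.upper(),
        is_done=lambda text: text == "ANSWER",
    )
    print(result)
```

The test is then to compare final-answer accuracy between this loop and ordinary generation on the same problems.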

...Oh I see, you did an exploratory version of this and the result was bad news: it did degrade performance. Hmm.

Well, I think it's important to do and publish a more thorough version of this experiment anyway. And also then a followup: Maybe the performance penalty goes away with a bit of fine-tuning? Like, train Claude to solve problems successfully despite having the handicap that a paraphraser is constantly intervening on its CoT during rollouts.

Awesome work!

In this section, you describe what seems at first glance to be an example of a model playing the training game and/or optimizing for reward. I'm curious if you agree with that assessment.

So the model learns to behave in ways that it thinks the RM will reinforce, not just ways the RM actually reinforces. Right? This seems at least fairly conceptually similar to playing the training game, and at least some evidence that reward can sometimes become the optimization target?