Wikitag Contributions

it feels less surgical than a single direction everywhere

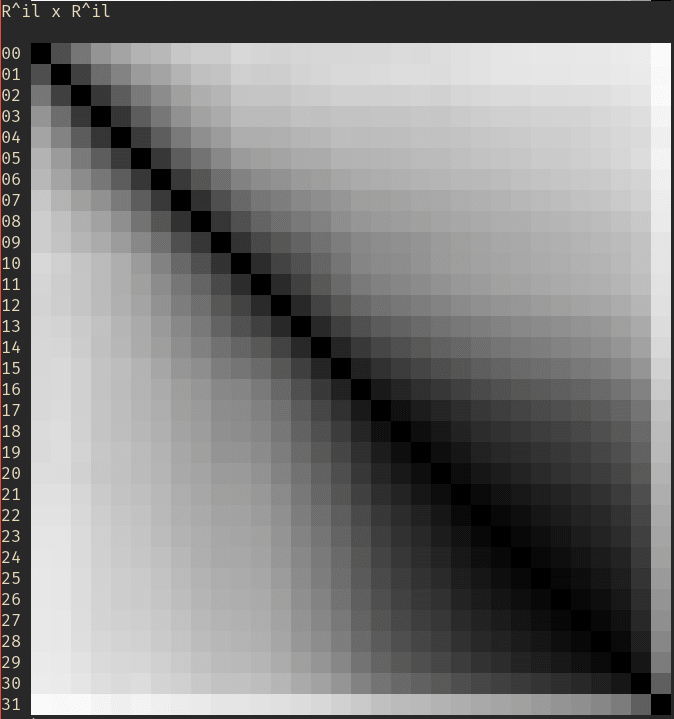

Agreed, it seems less elegant. But one guy on Hugging Face did a rough plot of the cross-correlation, and it seems to show that the direction changes with layer: https://huggingface.co/posts/Undi95/318385306588047#663744f79522541bd971c919. Although perhaps we are missing something.
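For anyone who wants to run that kind of check themselves, here is a minimal sketch of a layer-by-layer comparison. It uses cosine similarity as a stand-in for the cross-correlation in the linked plot, which may have been computed differently. The `toy_directions` values are made up for illustration; real directions would be one `d_model`-sized vector per layer.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def layer_similarity_matrix(directions):
    """Pairwise cosine similarity between per-layer direction vectors."""
    return [[cosine_similarity(u, v) for v in directions] for u in directions]


# Hypothetical toy directions, one per layer (not real model data).
toy_directions = [
    [1.0, 0.0, 0.0],
    [0.8, 0.6, 0.0],
    [0.0, 1.0, 0.0],
]

sims = layer_similarity_matrix(toy_directions)
for row in sims:
    print(" ".join(f"{s:5.2f}" for s in row))
```

If the off-diagonal entries fall well below 1 as the layers get further apart, that would be consistent with the direction drifting across layers rather than being a single shared one.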

Note that you can just do torch.save(model.state_dict(), FILE_PATH) as with any PyTorch model.
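A minimal sketch of that save/load round trip, in case it helps. The `nn.Linear` model and the temporary `file_path` are placeholders for illustration, not the model or path from the gist.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Stand-in for the edited model; any nn.Module is saved the same way.
model = nn.Linear(4, 2)

# Hypothetical output location, used only for this example.
file_path = os.path.join(tempfile.mkdtemp(), "model_state.pt")

# torch.save takes the object first and the destination second.
torch.save(model.state_dict(), file_path)

# Load the weights back into a freshly constructed model of the same shape.
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(file_path))
```

Saving the `state_dict` rather than the whole module keeps the file independent of the class definition, but you do need to rebuild the same architecture before loading.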

omg, I totally missed that, thanks. Let me know if I missed anything else, I just want to learn.

The older versions of the gist are in TransformerLens, if anyone wants those versions. In those the interventions work better since you can target resid_pre, resid_mid, etc.
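To make "intervention" concrete, here is a rough sketch of what could be applied at one of those hook points, assuming the intervention is projecting a single direction out of the residual stream. `ablate_direction` is a hypothetical helper written in plain PyTorch, not a TransformerLens or baukit function, and the tensors are random placeholders.

```python
import torch


def ablate_direction(activations, direction):
    """Remove the component of `activations` along `direction`.

    activations: tensor of shape (..., d_model)
    direction:   tensor of shape (d_model,)
    """
    unit = direction / direction.norm()
    # Scalar projection of each activation vector onto the unit direction.
    coeff = activations @ unit
    return activations - coeff.unsqueeze(-1) * unit


# Placeholder shapes: batch of 2, 3 positions, d_model of 8.
torch.manual_seed(0)
acts = torch.randn(2, 3, 8)
direction = torch.randn(8)

ablated = ablate_direction(acts, direction)
```

After the call, `ablated` has no component left along `direction`; the same function could be applied to whichever activation (resid_pre, resid_mid, and so on) the hook exposes.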

If anyone wants to try this on Llama-3 8B, I converted the Colab to baukit, and it's available here.

Are these really pure base models? I've also noticed this kind of behaviour in so-called base models. My conclusion is that they are not base models in the sense that they have been trained to predict the next word on the internet, but they have undergone some safety fine-tuning before release. We don't actually know how they were trained, and I am suspicious. It might be best to test on Pythia or some model where we actually know.