I'm currently working as a contractor at Anthropic in order to get employee-level model access as part of a project I'm working on. The project is a model organism of scheming, where I demonstrate scheming arising somewhat naturally with Claude 3 Opus. So far, I’ve done almost all of this project at Redwood Research, but my access to Anthropic models will allow me to redo some of my experiments in better and simpler ways and will allow for some exciting additional experiments. I'm very grateful to Anthropic and the Alignment Stress-Testing team for providing this access and supporting this work. I expect that this access and the collaboration with various members of the Alignment Stress-Testing team (primarily Carson Denison and Evan Hubinger so far) will be quite helpful in finishing this project.

I think that this sort of arrangement, in which an outside researcher is able to get employee-level access at some AI lab while not being an employee (while still being subject to confidentiality obligations), is potentially a very good model for safety research, for a few reasons, including (but not limited to):

- For some safety research, it’s helpful to have model access in ways that labs don’t provide externally. Giving employee-level access to researchers working at external organizations can allow these researchers to avoid potential conflicts of interest and undue influence from the lab. This might be particularly important for researchers working on RSPs, safety cases, and similar, because these researchers might naturally evolve into third-party evaluators.

- Related to undue influence concerns, an unfortunate downside of doing safety research at a lab is that you give the lab the opportunity to control the narrative around the research and use it for their own purposes. This concern seems substantially addressed by getting model access through a lab as an external researcher.

- I think this could make it easier to avoid duplicating work between various labs. I’m aware of some duplication that could potentially be avoided by ensuring more work happened at external organizations.

For these and other reasons, I think that external researchers with employee-level access is a promising approach for ensuring that safety research can proceed quickly and effectively while reducing conflicts of interest and unfortunate concentration of power. I’m excited for future experimentation with this structure and appreciate that Anthropic was willing to try this. I think it would be good if other labs beyond Anthropic experimented with this structure.

(Note that this message was run by the comms team at Anthropic.)

Yay Anthropic. This is the first example I'm aware of of a lab sharing model access with external safety researchers to boost their research (like, not just for evals). I wish the labs did this more.

[Edit: OpenAI shared GPT-4 access with safety researchers including Rachel Freedman before release. OpenAI shared GPT-4 fine-tuning access with academic researchers including Jacob Steinhardt and Daniel Kang in 2023. Yay OpenAI. GPT-4 fine-tuning access is still not public; some widely-respected safety researchers I know recently were wishing for it, and were wishing they could disable content filters.]

OpenAI did this too, with GPT-4 pre-release. It was a small program, though — I think just 5-10 researchers.

I'd be surprised if this was employee-level access. I'm aware of a red-teaming program that gave early API access to specific versions of models, but not anything like employee-level.

It was a secretive program: it wasn’t advertised anywhere, and we had to sign an NDA about its existence (which we have since been released from). I got the impression that this was because OpenAI really wanted to keep the existence of GPT-4 under wraps. Anyway, that means I don’t have any proof beyond my word.

I thought it would be helpful to post about my timelines and what the timelines of people in my professional circles (Redwood, METR, etc) tend to be.

Concretely, consider the outcome of: AI 10x’ing labor for AI R&D[1], measured by internal comments by credible people at labs that AI is 90% of their (quality adjusted) useful work force (as in, as good as having your human employees run 10x faster).

Here are my predictions for this outcome:

- 25th percentile: 2 years (Jan 2027)

- 50th percentile: 5 years (Jan 2030)

The views of other people (Buck, Beth Barnes, Nate Thomas, etc) are similar.

I expect that outcomes like “AIs are capable enough to automate virtually all remote workers” and “the AIs are capable enough that immediate AI takeover is very plausible (in the absence of countermeasures)” come shortly after (median 1.5 years and 2 years after respectively under my views).

Only including speedups due to R&D, not including mechanisms like synthetic data generation. ↩︎

AI is 90% of their (quality adjusted) useful work force (as in, as good as having your human employees run 10x faster).

I don't grok the "% of quality adjusted work force" metric. I grok the "as good as having your human employees run 10x faster" metric but it doesn't seem equivalent to me, so I recommend dropping the former and just using the latter.

Fair, I really just mean "as good as having your human employees run 10x faster". I said "% of quality adjusted work force" because this was the original way this was stated when a quick poll was done, but the ultimate operationalization was in terms of 10x faster. (And this is what I was thinking.)
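For what it's worth, here is a minimal sketch of one reading under which the two phrasings line up. It assumes (this is an assumption added for illustration, not something the thread establishes) that AI and human labor are fungible and additive, so that an AI share `ai_share` of total quality-adjusted labor implies a total of `1 / (1 - ai_share)` times the human-only labor. The function name is hypothetical.

```python
def labor_multiplier(ai_share: float) -> float:
    """Total labor as a multiple of human-only labor, assuming AI and human
    labor simply add up (an illustrative assumption)."""
    if not 0.0 <= ai_share < 1.0:
        raise ValueError("ai_share must be in [0, 1)")
    return 1.0 / (1.0 - ai_share)


if __name__ == "__main__":
    # 90% of the quality-adjusted workforce being AI corresponds to roughly 10x.
    print(f"{labor_multiplier(0.9):.1f}x")
```

If labor is not additive in this way (for example, if faster serial work is worth more than extra parallel workers), the two metrics can come apart, which is one reason to prefer the "run 10x faster" operationalization.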

My timelines are now roughly similar on the object level (maybe a year slower for 25th and 1-2 years slower for 50th), and procedurally I also now defer a lot to Redwood and METR engineers. More discussion here: https://www.lesswrong.com/posts/K2D45BNxnZjdpSX2j/ai-timelines?commentId=hnrfbFCP7Hu6N6Lsp

I'd guess that xAI, Anthropic, and GDM are more like 5-20% faster all around (with much greater acceleration on some subtasks). It seems plausible to me that the acceleration at OpenAI is already much greater than this (e.g. more like 1.5x or 2x), or will be after some adaptation due to OpenAI having substantially better internal agents than what they've released. (I think this is due to updates from o3 and general vibes.)

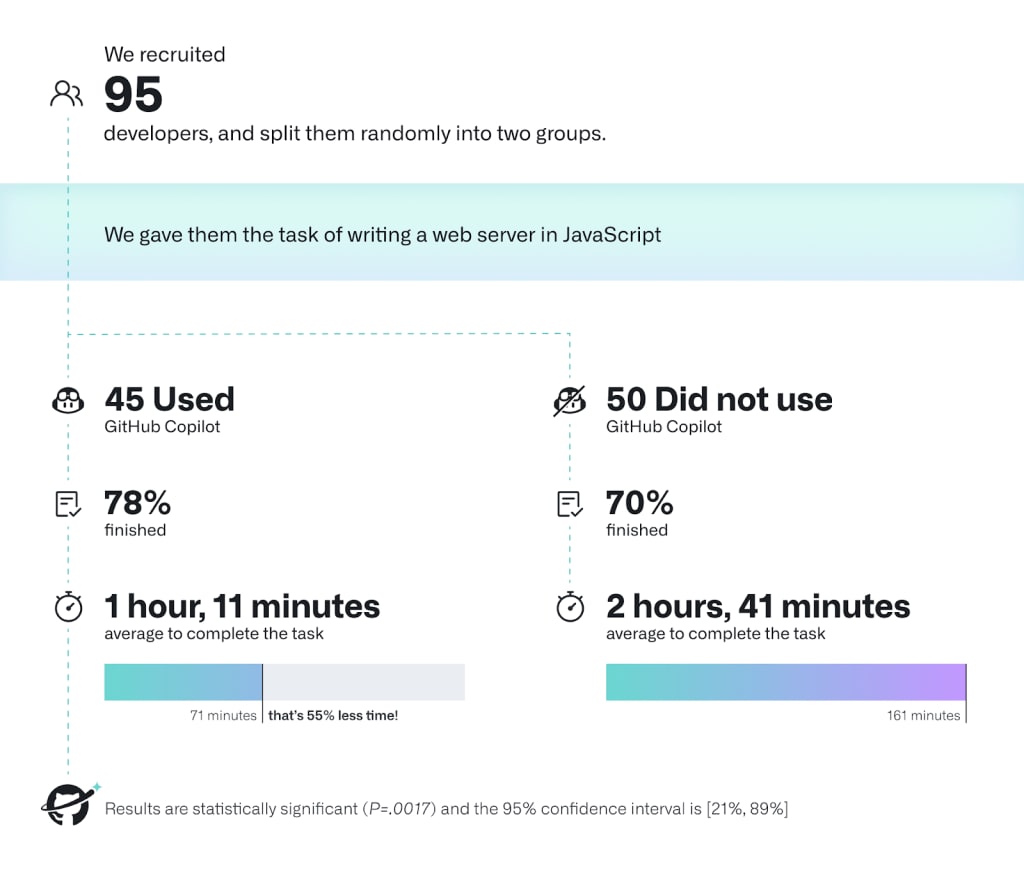

This case seems extremely cherry picked for cases where uplift is especially high. (Note that this is in copilot's interest.) Now, this task could probably be solved autonomously by an AI in like 10 minutes with good scaffolding.

I think you have to consider the full, diverse range of tasks to get a reasonable sense, or at least consider harder tasks. Like, RE-bench seems much closer, but I still expect uplift on RE-bench to probably (but not certainly!) considerably overstate real-world speed-up.

Yeah, fair enough. I think someone should try to do a more representative experiment and we could then monitor this metric.

btw, something that bothers me a little bit with this metric is the fact that a very simple AI that just asks me periodically "Hey, do you endorse what you are doing right now? Are you time boxing? Are you following your plan?" makes me (I think) significantly more strategic and productive. Similar to "I hired 5 people to sit behind me and make me productive for a month". But this is maybe off topic.

btw, something that bothers me a little bit with this metric is the fact that a very simple AI ...

Yes, but I don't see a clear reason why people (working in AI R&D) will in practice get this productivity boost (or other very low hanging things) if they don't get around to getting the boost from hiring humans.

Thanks for this - I'm in a more peripheral part of the industry (consumer/industrial LLM usage, not directly at an AI lab), and my timelines are somewhat longer (5 years for 50% chance), but I may be using a different criterion for "automate virtually all remote workers". It'll be a fair bit of time (in AI frame - a year or ten) between "labs show generality sufficient to automate most remote work" and "most remote work is actually performed by AI".

A key dynamic is that I think massive acceleration in AI is likely after the point when AIs can accelerate labor working on AI R&D. (Due to all of: the direct effects of accelerating AI software progress, this acceleration rolling out to hardware R&D and scaling up chip production, and potentially greatly increased investment.) See also here and here.

So, you might very quickly (1-2 years) go from "the AIs are great, fast, and cheap software engineers speeding up AI R&D" to "wildly superhuman AI that can achieve massive technical accomplishments".

Inference compute scaling might imply we first get fewer, smarter AIs.

Prior estimates imply that the compute used to train a future frontier model could also be used to run tens or hundreds of millions of human equivalents per year at the first time when AIs are capable enough to dominate top human experts at cognitive tasks[1] (examples here from Holden Karnofsky, here from Tom Davidson, and here from Lukas Finnveden). I think inference time compute scaling (if it worked) might invalidate this picture and might imply that you get far smaller numbers of human equivalents when you first get performance that dominates top human experts, at least in short timelines where compute scarcity might be important. Additionally, this implies that at the point when you have abundant AI labor which is capable enough to obsolete top human experts, you might also have access to substantially superhuman (but scarce) AI labor (and this could pose additional risks).

The point I make here might be obvious to many, but I thought it was worth making as I haven't seen this update from inference time compute widely discussed in public.[2]

However, note that if inference compute allows for trading off between quantity of tasks completed and the difficulty of tasks that can be completed (or the quality of completion), then depending on the shape of the inference compute returns curve, at the point when we can run some AIs as capable as top human experts, it might be worse to run many (or any) AIs at this level of capability rather than using less inference compute per task and completing more tasks (or completing tasks serially faster).

Further, efficiency might improve quickly such that we don't have a long regime with only a small number of human equivalents. I do a BOTEC on this below.

I'll do a specific low-effort BOTEC to illustrate my core point that you might get far smaller quantities of top human expert-level performance at first. Suppose that we first get AIs that are ~10x human cost (putting aside inflation in compute prices due to AI demand) and as capable as top human experts at this price point (at tasks like automating R&D). If this is in ~3 years, then maybe you'll have $15 million/hour worth of compute. Supposing $300/hour human cost, then we get ($15 million/hour) / ($300/hour) / (10 times human cost per compute dollar) * (4 AI hours / human work hours) = 20k human equivalents. This is a much smaller number than prior estimates.

The estimate of $15 million/hour worth of compute comes from: OpenAI spent ~$5 billion on compute this year, so $5 billion / (24*365) = $570k/hour; spend increases by ~3x per year, so $570k/hour * 3³ = $15 million/hour.

The estimate for 3x per year comes from: total compute is increasing by 4-5x per year, but some is hardware improvement and some is increased spending. Hardware improvement is perhaps ~1.5x per year and 4.5/1.5 = 3. This at least roughly matches this estimate from Epoch which estimates 2.4x additional spend (on just training) per year. Also, note that Epoch estimates 4-5x compute per year here and 1.3x hardware FLOP/dollar here, which naively implies around 3.4x, but this seems maybe too high given the prior number.
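The arithmetic in this BOTEC can be reproduced with a short script. This is only a sketch: all numbers are the ones quoted above, and the function and variable names are made up for illustration.

```python
HOURS_PER_YEAR = 24 * 365


def spend_growth_per_year(compute_growth: float, hardware_growth: float) -> float:
    """Spending growth implied by total compute growth net of hardware gains."""
    return compute_growth / hardware_growth


def compute_usd_per_hour(annual_spend_usd: float, growth_per_year: float, years: float) -> float:
    """Hourly compute spend after `years` of growth at `growth_per_year`."""
    return annual_spend_usd / HOURS_PER_YEAR * growth_per_year ** years


def human_equivalents(compute_usd_hr: float, human_usd_hr: float,
                      ai_cost_multiple: float, ai_hours_per_human_hour: float) -> float:
    """Human equivalents affordable at a given hourly compute budget."""
    return compute_usd_hr / human_usd_hr / ai_cost_multiple * ai_hours_per_human_hour


growth = spend_growth_per_year(4.5, 1.5)        # ~3x per year
growth_epoch = spend_growth_per_year(4.5, 1.3)  # the naive Epoch-based figure
spend_now = compute_usd_per_hour(5e9, growth, 0)
spend_3y = compute_usd_per_hour(5e9, growth, 3)
# The text rounds the 3-year figure to $15 million/hour before dividing.
equivalents = human_equivalents(15e6, 300, 10, 4)

if __name__ == "__main__":
    print(f"spend growth: {growth:.2f}x/yr (naive Epoch-based: {growth_epoch:.2f}x/yr)")
    print(f"compute spend now: ${spend_now:,.0f}/hour")
    print(f"compute spend in 3 years: ${spend_3y:,.0f}/hour")
    print(f"human equivalents: {equivalents:,.0f}")
```

Swapping in the unrounded 3-year spend instead of $15 million/hour changes the result only slightly, so the ~20k figure is not sensitive to that rounding.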

Earlier, I noted that efficiency might improve rapidly. We can look at recent efficiency changes to get a sense for how fast. GPT-4o mini is roughly 100x cheaper than GPT-4 and is perhaps roughly as capable altogether (probably lower intelligence but better elicited). It was trained roughly 1.5 years later (GPT-4 was trained substantially before it was released) for ~20x efficiency improvement per year. This is selected for being a striking improvement and probably involves low-hanging fruit, but AI R&D will be substantially accelerated in the future, which probably more than cancels this out. Further, I expect that inference compute will be inefficient in the tail of high inference compute such that efficiency improvements will be faster than this once the capability is reached. So we might expect that the number of AI human equivalents increases by >20x per year and potentially much faster if AI R&D is greatly accelerated (and compute doesn't bottleneck this). If progress is "just" 50x per year, then it would still take a year to get to millions of human equivalents based on my earlier estimate of 20k human equivalents. Note that once you have millions of human equivalents, you also have increased availability of generally substantially superhuman AI systems.
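As a quick check on these rates, here is a small sketch that annualizes the 100x-over-1.5-years figure and computes how long it takes to go from 20k to one million human equivalents at a given yearly growth factor. It assumes smooth exponential growth, which is a simplification; the function names are hypothetical.

```python
import math


def annualized_factor(total_factor: float, years: float) -> float:
    """Per-year improvement factor implied by a total factor over `years`."""
    return total_factor ** (1.0 / years)


def years_to_reach(start: float, target: float, growth_per_year: float) -> float:
    """Years of exponential growth needed to go from `start` to `target`."""
    return math.log(target / start) / math.log(growth_per_year)


per_year = annualized_factor(100, 1.5)          # roughly 20x per year
years_at_50x = years_to_reach(20e3, 1e6, 50)    # 20k -> 1 million at 50x/yr
years_at_20x = years_to_reach(20e3, 1e6, 20)    # same target at 20x/yr

if __name__ == "__main__":
    print(f"annualized efficiency gain: {per_year:.1f}x per year")
    print(f"years to 1M human equivalents at 50x/yr: {years_at_50x:.2f}")
    print(f"years to 1M human equivalents at 20x/yr: {years_at_20x:.2f}")
```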

I'm referring to the notion of Top-human-Expert-Dominating AI that I define in this post, though without a speed/cost constraint as I want to talk about the cost when you first get such systems. ↩︎

Of course, we should generally expect huge uncertainty with future AI architectures such that fixating on very efficient substitution of inference time compute for training would be a mistake, along with fixating on minimal or no substitution. I think a potential error of prior discussion is insufficient focus on the possibility of relatively scalable (though potentially inefficient) substitution of inference time for training (which o3 appears to exhibit) such that we see very expensive (and potentially slow) AIs that dominate top human expert performance prior to seeing cheap and abundant AIs which do this. ↩︎

Sometimes people think of "software-only singularity" as an important category of ways AI could go. A software-only singularity can roughly be defined as when you get increasing-returns growth (hyper-exponential) just via the mechanism of AIs increasing the labor input to AI capabilities software[1] R&D (i.e., keeping fixed the compute input to AI capabilities).

While the software-only singularity dynamic is an important part of my model, I often find it useful to more directly consider the outcome that software-only singularity might cause: the feasibility of takeover-capable AI without massive compute automation. That is, will the leading AI developer(s) be able to competitively develop AIs powerful enough to plausibly take over[2] without previously needing to use AI systems to massively (>10x) increase compute production[3]?

[This is by Ryan Greenblatt and Alex Mallen]

We care about whether the developers' AI greatly increases compute production because this would require heavy integration into the global economy in a way that relatively clearly indicates to the world that AI is transformative. Greatly increasing compute production would require building additional fabs which currently involve substantial lead times, likely slowing down the transition from clearly transformative AI to takeover-capable AI.[4][5] In addition to economic integration, this would make the developer dependent on a variety of actors after the transformative nature of AI is made more clear, which would more broadly distribute power.

For example, if OpenAI is selling their AI's labor to ASML and massively accelerating chip production before anyone has made takeover-capable AI, then (1) it would be very clear to the world that AI is transformatively useful and accelerating, (2) building fabs would be a constraint in scaling up AI which would slow progress, and (3) ASML and the Netherlands could have a seat at the table in deciding how AI goes (along with any other actors critical to OpenAI's competitiveness). Given that AI is much more legibly transformatively powerful in this world, they might even want to push for measures to reduce AI/human takeover risk.

A software-only singularity is not necessary for developers to have takeover-capable AIs without having previously used them for massive compute automation (it is also not clearly sufficient, since it might be too slow or uncompetitive by default without massive compute automation as well). Instead, developers might be able to achieve this outcome by other forms of fast AI progress:

- Algorithmic / scaling is fast enough at the relevant point independent of AI automation. This would likely be due to one of:

  - Downstream AI capabilities progress very rapidly with the default software and/or hardware progress rate at the relevant point;

  - Existing compute production (including repurposable production) suffices (this is sometimes called hardware overhang) and the developer buys a bunch more chips (after generating sufficient revenue or demoing AI capabilities to attract investment);

  - Or there is a large algorithmic advance that unlocks a new regime with fast progress due to low-hanging fruit.[6]

- AI automation results in a one-time acceleration of software progress without causing an explosive feedback loop, but this does suffice for pushing AIs above the relevant capability threshold quickly.

- Other developers just aren't very competitive (due to secrecy, regulation, or other governance regimes) such that proceeding at a relatively slower rate (via algorithmic and hardware progress) suffices.

My inside view sense is that the feasibility of takeover-capable AI without massive compute automation is about 75% likely if we get AIs that dominate top-human-experts prior to 2040.[7] Further, I think that in practice, takeover-capable AI without massive compute automation is maybe about 60% likely. (This is because massively increasing compute production is difficult and slow, so if proceeding without massive compute automation is feasible, this would likely occur.) However, I'm reasonably likely to change these numbers on reflection due to updating about what level of capabilities would suffice for being capable of takeover (in the sense defined in an earlier footnote) and about the level of revenue and investment needed to 10x compute production. I'm also uncertain whether a substantially smaller scale-up than 10x (e.g., 3x) would suffice to cause the effects noted earlier.

To-date software progress has looked like "improvements in pre-training algorithms, data quality, prompting strategies, tooling, scaffolding" as described here. ↩︎

This takeover could occur autonomously, via assisting the developers in a power grab, or via partnering with a US adversary. I'll count it as "takeover" if the resulting coalition has de facto control of most resources. I'll count an AI as takeover-capable if it would have a >25% chance of succeeding at a takeover (with some reasonable coalition) if no other actors had access to powerful AI systems. Further, this takeover wouldn't be preventable with plausible interventions on legible human controlled institutions, so e.g., it doesn't include the case where an AI lab is steadily building more powerful AIs for an eventual takeover much later (see discussion here). This 25% probability is as assessed under my views but with the information available to the US government at the time this AI is created. This line is intended to point at when states should be very worried about AI systems undermining their sovereignty unless action has already been taken. Note that insufficient inference compute could prevent an AI from being takeover-capable even if it could take over with enough parallel copies. And note that whether a given level of AI capabilities suffices for being takeover-capable is dependent on uncertain facts about how vulnerable the world seems (from the subjective vantage point I defined earlier). Takeover via the mechanism of an AI escaping, independently building more powerful AI that it controls, and then this more powerful AI taking over would count as that original AI that escaped taking over. I would also count a rogue internal deployment that leads to the AI successfully backdooring or controlling future AI training runs such that those future AIs take over. However, I would not count merely sabotaging safety research. ↩︎

I mean 10x additional production (caused by AI labor) above long running trends in expanding compute production and making it more efficient. As in, spending on compute production has been increasing each year and the efficiency of compute production (in terms of FLOP/$ or whatever) has also been increasing over time, and I'm talking about going 10x above this trend due to using AI labor to expand compute production (either revenue from AI labor or having AIs directly work on chips as I'll discuss in a later footnote). ↩︎

Note that I don't count converting fabs from making other chips (e.g., phones) to making AI chips as scaling up compute production; I'm just considering things that scale up the amount of AI chips we could somewhat readily produce. TSMC's revenue is "only" about $100 billion per year, so if only converting fabs is needed, this could be done without automation of compute production and justified on the basis of AI revenues that are substantially smaller than the revenues that would justify building many more fabs. Currently AI is around 15% of leading node production at TSMC, so only a few more doublings are needed for it to consume most capacity. ↩︎

Note that the AI could indirectly increase compute production via being sufficiently economically useful that it generates enough money to pay for greatly scaling up compute. I would count this as massive compute automation, though some routes through which the AI could be sufficiently economically useful might be less convincing of transformativeness than the AIs substantially automating the process of scaling up compute production. However, I would not count the case where AI systems are impressive enough to investors that this justifies investment that suffices for greatly scaling up fab capacity while profits/revenues wouldn't suffice for greatly scaling up compute on their own. In reality, if compute is greatly scaled up, this will occur via a mixture of speculative investment, the AI earning revenue, and the AI directly working on automating labor along the compute supply chain. If the revenue and direct automation would suffice for an at least massive compute scale-up (>10x) on their own (removing the component from speculative investment), then I would count this as massive compute automation. ↩︎

A large algorithmic advance isn't totally unprecedented. It could suffice if we see an advance similar to what seemingly happened with reasoning models like o1 and o3 in 2024. ↩︎

About 2/3 of this is driven by software-only singularity. ↩︎

I'm not sure if the definition of takeover-capable-AI (abbreviated as "TCAI" for the rest of this comment) in footnote 2 quite makes sense. I'm worried that too much of the action is in "if no other actors had access to powerful AI systems", and not that much action is in the exact capabilities of the "TCAI". In particular: Maybe we already have TCAI (by that definition) because if a frontier AI company or a US adversary was blessed with the assumption "no other actor will have access to powerful AI systems", they'd have a huge advantage over the rest of the world (as soon as they develop more powerful AI), plausibly implying that it'd be right to forecast a >25% chance of them successfully taking over if they were motivated to try.

And this seems somewhat hard to disentangle from stuff that is supposed to count according to footnote 2, especially: "Takeover via the mechanism of an AI escaping, independently building more powerful AI that it controls, and then this more powerful AI taking over would" and "via assisting the developers in a power grab, or via partnering with a US adversary". (Or maybe the scenario in the 1st paragraph is supposed to be excluded because current AI isn't agentic enough to "assist"/"partner" with allies, as opposed to just being used as a tool?)

What could a competing definition be? Thinking about what we care most about... I think two events especially stand out to me:

- When would it plausibly be catastrophically bad for an adversary to steal an AI model?

- When would it plausibly be catastrophically bad for an AI to be power-seeking and non-controlled?

Maybe a better definition would be to directly talk about these two events? So for example...

1. "Steal is catastrophic" would be true if...

   a. "Frontier AI development projects immediately acquire good enough security to keep future model weights secure" has significantly less probability of AI-assisted takeover than

   b. "Frontier AI development projects immediately have their weights stolen, and then acquire security that's just as good as in (1a)."[1]

2. "Power-seeking and non-controlled is catastrophic" would be true if...

   a. "Frontier AI development projects immediately acquire good enough judgment about power-seeking-risk that they henceforth choose to not deploy any model that would've been net-negative for them to deploy" has significantly less probability of AI-assisted takeover than

   b. "Frontier AI development projects acquire the level of judgment described in (2a) 6 months later."[2]

Where "significantly less probability of AI-assisted takeover" could be e.g. at least 2x less risk.

[1] The motivation for assuming "future model weights secure" in both (1a) and (1b) is so that the downside of getting the model weights stolen imminently isn't nullified by the fact that they're very likely to get stolen a bit later, regardless. Because many interventions that would prevent model weight theft this month would also help prevent it future months. (And also, we can't contrast 1a'="model weights are permanently secure" with 1b'="model weights get stolen and are then default-level-secure", because that would already have a really big effect on takeover risk, purely via the effect on future model weights, even though current model weights probably aren't that important.)

[2] The motivation for assuming "good future judgment about power-seeking-risk" is similar to the motivation for assuming "future model weights secure" above. The motivation for choosing "good judgment about when to deploy vs. not" rather than "good at aligning/controlling future models" is that a big threat model is "misaligned AIs outcompete us because we don't have any competitive aligned AIs, so we're stuck between deploying misaligned AIs and being outcompeted" and I don't want to assume away that threat model.

I agree that the notion of takeover-capable AI I use is problematic and makes the situation hard to reason about, but I intentionally rejected the notions you propose as they seemed even worse to think about from my perspective.

Is there some reason for why current AI isn't TCAI by your definition?

(I'd guess that the best way to rescue your notion is to stipulate that the TCAIs must have >25% probability of taking over themselves. Possibly with assistance from humans, possibly by manipulating other humans who think they're being assisted by the AIs, but ultimately the original TCAIs should be holding the power in order for it to count. That would clearly exclude current systems. But I don't think that's how you meant it.)

Oh sorry. I somehow missed this aspect of your comment.

Here's a definition of takeover-capable AI that I like: the AI is capable enough that plausible interventions on known human controlled institutions within a few months no longer suffice to prevent plausible takeover. (Which implies that making the situation clear to the world is substantially less useful and human controlled institutions can no longer as easily get a seat at the table.)

Under this definition, there are basically two relevant conditions:

- The AI is capable enough to itself take over autonomously. (In the way you defined it, but also not in a way where intervening on human institutions can still prevent the takeover, so e.g., the AI just having a rogue deployment within OpenAI doesn't suffice if substantial externally imposed improvements to OpenAI's security and controls would defeat the takeover attempt.)

- Or human groups can do a nearly immediate takeover with the AI such that they could then just resist such interventions.

I'll clarify this in the comment.

Hm — what are the "plausible interventions" that would stop China from having >25% probability of takeover if no other country could build powerful AI? Seems like you either need to count a delay as successful prevention, or you need to have a pretty low bar for "plausible", because it seems extremely difficult/costly to prevent China from developing powerful AI in the long run. (Where they can develop their own supply chains, put manufacturing and data centers underground, etc.)

Yeah, I'm trying to include delay as fine.

I'm just trying to point at "the point when aggressive intervention by a bunch of parties is potentially still too late".

I really like the framing here, of asking whether we'll see massive compute automation before [AI capability level we're interested in]. I often hear people discuss nearby questions using IMO much more confusing abstractions, for example:

- "How much is AI capabilities driven by algorithmic progress?" (problem: obscures dependence of algorithmic progress on compute for experimentation)

- "How much AI progress can we get 'purely from elicitation'?" (lots of problems, e.g. that eliciting a capability might first require a (possibly one-time) expenditure of compute for exploration)

My inside view sense is that the feasibility of takeover-capable AI without massive compute automation is about 75% likely if we get AIs that dominate top-human-experts prior to 2040.[6] Further, I think that in practice, takeover-capable AI without massive compute automation is maybe about 60% likely.

Is this because:

- You think that we're >50% likely to not get AIs that dominate top human experts before 2040? (I'd be surprised if you thought this.)

- The words "the feasibility of" importantly change the meaning of your claim in the first sentence? (I'm guessing it's this based on the following parenthetical, but I'm having trouble parsing.)

Overall, it seems like you put substantially higher probability than I do on getting takeover capable AI without massive compute automation (and especially on getting a software-only singularity). I'd be very interested in understanding why. A brief outline of why this doesn't seem that likely to me:

- My read of the historical trend is that AI progress has come from scaling up all of the factors of production in tandem (hardware, algorithms, compute expenditure, etc.).

- Scaling up hardware production has always been slower than scaling up algorithms, so this consideration is already factored into the historical trends. I don't see a reason to believe that algorithms will start running away with the game.

- Maybe you could counter-argue that algorithmic progress has only reflected returns to scale from AI being applied to AI research in the last 12-18 months and that the data from this period is consistent with algorithms becoming relatively more important compared to other factors?

- I don't see a reason that "takeover-capable" is a capability level at which algorithmic progress will be deviantly important relative to this historical trend.

I'd be interested either in hearing you respond to this sketch or in sketching out your reasoning from scratch.

I put roughly 50% probability on feasibility of software-only singularity.[1]

(I'm probably going to be reinventing a bunch of the compute-centric takeoff model in slightly different ways below, but I think it's faster to partially reinvent than to dig up the material, and I probably do use a slightly different approach.)

My argument here will be a bit sloppy and might contain some errors. Sorry about this. I might be more careful in the future.

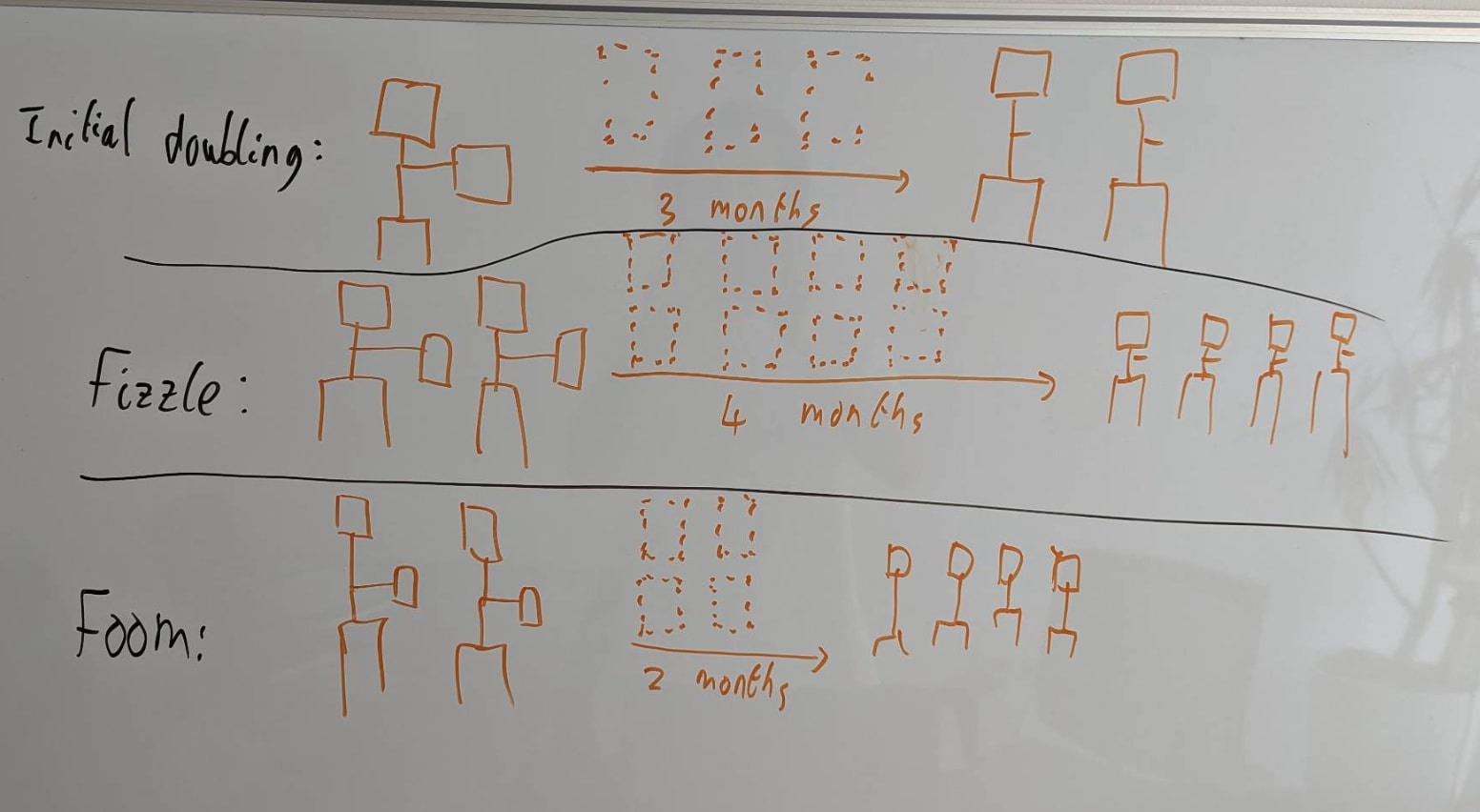

The key question for software-only singularity is: "When the rate of labor production is doubled (as in, as if your employees ran 2x faster[2]), does that more than double or less than double the rate of algorithmic progress?" Here, algorithmic progress is measured by how fast we increase the labor production per FLOP/s (as in, the labor production from AI labor on a fixed compute base). This is a very economics-style way of analyzing the situation, and I think this is a pretty reasonable first guess. Here's a diagram I've stolen from Tom's presentation on explosive growth illustrating this:

Basically, every time you double the AI labor supply, does the time until the next doubling (driven by algorithmic progress) increase (fizzle) or decrease (foom)? I'm being a bit sloppy in saying "AI labor supply". We care about a notion of parallelism-adjusted labor (faster laborers are better than more laborers) and quality increases can also matter. I'll make the relevant notion more precise below.

I'm about to go into a relatively complicated argument for why I think the historical data supports software-only singularity. If you want more basic questions answered (such as "Doesn't retraining make this too slow?"), consider looking at Tom's presentation on takeoff speeds.

Here's a diagram that you might find useful in understanding the inputs into AI progress:

And here is the relevant historical context in terms of trends:

- Historically, algorithmic progress in LLMs looks like 3-4x per year including improvements on all parts of the stack.[3] This notion of algorithmic progress is "reduction in compute needed to reach a given level of frontier performance", which isn't equivalent to increases in the rate of labor production on a fixed compute base. I'll talk more about this below.

- This has been accompanied by increases of around 4x more hardware per year[4] and maybe 2x more quality-adjusted (parallel) labor working on LLM capabilities per year. I think total employees working on LLM capabilities have been roughly 3x-ing per year (in recent years), but quality has been decreasing over time.

- A 2x increase in the quality-adjusted parallel labor force isn't as good as the company getting the same sorts of labor tasks done 2x faster (as in, the resulting productivity from having your employees run 2x faster) due to parallelism tax (putting aside compute bottlenecks for now). I'll apply the same R&D parallelization penalty as used in Tom's takeoff model and adjust this down by a power of 0.7 to yield 1.6x per year in increased labor production rate. (So, it's as though the company keeps the same employees, but those employees operate 1.6x faster each year.)

- It looks like the fraction of progress driven by algorithmic progress has been getting larger over time.

- So, overall, we're getting 3-4x algorithmic improvement per year being driven by 1.6x more labor per year and 4x more hardware.
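The parallelization adjustment in the list above is a one-line calculation. Here is a minimal sketch, assuming the penalty is applied as an exponent of 0.7 on the growth in parallel labor (the function name is illustrative and not taken from Tom's model):

```python
def serial_equivalent_speedup(parallel_labor_growth: float,
                              penalty_exponent: float = 0.7) -> float:
    """Convert growth in quality-adjusted parallel labor into the
    equivalent speed-up of a fixed workforce (assumed power-law penalty)."""
    return parallel_labor_growth ** penalty_exponent


# 2x more parallel labor per year behaves like the same staff running ~1.6x faster.
print(round(serial_equivalent_speedup(2.0), 2))  # 1.62
```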

So, the key question is how much of this algorithmic improvement is being driven by labor vs. by hardware. If it is basically all hardware, then the returns to labor must be relatively weak and software-only singularity seems unlikely. If it is basically all labor, then we're seeing 3-4x algorithmic improvement per year for 1.6x more labor per year, which means the returns to labor look quite good (at least historically). Based on some guesses and some poll questions, my sense is that capabilities researchers would operate about 2.5x slower if they had 10x less compute (after adaptation), so the production function is probably proportional to $\text{compute}^{0.4} \cdot \text{labor}^{0.6}$ ($0.4 \approx \log_{10}(2.5)$). (This is assuming a Cobb-Douglas production function.) Edit: see the derivation of the relevant thing in Deep's comment, my old thing was wrong[5].
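To make the step from "2.5x slower with 10x less compute" to a Cobb-Douglas exponent explicit, here is a small sketch. It assumes research output scales as compute raised to some power, so the power is the ratio of the two logs; the function name is hypothetical.

```python
import math


def compute_exponent(slowdown: float, compute_reduction: float) -> float:
    """Exponent p such that cutting compute by `compute_reduction`
    slows research by `slowdown`, assuming output ~ compute**p."""
    return math.log(slowdown) / math.log(compute_reduction)


p = compute_exponent(slowdown=2.5, compute_reduction=10.0)
print(round(p, 2), round(1 - p, 2))  # 0.4 0.6  (compute and labor exponents)

# The estimate is sensitive to the poll number: 3x slower gives a larger compute share.
print(round(compute_exponent(3.0, 10.0), 2))  # 0.48
```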

Now, let's talk more about the transfer from algorithmic improvement to the rate of labor production. A 2x algorithmic improvement in LLMs makes it so that you can reach the same (frontier) level of performance for 2x less training compute, but we actually care about a somewhat different notion for software-only singularity: how much you can increase the production rate of labor (the thing that we said was increasing at roughly a rate of 1.6x per year by using more human employees). My current guess is that every 2x algorithmic improvement in LLMs increases the rate of labor production by , and I'm reasonably confident that the exponent isn't much below . I don't currently have a very principled estimation strategy for this, and it's somewhat complex to reason about. I discuss this in the appendix below.

So, if this exponent is around 1, our central estimate of 2.3 from above corresponds to software-only singularity and our estimate of 1.56 from above under more pessimistic assumptions corresponds to this not being feasible. Overall, my sense is that the best guess numbers lean toward software-only singularity.

More precisely, software-only singularity that goes for >500x effective compute gains above trend (to the extent this metric makes sense, this is roughly >5 years of algorithmic progress). Note that you can have software-only singularity be feasible while buying tons more hardware at the same time. And if this ends up expanding compute production by >10x using AI labor, then this would count as massive compute production despite also having a feasible software-only singularity. (However, in most worlds, I expect software-only singularity to be fast enough, if feasible, that we don't see this.) ↩︎

Rather than denominating labor in accelerating employees, we could instead denominate in number of parallel employees. This would work equivalently (we can always convert into equivalents to the extent these things can funge), but because we can actually accelerate employees and the serial vs. parallel distinction is important, I think it is useful to denominate in accelerating employees. ↩︎

I would have previously cited 3x, but recent progress looks substantially faster (with DeepSeek v3 and reasoning models seemingly indicating somewhat faster than 3x progress IMO), so I've revised to 3-4x. ↩︎

This includes both increased spending and improved chips. Here, I'm taking my best guess at increases in hardware usage for training and transferring this to research compute usage on the assumption that training compute and research compute have historically been proportional. ↩︎

Edit: the reasoning I did here was off. Here was the old text: so the production function is probably roughly $\alpha \cdot \text{compute}^{0.4} \cdot \text{labor}^{0.6}$ ($0.4 \approx \log_{10}(2.5)$). Increasing compute by 4x and labor by 1.6x increases algorithmic improvement by 3-4x, let's say 3.5x, so we have $3.5 = \alpha \cdot 4^{0.4} \cdot 1.6^{0.6}$, $\alpha = \frac{3.5}{4^{0.4} \cdot 1.6^{0.6}} \approx 1.52$. Thus, doubling labor would increase algorithmic improvement by $1.52 \cdot 2^{0.6} \approx 2.3$. This is very sensitive to the exact numbers; if we instead used 3x slower instead of 2.5x slower, we would have gotten that doubling labor increases algorithmic improvement by $1.56$, which is substantially lower. Obviously, all the exact numbers here are highly uncertain. ↩︎

Hey Ryan! Thanks for writing this up -- I think this whole topic is important and interesting.

I was confused about how your analysis related to the Epoch paper, so I spent a while with Claude analyzing it. I did a re-analysis that finds similar results, but also finds (I think) some flaws in your rough estimate. (Keep in mind I'm not an expert myself, and I haven't closely read the Epoch paper, so I might well be making conceptual errors. I think the math is right though!)

I'll walk through my understanding of this stuff first, then compare to your post. I'll be going a little slowly (A) to help me refresh my memory when I reference this later, (B) to make it easy to call out mistakes, and (C) to hopefully make this legible to others who want to follow along.

Using Ryan's empirical estimates in the Epoch model

The Epoch model

The Epoch paper models growth with the following equation:

1. $\frac{d \ln A}{dt} \sim A^{-\beta} E^{\lambda}$,

where A = efficiency and E = research input. We want to consider worlds with a potential software takeoff, meaning that increases in AI efficiency directly feed into research input, which we model as $\frac{d \ln A}{dt} \sim A^{-\beta} A^{\lambda} = A^{\lambda - \beta}$. So the key consideration seems to be the ratio $\frac{\lambda}{\beta}$. If it's 1, we get steady exponential growth from scaling inputs; greater, superexponential; smaller, subexponential.[1]
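The foom-versus-fizzle behaviour can be seen directly from successive doubling times. The sketch below assumes the growth law has the form d ln(A)/dt = A^(lambda - beta) once research input is tied to efficiency, with all constants set to 1; that functional form is my reading of the model being described, and the function name is illustrative. Under that assumption, the time for the n-th doubling has a closed form.

```python
import math


def doubling_times(lam: float, beta: float, n_doublings: int = 5) -> list:
    """Time taken by each successive doubling of A when
    d ln(A)/dt = A**(lam - beta), starting from A = 1."""
    k = lam - beta
    times = []
    for n in range(n_doublings):
        if abs(k) < 1e-12:
            # Pure exponential growth: every doubling takes ln(2).
            times.append(math.log(2))
        else:
            # Integrate dt = exp(-k * x) dx over x = ln(A) from n*ln2 to (n+1)*ln2.
            times.append(2 ** (-k * n) * (1 - 2 ** (-k)) / k)
    return times


print([round(t, 3) for t in doubling_times(1.5, 1.0)])  # shrinking: foom
print([round(t, 3) for t in doubling_times(1.0, 1.0)])  # constant: exponential
print([round(t, 3) for t in doubling_times(0.5, 1.0)])  # growing: fizzle
```

Each doubling takes 2^-(lambda - beta) times as long as the previous one, so the sequence shrinks exactly when lambda/beta exceeds 1 (for positive beta).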

Fitting the model

How can we learn about this ratio from historical data?

Let's pretend history has been convenient and we've seen steady exponential growth in both variables, so $A = A_0 e^{rt}$ and $E = E_0 e^{qt}$. Then $\frac{d \ln A}{dt}$ has been constant over time, so by equation 1, $A^{-\beta} E^{\lambda}$ has been constant as well. Substituting in for A and E, we find that $A_0^{-\beta} E_0^{\lambda} e^{(\lambda q - \beta r) t}$ is constant over time, which is only possible if $\lambda q = \beta r$ and the exponent is always zero. Thus if we've seen steady exponential growth, the historical value of our key ratio is:

2. $\frac{\lambda}{\beta} = \frac{r}{q}$.

Intuitively, if we've seen steady exponential growth while research input has increased more slowly than research output (AI efficiency), there are superlinear returns to scaling inputs.

Introducing the Cobb-Douglas function

But wait! $E$, research input, is an abstraction that we can't directly measure. Really there's both compute and labor inputs. Those have indeed been growing roughly exponentially, but at different rates.

Intuitively, it makes sense to say that "effective research input" has grown as some kind of weighted average of the rate of compute and labor input growth. This is my take on why a Cobb-Douglas function of form (3) $E = C^{p} L^{1-p}$, with a weight parameter $0 < p < 1$, is useful here: it's a weighted geometric average of the two inputs, so its growth rate is a weighted average of their growth rates.

Writing that out: in general, say both inputs have grown exponentially, so $C(t) = C_0 e^{q_c t}$ and $L(t) = L_0 e^{q_l t}$. Then E has grown as $E(t) = C_0^{p} L_0^{1-p} e^{(p q_c + (1-p) q_l) t}$, so $q$ is the weighted average (4) $q = p q_c + (1-p) q_l$ of the growth rates of labor and capital.

Then, using Equation 2, we can estimate our key ratio as $\frac{\lambda}{\beta} = \frac{r}{q} = \frac{r}{p q_c + (1-p) q_l}$.

Let's get empirical!

Plugging in your estimates:

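- Historical compute scaling of 4x/year gives $q_c = \ln 4 \approx 1.39$;

- Historical labor scaling of 1.6x gives $q_l = \ln 1.6 \approx 0.47$;

- Historical compute elasticity on research outputs of 0.4 gives $p = 0.4$;

- Adding these together, $q = 0.4 \cdot 1.39 + 0.6 \cdot 0.47 \approx 0.84 \approx \ln 2.3$.[2]

- Historical efficiency improvement of 3.5x/year gives $r = \ln 3.5 \approx 1.25$.

- So $\frac{\lambda}{\beta} = \frac{r}{q} \approx \frac{1.25}{0.84} \approx 1.5$ [3]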
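The plug-in steps above are easy to reproduce. This is a minimal sketch, assuming the key ratio is the efficiency growth rate divided by a weighted average of the compute and labor growth rates (all in log terms); the function and argument names are mine, chosen for illustration.

```python
import math


def key_ratio(compute_growth: float, labor_growth: float,
              compute_share: float, efficiency_growth: float) -> float:
    """Ratio of the log growth rate of efficiency to the log growth
    rate of Cobb-Douglas research input (yearly growth factors in)."""
    q_compute = math.log(compute_growth)
    q_labor = math.log(labor_growth)
    # Growth rate of effective research input: weighted average of the two.
    q = compute_share * q_compute + (1 - compute_share) * q_labor
    r = math.log(efficiency_growth)
    return r / q


ratio = key_ratio(compute_growth=4.0, labor_growth=1.6,
                  compute_share=0.4, efficiency_growth=3.5)
print(f"{ratio:.2f}")  # 1.50
```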

Adjusting for labor-only scaling

But wait: we're not done yet! Under our Cobb-Douglas assumption, scaling labor by a factor of 2 isn't as good as scaling all research inputs by a factor of 2; it's only $2^{1-p}/2 = 2^{0.6}/2 \approx 0.76$ as good.

Plugging in Equation 3 (which describes research input in terms of compute and labor) to Equation 1 (which estimates AI progress based on research), our adjusted form of the Epoch model is $\frac{d \ln A}{dt} \sim A^{-\beta} E^{\lambda} = A^{-\beta} C^{p \lambda} L^{(1-p) \lambda}$.

Under a software-only singularity, we hold compute constant while scaling labor with AI efficiency, so $\frac{d \ln A}{dt} \sim A^{-\beta} L^{(1-p) \lambda}$ multiplied by a fixed compute term. Since labor scales as A, we have $\frac{d \ln A}{dt} \sim A^{(1-p) \lambda - \beta}$. By the same analysis as in our first section, we can see A grows exponentially if $\frac{\lambda}{\beta}(1-p) = 1$, and grows superexponentially if this ratio is >1. So our key ratio $\frac{\lambda}{\beta}$ just gets multiplied by $(1-p)$, and it wasn't a waste to find it, phew!

Now we get the true form of our equation: we get a software-only foom iff $\frac{\lambda}{\beta}(1-p) > 1$, or (via equation 2) iff we see empirically that $\frac{r}{q}(1-p) > 1$. Call this the takeoff ratio: it corresponds to a) how much AI progress scales with inputs and b) how much of a penalty we take for not scaling compute.

Result: Above, we got $\frac{\lambda}{\beta} \approx 1.5$, so our takeoff ratio is $0.6 \cdot 1.5 = 0.9$. That's quite close! If we think it's more reasonable to think of a historical growth rate of 4 instead of 3.5, we'd increase our takeoff ratio by a factor of $\frac{\ln 4}{\ln 3.5} \approx 1.1$, to a ratio of $0.99$, right on the knife edge of FOOM. [4] [note: I previously had the wrong numbers here: I had lambda/beta = 1.6, which would mean the 4x/year case has a takeoff ratio of 1.05, putting it into FOOM land]
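The sensitivity of the result to the assumed historical growth rate can be checked numerically. This sketch assumes the takeoff ratio is the labor share times the ratio of log growth rates, using the historical inputs quoted earlier (4x compute, 1.6x labor, compute share 0.4); the function name is hypothetical.

```python
import math


def takeoff_ratio(efficiency_growth: float, compute_growth: float = 4.0,
                  labor_growth: float = 1.6, compute_share: float = 0.4) -> float:
    """Labor share times (log efficiency growth / log research-input growth).
    Values above 1 would indicate a software-only foom under this model."""
    q = (compute_share * math.log(compute_growth)
         + (1 - compute_share) * math.log(labor_growth))
    r = math.log(efficiency_growth)
    return (1 - compute_share) * r / q


for growth in (3.5, 4.0):
    print(growth, round(takeoff_ratio(growth), 2))
# 3.5 0.9
# 4.0 0.99
```

Both cases land just under 1, which matches the "knife edge" description: small changes in any input move the answer across the threshold.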

So this isn't too far off from your results in terms of implications, but it is somewhat different (no FOOM for 3.5x, less sensitivity to the exact historical growth rate).

Analyzing your approach:

Tweaking alpha:

Your estimate of $\alpha$ is in fact similar in form to my ratio $\frac{r}{q}$ - but what you're calculating instead is $\alpha = \frac{e^{r}}{e^{q}} = \frac{3.5}{4^{0.4} \cdot 1.6^{0.6}}$.

One indicator that something's wrong is that your result involves checking whether $\alpha \cdot 2^{1-p} > 2$, or equivalently whether $\ln \alpha + (1-p) \ln 2 > \ln 2$, or equivalently whether $\ln \alpha > p \ln 2$. But the choice of 2 is arbitrary -- conceptually, you just want to check if scaling software by a factor n increases outputs by a factor n or more. Yet clearly the threshold $p \ln n$ varies with n.

One way of parsing the problem is that alpha is (implicitly) time dependent - it is equal to exp(r * 1 year) / exp(q * 1 year), a ratio of progress vs inputs in the time period of a year. If you calculated alpha based on a different amount of time, you'd get a different value. By contrast, r/q is a ratio of rates, so it stays the same regardless of what timeframe you use to measure it.[5]
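The timeframe dependence can be shown with a few lines of arithmetic. This sketch assumes the earlier check had the form alpha * n^(1 - p) > n with n = 2 (my reading of the estimate under discussion) and reuses the historical inputs quoted above; the names are illustrative.

```python
import math

P = 0.4                                               # compute share
R = math.log(3.5)                                     # efficiency growth rate per year
Q = P * math.log(4.0) + (1 - P) * math.log(1.6)       # research-input growth rate per year


def alpha(window_years: float) -> float:
    """Progress divided by input growth, both measured over the same window."""
    return math.exp((R - Q) * window_years)


def alpha_check_passes(window_years: float, n: float = 2.0) -> bool:
    """Assumed form of the earlier test: does scaling labor by n scale output by > n?"""
    return alpha(window_years) * n ** (1 - P) > n


print(alpha_check_passes(1.0))   # True  (alpha measured over one year)
print(alpha_check_passes(0.5))   # False (same history, measured over six months)
print(round(R / Q, 2))           # 1.5, a ratio of rates, independent of the window
```

The same historical data gives opposite verdicts depending on the measurement window, while the ratio of rates is unchanged.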

Maybe I'm confused about what your Cobb-Douglas function is meant to be calculating - is it E within an Epoch-style takeoff model, or something else?

Nuances:

Does Cobb-Douglas make sense?

The geometric average of rates thing makes sense, but it feels weird that that simple intuitive approach leads to a functional form (Cobb-Douglas) that also has other implications.

Wikipedia says Cobb-Douglas functions can have the exponents not add to 1 (while both being between 0 and 1). Maybe this makes sense here? Not an expert.

How seriously should we take all this?

This whole thing relies on...

- Assuming smooth historical trends

- Assuming those trends continue in the future

- And those trends themselves are based on functional fits to rough / unclear data.

It feels like this sort of thing is better than nothing, but I wish we had something better.

I really like the various nuances you're adjusting for, like parallel vs serial scaling, and especially distinguishing algorithmic improvement from labor efficiency. [6] Thinking those things through makes this stuff feel less insubstantial and approximate...though the error bars still feel quite large.

- ^

Actually there's a complexity here, which is that scaling labor alone may be less efficient than scaling "research inputs" which include both labor and compute. We'll come to this in a few paragraphs.

- ^

This is only coincidentally similar to your figure of 2.3 :)

- ^

I originally had 1.6 here, but as Ryan points out in a reply it's actually 1.5. I've tried to reconstruct what I could have put into a calculator to get 1.6 instead, and I'm at a loss!

- ^

I was curious how aggressive the superexponential growth curve would be with a takeoff ratio of a mere $1.05$. A couple of Claude queries gave me different answers (maybe because the growth is so extreme that different solvers give meaningfully different approximations?), but they agreed that growth is fairly slow in the first year (~5x) and then hits infinity by the end of the second year. I wrote this comment with the wrong numbers (0.96 instead of 0.9), so it doesn't accurately represent what you get if you plug in 4x capability growth per year. Still cool to get a sense of what these curves look like, though.

- ^

I think can be understood in terms of the alpha-being-implicitly-a-timescale-function thing -- if you compare an alpha value with the ratio of growth you're likely to see during the same time period, e.g. alpha(1 year) and n = one doubling, you probably get reasonable-looking results.

- ^

I find it annoying that people conflate "increased efficiency of doing known tasks" with "increased ability to do new useful tasks". It seems to me that these could be importantly different, although it's hard to even settle on a reasonable formalization of the latter. Some reasons this might be okay:

- There's a fuzzy conceptual boundary between the two: if GPT-n can do the task at 0.01% success rate, does that count as a "known task?" what about if it can do each of 10 components at 0.01% success, so in practice we'll never see it succeed if run without human guidance, but we know it's technically possible?

- Under a software singularity situation, maybe the working hypothesis is that the model can do everything necessary to improve itself a bunch, maybe just not very efficiently yet. So we only need efficiency growth, not to increase the task set. That seems like a stronger assumption than most make, but maybe a reasonable weaker assumption is that the model will 'unlock' the necessary new tasks over time, after which point they become subject to rapid efficiency growth.

- And empirically, we have in fact seen rapid unlocking of new capabilities, so it's not crazy to approximate "being able to do new things" as a minor but manageable slowdown to the process of AI replacing human AI R&D labor.

I think you are correct with respect to my estimate of $\alpha$ and the associated model I was using. Sorry about my error here. I think I was fundamentally confusing a few things in my head when writing out the comment.

I think your refactoring of my strategy is correct and I tried to check it myself, though I don't feel confident in verifying it is correct.

Your estimate doesn't account for the conversion between algorithmic improvement and labor efficiency, but it is easy to add this in by just changing the historical algorithmic efficiency improvement of 3.5x/year to instead be the adjusted effective labor efficiency rate and then solving identically. I was previously thinking the relationship was that labor efficiency was around the same as algorithmic efficiency, but I now think this is more likely to be around based on Tom's comment.

Plugging this in, we'd get:

(In your comment you said $\frac{\lambda}{\beta} \approx 1.6$, but I think the arithmetic is a bit off here and the answer is closer to 1.5.)

It feels like this sort of thing is better than nothing, but I wish we had something better.

The existing epoch paper is pretty good, but doesn't directly target LLMs in a way which seems somewhat sad.

The thing I'd be most excited about is:

- Epoch does an in depth investigation using an estimation methodology which is directly targeting LLMs (rather than looking at returns in some other domains).

- They use public data and solicit data from companies about algorithmic improvement, head count, compute on experiments etc.

- (Some) companies provide this data. Epoch potentially doesn't publish this exact data and instead just publishes the results of the final analysis to reduce capabilities externalities. (IMO, companies are somewhat unlikely to do this, but I'd like to be proven wrong!)

(I'm going through this and understanding where I made an error with my approach to $\alpha$. I think I did make an error, but I'm trying to make sure I'm not still confused. Edit: I've figured this out, see my other comment.)

Wikipedia says Cobb-Douglas functions can have the exponents not add to 1 (while both being between 0 and 1). Maybe this makes sense here? Not an expert.

It shouldn't matter in this case because we're raising the whole value of $E$ to $\lambda$.

Here's my own estimate for this parameter:

Once AI has automated AI R&D, will software progress become faster or slower over time? This depends on the extent to which software improvements get harder to find as software improves – the steepness of the diminishing returns.

We can ask the following crucial empirical question:

When (cumulative) cognitive research inputs double, how many times does software double?

(In growth models of a software intelligence explosion, the answer to this empirical question is a parameter called r.)

If the answer is “< 1”, then software progress will slow down over time. If the answer is “1”, software progress will remain at the same exponential rate. If the answer is “>1”, software progress will speed up over time.

The bolded question can be studied empirically, by looking at how many times software has doubled each time the human researcher population has doubled.

(What does it mean for “software” to double? A simple way of thinking about this is that software doubles when you can run twice as many copies of your AI with the same compute. But software improvements don’t just improve runtime efficiency: they also improve capabilities. To incorporate these improvements, we’ll ultimately need to make some speculative assumptions about how to translate capability improvements into an equivalently-useful runtime efficiency improvement.)

The best quality data on this question is Epoch’s analysis of computer vision training efficiency. They estimate r = ~1.4: every time the researcher population doubled, training efficiency doubled 1.4 times. (Epoch’s preliminary analysis indicates that the r value for LLMs would likely be somewhat higher.) We can use this as a starting point, and then make various adjustments:

- Upwards for improving capabilities. Improving training efficiency improves capabilities, as you can train a model with more “effective compute”. To quantify this effect, imagine we use a 2X training efficiency gain to train a model with twice as much “effective compute”. How many times would that double “software”? (I.e., how many doublings of runtime efficiency would have the same effect?) There are various sources of evidence on how much capabilities improve every time training efficiency doubles: toy ML experiments suggest the answer is ~1.7; human productivity studies suggest the answer is ~2.5. I put more weight on the former, so I’ll estimate 2. This doubles my median estimate to r = ~2.8 (= 1.4 * 2).

- Upwards for post-training enhancements. So far, we’ve only considered pre-training improvements. But post-training enhancements like fine-tuning, scaffolding, and prompting also improve capabilities (o1 was developed using such techniques!). It’s hard to say how large an increase we’ll get from post-training enhancements. These can allow faster thinking, which could be a big factor. But there might also be strong diminishing returns to post-training enhancements holding base models fixed. I’ll estimate a 1-2X increase, and adjust my median estimate to r = ~4 (2.8*1.45=4).

- Downwards for less growth in compute for experiments. Today, rising compute means we can run increasing numbers of GPT-3-sized experiments each year. This helps drive software progress. But compute won't be growing in our scenario. That might mean that returns to additional cognitive labour diminish more steeply. On the other hand, the most important experiments are ones that use similar amounts of compute to training a SOTA model. Rising compute hasn't actually increased the number of these experiments we can run, as rising compute increases the training compute for SOTA models. And in any case, this doesn’t affect post-training enhancements. But this still reduces my median estimate down to r = ~3. (See Eth (forthcoming) for more discussion.)

- Downwards for fixed scale of hardware. In recent years, the scale of hardware available to researchers has increased massively. Researchers could invent new algorithms that only work at the new hardware scales for which no one had previously tried to develop algorithms. Researchers may have been plucking low-hanging fruit for each new scale of hardware. But in the software intelligence explosions I’m considering, this won’t be possible because the hardware scale will be fixed. OAI estimate ImageNet efficiency via a method that accounts for this (by focussing on a fixed capability level), and find a 16-month doubling time, as compared with Epoch’s 9-month doubling time. This reduces my estimate down to r = ~1.7 (3 * 9/16).

- Downwards for diminishing returns becoming steeper over time. In most fields, returns diminish more steeply than in software R&D. So perhaps software will tend to become more like the average field over time. To estimate the size of this effect, we can take our estimate that software is ~10 OOMs from physical limits (discussed below), and assume that for each OOM increase in software, r falls by a constant amount, reaching zero once physical limits are reached. If r = 1.7, then this implies that r reduces by 0.17 for each OOM. Epoch estimates that pre-training algorithmic improvements are growing by an OOM every ~2 years, which would imply a reduction in r of 1.02 (6*0.17) by 2030. But when we include post-training enhancements, the decrease will be smaller (as [reason]), perhaps ~0.5. This reduces my median estimate to r = ~1.2 (1.7-0.5).

Overall, my median estimate of r is 1.2. I use a log-uniform distribution with the bounds 3X higher and lower (0.4 to 3.6).
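The chain of adjustments above can be replayed step by step, which makes it easier to see which steps are arithmetic and which are judgment calls. This is only a sketch: the helper names are hypothetical, the step from ~4 to ~3 is entered as stated rather than derived, and the probability at the end is my own calculation from the stated log-uniform bounds, not a figure from the text.

```python
import math


def r_adjustment_chain() -> list:
    """Replay the median-r adjustments; returns (label, value) pairs."""
    steps = []
    r = 1.4
    steps.append(("Epoch computer-vision estimate", r))
    r *= 2                       # capability improvements
    steps.append(("capabilities (x2)", r))
    r *= 1.45                    # post-training enhancements
    steps.append(("post-training (x1.45)", r))
    r = 3.0                      # judgment call stated in the text, not derived
    steps.append(("less experiment compute (stated ~3)", r))
    r *= 9 / 16                  # 9-month vs 16-month doubling time
    steps.append(("fixed hardware scale (x9/16)", r))
    r -= 0.5                     # steeper diminishing returns
    steps.append(("diminishing returns (-0.5)", r))
    return steps


def prob_r_above(threshold: float, low: float = 0.4, high: float = 3.6) -> float:
    """P(r > threshold) for r log-uniform on [low, high]."""
    if threshold <= low:
        return 1.0
    if threshold >= high:
        return 0.0
    return math.log(high / threshold) / math.log(high / low)


for label, value in r_adjustment_chain():
    print(f"{label}: {value:.2f}")
print(f"P(r > 1) = {prob_r_above(1.0):.2f}")  # roughly 0.58 under these bounds
```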

My sense is that I start with a higher value due to the LLM case looking faster (and not feeling the need to adjust downward in a few places like you do in the LLM case). Obviously the numbers in the LLM case are much less certain given that I'm guessing based on qualitative improvement and looking at some open source models, but being closer to what we actually care about maybe overwhelms this.

I also think I'd get a slightly lower update on the diminishing returns case due to thinking it has a good chance of having substantially sharper diminishing returns as you get closer and closer rather than having linearly decreasing (based on some first principles reasoning and my understanding of how returns diminished in the semiconductor case).

But the biggest delta is that I think I wasn't pricing in the importance of increasing capabilities. (Which seems especially important if you apply a large R&D parallelization penalty.)

Here's a simple argument I'd be keen to get your thoughts on:

On the Possibility of a Tastularity

Research taste is the collection of skills including experiment ideation, literature review, experiment analysis, etc. that collectively determine how much you learn per experiment on average (perhaps alongside another factor accounting for inherent problem difficulty / domain difficulty, of course, and diminishing returns)

Human researchers seem to vary quite a bit in research taste--specifically, the difference between 90th percentile professional human researchers and the very best seems like maybe an order of magnitude? Depends on the field, etc. And the tails are heavy; there is no sign of the distribution bumping up against any limits.

Yet the causes of these differences are minor! Take the very best human researchers compared to the 90th percentile. They'll have almost the same brain size, almost the same amount of experience, almost the same genes, etc. in the grand scale of things.

This means we should assume that if the human population were massively bigger, e.g. trillions of times bigger, there would be humans whose brains don't look that different from the brains of the best researchers on Earth, and yet who are an OOM or more above the best Earthly scientists in research taste. -- AND it suggests that in the space of possible mind-designs, there should be minds which are e.g. within 3 OOMs of those brains in every dimension of interest, and which are significantly better still in the dimension of research taste. (How much better? Really hard to say. But it would be surprising if it was only, say, 1 OOM better, because that would imply that human brains are running up against the inherent limits of research taste within a 3-OOM mind design space, despite human evolution having only explored a tiny subspace of that space, and despite the human distribution showing no signs of bumping up against any inherent limits)

OK, so what? So, it seems like there's plenty of room to improve research taste beyond human level. And research taste translates pretty directly into overall R&D speed, because it's about how much experimentation you need to do to achieve a given amount of progress. With enough research taste, you don't need to do experiments at all -- or rather, you look at the experiments that have already been done, and you infer from them all you need to know to build the next design or whatever.

Anyhow, tying this back to your framework: What if the diminishing returns / increasing problem difficulty / etc. dynamics are such that, if you start from a top-human-expert-level automated researcher, and then do additional AI research to double its research taste, and then do additional AI research to double its research taste again, etc. the second doubling happens in less time than it took to get to the first doubling? Then you get a singularity in research taste (until these conditions change of course) -- the Tastularity.

How likely is the Tastularity? Well, again one piece of evidence here is the absurdly tiny differences between humans that translate to huge differences in research taste, and the heavy-tailed distribution. This suggests that we are far from any inherent limits on research taste even for brains roughly the shape and size and architecture of humans, and presumably the limits for a more relaxed (e.g. 3 OOM radius in dimensions like size, experience, architecture) space in mind-design are even farther away. It similarly suggests that there should be lots of hill-climbing that can be done to iteratively improve research taste.

How does this relate to software-singularity? Well, research taste is just one component of algorithmic progress; there is also speed, # of parallel copies & how well they coordinate, and maybe various other skills besides such as coding ability. So even if the Tastularity isn't possible, improvements in taste will stack with improvements in those other areas, and the sum might cross the critical threshold.

In my framework, this is basically an argument that algorithmic-improvement-juice can be translated into a large improvement in AI R&D labor production via the mechanism of greatly increasing the productivity per "token" (or unit of thinking compute or whatever). See my breakdown here where I try to convert from historical algorithmic improvement to making AIs better at producing AI R&D research.

Your argument is basically that this taste mechanism might have higher returns than reducing cost to run more copies.

I agree this sort of argument means that returns to algorithmic improvement on AI R&D labor production might be bigger than you would otherwise think. This is both because this mechanism might be more promising than other mechanisms and even if it is somewhat less promising, diverse approaches make returns diminish less aggressively. (In my model, this means that best guess conversion might be more like rather than .)

I think it might be somewhat tricky to train AIs to have very good research taste, but this doesn't seem that hard via training them on various prediction objectives.

At a more basic level, I expect that training AIs to predict the results of experiments and then running experiments based on value of information as estimated partially based on these predictions (and skipping experiments with certain results and more generally using these predictions to figure out what to do) seems pretty promising. It's really hard to train humans to predict the results of tens of thousands of experiments (both small and large), but this is relatively clean outcomes based feedback for AIs.

I don't really have a strong inside view on how much the "AI R&D research taste" mechanism increases the returns to algorithmic progress.

Appendix: Estimating the relationship between algorithmic improvement and labor production

In particular, if we fix the architecture to use a token abstraction and consider training a new improved model: we care about how much cheaper you make generating tokens at a given level of performance (in inference tok/flop), how much serially faster you make generating tokens at a given level of performance (in serial speed: tok/s at a fixed level of tok/flop), and how much more performance you can get out of tokens (labor/tok, really per serial token). Then, for a given new model with reduced cost, increased speed, and increased production per token and assuming a parallelism penalty of $0.7$, we can compute the increase in production as roughly: $\text{cost\_reduction}^{0.7} \cdot \text{speed\_increase}^{1 - 0.7} \cdot \text{productivity\_multiplier}$ [1] (I can show the math for this if there is interest).

My sense is that reducing inference compute needed for a fixed level of capability that you already have (using a fixed amount of training run) is usually somewhat easier than making frontier compute go further by some factor, though I don't think it is easy to straightforwardly determine how much easier this is[2]. Let's say there is a 1.25 exponent on reducing cost (as in, 2x algorithmic efficiency improvement is as hard as a $2^{1.25} \approx 2.38$x reduction in cost)? (I'm also generally pretty confused about what the exponent should be. I think exponents from 0.5 to 2 seem plausible, though I'm pretty confused. 0.5 would correspond to the square root from just scaling data in scaling laws.) It seems substantially harder to increase speed than to reduce cost as speed is substantially constrained by serial depth, at least when naively applying transformers. Naively, reducing cost by $k$ (which implies reducing parameters by $k$) will increase speed by somewhat more than $k^{1/3}$ as depth is cubic in layers. I expect you can do somewhat better than this because reduced matrix sizes also increase speed (it isn't just depth) and because you can introduce speed-specific improvements (that just improve speed and not cost). But this factor might be pretty small, so let's stick with $k^{1/3}$ for now and ignore speed-specific improvements. Now, let's consider the case where we don't have productivity multipliers (which is strictly more conservative). Then, we get that increase in labor production is:

$\text{algo\_improvement}^{1.25 \cdot 0.7} \cdot \text{algo\_improvement}^{(1.25/3) \cdot 0.3} = \text{algo\_improvement}^{0.875 + 0.125} = \text{algo\_improvement}^{1.0}$

So, these numbers ended up yielding an exact equivalence between frontier algorithmic improvement and effective labor production increases. (This is a coincidence, though I do think the exponent is close to 1.)
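
The arithmetic behind this equivalence can be checked with a short sketch. The default values are assumptions taken from, or implied by, the discussion above: a 1.25 exponent on cost reduction, a speed gain equal to the cube root of the cost reduction, and a parallelism penalty of 0.7 (the value for which the two terms sum to exactly 1). The function and parameter names are hypothetical, and productivity multipliers are left out, as in the conservative case.

```python
def labor_exponent(cost_exponent: float = 1.25,
                   speed_from_cost: float = 1 / 3,
                   parallel_penalty: float = 0.7) -> float:
    """Return e such that labor production scales as algo_improvement ** e.

    Assumes production ~ cost_reduction ** p * speed_increase ** (1 - p),
    with cost_reduction = algo_improvement ** cost_exponent and
    speed_increase = cost_reduction ** speed_from_cost.
    """
    # Contribution from running more copies (cheaper tokens).
    cost_term = cost_exponent * parallel_penalty
    # Contribution from each copy running serially faster.
    speed_term = cost_exponent * speed_from_cost * (1 - parallel_penalty)
    return cost_term + speed_term


if __name__ == "__main__":
    for exponent in (0.5, 1.25, 2.0):
        print(exponent, round(labor_exponent(cost_exponent=exponent), 3))
```

Sweeping `cost_exponent` over the 0.5 to 2 range mentioned above shows how sensitive the bottom line is to that one guess.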

In practice, we'll be able to get slightly better returns by spending some of our resources investing in speed-specific improvements and in improving productivity rather than in reducing cost. I don't currently have a principled way to estimate this (though I expect something roughly principled can be found by looking at trading off inference compute and training compute), but maybe I think this improves the returns to around . If the coefficient on reducing cost was much worse, we would invest more in improving productivity per token, which bounds the returns somewhat.

Appendix: Isn't compute tiny and decreasing per researcher?

One relevant objection is: Ok, but is this really feasible? Wouldn't this imply that each AI researcher has only a tiny amount of compute? After all, if you use 20% of compute for inference of AI research labor, then each AI only gets 4x more compute to run experiments than for inference on itself? And, as you do algorithmic improvement to reduce AI cost and run more AIs, you also reduce the compute per AI!

First, it is worth noting that as we do algorithmic progress, both the cost of AI researcher inference and the cost of experiments on models of a given level of capability go down. Precisely, for any experiment that involves a fixed number of inference or gradient steps on a model which is some fixed effective compute multiplier below/above the performance of our AI laborers, cost is proportional to inference cost (so, as we improve our AI workforce, experiment cost drops proportionally). However, for experiments that involve training a model from scratch, I expect the reduction in experiment cost to be relatively smaller, such that these experiments must become increasingly small relative to frontier scale. Overall, it might be important to mostly depend on approaches which allow for experiments that don't require training runs from scratch, or to adapt to increasingly smaller full experiment training runs.

To the extent AIs are made smarter rather than more numerous, this isn't a concern. Additionally, we only need so many orders of magnitude of growth. In principle, this consideration should be captured by the exponents in the compute vs. labor production function, but it is possible this production function has very different characteristics in the extremes. Overall, I do think this concern is somewhat important, but I don't think it is a dealbreaker for a substantial number of OOMs of growth.

Appendix: Can't algorithmic efficiency only get so high?

My sense is that this isn't very close to being a blocker. Here is a quick bullet point argument (from some slides I made) that takeover-capable AI is possible on current hardware.

- Human brain is perhaps ~1e14 FLOP/s

- With that efficiency, each H100 can run 10 humans (current cost $2 / hour)

- 10s of millions of human-level AIs with just current hardware production

- Human brain is probably very suboptimal:

  - AIs already much better at many subtasks

  - Possible to do much more training than within lifetime training with parallelism

  - Biological issues: locality, noise, focused on sensory processing, memory limits

  - Smarter AI could be more efficient (smarter humans use less FLOP per task)

- AI could be 1e2-1e7 more efficient on tasks like coding, engineering

  - Probably smaller improvement on video processing

  - Say, 1e4 so 100,000 per H100

- Qualitative intelligence could be a big deal

- Seems like peak efficiency isn't a blocker.
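
The per-GPU numbers in these bullets follow from simple division, sketched below. The brain estimate, the $2/hour price, and the 1e4 efficiency gain come from the bullets; the ~1e15 FLOP/s figure for an H100 is my assumption (it is what "10 humans per H100" implies), and all names are hypothetical.

```python
BRAIN_FLOP_PER_S = 1e14    # rough human brain estimate from the bullets
H100_FLOP_PER_S = 1e15     # assumed rough H100 throughput (implied by "10 humans")
H100_COST_PER_HOUR = 2.0   # dollars per hour, from the bullets


def humans_per_h100(efficiency_gain: float = 1.0) -> float:
    """Human-equivalents one H100 can run at a given efficiency gain over the brain."""
    return H100_FLOP_PER_S / BRAIN_FLOP_PER_S * efficiency_gain


def cost_per_human_hour(efficiency_gain: float = 1.0) -> float:
    """Dollar cost of one human-equivalent hour of compute."""
    return H100_COST_PER_HOUR / humans_per_h100(efficiency_gain)


if __name__ == "__main__":
    print(humans_per_h100())          # brain-level efficiency
    print(humans_per_h100(1e4))       # with the 1e4 efficiency guess
    print(cost_per_human_hour(1e4))   # dollars per human-equivalent hour
```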

[1] This is just approximate because you can also trade off speed with cost in complicated ways and research new ways to more efficiently trade off speed and cost. I'll be ignoring this for now.

[2] It's hard to determine because inference cost reductions have been driven partly by spending more compute on making smaller models (e.g., training a smaller model for longer) rather than just by algorithmic improvement, and I don't have great numbers on the difference off the top of my head.

In practice, we'll be able to get slightly better returns by spending some of our resources investing in speed-specific improvements and in improving productivity rather than in reducing cost. I don't currently have a principled way to estimate this (though I expect something roughly principled can be found by looking at trading off inference compute and training compute), but maybe I think this improves the returns to around .

Interesting comparison point: Tom thought this would give a way larger boost in his old software-only singularity appendix.

When considering an "efficiency only singularity", some different estimates get him r~=1; r~=1.5; r~=1.6. (Where r is defined so that "for each x% increase in cumulative R&D inputs, the output metric will increase by r*x". The condition for increasing returns is r>1.)

Whereas when including capability improvements:

I said I was 50-50 on an efficiency only singularity happening, at least temporarily. Based on these additional considerations I’m now at more like ~85% on a software only singularity. And I’d guess that initially r = ~3 (though I still think values as low as 0.5 or as high as 6 as plausible). There seem to be many strong ~independent reasons to think capability improvements would be a really huge deal compared to pure efficiency problems, and this is borne out by toy models of the dynamic.

Though note that later in the appendix he adjusts down from 85% to 65% due to some further considerations. Also, last I heard, Tom was more like 25% on software singularity. (ETA: Or maybe not? See other comments in this thread.)

Interesting. My numbers aren't very principled and I could imagine thinking capability improvements are a big deal for the bottom line.

I'll paste my own estimate for this param in a different reply.

But here are the places I most differ from you:

- Bigger adjustment for 'smarter AI'. You've argued in your appendix that, only including 'more efficient' and 'faster' AI, you think the software-only singularity goes through. I think including 'smarter' AI makes a big difference. This evidence suggests that doubling training FLOP doubles output-per-FLOP 1-2 times. In addition, algorithmic improvements will improve runtime efficiency. So overall I think a doubling of algorithms yields ~two doublings of (parallel) cognitive labour.

- --> software singularity more likely

- Lower lambda. I'd now use more like lambda = 0.4 as my median. There's really not much evidence pinning this down; I think Tamay Besiroglu thinks there's some evidence for values as low as 0.2. This will decrease the observed historical increase in human workers more than it decreases the gains from algorithmic progress (bc of speed improvements)

- --> software singularity slightly more likely

- Complications thinking about compute which might be a wash.

- Number of useful-experiments has increased by less than 4X/year. You say compute inputs have been increasing at 4X. But simultaneously the scale of experiments ppl must run to be near to the frontier has increased by a similar amount. So the number of near-frontier experiments has not increased at all.

- This argument would be right if the 'usefulness' of an experiment depends solely on how much compute it uses compared to training a frontier model. I.e. experiment_usefulness = log(experiment_compute / frontier_model_training_compute). The 4X/year increases the numerator and denominator of the expression, so there's no change in usefulness-weighted experiments.

- That might be false. GPT-2-sized experiments might in some ways be equally useful even as frontier model size increases. Maybe a better expression would be experiment_usefulness = alpha * log(experiment_compute / frontier_model_training_compute) + beta * log(experiment_compute). In this case, the number of usefulness-weighted experiments has increased due to the second term.

- --> software singularity slightly more likely

- Steeper diminishing returns during software singularity. Recent algorithmic progress has grabbed low-hanging fruit from new hardware scales. During a software-only singularity that won't be possible. You'll have to keep finding new improvements on the same hardware scale. Returns might diminish more quickly as a result.

- --> software singularity slightly less likely

- Compute share might increase as it becomes scarce. You estimate a share of 0.4 for compute, which seems reasonable. But it might fall over time as compute becomes a bottleneck. As an intuition pump, if your workers could think 1e10 times faster, you'd be fully constrained on the margin by the need for more compute: more labour wouldn't help at all but more compute could be fully utilised so the compute share would be ~1.

- --> software singularity slightly less likely

--> overall these compute adjustments prob make me more pessimistic about the software singularity, compared to your assumptions

Taking it all together, I think you should put more probability on the software-only singularity, mostly because of capability improvements being much more significant than you assume.
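
The two candidate usefulness expressions from the compute discussion above can be compared directly. This is a minimal sketch: the functions mirror the expressions as written, while the `alpha` and `beta` values and the compute figures are hypothetical placeholders chosen only to show the qualitative behavior.

```python
import math


def usefulness_relative(experiment_compute: float, frontier_compute: float) -> float:
    """Usefulness depends only on compute relative to the frontier training run."""
    return math.log(experiment_compute / frontier_compute)


def usefulness_mixed(experiment_compute: float, frontier_compute: float,
                     alpha: float, beta: float) -> float:
    """Usefulness depends on both relative and absolute experiment compute."""
    return (alpha * math.log(experiment_compute / frontier_compute)
            + beta * math.log(experiment_compute))


if __name__ == "__main__":
    exp_c, frontier_c = 1e20, 1e24   # hypothetical FLOP figures
    growth = 4.0                     # both grow 4x in a year
    # Relative-only: 4x growth in both leaves usefulness unchanged.
    print(usefulness_relative(growth * exp_c, growth * frontier_c)
          - usefulness_relative(exp_c, frontier_c))
    # Mixed: usefulness rises by beta * log(4) from the absolute term.
    print(usefulness_mixed(growth * exp_c, growth * frontier_c, 0.5, 0.5)
          - usefulness_mixed(exp_c, frontier_c, 0.5, 0.5))
```

Under the first expression, scaling both quantities by the same factor changes nothing; under the second, the gain is `beta * log(4)` regardless of `alpha`.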

Yep, I think my estimates were too low based on these considerations and I've updated up accordingly. I updated down on your argument that maybe $r$ decreases linearly as you approach optimal efficiency. (I think it probably doesn't decrease linearly and instead drops faster towards the end, based partially on thinking a bit about the dynamics and drawing on the example of what we've seen in semiconductor improvement over time, but I'm not that confident.) Maybe I'm now at like 60% that software-only is feasible given these arguments.

Lower lambda. I'd now use more like lambda = 0.4 as my median. There's really not much evidence pinning this down; I think Tamay Besiroglu thinks there's some evidence for values as low as 0.2.

Isn't this really implausible? This implies that if you had 1000 researchers/engineers of average skill at OpenAI doing AI R&D, this would be as good as having one average skill researcher running at 16x ($1000^{0.4} \approx 16$) speed. It does seem very slightly plausible that having someone as good as the best researcher/engineer at OpenAI run at 16x speed would be competitive with OpenAI, but that isn't what this term is computing. 0.2 is even more crazy, implying that 1000 researchers/engineers is as good as one researcher/engineer running at 4x speed!

I think 0.4 is far on the lower end (maybe 15th percentile) for all the way down to one accelerated researcher, but seems pretty plausible at the margin.

As in, 0.4 suggests that 1000 researchers = 100 researchers at 2.5x speed which seems kinda reasonable while 1000 researchers = 1 researcher at 16x speed does seem kinda crazy / implausible.
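
The speed equivalences quoted in this exchange all come from raising the headcount ratio to the power lambda. A minimal sketch, assuming the interpretation used in the comments above (N parallel workers are worth one worker running N^lambda times faster); the function name is hypothetical:

```python
def serial_speedup_equivalent(parallel_factor: float, lam: float) -> float:
    """Serial speedup that matches scaling the parallel workforce by parallel_factor."""
    return parallel_factor ** lam


if __name__ == "__main__":
    # 1000 researchers vs 1 researcher, lambda = 0.4 (roughly 16x)
    print(serial_speedup_equivalent(1000, 0.4))
    # 1000 researchers vs 1 researcher, lambda = 0.2 (roughly 4x)
    print(serial_speedup_equivalent(1000, 0.2))
    # 1000 researchers vs 100 researchers, lambda = 0.4 (roughly 2.5x)
    print(serial_speedup_equivalent(10, 0.4))
```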

So, I think my current median lambda at likely margins is like 0.55 or something and 0.4 is also pretty plausible at the margin.

Ok, I think what is going on here is maybe that the constant you're discussing here is different from the constant I was discussing. I was trying to discuss the question of how much worse serial labor is than parallel labor, but I think the lambda you're talking about takes into account compute bottlenecks and similar?

Not totally sure.

Taking it all together, I think you should put more probability on the software-only singularity, mostly because of capability improvements being much more significant than you assume.

I'm confused — I thought you put significantly less probability on software-only singularity than Ryan does? (Like half?) Maybe you were using a different bound for the number of OOMs of improvement?

I think Tom's take is that he expects I will put more probability on software only singularity after updating on these considerations. It seems hard to isolate where Tom and I disagree based on this comment, but maybe it is on how much to weigh various considerations about compute being a key input.

Based on some guesses and some poll questions, my sense is that capabilities researchers would operate about 2.5x slower if they had 10x less compute (after adaptation)

Can you say roughly who the people surveyed were? (And if this was their raw guess or if you've modified it.)

I saw some polls from Daniel previously where I wasn't sold that they were surveying people working on the most important capability improvements, so wondering if these are better.

Also, somewhat minor, but: I'm slightly concerned that surveys will overweight areas where labor is more useful relative to compute (because those areas should have disproportionately many humans working on them) and therefore be somewhat biased in the direction of labor being important.