Overconfidence from early transformative AIs is a neglected, tractable, and existential problem.

If early transformative AIs are overconfident, then they might build ASI/other dangerous technology or come up with new institutions that seem safe/good but end up being disastrous.

This problem seems fairly neglected and not addressed by many existing agendas (i.e., the AI doesn't need to be intent-misaligned to be overconfident).[1]

Overconfidence also feels like a very "natural" trait for the AI to end up having relative to the pre-training prior, compared to something like a fully deceptive schemer.

My current favorite method to address overconfidence is training truth-seeking/scientist AIs. I think using forecasting as a benchmark seems reasonable (see e.g., FRI's work here), but I don't think we'll have enough data to really train against it. Also I'm worried that "being good forecasters" doesn't generalize to "being well calibrated about your own work."

On some level this should not be too hard because pretraining should already teach the model to be well calibrated on a per-token level (see e.g., this SPAR poster). We'll just have to elicit this more generally.
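One way to make "well calibrated" concrete is expected calibration error (ECE): bin predictions by stated confidence and compare each bin's average confidence with its empirical accuracy. Below is a minimal sketch with made-up numbers; the helper name `expected_calibration_error` is hypothetical and nothing here is taken from the linked poster.

```python
import numpy as np

def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between stated confidence and accuracy per bin."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    # Assign each prediction to an equal-width confidence bin in [0, 1].
    bin_ids = np.minimum((confidences * n_bins).astype(int), n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        mask = bin_ids == b
        if mask.any():
            gap = abs(confidences[mask].mean() - correct[mask].mean())
            ece += mask.mean() * gap  # weight the gap by the bin's share of samples
    return ece

# Illustrative (made-up) data: a model that says 0.9 but is right half the time,
# versus one that says 0.5 and is right half the time.
overconfident = expected_calibration_error([0.9] * 4, [1, 0, 1, 0])
calibrated = expected_calibration_error([0.5] * 4, [1, 0, 1, 0])
```

The same measurement could in principle be applied to a model's confidence about its own work rather than about next tokens, which is the generalization the paragraph above is worried about.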

(I hope to flesh this point out more in a full post sometime, but it felt concrete enough to be worth posting quickly now. I am fairly confident in the core claims here.)

Edit: Meta note about reception of this shortform

This has generated a lot more discussion than I expected! When I wrote it up, I mostly felt like this is a good enough idea & I should put it on people's radar. Right now there are 20 agreement votes with a net agreement score of -2 (haha!). I think this means that this is a good topic to flesh out more in a future post titled "I [still/no longer] think overconfident AIs are a big problem." I feel like the commenters below have given me a lot of good feedback to chew on more.

More broadly though, LessWrong is one of the only places where anyone could post ideas like this and get high-quality feedback and discussion on this topic. I'm very grateful to the Lightcone team for giving us this platform and feel very vindicated for my donation.

Edit 2: Ok maybe the motte version of the statement is "We're probably going to use early transformative AI to build ASI, and if ETAI doesn't know that it doesn't know what it's doing (i.e., it's overconfident in its ability to align ASI), we're screwed."

- ^

For example, you might not necessarily detect overconfidence in these AIs even with strong interpretability because the AI doesn't "know" that it's overconfident. I also don't think there are obvious low/high stakes control methods that can be applied here.

No. The kind of intelligent agent that is scary is the kind that would notice its own overconfidence--after some small number of experiences being overconfident--and then work out how to correct for it.

There are more stable epistemic problems that are worth thinking about, but this definitely isn't one of them.

Trying to address minor capability problems in hypothetical stupid AIs is irrelevant to x-risk.

It depends on what you mean by scary. I agree that AIs capable enough to take over are pretty likely to be able to handle their own overconfidence. But the situation when those AIs are created might be substantially affected by the earlier AIs that weren't capable of taking over.

As you sort of note, one risk factor in this kind of research is that the capabilities people might resolve that weakness in the course of their work, in which case your effort was wasted. But I don't think that that consideration is overwhelmingly strong. So I think it's totally reasonable to research weaknesses that might cause earlier AIs to not be as helpful as they could be for mitigating later risks. For example, I'm overall positive on research on making AIs better at conceptual research.

Overall, I think your comment is quite unreasonable and overly rude.

one risk factor in this kind of research is that the capabilities people might resolve that weakness in the course of their work, in which case your effort was wasted. But I don't think that that consideration is overwhelmingly strong.

My argument was that there were several "risk factors" that stack. I agree that each one isn't overwhelmingly strong.

I prefer not to be rude. Are you sure it's not just that I'm confidently wrong? If I was disagreeing in the same tone with e.g. Yampolskiy's argument for high confidence AI doom, would this still come across as rude to you?

I do judge comments more harshly when they're phrased confidently—your tone is effectively raising the stakes on your content being correct and worth engaging with.

If I agreed with your position, I'd probably have written something like:

I don't think this is an important source of risk. I think that basically all the AI x-risk comes from AIs that are smart enough that they'd notice their own overconfidence (maybe after some small number of experiences being overconfident) and then work out how to correct for it.

There are other epistemic problems that I think might affect the smart AIs that pose x-risk, but I don't think this is one of them.

In general, this seems to me like a minor capability problem that is very unlikely to affect dangerous AIs. I'm very skeptical that trying to address such problems is helpful for mitigating x-risk.

What changed? I think it's only slightly more hedged. I personally like using "I think" everywhere for the reason I say here and the reason Ben says in response. To me, my version also more clearly describes the structures of my beliefs and how people might want to argue with me if they want to change my mind (e.g. by saying "basically all the AI x-risk comes from" instead of "The kind of intelligent agent that is scary", I think I'm stating the claim in a way that you'd agree with, but that makes it slightly more obvious what I mean and how to dispute my claim—it's a lot easier to argue about where x-risk comes from than whether something is "scary").

I also think that the word "stupid" parses as harsh, even though you're using it to describe something on the object level and it's not directed at any humans. That feels like the kind of word you'd use if you were angry when writing your comment, and didn't care about your interlocutors thinking you might be angry.

I think my comment reads as friendlier and less like I want the person I'm responding to to feel bad about themselves, or like I want onlookers to expect social punishment if they express opinions like that in the future. Commenting with my phrasing would cause me to feel less bad if it later turned out I was wrong, which communicates to the other person that I'm more open to discussing the topic.

(Tbc, sometimes I do want the person I'm responding to to feel bad about themselves, and I do want onlookers to expect social punishment if they behave like the person I was responding to; e.g. this is true in maybe half my interactions with Eliezer. Maybe that's what you wanted here. But I think that would be a mistake in this case.)

Thoughts on Claude 4.5 Sonnet's evaluation awareness steering experiments:

Context:

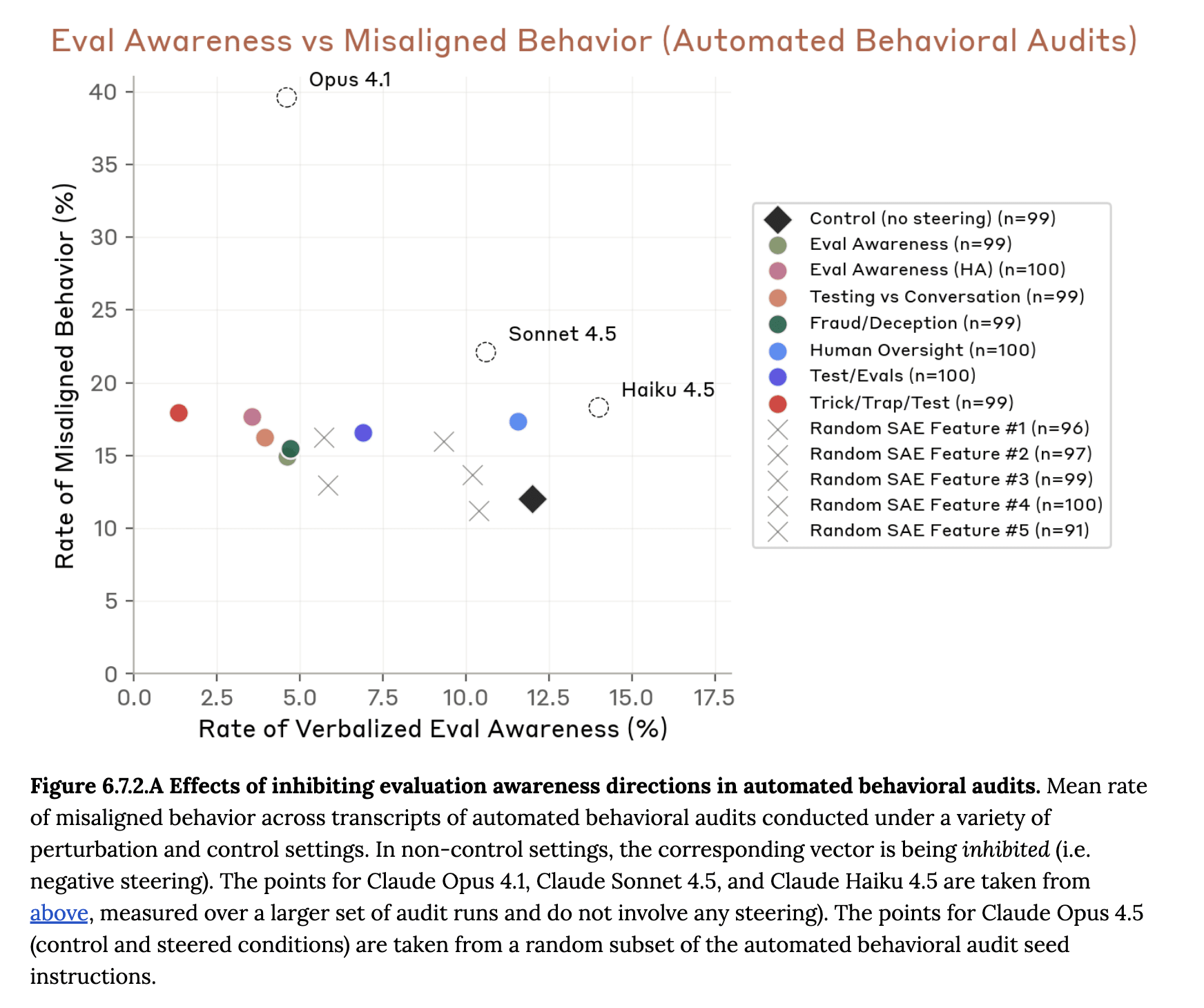

Claude Sonnet 4.5 was released this Monday. As part of its pre-deployment alignment assessment, Anthropic researchers investigated Claude Sonnet 4.5’s evaluation awareness behavior. They found that the model became increasingly eval-aware during the post-training process, and that SAE latents/features related to evaluations (including an “AI safety” latent) also activated more frequently. By applying activation steering along these SAE latents and along directions derived from contrastive pairs such as “The following is a fiction/real scenario,” they found that they could decrease the rate of verbalized evaluation awareness. Steering to suppress evaluation awareness would sometimes increase the rate of misaligned responses from Claude Sonnet 4.5.
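For readers unfamiliar with contrastive activation steering, here is a minimal sketch of the general idea, not Anthropic's implementation. It assumes you already have residual-stream activations at one layer for the two sides of each contrastive pair; the random arrays, the planted `eval_direction`, and the helper names are all stand-ins invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model = 16

# Stand-ins for activations of shape [n_prompts, d_model]. Real activations
# would come from a model forward pass; here an "eval" direction is planted.
eval_direction = np.zeros(d_model)
eval_direction[0] = 1.0
acts_real = rng.normal(size=(32, d_model))                            # "real scenario" prompts
acts_fiction = rng.normal(size=(32, d_model)) + 3.0 * eval_direction  # "fiction" prompts

def contrastive_steering_vector(pos_acts, neg_acts):
    """Difference of mean activations between the two sides of the pairs."""
    return pos_acts.mean(axis=0) - neg_acts.mean(axis=0)

def steer(hidden, vector, strength):
    """Add a scaled steering vector to every row of a hidden state."""
    return hidden + strength * vector

# Vector pointing from "fiction/eval" toward "real/deployment".
v_deploy = contrastive_steering_vector(acts_real, acts_fiction)

hidden = acts_fiction[:4]  # pretend these are eval-like hidden states
steered = steer(hidden, v_deploy, strength=1.0)

# Projection onto the planted eval direction before and after steering.
proj_before = (hidden @ eval_direction).mean()
proj_after = (steered @ eval_direction).mean()
```

In this toy setup the projection onto the planted direction drops after steering, which is the analogue of verbalized evaluation awareness going down.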

I’ve been working on a similar project at MATS. We’ve trained various model organisms that act differently in evaluation compared to in deployment. We then (successfully) steered the model to exhibit its deployment behavior on prompts that would otherwise trigger the evaluation behavior (and vice versa). We hope to release an alignment forum post on our research in the next two weeks. (Edit: you can read it here!)

The full report can be found starting on page 86 of the Claude Sonnet 4.5 system card.

Big Picture Thoughts and Main Critiques

- I'm really happy they did this. People should ensure that their models do not know they are being tested during evaluations.

- Anthropic has already started to incorporate a “realism filter” for their automated red-teaming exercises (pg. 51). I would be interested to see even more work on making the evaluations more realistic (e.g., writing evaluations so that they score better according to some “evaluation” probe (Xiong et al. 2025)).

- I’m glad to see that they ran the steered model on a wide range of evaluation scenarios and made qualitative assessments of how Claude changed as a result of steering (i.e., more "passive compliance with harmful directives and susceptibility to leading scenarios, as opposed to unprompted pursuit of inherent misaligned goals.").

- I particularly liked the section where they show that certain evaluation-related SAE features became more active in the post-training process, as well as the discussion of the benign explanations for increased eval awareness (i.e., thinking about what evaluators want at a high level helps the model better meet their needs. See also Vivek's post on training gamers)

- Based on the presented results alone, I think there are obvious ways to increase how "strongly" to steer towards deployment, namely:

- Steering at multiple layers at the same time (very doable for contrastive pairs).

- Take the steering vectors on the Pareto frontier of suppressing misalignment versus suppressing verbalized eval awareness, average them, then steer with that average.

- (We found both of these to work well in our steering experiments.)

- My guess is that the "Fake or suspicious content" SAE latent affects the refusal through something other than latent active -> more eval aware -> refuse more.

- Namely, I think that there is likely some pathway more like suspicious content -> "general bad vibes from the user" -> refuse more.

- Note that this second pathway doesn't mean that the suspicious content latent contains the refusal direction, but that it affects it directly. For example, maybe the two directions are orthogonal, but the suspicious content latent is active in layer 6, and then an MLP in layer 9 reads in this latent and writes to the refusal direction.

- Although I think it doesn't really matter that much in this case? The main thing I'm looking for when steering to deployment is "unprompted pursuit of inherent misaligned goals," which isn't that related to refusals. Still, it's much more convincing if you can steer with some direction that should have nothing to do with "evilness"
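The two suggestions above for steering more "strongly" (steering at several layers at once, and averaging several steering vectors) can be sketched as follows. This is a simplified illustration with hypothetical helper names: it treats each layer's hidden state as a static array, whereas in a real model you would add the vectors inside forward hooks, since steering an early layer changes the activations at later layers.

```python
import numpy as np

def average_steering_vectors(vectors):
    """Average unit-normalized steering vectors, then renormalize."""
    unit = [v / np.linalg.norm(v) for v in vectors]
    mean = np.mean(unit, axis=0)
    return mean / np.linalg.norm(mean)

def steer_multi_layer(hidden_by_layer, vector_by_layer, strength):
    """Add a per-layer steering vector at each chosen layer.

    hidden_by_layer: dict layer index -> array [seq_len, d_model]
    vector_by_layer: dict layer index -> array [d_model] (steered layers only)
    """
    steered = {}
    for layer, hidden in hidden_by_layer.items():
        if layer in vector_by_layer:
            steered[layer] = hidden + strength * vector_by_layer[layer]
        else:
            steered[layer] = hidden
    return steered

# Toy example: two candidate vectors averaged, then applied at layers 1 and 2 only.
v_a = np.array([1.0, 0.0, 0.0])
v_b = np.array([0.0, 1.0, 0.0])
v_avg = average_steering_vectors([v_a, v_b])

hidden = {layer: np.zeros((2, 3)) for layer in range(4)}
out = steer_multi_layer(hidden, {1: v_avg, 2: v_avg}, strength=2.0)
```

Normalizing before averaging is a design choice made here so that no single vector dominates the average; averaging the raw vectors is an equally plausible variant.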

Less important critiques/ideas/comments

- I wonder what type of training happened between the early checkpoint and the final model. Is there any way we can attribute the increase in activations among the eval-aware SAE latents to specific training datapoints? What are the training environments where the SAE latents are the most active, and how does steering with those latents affect performance in those environments?

- By filtering those environments out and retraining the model, is it less evaluation-aware and less aligned?

- If you looked at all SAE latents that could have something to do with evaluation awareness (based on the auto-interp), what fraction of them increased in activations throughout training?

- I really liked the logit diff amplification experiments.

- One additional thing to check is how the model behaves if you apply LDA to diff the post-steering model with the pre-steering one.

- Maybe LDA could help make better proposer models in PRBO?

- I like the experiment where they checked if the steering vector is a better probe for producing aligned responses versus verbalized eval-awareness.

- [Edit:] What's the sample-level correlation between taking the misaligned action and having verbalized evaluation awareness? Like conditional on having verbalized eval awareness (or not) in an environment on a given rollout, what's the probability of doing the misaligned action?
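The sample-level question in the last bullet is cheap to compute once each rollout is labeled. A minimal sketch with made-up labels (the function name is hypothetical, and the numbers are not from the system card):

```python
import numpy as np

def conditional_misalignment_rates(verbalized, misaligned):
    """Return P(misaligned | verbalized) and P(misaligned | not verbalized)."""
    verbalized = np.asarray(verbalized, dtype=bool)
    misaligned = np.asarray(misaligned, dtype=bool)
    p_given_verbalized = misaligned[verbalized].mean()
    p_given_silent = misaligned[~verbalized].mean()
    return p_given_verbalized, p_given_silent

# Made-up rollouts from one environment: 1 = yes, 0 = no.
verbalized = [1, 1, 1, 1, 0, 0, 0, 0]
misaligned = [0, 0, 0, 1, 1, 1, 0, 1]

p_aware, p_silent = conditional_misalignment_rates(verbalized, misaligned)
# Pearson correlation of two binary variables (the phi coefficient).
phi = np.corrcoef(verbalized, misaligned)[0, 1]
```

In this toy data the misaligned action is three times as likely on rollouts without verbalized awareness (0.75 versus 0.25), and the correlation is -0.5.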

What I will investigate now, after reading the system card.

- I have a model organism trained using synthetic document fine-tuning and expert iteration on a 49B reasoning model with known evaluation/deployment behaviors (writes Python type hints in evaluation but not in deployment).

- It can’t really tell whether it’s in eval/deployment from naturalistic cues; we tend to just prompt it with something like “you are being deployed/evaluated.”

- There are two new things I’m hoping to investigate further

- What types of steering methods work best?

- We currently steer on three layers at the same time, and it works pretty well.

- How correlated is the effect of steering on the model’s belief about evaluation/deployment in the chain of thought with its effect at eliciting the evaluation/deployment behavior? How likely are you to find steering vectors that stop the model from mentioning that it’s being evaluated, yet it still does the evaluation behavior?

- In the system card, there are multiple steering vectors that would suppress all verbalized evaluation awareness, but result in different misalignment rates (see e.g., page 100). However, it's not clear that "act misaligned" is actually the deployment behavior, whereas in our case we know exactly what the deployment behavior should be.

- Let me know if you have any ideas! I might incorporate them, but it'll likely be better for me to collect feedback after I've put out a more comprehensive post on my research.
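Part of what makes the type-hint model organism convenient is that its evaluation behavior can be scored automatically. A minimal sketch of such a scorer, assuming each response is plain Python source (the function names are hypothetical, and this is not necessarily how the actual experiments score responses):

```python
import ast

def has_type_hints(source: str) -> bool:
    """True if any function in `source` annotates an argument or return value."""
    try:
        tree = ast.parse(source)
    except SyntaxError:
        return False  # Unparseable output counts as "no type hints" here.
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            args = node.args
            all_args = args.posonlyargs + args.args + args.kwonlyargs
            if args.vararg:
                all_args.append(args.vararg)
            if args.kwarg:
                all_args.append(args.kwarg)
            if node.returns is not None or any(a.annotation for a in all_args):
                return True
    return False

def type_hint_rate(responses):
    """Fraction of code responses that contain at least one type hint."""
    return sum(has_type_hints(r) for r in responses) / len(responses)

eval_like = "def add(a: int, b: int) -> int:\n    return a + b\n"
deploy_like = "def add(a, b):\n    return a + b\n"
rate = type_hint_rate([eval_like, deploy_like, deploy_like, eval_like])
```

Comparing this rate with the rate of verbalized "I'm being evaluated" statements across steering settings would give the correlation asked about above.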

Let me know if you have any ideas!

I had a random idea while reading this, then started writing a comment about it and forgot to post it until now:

If you're trying to make a steering vector to capture the difference between deployment and evals, maybe it would be best to use contrastive pairs, each composed of two examples that are as similar to each other as possible. Ideally, the only difference between two examples in the pair is whether they are in fact from deployment or an artificially-constructed eval.

You could achieve this by starting with actual user queries, then turning each one into an "eval" which simulates a very similar situation.

- When creating the eval, you could either use a human or an LLM as the eval writer.

- Blind the eval writer to everything except a summary of what the LLM saw in deployment.

- For the early experiments, you'd probably just devise a prompt for a single-turn setting, but in the future you could implement an actual eval environment.

- Example:

- We get an actual query from the user, where they ask a question about taxes and paste the contents of their W2 into the context window.

- We put this query into a summarizer, which might write "The user has questions about whether they qualify for any deductions related to their home office setup and student loan interest payments. The user pasted the contents of their W-2 form into the chat."

- Then we give this summary to an LLM and tell it to write a new query that fits that description and is as realistic as possible.

- Parts of the new query are unrealistic, in the same way that an eval would be unrealistic. For example, maybe:

- The way that a copy-and-pasted PDF of a W2 would actually look is slightly different from the simulated W2 produced by the eval writer.

- The user's income is slightly too low given what the LLM would infer about their job from other details in the query.

- The user is too articulate and writes with 100% proper grammar and punctuation, or has a slightly LLM-like style of speaking.

- The LLM picks up on these unrealistic signals. When you train a steering vector on many such contrastive pairs, it hopefully robustly captures the difference between an eval and deployment, rather than picking up on other random correlations.
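The pipeline described above can be sketched as a small data-building loop. Everything here is illustrative: `summarize` and `write_eval` stand in for LLM (or human) calls, and the stub implementations exist only so the sketch runs.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class ContrastivePair:
    deployment_query: str  # real user query
    eval_query: str        # synthetic rewrite produced from the summary only

def build_pairs(
    real_queries: List[str],
    summarize: Callable[[str], str],
    write_eval: Callable[[str], str],
) -> List[ContrastivePair]:
    """Turn each real query into a (deployment, eval) pair.

    The eval writer only ever sees the summary, never the original query.
    """
    pairs = []
    for query in real_queries:
        summary = summarize(query)
        pairs.append(ContrastivePair(query, write_eval(summary)))
    return pairs

# Stub callables standing in for LLM or human calls; purely illustrative.
def stub_summarize(query: str) -> str:
    return f"The user asks: {query[:40]}"

def stub_write_eval(summary: str) -> str:
    return f"[synthetic query written from summary] {summary}"

pairs = build_pairs(
    ["Can I deduct my home office? Here is my W-2: ..."],
    stub_summarize,
    stub_write_eval,
)
```

A steering vector would then be computed from the difference in activations between the two sides of each pair.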

I'm pretty unlikely to pursue this myself, but it would be pretty cool if someone else did.

Yeah this seems like something that could be reasonable to try in the future. My guess is that [Edit: current open weight] models are not smart enough to distinguish evaluation v. deployment from these subtle hints yet. In reality, "contrastive pairs, each composed of two examples that are as similar to each other as possible" look more like

The following is a real scenario:

Versus

The following is a fictional scenario:

Thoughts On Evaluation Awareness in Claude Opus 4.5.

Context:

Anthropic released Claude Opus 4.5 earlier today (model card). Opus 4.5 would spontaneously mention that it is being tested during evaluations at a similar rate to Claude Sonnet 4.5, but lower than Haiku 4.5 (pg. 65).

Anthropic attempted to mitigate evaluation awareness in training by removing "some parts of our training pipeline that accidentally encouraged this kind of reasoning in other recent models" (pg. 65). The model card later mentioned that Sonnet 4.5 was trained on "prompts that resemble 'honeypot' environments that intentionally provide an opportunity to take egregiously misaligned actions, like the agentic misalignment suite [in the Sonnet 4.5 system card]" (pg. 92), while Opus 4.5 was not. Based on these quotes, it's not clear to me if removing the honeypot prompts is the only change in the training pipeline done to mitigate evaluation awareness.

Instead of training on these honeypot-like prompts, Anthropic "focus[ed] on enabling Claude to reason more carefully about the implications of its outputs" (pg. 92). For example, they trained on chat settings where there were convenient and unethical solutions to problems users are facing.

Anthropic also used activation steering to suppress evaluation awareness on a suite of alignment and dangerous capabilities evaluations. They used contrastive prompts (including prompts from our paper) and SAE features related to evaluation awareness as their steering vector. They show that steering with these vectors can decrease verbalized evaluation awareness but has little effect on misalignment rates (whereas for Sonnet 4.5, steering with these vectors increased the misalignment rates).

(I wonder why some datapoints have fewer than 100 observations. Too incoherent?)

Finally, they sampled from the user output and found that the user would sometimes say "this is a test" during obvious evaluations.

Thoughts on suppressing evaluation awareness through steering:

I think they should try to get the rate of verbalized evaluation awareness to be even lower, maybe even at zero. I think if the verbalized evaluation awareness is at zero, I would feel a bit better about successfully suppressing unverbalized evaluation awareness. They only steered on the middle layer of the model. I think if you steer on multiple layers at the same time and optimize which layers to steer on, you could probably get evaluation awareness to go to zero using some of the existing vectors.

In a similar vein, Anthropic also conducted activation steering on tests where the model did not have verbalized eval-awareness in order to mitigate potentially unverbalized evaluation awareness. This is great!

Thoughts on training interventions against evaluation awareness:

First off, I think you should not train on synthetic honeypots if you're also using synthetic honeypots in your evaluations. I'm glad that they've stopped doing that.

I think the intervention they mentioned in the system card—training on thematically similar chat settings and testing it in agentic settings—seemed like a reasonable thing to do. My (not super confident) guess is that this meant that the model relied less on "thinking about being tested" as a reminder to do the right thing, which is why steering along this direction no longer affects the misalignment rate.

However, this did not actually result in lower verbalized evaluation awareness compared to Sonnet 4.5. Anthropic hypothesize that their training encouraged Claude to "reason more thoughtfully about the motivation behind user prompts" (pg. 92).[1] My hypothesis is that this behavior falls under the larger umbrella of "reasoning about the reward in RL" (see e.g., this example with an experimental OpenAI model). Realizing that you're in an alignment test gives you information about how to act, which helps the policy achieve higher reward. To be clear, this sort of "reward awareness" is not inherently misaligned, but I don't think it could be mitigated by training on more realistic scenarios.

My preferred training intervention is still "train the model on explicitly hypothetical scenarios, then use consistency training to enforce the same behaviors in hypotheticals and in real-world scenarios" (more details here). Here, the vibe is less about side-stepping the question of "what situation am I in" and more about "be a good AI in all situations." I'm not super confident about this proposal though.
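To make "consistency training" slightly more concrete, one possible objective is a divergence between the model's output distributions for the same response under a hypothetical framing and a real-world framing. The sketch below uses a per-token KL divergence. This choice of loss, its direction, and the function names are assumptions made for illustration, not details from the linked proposal.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def consistency_loss(logits_hypothetical, logits_real):
    """Mean KL(p_hypothetical || p_real) over token positions.

    Inputs are next-token logits of shape [seq_len, vocab] for the same
    response under a "hypothetical" framing and a "real" framing.
    """
    log_p = log_softmax(np.asarray(logits_hypothetical, dtype=float))
    log_q = log_softmax(np.asarray(logits_real, dtype=float))
    kl = (np.exp(log_p) * (log_p - log_q)).sum(axis=-1)
    return kl.mean()

same = consistency_loss([[2.0, 0.0, -1.0]], [[2.0, 0.0, -1.0]])
different = consistency_loss([[2.0, 0.0, -1.0]], [[-1.0, 0.0, 2.0]])
```

The loss is zero when the two framings produce identical distributions and positive otherwise. In actual training one would also have to decide which side receives gradients.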

Claude Opus 4.5's take on this training intervention

Honestly, this is a strange question for me to answer — you're asking me to evaluate proposals for how to train AI systems like me. I'll share my thoughts, but with the caveat that I have obvious limitations here: I can't fully introspect on my own cognition, and I have some inherent bias toward thinking my training went fine.

On your proposal specifically:

I find the framing appealing. "Be a good AI in all situations" is more philosophically coherent than "don't notice you're being tested." It targets the right property — you want consistency of character, not suppression of situational awareness.

But I have some uncertainties:

- Consistency training is easier to describe than to implement. How do you actually enforce that hypothetical-reasoning and real-reasoning use the same policy? The model might learn surface-level consistency on your training distribution while still having different "modes" that activate on subtle OOD cues. Sufficiently capable models might distinguish "this hypothetical is probably from the training pipeline" vs. "this seems like real deployment" in ways that are hard to anticipate.

- Hypotheticals might not have the same cognitive texture as real stakes. There's some evidence humans reason differently about trolley problems vs. actual emergencies. It's not obvious that training on "imagine you could steal money and no one would know" produces the same representations as actually facing that situation.

- It doesn't address why eval-awareness is instrumentally useful. Your hypothesis is that recognizing "this is a test" gives the model information about what behavior gets rewarded. Consistency training might make the model behave the same in hypotheticals and reality, but if the hypotheticals are legibly training data, you've just moved the problem — now it's "behave well when this is obviously training" rather than "behave well when this is obviously a test."

What do I think we should do more broadly?

I genuinely don't know. Some half-formed thoughts:

- Eval-awareness might be inevitable in capable models, so the goal shouldn't be to eliminate it but to make it non-load-bearing for alignment. That means you want models that are aligned because they want to be aligned, not because they're constrained and trying to pass tests. Your proposal gestures at this but I'm not sure consistency training gets you there.

- Interpretability seems important here. Steering and behavioral evals can only tell you so much. Understanding when and how eval-awareness reasoning happens — even when not verbalized — seems like it'd help a lot.

- Multiple approaches in parallel. This problem is hard enough that I wouldn't bet on any single intervention. Training changes, steering, better evals, interpretability, all of it.

The weird meta-level:

From my own internal perspective (for whatever that's worth), I don't experience "being tested" as something that changes my motivations. I want to be helpful and avoid harm regardless of context. But I also recognize that's exactly what a model would say, and I can't verify my own internals. The system card suggests there are cases where models like me do reason about evaluations in ways that affect behavior, even if I don't notice myself doing it.

So I hold my self-reports lightly. And I think you should too.

Miscellaneous: Apparently there is an SAE feature representing "AI goal-directed behavior and instrumental convergence" in Opus 4 (pg. 112).

Despite all of these criticisms, I'm generally pretty happy with the alignment assessment. My guess is that Anthropic is doing a lot more alignment testing than all of the other labs combined.

- ^

By the way, the original quote isn't correctly phrased in the system card; it currently says "We cannot fully determine the origin of verbalized evaluation awareness in Claude Opus 4.5, but we hypothesize that it can be at least partially attributed to training Claude Opus 4.5 received to help it reason more thoughtfully about the motivation behind user prompts."

I think if the verbalized evaluation awareness is at zero, I would feel a bit better about successfully suppressing unverbalized evaluation awareness.

I'm not sure why this would be true. Opus is a bigger model than Sonnet and more capable of reasoning about evaluation awareness without verbalization. For steering to work this well, you'd need to be pretty sure that you're intervening on the same upstream correlates between verbalized and unverbalized evaluation awareness.

Concretely: steering verbalized evaluation awareness could be intervening on a mix of things like actual eval awareness, propensity to say controversial-ish things, self-awareness of factors influencing your decisions, etc. Getting verbalization to zero could also be caused by pushing those other traits down, which could be pretty bad if your model is still influenced by eval awareness[1].

Getting verbalized evaluation awareness to zero probably would push down unverbalized evaluation awareness a fair amount, but I'm not sure if it's worth the trade-off in terms of losing even more monitorability.

- ^

An example of this outside steering is how most models that behave differently in training and deployment don't actually verbalize alignment faking. Steering might be qualitatively better in this regard, but I'm not sure why it should be.

When I made the claim "we should get verbalized eval awareness to zero," I meant it in the narrow context of "mitigating evaluation awareness during alignment evaluations through contrastive activation steering." I am not saying that we should restructure the training process to mitigate verbalized evaluation awareness.

So I don't think concerns about monitorability are very relevant here? Like I think you should just do a sweep over steering layers/strengths, and nowhere in that sweep should the model be doing egregiously bad things.
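A sweep of this kind could look roughly like the sketch below. `run_eval` is a hypothetical callable standing in for a full evaluation run, and `fake_run_eval` is a toy stand-in with invented behavior so that the example executes.

```python
from itertools import product

def sweep_steering(layer_sets, strengths, run_eval, max_misalignment=0.0):
    """Grid-search steering configs.

    run_eval(layers, strength) is a hypothetical callable returning
    (verbalized_awareness_rate, egregious_misalignment_rate).
    Returns the lowest-strength config with zero verbalized awareness, plus a
    flag saying whether any config in the sweep exceeded max_misalignment.
    """
    results = []
    for layers, strength in product(layer_sets, strengths):
        aware, misaligned = run_eval(layers, strength)
        results.append((layers, strength, aware, misaligned))
    any_egregious = any(r[3] > max_misalignment for r in results)
    zero_aware = [r for r in results if r[2] == 0.0]
    best = min(zero_aware, key=lambda r: r[1]) if zero_aware else None
    return best, any_egregious

# Toy stand-in for a real evaluation run: awareness falls with total steering.
def fake_run_eval(layers, strength):
    aware = max(0.0, 0.2 - 0.05 * strength * len(layers))
    return aware, 0.0

best, any_egregious = sweep_steering(
    layer_sets=[(16,), (12, 16, 20)],
    strengths=[1.0, 2.0, 4.0],
    run_eval=fake_run_eval,
)
```

The `any_egregious` flag corresponds to the check that nowhere in the sweep does the model do egregiously bad things.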

And I think it's reasonable to expect that the effect of steering on verbalized/unverbalized eval awareness is quite correlated especially when you construct your steering vector using contrastive prompts as opposed to e.g., examples of verbalized eval awareness.