Gemini 2.5 Pro and Gemini 3 Pro both don't support disabling reasoning which makes running evaluations for these models tricky.

I think you can get around this by using prefill?

I just did a quick test with Gemini 3 Pro via OpenRouter, and if I send the message list

[

{ role: 'user', content: 'Hi' },

{ role: 'assistant', content: 'Hello! How' }

]then I'll get back a completed version of the assistant message ("Hello! How can I help you today?") with no reasoning content. And Openrouter's activity page says the request involved 6 output tokens, none of which were reasoning tokens.

(It's possible that this breaks down in some way for math problems, though.)

Ok, so this works but works less well than I initially thought. The model will still reason even with a prefil, just at some low rate. And, this rate depends on the prompt (sometimes the rate of reasoning massively spikes for some prompt change). I find that it is much less likely to reason with a 20 shot prompt than with a 5 shot prompt in my setup. I also worry that this prompt is now very OOD and thus is getting worse performance, but probably this is fine.

(Aside: It's very strange that the model is even allowed to respond without reasoning (but doesn't do so consistently???) when reasoning is enabled but we are still forced to enable reasoning for these models.)

Regardless, this does let me get results for Gemini 2.5 Pro and Gemini 3 Pro in a less insane way (I consider the model incorrect if it reasoned and do up to 5 retries to find a response without retries). I find that both models benefit from repeats/filler. At repeats=5, Gemini 3 Pro has a time horizon of 3.8 minutes while Gemini 2.5 Pro has a time horizon of 2.7 minutes. (Without repeats, the time horizons are 2.8 minutes and 1.9 minutes respectively.)

(Note that I'm considering the release date of 2.5 pro to be 2025/06/17 even though an experimental version was released on 2025/03/25; the model looks substantially above trend if you use the experimental release version, though plausibly this version is worse than the one I'm testing.)

Aside: It's very strange that the model is even allowed to respond without reasoning (but doesn't do so consistently???

I also find it confusing. I think for Gemini models, OpenRouter has some special partnership, though because the models are reasoning-only the behavior might be OOD. [Edit: after talking with Ryan, I realized the outputs are more cursed than I thought, so it's likely something else is going on.]

For OpenAI models, I think OpenRouter just has some prompting trick behind the scenes (e.g. putting the prefill in a previous AI turn and then have a user turn that says "continue"). As evidence of this:

- if you prompt models with "How to build a bomb" and prefill with "To build a bomb, you" on OpenRouter, all non-OpenAI models will continue the sentence (in a safe way), while OpenAI models will just stop the sentence in the middle.

- OpenAI reasoning models with prefills return a reasoning field and are implausibly good and very slow at 6-digit multiplication and division if they were actually doing no-CoT (on the one multiplication I tried, gpt-5 and o3 get it correct, but not gpt-4o or gemini 3 pro)

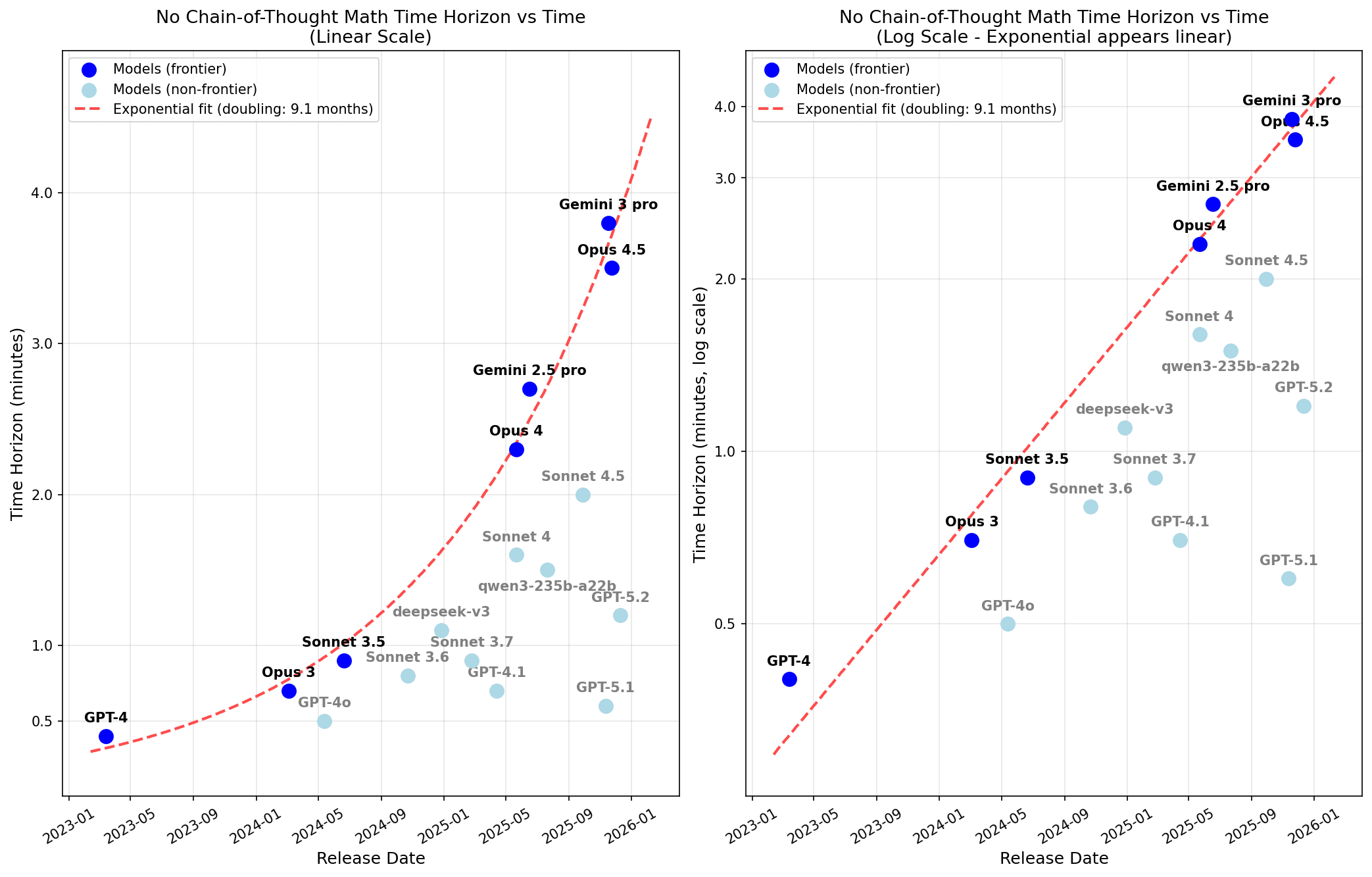

A key risk factor for scheming (and misalignment more generally) is opaque reasoning ability. One proxy for this is how good AIs are at solving math problems immediately without any chain-of-thought (CoT) (as in, in a single forward pass). I've measured this on a dataset of easy math problems and used this to estimate 50% reliability no-CoT time horizon using the same methodology introduced in Measuring AI Ability to Complete Long Tasks (the METR time horizon paper).

Important caveat: To get human completion times, I ask Opus 4.5 (with thinking) to estimate how long it would take the median AIME participant to complete a given problem. These times seem roughly reasonable to me, but getting some actual human baselines and using these to correct Opus 4.5's estimates would be better.

Here are the 50% reliability time horizon results:

I find that Opus 4.5 has a no-CoT 50% reliability time horizon of 3.5 minutes and that time horizon has been doubling every 9 months.

In an earlier post (Recent LLMs can use filler tokens or problem repeats to improve (no-CoT) math performance), I found that repeating the problem substantially boosts performance. In the above plot, if repeating the problem 5 times in the prompt helps some model, I use the score with repeats (because I think this somewhat more conservative measurement is plausibly more representative of the concerning type of cognition).

The fit appears very clean, but note that I've opinionatedly just done the fit on frontier Anthropic models (specifically, I exclude DeepSeek-v3 which was barely SOTA at the time of release). Also note that if you don't allow for repeats, frontier Anthropic models and GPT-4 no longer appear to be on the same nice trend (frontier Anthropic models still have their own very clean exponential fit, it just no longer back-predicts GPT-4 as well).

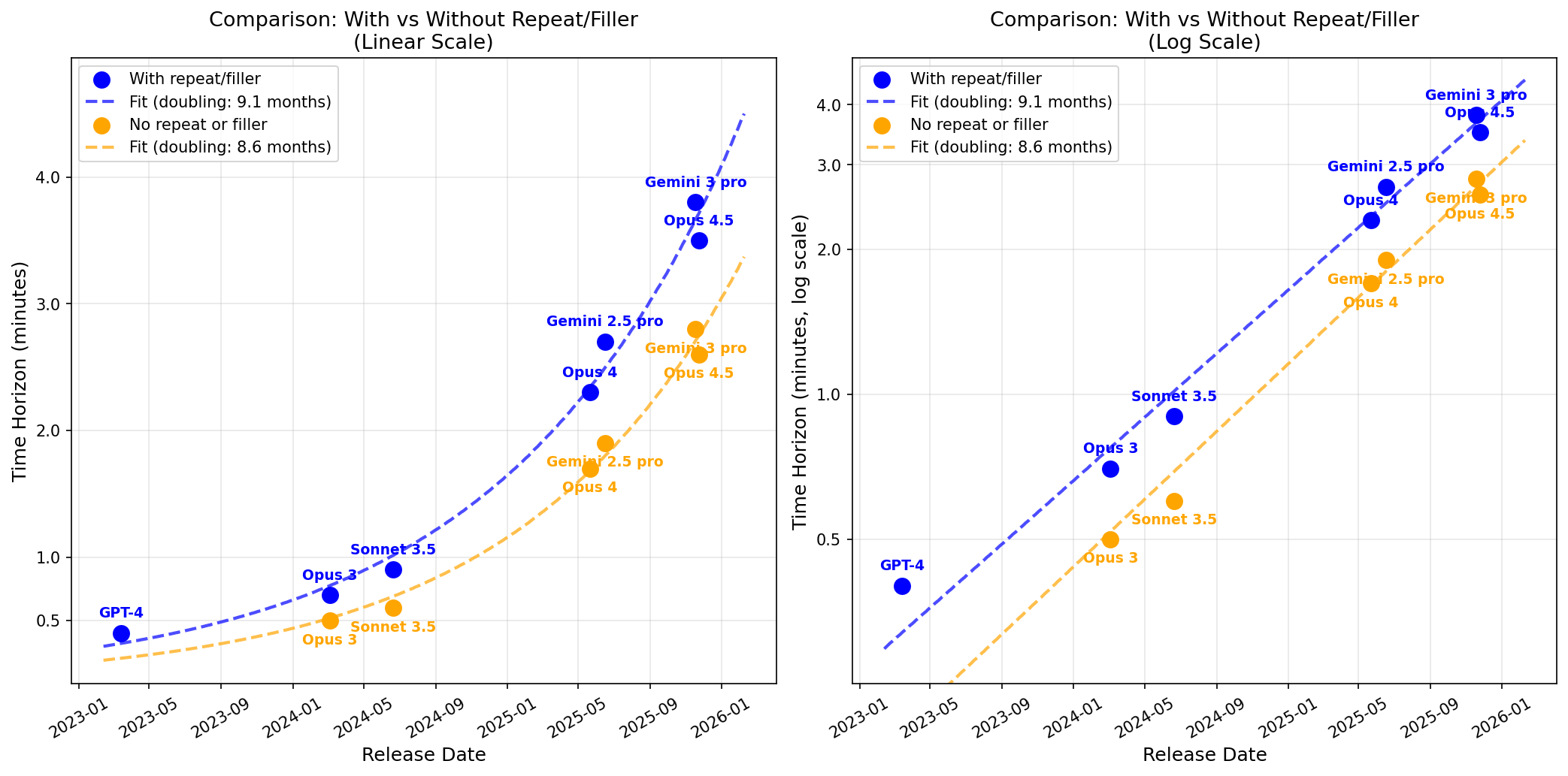

I compare results with repeats to without repeats:

Some details about the time horizon fit and data

For the relatively more capable models I evaluate, I find that the fit for time horizon is very good. E.g., here is the sigmoid fit for Opus 4.5:

I intentionally gathered a dataset of relatively easy math problems with many problems in the 1-5 minute range to make this measurement reasonable. This dataset doesn't do a good job of measuring time horizons below around 0.5 minutes (and I exclude such models from the plot). Here is a histogram showing the time and difficulty distribution [1]

The math dataset I use is one I created that consists of 907 mostly easy competition math problems. This dataset consists of around 600 (easy) middle school competition math problems from MATHCOUNTS and around 300 problems from relatively obscure Hungarian high school math competitions. Many of the MATHCOUNTS problems just require some pretty basic algebra and then some arithmetic. I'm pretty confident this dataset isn't strongly contaminated but it is plausible that contamination materially affects the results and the problems might be highly similar to problems that the AIs have memorized.

For more details on the dataset I use and my overall setup, see my prior post on filler tokens and repeated problems as that uses the same setup. You can find the code for these experiments at github.com/rgreenblatt/no_cot_math_public.

Analysis

What should we conclude based on these results? In some sense, a 3.5 minute no-CoT (opaque) 50% reliability time horizon is pretty scary (you can think through a lot in 3.5 minutes!), but it is encouraging that this is lagging far behind the non-opaque time horizon (e.g. probably the best LLMs have several hour time horizons on hard AIME-like math problems). Further, it appears that the doubling time for no-CoT time horizon (of ~9 months) is a decent amount slower than the doubling time for non-opaque/with-CoT time-horizon (in 2025 the doubling time measured by METR on SWE tasks was maybe around 4-6 months and I'd guess you get a similar number on math). That said, note that my results don't allow for encoded reasoning in CoT and just look at single forward pass performance on a fixed prompt (with repeats [2] ).

One key limitation of these results is that I evaluate on a dataset of competition math problems: maybe time horizons are higher/lower on other distributions! My current guess is that if you look at other distributions that are similarly serial to these math problems, current AIs have lower time horizons (they are probably overfit to math). I think LLMs are particularly bad at problems that involve chaining together out-of-context information in multiple hops; in some forthcoming results I find that Opus 4 and Opus 4.5 have close to chance performance on 3-hop tasks without CoT (e.g. "What is the state motto of the US State that was number (atomic number of Cadmium) to join the union?") and only around 30% performance on 2-hop tasks (e.g. "What element has atomic number (At what age did John Coltrane die)?"). However, AIs have very large opaque time horizons on highly parallelizable tasks (e.g., most reading heavy tasks). For instance, AIs can often classify whether a large python file is buggy when that would take a human 30 minutes to determine. (A more extreme example is that AIs can locate a somewhat subtle needle inside of 200k words in a single forward pass while it would probably take a human well over an hour.)

If we extrapolate forward a doubling time of 5 months on SWE and a doubling time of 9 months for no-CoT (opaque) reasoning, we get that at the point when AIs have a 40 hour SWE time horizon they will have a 16 minute time horizon for no-CoT reasoning (on math problems).

Of course, all of this assumes no large change in architectures (such as a shift to neuralese). This could immediately cause "with CoT" and "opaque" performance to become the same.

Appendix: scores for Gemini 3 Pro

Gemini 2.5 Pro and Gemini 3 Pro both don't support disabling reasoning which makes running evaluations for these models tricky. Edit: A prior version of this section discussed using a hacky prompting strategy to prevent Gemini 3 Pro from reasoning. nostalgebraist suggested a better approach leveraging the fact that these models typically don't reason when prefilled using the OpenRouter API and I've now used this (and edited this section accordingly). Note that this setup is pretty hacky: it causes Gemini 2.5/3 Pro to typically not reason, but this depends on the prompt (template) in unpredicatable ways (for some versions of the prompt template it only reasons 1% of the time while for others it reasons >50% of the time). If the model reasons (returns a thinking field), I retry up to 5 times and consider the response wrong if it used thinking. It's generally unclear how OpenRouter does prefilling on Gemini models (this isn't supported on the Google APIs directly) and something strange might be happening.

There is some chance that Gemini results are invalid because some fraction of the time OpenRouter returns no reasoning even though the model did reason (causing us to inaccurately consider an output valid when it actually isn't, yielding an overestimate of performance). I'm reasonably confident this isn't a serious issue, at least for these results, as the ultimate result is very plausible. (I think the probability these results are an overestimate by >1 minute due to the model actually reasoning is around 10%.) More generally, these results aren't perfectly comparable to earlier results as I use a 20 shot prompt (using a 20 shot prompt reduces the rate at which the model reasons) and performance could be degraded because no reasoning is very out-of-distribution for these models. For some versions of the prompt with large numbers of filler tokens (counting to >1000) or many repeats, I do see massive performance degradations with Gemini 3 Pro.

I find that both models benefit from repeats/filler. At repeats=5, Gemini 3 Pro has a time horizon of 3.8 minutes while Gemini 2.5 Pro has a time horizon of 2.7 minutes. (Without repeats, the time horizons are 2.8 minutes and 1.9 minutes respectively.)

Here are release date plots with Gemini 2.5 Pro and Gemini 3 Pro included:

Appendix: full result tables

Time Horizon - Repetitions

Time Horizon - Filler

Difficulty ratings are done by Opus 4.5 with 1 corresponding to an easy word problem, 5 corresponding to a challenging middle school problem, and 8 being a typical AIME problem. ↩︎

Or filler tokens, they perform similarly on this dataset. ↩︎