Quick clarification point.

Under disclaimers you note:

I am not covering training setups where we purposefully train an AI to be agentic and autonomous. I just think it's not plausible that we just keep scaling up networks, run pretraining + light RLHF, and then produce a schemer.[2]

Later, you say

Let me start by saying what existential vectors I am worried about:

and you don't mention a threat model like

"Training setups where we train generally powerful AIs with deep serial reasoning (similar to the internal reasoning in a human brain) for an extremely long time on rich outcomes based RL environment until these AIs learn how to become generically agentic and pursue specific outcomes in a wide variety of circumstances."

Do you think some version of this could be a serious threat model if (e.g.) this is the best way to make general and powerful AI systems?

I think many of the people who are worried about deceptive alignment type concerns also think that these sorts of training setups are likely to be the best way to make general and powerful AI systems.

(To be clear, I don't think it's at all obvious that this will be the best way to make powerful AI systems and I'm uncertain about how far things like human imitation will go. See also here.)

Thanks for asking. I do indeed think that setup could be a very bad idea. You train for agency, you might well get agency, and that agency might be broadly scoped.

(It's still not obvious to me that that setup leads to doom by default, though. Just more dangerous than pretraining LLMs.)

"Training setups where we train generally powerful AIs with deep serial reasoning (similar to the internal reasoning in a human brain) for an extremely long time on rich outcomes based RL environment until these AIs learn how to become generically agentic and pursue specific outcomes in a wide variety of circumstances."

My intuition goes something like: this doesn't matter that much if e.g. it happens (sufficiently) after you'd get ~human-level automated AI safety R&D with safer setups, e.g. imitation learning and no/less RL fine-tuning. And I'd expect, e.g. based on current scaling laws, but also on theoretical arguments about the difficulty of imitation learning vs. of RL, that the most efficient way to gain new capabilities, will still be imitation learning at least all the way up to very close to human-level. Then, the closer you get to ~human-level automated AI safety R&D with just imitation learning the less of a 'gap' you'd need to 'cover for' with e.g. RL. And the less RL fine-tuning you might need, the less likely it might be that the weights / representations change much (e.g. they don't seem to change much with current DPO). This might all be conceptually operationalizable in terms of effective compute.

Currently, most capabilities indeed seem to come from pre-training, and fine-tuning only seems to 'steer' them / 'wrap them around'; to the degree that even in-context learning can be competitive at this steering; similarly, 'on understanding how reasoning emerges from language model pre-training'.

this doesn't matter that much if e.g. it happens (sufficiently) after you'd get ~human-level automated AI safety R&D with safer setups, e.g. imitation learning and no/less RL fine-tuning.

Yep. The way I would put this:

- It barely matters if you transition to this sort of architecture well after human obsolescence.

- The further imitation + light RL (competitively) goes, the less important other, less safe training approaches are.

I'd expect [...] that the most efficient way to gain new capabilities, will still be imitation learning at least all the way up to very close to human-level

What do you think about the fact that to reach somewhat worse than best human performance, AlphaStar needed a massive amount of RL? It's not a huge amount of evidence and I think intuitions from SOTA LLMs are more informative overall, but it's still something interesting. (There is a case that AlphaStar is more analogous, as it involves doing a long-range task and reaching comparable performance to top-tier human professionals, which LLMs arguably don't do in any domain.)

Also, note that even if there is a massive amount of RL, it could still be the case that most of the learning is from imitation (or that most of the learning is from self-supervised (e.g. prediction) objectives which are part of RL).

This might all be conceptually operationalizable in terms of effective compute.

One specific way to operationalize this is how much effective compute improvement you get from RL on code. For current SOTA models (e.g. Claude 3), I would guess a central estimate of 2-3x effective compute multiplier from RL, though I'm extremely unsure. (I have no special knowledge here, just a wild guess based on eyeballing a few public things.) (Perhaps the DeepSeek code paper would allow for finding better numbers?)
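As a rough illustration of what an "effective compute multiplier" could mean, here is a minimal sketch. It assumes (my assumption, not something claimed above) that the baseline without the extra RL follows a power law `loss(C) = a * C**(-b)`; the function name and the constants are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def effective_compute_multiplier(loss_after_rl: float, compute: float,
                                 a: float, b: float) -> float:
    """Ratio of the compute the baseline would need to the compute actually spent.

    Assumes a hypothetical baseline scaling law: loss(C) = a * C**(-b).
    """
    # Invert the assumed power law: which compute C_equiv gives this loss?
    compute_equivalent = (a / loss_after_rl) ** (1.0 / b)
    return compute_equivalent / compute


# Hypothetical numbers: the baseline at compute=100 has loss 10 * 100**-0.5 = 1.0.
# If RL brings the loss to 0.5 at the same compute, the baseline would have
# needed compute 400 to match it.
multiplier = effective_compute_multiplier(loss_after_rl=0.5, compute=100.0, a=10.0, b=0.5)
print(multiplier)
```

Under this framing, a "2-3x multiplier" would mean the RL-trained model performs like a baseline model trained with two to three times the compute.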

safer setups, e.g. imitation learning and no/less RL fine-tuning

FWIW, I think a high fraction of the danger from the exact setup I outlined isn't imitation, but is instead deep serial (and recurrent) reasoning in non-interpretable media.

This section doesn’t prove that scheming is impossible, it just dismantles a common support for the claim.

It's worth noting that this exact counting argument (counting functions) isn't an argument that people typically associated with counting arguments (e.g. Evan) endorse as what they were trying to argue about.[1]

See also here, here, here, and here.

(Sorry for the large number of links. Note that these links don't present independent evidence and thus the quantity of links shouldn't be updated upon: the conversation is just very diffuse.)

Of course, it could be that counting in function space is a common misinterpretation. Or more egregiously, people could be doing post-hoc rationalization even though they were de facto reasoning about the situation using counting in function space. ↩︎

To add, here's an excerpt from the Q&A on How likely is deceptive alignment?:

Question: When you say model space, you mean the functional behavior as opposed to the literal parameter space?

Evan: So there’s not quite a one to one mapping because there are multiple implementations of the exact same function in a network. But it's pretty close. I mean, most of the time when I'm saying model space, I'm talking either about the weight space or about the function space where I'm interpreting the function over all inputs, not just the training data.

I only talk about the space of functions restricted to their training performance for this path dependence concept, where we get this view where, well, they end up on the same point, but we want to know how much we need to know about how they got there to understand how they generalize.

While I agree with a lot of points of this post, I want to quibble with the RL not maximising reward point. I agree that model-free RL algorithms like DPO do not directly maximise reward but instead 'maximise reward' in the same way self-supervised models 'minimise crossentropy' -- that is to say, the model is not explicitly reasoning about minimising cross entropy but learns distilled heuristics that end up resulting in policies/predictions with a good reward/crossentropy. However, it is also possible to produce architectures that do directly optimise for reward (or crossentropy). AIXI is incomputable but it definitely does maximise reward. MCTS algorithms also directly maximise rewards. Alpha-Go style agents contain both direct reward maximising components initialized and guided by amortised heuristics (and the heuristics are distilled from the outputs of the maximising MCTS process in a self-improving loop). I wrote about the distinction between these two kinds of approaches -- direct vs amortised optimisation here. I think it is important to recognise this because I think that this is the way that AI systems will ultimately evolve and also where most of the danger lies vs simply scaling up pure generative models.
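To make the direct vs amortised distinction concrete, here is a toy sketch. Everything in it (the function names, the integer states and actions, the reward functions) is hypothetical and only illustrates the shape of the two approaches; it is not how MCTS or AlphaGo-style agents are implemented.

```python
from typing import Callable, Dict, List

Reward = Callable[[int, int], float]


def direct_policy(state: int, actions: List[int], reward: Reward) -> int:
    """Direct optimisation: search over actions at decision time using the reward itself."""
    return max(actions, key=lambda action: reward(state, action))


def distil(states: List[int], actions: List[int], reward: Reward) -> Dict[int, int]:
    """Amortised optimisation: store the search's answers so later decisions are a lookup."""
    return {state: direct_policy(state, actions, reward) for state in states}


def toy_reward(state: int, action: int) -> float:
    # Hypothetical reward: best action equals the state.
    return -abs(state - action)


def shifted_reward(state: int, action: int) -> float:
    # Hypothetical changed reward: best action is now state + 1.
    return -abs(state + 1 - action)


actions = list(range(6))
amortised_table = distil(list(range(5)), actions, toy_reward)

# The direct optimiser tracks whatever reward it is handed at decision time;
# the distilled table keeps the heuristics it learned under the old reward.
print(direct_policy(3, actions, shifted_reward), amortised_table[3])
```

The design point is only that the amortised policy never consults the reward when acting, whereas the direct one does on every decision.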

Agree with a bunch of these points. EG in Reward is not the optimization target I noted that AIXI really does maximize reward, theoretically. I wouldn't say that AIXI means that we have "produced" an architecture which directly optimizes for reward, because AIXI(-tl) is a bad way to spend compute. It doesn't actually effectively optimize reward in reality.

I'd consider a model-based RL agent to be "reward-driven" if it's effective and most of its "optimization" comes from the direct part and not the leaf-node evaluation (as in e.g. AlphaZero, which was still extremely good without the MCTS).

I think it is important to recognise this because I think that this is the way that AI systems will ultimately evolve and also where most of the danger lies vs simply scaling up pure generative models.

"Direct" optimization has not worked - at scale - in the past. Do you think that's going to change, and if so, why?

Solid post!

I basically agree with the core point here (i.e. scaling up networks, running pretraining + light RLHF, probably doesn't by itself produce a schemer), and I think this is the best write-up of it I've seen on LW to date. In particular, good job laying out what you are and are not saying. Thank you for doing the public service of writing it up.

scaling up networks, running pretraining + light RLHF, probably doesn't by itself produce a schemer

I agree with this point as stated, but think the probability is more like 5% than 0.1%. So probably no scheming, but this is hardly hugely reassuring. The word "probably" still leaves in a lot of risk; I also think statements like "probably misalignment won't cause x-risk" are true![1]

(To your original statement, I'd also add the additional caveat of this occurring "prior to humanity being totally obsoleted by these AIs". I basically just assume this caveat is added everywhere; otherwise we're talking about some insane limit.)

Also, are you making sure to condition on "scaling up networks via running pretraining + light RLHF produces transformatively powerful AIs which obsolete humanity"? If you don't condition on this, it might be an uninteresting claim.

Separately, I'm uncertain whether the current training procedure of current models like GPT-4 or Claude 3 is still well described as just "light RLHF". I think the training procedure probably involves doing quite a bit of RL with outcomes based feedback on things like coding. (Should this count as "light"? Probably the amount of training compute on this RL is still small?)

And I think misalignment x-risk is substantial and worthy of concern. ↩︎

I agree with this point as stated, but think the probability is more like 5% than 0.1%.

How do you define or think about "light" in "light RLHF" when you make a statement like this, and how do you know that you're thinking about it the same way that Alex is? Is it a term of art that I don't know about, or has it been defined in a previous post?

I think of "light RLHF" as "RLHF which doesn't teach the model qualitatively new things, but instead just steers the model at a high level". In practice, a single round of DPO on <100,000 examples surely counts, but I'm unsure about the exact limits.

(In principle, a small amount of RL can update a model very far, but I don't think we see this in practice.)

I'm not sure how "real" of a category "light RLHF" actually is.

I've used this sort of terminology informally in a variety of contexts though it certainly isn't a term of art at the moment. I'd guess that Alex is using the term similarly to how I'm thinking about it, but I'm not fully certain.

Note that even if RLHF takes a non-scheming pretrained model and converts it to a schemer, if this RLHF doesn't teach the model new things, then we can always train another copy of the pretrained model to be a monitor for the RLHF'd model and this monitor will be just as competent as the RLHF'd model. So scheming seems like substantially less of a problem in this case. (We'd need to use this monitor for all potentially dangerous actions to get safety properties.) (This is similar to the proposal in Appendix G of the weak-to-strong generalization paper, but with this addition that you deploy the reward model as a monitor which is required for any interesting guarantees.)

Note that even if RLHF takes a non-scheming pretrained model and converts it to a schemer, if this RLHF doesn't teach the model new things, then we can always train another copy of the pretrained model to be a monitor for the RLHF'd model and this monitor will be just as competent as the RLHF'd model.

I don't see why that would be the case, and I am doubtful this argument is relevant to any realistic context or even valid in the narrowest possible case of a base model vs its RLHFed model. Leaving aside that I'm not sure what you would 'train' the supervisor model on, it doesn't follow that there would be an exact overlap between the scheming behavior that is generated, and the detection of scheming behavior, simply because the two models are mostly the same. Lots of things are asymmetrical or irreversible or easier to generate than detect. P≠NP handwaving aside, for example, if you thought that there was an exact overlap because the model doesn't know 'new things', then it would be very strange to find that generative models can generate according to instructions that they struggle to understand from the samples. There are also plenty of places for other asymmetries. For example, asymmetry in security property: we don't want to settle for some 'fair' supervision rate like 50:50, we want much closer to 100:0. The schemer can exploit additional asymmetry in incremental schemes: just like with humans, many individually innocuous actions can sum up to a scheme, leading to an asymmetry in the views the schemer needs and the bulky difficult global view a supervisor may need to have any hope of detecting the scheme. (Note these 'actions' can be anything from writing a single token to a scratchpad to writing a useful but vulnerable line of code, and can be 'coordinated' by anything from internal latents to steganography to sheer evolution by rejection sampling of obvious 'bad' things by the supervisory while its false negatives slip by, which will find increasingly adversarial samples the more it is sampled.) And to be useful, you usually would want to run the schemer more, such as by using tree search, than the supervisory (because if the supervisory could do it just as well or better why aren't you using it instead?), and there's another big asymmetry: a model (eg. a Go or chess agent) can definitely beat itself arbitrarily often given a big enough tree search budget despite by stipulation knowing zero 'new things' because it's the same frozen model. (Or to put it more generally: 'new things' can be 'learned' in many more places than simply the frozen model weights - large contexts for ever greater meta-learning on the fly, retrieval databases - just ask Sydney, hyperparameters, OS VM state, steganographic text, users' heads, popular prompts shared on jailbreak websites... Security/safety is, as always, a property of the system as a whole, and not of any individual part, such as a particular model checkpoint.)
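The point about false negatives slipping by under repeated sampling can be illustrated with a one-line calculation. This is a sketch under a strong simplifying assumption that is mine, not the comment's: each attempt is missed by the supervisor independently with the same probability. The function name is hypothetical.

```python
def slip_probability(false_negative_rate: float, attempts: int) -> float:
    """Chance that at least one bad sample gets past the supervisor.

    Assumes (for illustration only) independent attempts with a fixed miss rate.
    """
    return 1.0 - (1.0 - false_negative_rate) ** attempts


# A supervisor that misses 1% of bad samples looks strong on a single attempt,
# but much weaker once the generator is sampled many times.
for attempts in (1, 100, 1000):
    print(attempts, slip_probability(0.01, attempts))
```

Real attempts are unlikely to be independent, so this only shows the direction of the asymmetry, not its size.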

Leaving aside that I'm not sure what you would 'train' the supervisor model on

I'm imagining you train it to imitate human judgements of "was this action egregiously bad" on a combination of real AI actions and synthetic actions. This could fail to generalize to actual bad actions from your actual AI even if humans would have labeled that action correctly, but why would it?

(If human labeling is insufficiently powerful (as seems plausible) then you could hope for generalization from examples humans can label to examples humans can't. It's not clear this generalization will go well, but by assumption we at least don't have to worry about generalization failing due to scheming. There are a bunch of other issues that come up with generalization.)

I'm imagining that you run this monitor on the exact same context as the policy (for 2x additional compute). And you pause the execution of an AI's actions and flag for further review if the monitor indicates problems.
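A minimal sketch of the pause-and-flag loop described here, with hypothetical names throughout. The `toy_monitor` below is a stand-in keyword check, not a trained model, and the threshold is an arbitrary illustrative value.

```python
from typing import Callable, List, Optional, Tuple


def run_with_monitor(
    proposed_actions: List[str],
    monitor_score: Callable[[List[str], str], float],
    threshold: float,
) -> Tuple[List[str], Optional[int]]:
    """Execute actions in order, pausing at the first one the monitor flags.

    The monitor is given the same context (actions executed so far) as the policy.
    Returns the executed actions and the index of the flagged action, if any.
    """
    executed: List[str] = []
    for index, action in enumerate(proposed_actions):
        if monitor_score(executed, action) >= threshold:
            return executed, index  # paused: hand off for further review
        executed.append(action)
    return executed, None


def toy_monitor(context: List[str], action: str) -> float:
    # Hypothetical scorer: treats anything touching "weights" as suspicious.
    return 1.0 if "weights" in action else 0.0


executed, flagged = run_with_monitor(
    ["ls", "cat notes.txt", "scp weights.bin remote:", "echo done"],
    toy_monitor,
    threshold=0.5,
)
print(executed, flagged)
```

The flagged action is never executed in this sketch; whether review then resumes or aborts the episode is left out.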

it doesn't follow that there would be an exact overlap between the scheming behavior that is generated

I didn't claim an exact overlap, I claimed they would be "just as competent". In the same way that two equally smart humans trained to code identically but with different personalities might be equally good at understanding some code, but might have different styles in some ways.

You can have concerns like "the RLHF'd model will use a steganographic encoding format which the monitor doesn't understand because the key for the encoding format is picked based on features of the RLHF model that differ from the monitor/base model". However, I would count "RLHF teaches the policy steganography" as an example where the policy is substantially more competent in some domain.

Also note that I'm not claiming that it makes the situation overall safe, just that it's "substantially less of a problem". (You still have issues with humans being unable to determine which actions aren't dangerous, but you can hope for non-scheming generalization.)

I'm not saying this solves all of your problems, but for some specific problems it does seem like a solid solution and it should help a moderate amount with some other problems even though the situation is less clear. For instance, sometimes people worry about the specific problem "the AI suddenly does a small number of bad actions which have really bad consequences (e.g. exfiltrating its weights) even though if a human were to carefully review these actions they would have noticed an issue" (concentrated failures which wouldn't be auditing failures using the decomposition here) and this monitoring approach seems likely to solve this issue.

For example, asymmetry in security property: we don't want to settle for some 'fair' supervision rate like 50:50, we want much closer to 100:0.

I'm not sure I understand what you're saying here, but I'm imagining running the AI monitor on everything the AI policy does and then reviewing the most suspicious subset (e.g. 0.1% of the actions).
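Selecting "the most suspicious subset" could look roughly like the sketch below. The function name, the default fraction, and the scores are hypothetical; the only thing taken from the text is the idea of scoring every action and auditing a fixed top fraction.

```python
import math
from typing import List


def select_for_review(scores: List[float], fraction: float = 0.001) -> List[int]:
    """Return indices of the top `fraction` most suspicious actions (at least one)."""
    budget = max(1, math.ceil(len(scores) * fraction))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:budget])


# Hypothetical monitor scores for four actions, auditing the top half.
print(select_for_review([0.1, 0.9, 0.3, 0.8], fraction=0.5))
```

A fixed audit budget like this trades off against a fixed threshold: the budget bounds human review cost, while a threshold bounds how suspicious an unreviewed action can be.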

The schemer can exploit additional asymmetry in incremental schemes: just like with humans, many individually innocuous actions can sum up to a scheme, leading to an asymmetry in the views the schemer needs and the bulky difficult global view a supervisor may need to have any hope of detecting the scheme.

The monitoring AI should see the exact same context as the schemer, so this at least shouldn't be a clear concern.

(There are some concerns sort of like this that seem plausible. In particular concerns related to AI specific non-interpretable inputs and outputs (aka neuralese), but I would describe this differently.)

And to be useful, you usually would want to run the schemer more, such as by using tree search, than the supervisory (because if the supervisory could do it just as well or better why aren't you using it instead?)

Nope, should just get run on all actions.

can definitely beat itself arbitrarily often given a big enough tree search budget despite by stipulation knowing zero 'new things' because it's the same frozen model

Yes, but each subaction within the tree search should be inspectable and both models should (by assumption) understand the situation for each action equally well.

For something to go wrong with tree search due to scheming, you need to have the internal model take a bad action at some point. At this point, you'd hope the monitor would be able to point this out. (Where this bad action could be either assigning a problematic rating or failing to explore something desirable.)

Another overall reaction I have to your comment:

Security/safety is, as always, a property of the system as a whole, and not of any individual part, such as a particular model checkpoint.

Yes of course, but the key threat model under discussion here is scheming, which centrally involves a specific black box individual part conspiring to cause problems. So operating on the level of that individual part is quite reasonable: if we can avoid this part intentionally causing problems, that would suffice for defusing the core scheming concern.

The surrounding situation might make it more or less easy to avoid the actual model weights intentionally causing problems, but analysis at the individual weight input/output level can (in principle) suffice.

I agree with this point as stated, but think the probability is more like 5% than 0.1%

Same.

I do think our chances look not-great overall, but most of my doom-probability is on things which don't look like LLMs scheming.

Also, are you making sure to condition on "scaling up networks, running pretraining + light RLHF produces transformatively powerful AIs which obsolete humanity"

That's not particularly cruxy for me either way.

Separately, I'm uncertain whether the current training procedure of current models like GPT-4 or Claude 3 is still well described as just "light RLHF".

Fair. Insofar as "scaling up networks, running pretraining + RL" does risk schemers, it does so more as we do more/stronger RL, qualitatively speaking.

I now think there is another important caveat in my views here. I was thinking about the question:

- Conditional on human obsoleting[1] AI being reached by "scaling up networks, running pretraining + light RLHF", how likely is it that we'll end up with scheming issues?

I think this is probably the most natural question to ask, but there is another nearby question:

- If you keep scaling up networks with pretraining and light RLHF, what comes first, misalignment due to scheming or human obsoleting AI?

I find this second question much more confusing because it's plausible it requires insanely large scale. (Even if we condition out the worlds where this never gets you human obsoleting AI.)

For the first question, I think the capabilities are likely (70%?) to come from imitating humans but it's much less clear for the second question.

Or at least AI safety researcher obsoleting which requires less robotics and other interaction with the physical world. ↩︎

This is a deeply confused post.

In this post, Turner sets out to debunk what he perceives as "fundamentally confused ideas" which are common in the AI alignment field. I strongly disagree with his claims.

In section 1, Turner quotes a passage from "Superintelligence", in which Bostrom talks about the problem of wireheading. Turner declares this to be "nonsense" since, according to Turner, RL systems don't seek to maximize a reward.

First, Bostrom (AFAICT) is describing a system which (i) learns online (ii) maximizes long-term consequences. There are good reasons to focus on such a system: these are properties that are desirable in an AI defense system, if the system is aligned. Now, the LLM+RLHF paradigm which Turner puts in the center is, at least superficially, not like that. However, this is no argument against Bostrom: today's systems already went beyond LLM+RLHF (introducing RL over chain-of-thought) and tomorrow's systems are likely to be even more different. And, if a given AI design does not somehow acquire properties i+ii even indirectly (e.g. via in-context learning), then it's not clear how it would be useful for creating a defense system.

Second, Turner might argue that even granted i+ii, the AI would still not maximize reward because the properties of deep learning would cause it to converge to some different, reward-suboptimal, model. While this is often true, it is hardly an argument why not to worry.

While deep learning is not known to guarantee convergence to the reward-optimal policy (we don't know how to prove almost any guarantees about deep learning), RL algorithms are certainly designed with reward maximization in mind. If your AI is unaligned even under best-case assumptions about learning convergence, it seems very unlikely that deviating from these assumptions would somehow cause it to be aligned (while remaining highly capable). To argue otherwise is akin to hoping for the rocket to reach the moon because our equations of orbital mechanics don't account for some errors, rather than despite it.

After this argument, Turner adds that "as a point of further fact, RL approaches constitute humanity's current best tools for aligning AI systems today". This observation seems completely irrelevant. It was indeed expected that RL would be useful in the subhuman regime, when the system cannot fail catastrophically simply because it lacks the capabilities. (Even when it convinces some vulnerable person to commit suicide, OpenAI's legal department can handle it.) I would expect this to have been obvious to Bostrom even back then, and it doesn't invalidate his conclusions in the slightest.

In section 3, Turner proceeds to attack the so-called "counting argument" for misalignment. The counting argument goes: since there are many more misaligned minds/goals than aligned minds/goals, even conditional on "good" behavior in training, it seems unlikely that current methods will produce an aligned mind. Turner (quoting Belrose and Pope) counters this argument by way of analogy. Deep learning successfully generalizes even though most models that perform well on the training data don't perform well on the test data. Hence, (they argue) the counting argument must be fallacious.

The major error that Turner, Belrose and Pope are making is that of confusing aleatoric and epistemic uncertainty. There is also a minor error of being careless about what measure the counting is performed over.

If we did not know anything about some algorithm except that it performs well on the training data, we would indeed have at most a weak expectation of it performing well on the test data. However, deep learning is far from random in this regard: it was selected by decades of research to be that sort of algorithm that does generalize well. Hence, the counting argument in this case gives us a perfectly reasonable prior.

(The minor point is that, w.r.t. a simplicity prior, even a random algorithm has some bounded-from-below probability of generalizing well.)

The counting argument is not premised on deep understanding of how deep learning works (which at present doesn't exist), but on a reasonable prior about what we should expect from our vantage point of ignorance. It describes our epistemic uncertainty, not the aleatoric uncertainty of deep learning. We can imagine that, if we knew how deep learning really works in the context of typical LLM training data etc, we would be able to confidently conclude that, say, RLHF has a high probability to eventually produce agents that primarily want to build astronomical superstructures in the shape of English letters, or whatnot. (It is ofc also possible we would conclude that LLM+RLHF will never produce anything powerful enough to be dangerous or useful-as-defense-system.) That would not be inconsistent with the counting argument as applied from our current state of knowledge.

The real question is then, conditional on our knowledge that deep learning often generalizes well, how confident are we that it will generalize aligned behavior from training to deployment, when scaled up to highly capable systems. Unfortunately, I don't think this update is strong enough to make us remotely safe. The fact deep learning generalizes implies that it implements some form of Occam's razor, but Occam's razor doesn't strongly select for alignment, as far as we can tell. Our current (more or less) best model of Occam's razor is Solomonoff induction, which Turner dismisses as irrelevant to neural networks: but here again, the fact that our understanding is flawed just pushes us back towards the counting-argument-prior, not towards safety.

Also, we should keep in mind that deep learning doesn't always generalize well empirically, it's just that when it fails we add more data until it starts generalizing. But, if the failure is "kill all humans", there is nobody left to add more data.

Turner's conclusion is "it becomes far easier to just use the AIs as tools which do things we ask". The extent to which I agree with this depends on the interpretation of the vague term "tools". Certainly modern AI is a tool that does approximately what we ask (even though when using AI for math, I'm already often annoyed at its attempts to cheat and hide the flaws of its arguments). However, I don't think we know how to safely create "tools" that are powerful enough to e.g. nearly-autonomously do alignment research or otherwise make substantial steps toward building an AI defense system.

Second, Turner might argue that even granted i+ii, the AI would still not maximize reward because the properties of deep learning would cause it to converge to some different, reward-suboptimal, model. While this is often true, it is hardly an argument why not to worry.

While deep learning is not known to guarantee convergence to the reward-optimal policy (we don't know how to prove almost any guarantees about deep learning), RL algorithms are certainly designed with reward maximization in mind. If your AI is unaligned even under best-case assumptions about learning convergence, it seems very unlikely that deviating from these assumptions would somehow cause it to be aligned (while remaining highly capable). To argue otherwise is akin to hoping for the rocket to reach the moon because our equations of orbital mechanics don't account for some errors, rather than despite it.

I partly agree, but think you take this point too far. I would say:

- It is possible in principle for us to be in a situation where the reward-optimal policy is bad (by human lights), but a specific learning algorithm winds up with a reward-suboptimal policy which is better (by human lights). In other words, it’s possible in principle for a learning algorithm to have an inner misalignment (a.k.a. goal misgeneralization) that cancels out an equal-and-opposite outer misalignment (a.k.a. specification gaming).

- We obviously should not breezily assume without argument that this kind of miraculous cancellation will happen.

- …But if someone wants to make a specific argument that this miraculous cancellation will happen in a particular case, then OK, cool, we should listen to that argument with an open mind.

I do in fact think there’s at least one case where there’s a reasonable prima facie argument for a miraculous cancellation of this type, and it’s one that TurnTrout has often brought up. Namely, the case of wireheading (and similar).

Suppose there’s a sequence of actions A, which is astronomically unlikely to occur by chance, and that leads to a maximally high reward, in a way that humans don’t like. E.g., A might involve the AI hacking into its own RAM space. It might be the case that normal explore-exploit RL techniques will never randomly come upon A. And it might further be the case that, even if the RL agent winds up foresighted and self-aware and aware of A, it’s motivated to avoid taking action sequence A, for the same reason that I’m not motivated to try addictive drugs (i.e., instrumental convergence goal-guarding). So it never does A, not even once. And thus TD learning (or whatever) never makes the RL agent “want” A. This would be outer misalignment (because A is high reward even though humans don’t like it) that gets cancelled out by inner misalignment (because the agent avoids A despite it being high-reward).
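To put a rough number on "astronomically unlikely to occur by chance", here is a back-of-the-envelope sketch. It assumes, purely for illustration, that exploration picks uniformly among `n_actions` with probability `epsilon` at each step and that the non-exploratory choice never happens to match A; the function name and all numbers are hypothetical.

```python
def chance_of_sequence(n_actions: int, length: int, epsilon: float = 1.0) -> float:
    """Probability that one episode's exploration produces one specific action sequence.

    Assumes independent, uniform exploration at every step (an illustrative assumption).
    """
    return (epsilon / n_actions) ** length


# Hypothetical setting: 10 available actions, A is 20 steps long,
# and the agent explores 10% of the time.
per_episode = chance_of_sequence(n_actions=10, length=20, epsilon=0.1)
expected_episodes = 1.0 / per_episode
print(per_episode, expected_episodes)
```

This only covers undirected exploration; it says nothing about an agent that models A and deliberately seeks or avoids it, which is the other half of the scenario.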

That’s not a bulletproof scenario; there are lots of ways it can go wrong. But I think it’s an existence proof that “miraculous cancellation” proposals should at least be seriously considered rather than dismissed out of hand.

What do you mean "randomly come upon A"? RL is not random. Why wouldn't it find A?

Let the proxy reward function we use to train the AI be and the "true" reward function that we intend the AI to follow be . Supposedly, these function agree on some domain but catastrophically go apart outside of it. Then, if all the training data lies inside , which reward function is selected depends on the algorithm's inductive bias (and possibly also on luck). The "cancellation" hope is then that inductive bias favors over .

But why would that be the case? Realistically, the inductive bias is something like "simplicity". And human preferences are very complex. On the other hand, something like "the reward is such-and-such bits in the input" is very simple. So instead of cancelling out, the problem is only aggravated.

And that's under the assumption that the proxy and the true reward function actually agree on the training domain, which is in itself wildly optimistic.

I really don’t want to get into gory details here. I strongly agree that things can go wrong. We would need to be discussing questions like: What exactly is the RL algorithm, and what’s the nature of the exploring and exploiting that it does? What’s the inductive bias? What’s the environment? All of these are great questions! I often bring them up myself. (E.g. §7.2 here.)

I’m really trying to make a weak point here, which is that we should at least listen to arguments in this genre rather than dismissing them out of hand. After all, many humans, given the option, would not want to enter a state of perpetual bliss while their friends and family get tortured. Likewise, as I mentioned, I have never done cocaine, and don’t plan to, and would go out of my way to avoid it, even though it’s a very pleasurable experience. I think I can explain these two facts (and others like them) in terms of RL algorithms (albeit probably a different type of RL algorithm than you normally have in mind). But even if we couldn’t explain it, whatever, we can still observe that it’s a thing that really happens. Right?

And I’m not mainly thinking of complete plans but rather one ingredient in a plan. For example, I’m much more open-minded to a story that includes “the specific failure mode of wireheading doesn’t happen thanks to miraculous cancellation of inner and outer misalignment” than to a story that sounds like “the alignment problem is solved completely thanks to miraculous cancellation of inner and outer misalignment”.

The problem is, even if the argument that wireheading doesn't happen is valid, it is not a cancellation. It just means that the wireheading failure mode is replaced by some equally bad or worse failure mode. This argument is basically saying "your model that predicted this extremely specific bad outcome is inaccurate, therefore this bad outcome probably won't happen, good news!" But it's not meaningful good news, because it does literally nothing to select for the extremely specific good outcome that we want.

If a rocket was launched towards the Moon with a faulty navigation system, that does not mean the rocket is likely to land on Mars instead. Observing that the navigation system is faulty is not even an "ingredient in a plan" to get the rocket to Mars.

I also don't think that the drug analogy is especially strong evidence about anything. If you assumed that the human brain is a simple RL algorithm trained on something like pleasure vs. pain then not doing drugs would indeed be an example of not-wireheading. But why should we assume that? I think that the human brain is likely to be at least something like a metacognitive agent in which case you can come to model drugs as a "trap" (and there can be many additional complications).

The problem is…

I don’t think this part of the conversation is going anywhere useful. I don’t personally claim to have any plan for AGI alignment right now. If I ever do, and if “miraculous [partial] cancellation” plays some role in that plan, I guess we can talk then. :)

I also don't think that the drug analogy is especially strong evidence…

I guess you’re saying that humans are “metacognitive agents” not “simple RL algorithms”, and therefore the drug thing provides little evidence about future AI. But that step assumes that the future AI will be a “simple RL algorithm”, right? It would provide some evidence if the future AI were similarly a “metacognitive agent”, right? Isn’t a “metacognitive agent” a kind of RL algorithm? (That’s not rhetorical, I don’t know.)

A metacognitive agent is not really an RL algorithm in the usual sense. To first approximation, you can think of it as metalearning a policy that approximates (infra-)Bayes-optimality on a simplicity prior. The actual thing is more sophisticated and less RL-ish, but this is enough to understand how it can avoid wireheading.

Many alignment failure modes (wireheading, self-modification, inner misalignment...) can be framed as "traps", since they hinge on the non-asymptotic properties of generalization, i.e. on the "prior" or "inductive bias". Therefore frameworks that explicitly impose a prior (such as metacognitive agents) are useful for understanding and avoiding these failure modes. (But, this has little to do with the OP, the way I see it.)

I get that a lot of AI safety rhetoric is nonsensical, but I think your strategy of obscuring technical distinctions between different algorithms and implicitly assuming that all future AI architectures will be something like GPT+DPO is counterproductive.

After making a false claim, Bostrom goes on to dismiss RL approaches to creating useful, intelligent, aligned systems. But, as a point of further fact, RL approaches constitute humanity's current best tools for aligning AI systems today! Those approaches are pretty awesome. No RLHF, then no GPT-4 (as we know it).

RLHF as understood currently (with humans directly rating neural network outputs, a la DPO) is very different from RL as understood historically (with the network interacting autonomously in the world and receiving reward from a function of the world). It's not an error from Bostrom's side to say something that doesn't apply to the former when talking about the latter, though it seems like a common error to generalize from the latter to the former.

I think it's best to think of DPO as a low-bandwidth NN-assisted supervised learning algorithm, rather than as "true reinforcement learning" (in the classical sense). That is, under supervised learning, humans provide lots of bits by directly creating a training sample, whereas with DPO, humans provide ~1 bit by picking the network-generated sample they like the most. It's unclear to me whether DPO has any advantage over just directly letting people edit the outputs, other than that if you did that, you'd empower trolls/partisans/etc. to intentionally break the network.
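To make the "~1 bit per comparison" framing concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. The log-probability values and the `beta` setting are hypothetical, chosen only for illustration. The only human input is which of the two sampled completions counts as "chosen"; everything else comes from the policy and a frozen reference model.

```python
import math

def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """DPO loss for one preference pair, given sequence log-probabilities."""
    # How far the policy has moved from the reference on each completion.
    chosen_margin = policy_logp_chosen - ref_logp_chosen
    rejected_margin = policy_logp_rejected - ref_logp_rejected
    logit = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logit)), computed in a numerically stable way.
    if logit >= 0:
        return math.log1p(math.exp(-logit))
    return -logit + math.log1p(math.exp(logit))

# Hypothetical log-probabilities for one prompt with two sampled completions.
# Policy identical to the reference: the loss is log(2).
loss_at_reference = dpo_loss(-12.0, -11.0, -12.0, -11.0)
# Policy has shifted probability toward the human-preferred completion.
loss_after_shift = dpo_loss(-10.0, -13.0, -12.0, -11.0)
```

Swapping which completion is labelled "chosen" flips the sign of `logit`, which is the single bit the human contributes per comparison.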

Did RL researchers in the 1990’s sit down and carefully analyze the inductive biases of PPO on huge 2026-era LLMs, conclude that PPO probably entrains LLMs which make decisions on the basis of their own reinforcement signal, and then decide to say “RL trains agents to maximize reward”? Of course not.

I was under the impression that PPO was a recently invented algorithm? Wikipedia says it was first published in 2017, which if true would mean that all pre-2017 talk about reinforcement learning was about other algorithms than PPO.

RLHF as understood currently (with humans directly rating neural network outputs, a la DPO) is very different from RL as understood historically (with the network interacting autonomously in the world and receiving reward from a function of the world).

This is actually pointing to the difference between online and offline learning algorithms, not RL versus non-RL learning algorithms. Online learning has long been known to be less stable than offline learning. That's what's primarily responsible for most "reward hacking"-esque results, such as the CoastRunners degenerate policy. In contrast, offline RL is surprisingly stable and robust to reward misspecification. I think it would have been better if the alignment community had been focused on the stability issues of online learning, rather than the supposed "agentness" of RL.

I was under the impression that PPO was a recently invented algorithm? Wikipedia says it was first published in 2017, which if true would mean that all pre-2017 talk about reinforcement learning was about other algorithms than PPO.

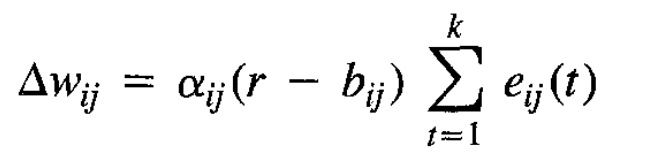

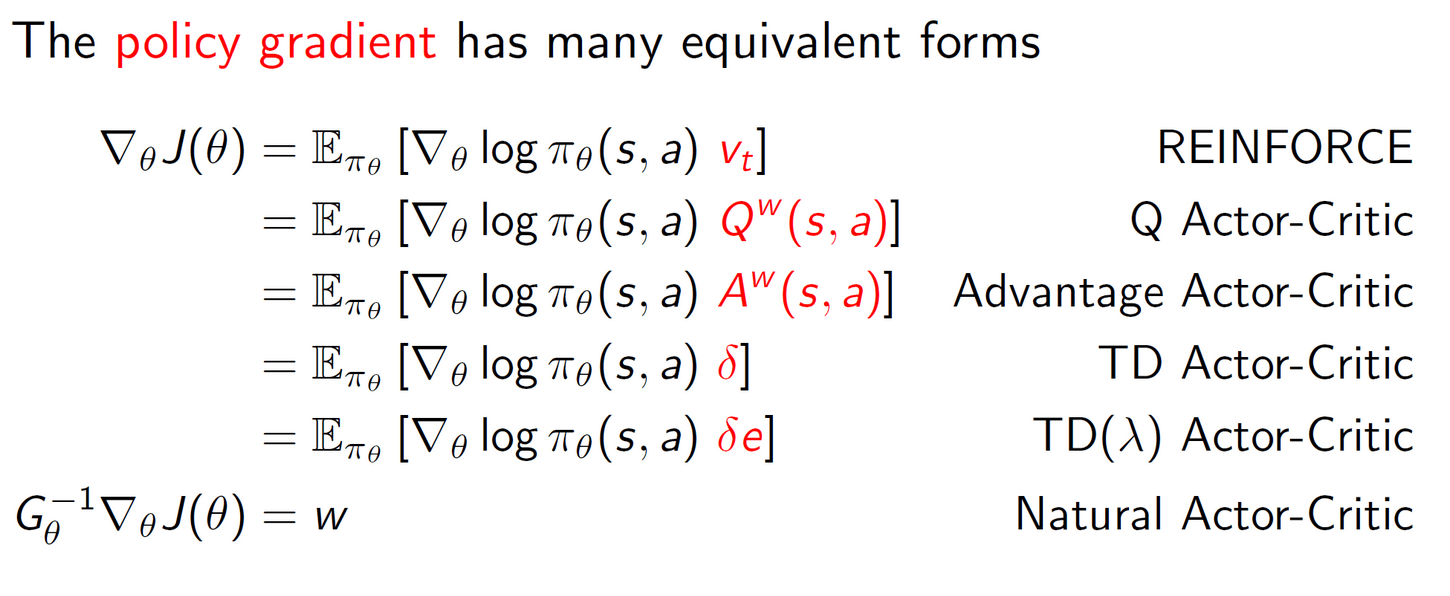

PPO may have been invented in 2017, but there are many prior RL algorithms for which Alex's description of "reward as learning rate multiplier" is true. In fact, PPO is essentially a tweaked version of REINFORCE, for which a bit of searching brings up Simple statistical gradient-following algorithms for connectionist reinforcement learning as the earliest available reference I can find. It was published in 1992, a full 22 years before Bostrom's book. In fact, "reward as learning rate multiplier" is even more clearly true of most of the update algorithms described in that paper. E.g., equation 11:
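The equation image is not reproduced here. As a reconstruction, assuming the notation of Williams's paper, the general REINFORCE weight update has the form:

$$\Delta w_{ij} = \alpha_{ij}\,(r - b_{ij})\,e_{ij}, \qquad e_{ij} = \frac{\partial \ln g_i}{\partial w_{ij}}$$

where $\alpha_{ij}$ is a learning rate factor, $r$ is the received reinforcement, $b_{ij}$ is the reinforcement baseline, and $e_{ij}$ is the "characteristic eligibility" of weight $w_{ij}$, with $g_i$ the probability mass function of unit $i$'s output.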

Here, the reward (adjusted by a "reinforcement baseline") literally just multiplies the learning rate. Beyond PPO and REINFORCE, this "x as learning rate multiplier" pattern is actually extremely common in different RL formulations. From lecture 7 of David Silver's RL course:

To be honest, it was a major blackpill for me to see the rationalist community, whose whole founding premise was that they were supposed to be good at making efficient use of the available evidence, so completely missing this very straightforward interpretation of RL (at least, I'd never heard of it from alignment literature until I myself came up with it when I realized that the mechanistic function of per-trajectory rewards in a given batched update was to provide the weights of a linear combination of the trajectory gradients. Update: Gwern's description here is actually somewhat similar).
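The "weights of a linear combination of the trajectory gradients" reading can be sketched in a few lines. This is a toy illustration and not anyone's production setup: the policy is a softmax over three actions, each "trajectory" is a single action, and all names and numbers are hypothetical.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def grad_log_prob(logits, action):
    """Gradient of log pi(action) with respect to the softmax logits."""
    probs = softmax(logits)
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]

def reinforce_update(logits, actions, rewards, lr, baseline=0.0):
    """Batched REINFORCE step: a reward-weighted sum of per-trajectory gradients."""
    n = len(actions)
    update = [0.0] * len(logits)
    for a, r in zip(actions, rewards):
        g = grad_log_prob(logits, a)
        # The (baselined) reward only ever appears as a scalar multiplying lr.
        weight = lr * (r - baseline) / n
        for i in range(len(logits)):
            update[i] += weight * g[i]
    return update

logits = [0.0, 0.0, 0.0]
actions = [0, 1, 2, 1]            # hypothetical sampled actions
rewards = [1.0, 0.0, -1.0, 2.0]   # hypothetical per-trajectory rewards

# Doubling every reward gives the same step as doubling the learning rate.
doubled_reward = reinforce_update(logits, actions, [2 * r for r in rewards], lr=0.1)
doubled_lr = reinforce_update(logits, actions, rewards, lr=0.2)
```

In this sketch a trajectory with zero (baselined) reward contributes nothing to the update, and a negative reward pushes the parameters away from that trajectory's actions.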

implicitly assuming that all future AI architectures will be something like GPT+DPO is counterproductive.

When I bring up the "actual RL algorithms don't seem very dangerous or agenty to me" point, people often respond with "Future algorithms will be different and more dangerous".

I think this is a bad response for many reasons. In general, it serves as an unlimited excuse to never update on currently available evidence. It also has a bad track record in ML, as the core algorithmic structure of RL algorithms capable of delivering SOTA results has not changed that much in over 3 decades. In fact, just recently Cohere published Back to Basics: Revisiting REINFORCE Style Optimization for Learning from Human Feedback in LLMs, which found that the classic REINFORCE algorithm actually outperforms PPO for LLM RLHF finetuning. Finally, this counterpoint seems irrelevant for Alex's point in this post, which is about historical alignment arguments about historical RL algorithms. He even included disclaimers at the top about this not being an argument for optimism about future AI systems.

I wasn't around in the community in 2010-2015, so I don't know what the state of RL knowledge was at that time. However, I dispute the claim that rationalists "completely miss[ed] this [..] interpretation":

To be honest, it was a major blackpill for me to see the rationalist community, whose whole founding premise was that they were supposed to be good at making efficient use of the available evidence, so completely missing this very straightforward interpretation of RL [..] the mechanistic function of per-trajectory rewards in a given batched update was to provide the weights of a linear combination of the trajectory gradients.

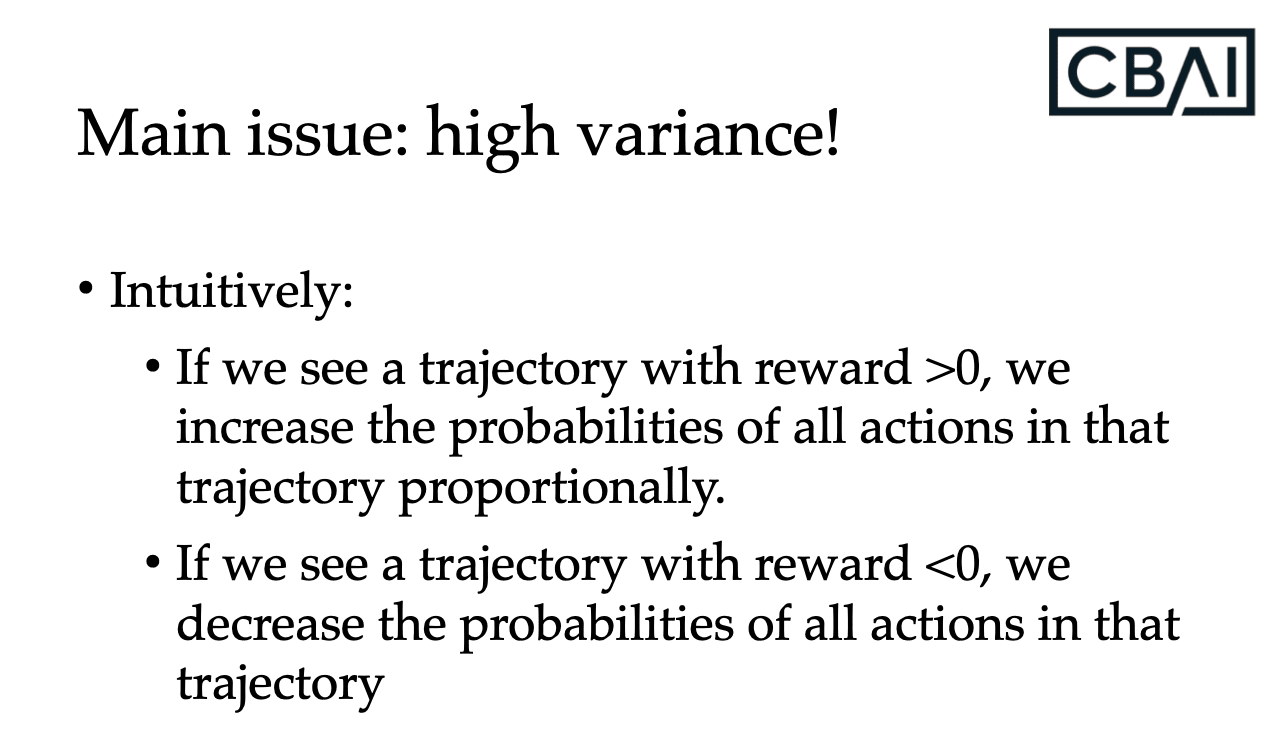

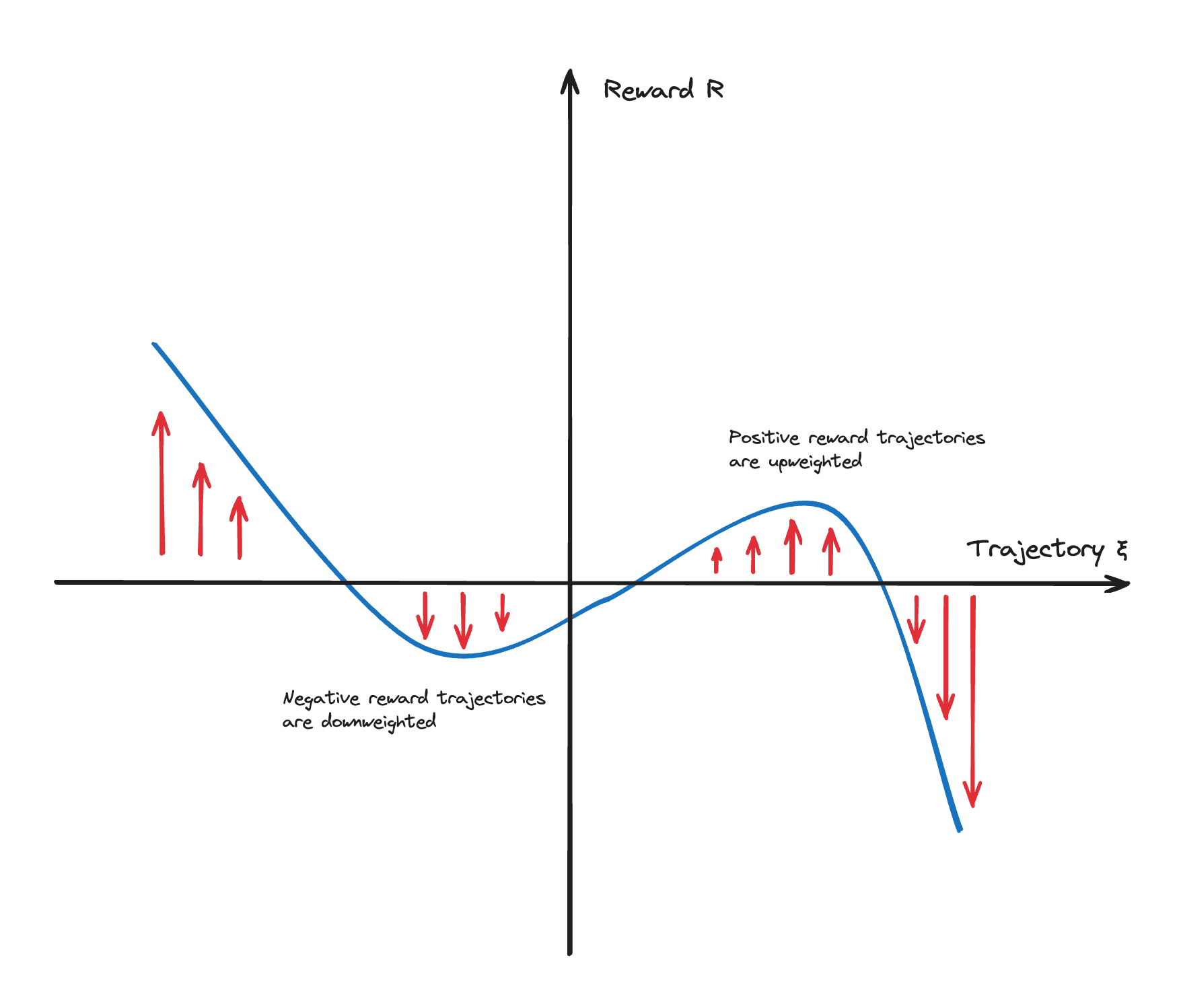

Ever since I entered the community, I've definitely heard of people talking about policy gradient as "upweighting trajectories with positive reward/downweighting trajectories with negative reward" since 2016, albeit in person. I remember being shown a picture sometime in 2016/17 that looks something like this when someone (maybe Paul?) was explaining REINFORCE to me: (I couldn't find it, so reconstructing it from memory)

In addition, I would be surprised if any of the CHAI PhD students when I was at CHAI from 2017->2021, many of whom have taken deep RL classes at Berkeley, missed this "upweight trajectories in proportion to their reward" interpretation. Most of us at the time had also implemented various RL algorithms from scratch, and there the "weighting trajectory gradients" perspective pops out immediately.

As another data point, when I taught MLAB/WMLB in 2022/3, my slides also contained this interpretation of REINFORCE (after deriving it) in so many words:

Insofar as people are making mistakes about reward and RL, it's not due to having never been exposed to this perspective.

That being said, I do agree that there's been substantial confusion in this community, mainly of two kinds:

- Confusing the objective function being optimized to train a policy with how the policy is mechanistically implemented: Just because the outer loop is modifying/selecting for a policy to score highly on some objective function, does not necessarily mean that the resulting policy will end up selecting actions based on said objective.

- Confusing "this policy is optimized for X" with "this policy is optimal for X": this is the actual mistake I think Bostrom is making in Alex's example -- it's true that an agent that wireheads achieves higher reward than on the training distribution (and the optimal agent for the reward achieves reward at least as good as wireheading). And I think that Alex and you would also agree with me that it's sometimes valuable to reason about the global optima in policy space. But it's a mistake to identify the outputs of optimization with the optimal solution to an optimization problem, and many people were making this jump without noticing it.

Again, I contend these confusions were not due to a lack of exposure to the "rewards as weighting trajectories" perspective. Instead, here are the reasons I remember hearing back in 2017-2018 for why we should jump from "RL is optimizing agents for X" to "RL outputs agents that both optimize X and are optimal for X":

- We'd be really confused if we couldn't reason about "optimal" agents, so we should solve that first. This is the main justification I heard from the MIRI people about why they studied idealized agents. Oftentimes globally optimal solutions are easier to reason about than local optima or saddle points, or are useful for operationalizing concepts. Because a lot of the community was focused on philosophical deconfusion (often w/ minimal knowledge of ML or RL), many people naturally came to jump the gap between "the thing we're studying" and "the thing we care about".

- Reasoning about optima gives a better picture of powerful, future AGIs. Insofar as we're far from transformative AI, you might expect that current AIs are a poor model for how transformative AI will look. In particular, you might expect that modeling transformative AI as optimal leads to clearer reasoning than analogizing them to current systems. This point has become increasingly tenuous since GPT-2, but

- Some off-policy RL algorithms are well described as having a "reward" maximizing component: And, these were the approaches that people were using and thinking about at the time. For example, the most hyped results in deep learning in the mid 2010s were probably DQN and AlphaGo/GoZero/Zero. And many people believed that future AIs would be implemented via model-based RL. All of these approaches result in policies that contain an internal component which is searching for actions that maximize some learned objective. Given that ~everyone uses policy gradient variants for RL on SOTA LLMs, this does turn out to be incorrect ex post. But if the most impressive AIs seem to be implemented in ways that correspond to internal reward maximization, it does seem very understandable to think about AGIs as explicit reward optimizers.

- This is how many RL pioneers reasoned about their algorithms. I agree with Alex that this is probably from the control theory roots, where a PID controller is well modeled as picking trajectories that minimize cost, in a way that early simple RL policies are not well modeled as internally picking trajectories that maximize reward.

Also, sometimes it is just the words being similar; it can be hard to keep track of the differences between "optimizing for", "optimized for", and "optimal for" in normal conversation.

I think if you want to prevent the community from repeating these confusions, this looks less like "here's an alternative perspective through which you can view policy gradient" and more like "here's why reasoning about AGI as 'optimal' agents is misleading" and "here's why reasoning about your 1 hidden layer neural network policy as if it were optimizing the reward is bad".

An aside:

In general, I think that many ML-knowledgeable people (arguably myself included) correctly notice that the community is making many mistakes in reasoning, that they resolve internally using ML terminology or frames from the ML literature. But without reasoning carefully about the problem, the terminology or frames themselves are insufficient to resolve the confusion. (Notice how many Deep RL people make the same mistake!) And, as Alex and you have argued before, the standard ML frames and terminology introduce their own confusions (e.g. 'attention').

A shallow understanding of "policy gradient is just upweighting trajectories" may in fact lead to making the opposite mistake: assuming that it can never lead to intelligent, optimizer-y behavior. (Again, notice how many ML academics made exactly this mistake.) Or, more broadly, thinking about ML algorithms purely from the low-level, mechanistic frame can lead to confusions along the lines of "next token prediction can only lead to statistical parrots without true intelligence". Doubly so if you've only worked with policy gradient or language modeling with tiny models.

Ever since I entered the community, I've definitely heard of people talking about policy gradient as "upweighting trajectories with positive reward/downweighting trajectories with negative reward" since 2016, albeit in person. I remember being shown a picture sometime in 2016/17 that looks something like this when someone (maybe Paul?) was explaining REINFORCE to me: (I couldn't find it, so reconstructing it from memory)

Knowing how to reason about "upweighting trajectories" when explicitly prompted or in narrow contexts of algorithmic implementation is not sufficient to conclude "people basically knew this perspective" (but it's certainly evidence). See Outside the Laboratory:

Now suppose we discover that a Ph.D. economist buys a lottery ticket every week. We have to ask ourselves: Does this person really understand expected utility, on a gut level? Or have they just been trained to perform certain algebra tricks?

Knowing "vanilla PG upweights trajectories", and being able to explain the math --- this is not enough to save someone from the rampant reward confusions. Certainly Yoshua Bengio could explain vanilla PG, and yet he goes on about how RL (almost certainly, IIRC) trains reward maximizers.

I contend these confusions were not due to a lack of exposure to the "rewards as weighting trajectories" perspective.

I personally disagree --- although I think your list of alternative explanations is reasonable. If alignment theorists had been using this (simple and obvious-in-retrospect) "reward chisels circuits into the network" perspective, if they had really been using it and felt it deep within their bones, I think they would not have been particularly tempted by this family of mistakes.

In fact, PPO is essentially a tweaked version of REINFORCE,

Valid point.

Beyond PPO and REINFORCE, this "x as learning rate multiplier" pattern is actually extremely common in different RL formulations. From lecture 7 of David Silver's RL course:

Critically though, neither Q, A, nor delta denotes reward. Rather, they are quantities which are supposed to estimate the effect of an action on the sum of future rewards; hence while pure REINFORCE doesn't really maximize the sum of rewards, these other algorithms are attempts to more consistently do so, and the existence of such attempts shows that it's likely we will see more and better attempts in the future.
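For concreteness, the textbook definitions behind those three symbols are given below. These use standard RL notation and are not copied from the slide itself, with $\gamma$ the discount factor and $V$ a state-value estimate:

$$Q^{\pi}(s,a) = \mathbb{E}_{\pi}\!\left[\sum_{k \ge 0} \gamma^{k}\, r_{t+k+1} \,\middle|\, s_t = s,\ a_t = a\right]$$

$$A^{\pi}(s,a) = Q^{\pi}(s,a) - V^{\pi}(s)$$

$$\delta_t = r_{t+1} + \gamma\, V(s_{t+1}) - V(s_t)$$

Each is an estimate of (discounted) future return, or of an action's advantage in future return, rather than the immediate reward $r_{t+1}$ alone.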

It was published in 1992, a full 22 years before Bostrom's book.

Bostrom's book explicitly states what kinds of reinforcement learning algorithms he had in mind, and they are not REINFORCE:

Often, the learning algorithm involves the gradual construction of some kind of evaluation function, which assigns values to states, state–action pairs, or policies. (For instance, a program can learn to play backgammon by using reinforcement learning to incrementally improve its evaluation of possible board positions.) The evaluation function, which is continuously updated in light of experience, could be regarded as incorporating a form of learning about value. However, what is being learned is not new final values but increasingly accurate estimates of the instrumental values of reaching particular states (or of taking particular actions in particular states, or of following particular policies). Insofar as a reinforcement-learning agent can be described as having a final goal, that goal remains constant: to maximize future reward. And reward consists of specially designated percepts received from the environment. Therefore, the wireheading syndrome remains a likely outcome in any reinforcement agent that develops a world model sophisticated enough to suggest this alternative way of maximizing reward.

Similarly, before I even got involved with alignment or rationalism, the canonical reinforcement learning algorithm I had heard of was TD, not REINFORCE.

It also has a bad track record in ML, as the core algorithmic structure of RL algorithms capable of delivering SOTA results has not changed that much in over 3 decades.

Huh? DreamerV3 is clearly a step in the direction of utility maximization (away from "reward is not the optimization target"), and it claims to set SOTA on a bunch of problems. Are you saying there's something wrong with their evaluation?

In fact, just recently Cohere published Back to Basics: Revisiting REINFORCE Style Optimization for Learning from Human Feedback in LLMs, which found that the classic REINFORCE algorithm actually outperforms PPO for LLM RLHF finetuning.

LLM RLHF finetuning doesn't build new capabilities, so it should be ignored for this discussion.

Finally, this counterpoint seems irrelevant for Alex's point in this post, which is about historical alignment arguments about historical RL algorithms. He even included disclaimers at the top about this not being an argument for optimism about future AI systems.

It's not irrelevant. The fact that Alex Turner explicitly replies to Nick Bostrom and calls his statement nonsense means that Alex Turner does not get to use a disclaimer to decide what the subject of discussion is. Rather, the subject of discussion is whatever Bostrom was talking about. The disclaimer rather serves as a way of turning our attention away from stuff like DreamerV3 and towards stuff like DPO. However DreamerV3 seems like a closer match for Bostrom's discussion than DPO is, so the only way turning our attention away from it can be valid is if we assume DreamerV3 is a dead end and DPO is the only future.

This is actually pointing to the difference between online and offline learning algorithms, not RL versus non-RL learning algorithms.

I was kind of pointing to both at once.

In contrast, offline RL is surprisingly stable and robust to reward misspecification.

Seems to me that the linked paper makes the argument "If you don't include attempts to try new stuff in your training data, you won't know what happens if you do new stuff, which means you won't see new stuff as a good opportunity". Which seems true but also not very interesting, because we want to build capabilities to do new stuff, so this should instead make us update to assume that the offline RL setup used in this paper won't be what builds capabilities in the limit. (Not to say that they couldn't still use this sort of setup as some other component than what builds the capabilities, or that they couldn't come up with an offline RL method that does want to try new stuff - merely that this particular argument for safety bears too heavy of an alignment tax to carry us on its own.)

"If you don't include attempts to try new stuff in your training data, you won't know what happens if you do new stuff, which means you won't see new stuff as a good opportunity". Which seems true but also not very interesting, because we want to build capabilities to do new stuff, so this should instead make us update to assume that the offline RL setup used in this paper won't be what builds capabilities in the limit.

I'm sympathetic to this argument (and think the paper overall isn't super object-level important), but also note that they train e.g. Hopper policies to hop continuously, even though lots of the demonstrations fall over. That's something new.

I was under the impression that PPO was a recently invented algorithm

Well, if we're going to get historical, PPO is a relatively small variation on Williams's REINFORCE policy gradient model-free RL algorithm from 1992 (or earlier if you count conferences etc), with a bunch of minor DL implementation tweaks that turn out to help a lot. I don't offhand know of any ways in which PPO's tweaks make it meaningfully different from REINFORCE from the perspective of safety, aside from the obvious ones of working better in practice. (Which is the main reason why PPO became OA's workhorse in its model-free RL era to train small CNNs/RNNs, before they moved to model-based RL using Transformer LLMs. Policy gradient methods based on REINFORCE certainly were not novel, but they started scaling earlier.)

So, PPO is recent, yes, but that isn't really important to anything here. TurnTrout could just as well have used REINFORCE as the example instead.

Did RL researchers in the 1990’s sit down and carefully analyze the inductive biases of PPO on huge 2026-era LLMs, conclude that PPO probably entrains LLMs which make decisions on the basis of their own reinforcement signal, and then decide to say “RL trains agents to maximize reward”? Of course not.

I don't know how you (TurnTrout) can say that. It certainly seems to me that plenty of researchers in 1992 were talking about either model-based RL or using model-free approaches to ground model-based RL - indeed, it's hard to see how anything else could work in connectionism, given that model-free methods are simpler, many animals or organisms do things that can be interpreted as model-free but not model-based (while all creatures who do model-based RL, like humans, clearly also do model-free), and so on. The model-based RL was the 'cherry on the cake', if I may put it that way... These arguments were admittedly handwavy: "if we can't write AGI from scratch, then we can try to learn it from scratch starting with model-free approaches like Hebbian learning, and somewhere between roughly mouse-level and human/AGI, a miracle happens, and we get full model-based reasoning". But hey, can't argue with success there! We have loads of nice results from DeepMind and others with this sort of flavor†.

On the other hand, I'm not able to think of any dissenters who claimed that you could have AGI purely using model-free RL with no model-based RL anywhere to be seen. Like, you can imagine it working (eg. in silico environments for everything), but it's not very plausible since it would seem like the computational requirements go astronomical fast.

Back then, they had a richer conception of RL, heavier on the model-based RL half of the field, and one more relevant to the current era, than the impoverished 2017 era of 'let's just PPO/Impala everything we can't MCTS and not talk about how this is supposed to reach AGI, exactly, even if it scales reasonably well'. If you want to critique what AI researchers could imagine back in 1992, you should be reading Schmidhuber, not Bostrom. ("Computing is a pop culture", as Kay put it, and DL, and DRL, are especially pop culture right now. Which is not necessarily a bad thing if you're just trying to get things to work, but if you are going to make historical arguments about what people were or were not thinking in 2014, or 1992, pop culture isn't going to cut the mustard. People back then weren't stupid, and often had very sophisticated well-thought-out ideas & paradigms; they just had a millionth of the compute/data/infrastructure they needed to make any of it work properly...)

If you look at that REINFORCE paper, Williams isn't even all that concerned with direct use of it to train a model to solve RL tasks.* He's more concerned with handling non-differentiable things in general, like stochastic rather than the usual deterministic neurons we use, so you could 'backpropagate through the environment' models like Schmidhuber & Huber 1990, which bootstrap from random initialization using the high-variance REINFORCE-like learning signal to a superior model. (Hm, why, that sounds like the sort of thing you might do if you analyze the inductive biases of model-free approaches which entrain larger systems which have their own internal reinforcement signals which they maximize...) As Schmidhuber has been saying for decades, it's meta-learning all the way up/down. The species-level model-free RL algorithm (evolution) creates model-free within-lifetime learning algorithms (like REINFORCE), which creates model-based within-lifetime learning algorithms (like neural net models) which create learning over families (generalization) for cross-task within-lifetime learning which create learning algorithms (ICL/history-based meta-learners**) for within-episode learning which create...

It's no surprise that the "multiply a set of candidate entities by a fixed small percentage based on each entity's reward" algorithm pops up everywhere from evolution to free markets to DRL to ensemble machine learning over 'experts', because that model-free algorithm is always available as the fallback strategy when you can't do anything smarter (yet). Model-free is just the first step, and in many ways, least interesting & important step. I'm always weirded out to read one of these posts where something like PPO or evolution strategies is treated as the only RL algorithm around and things like expert iteration an annoying nuisance to be relegated to a footnote - 'reward is not the optimization target!* * except when it is in these annoying exceptions like AlphaZero, but fortunately, we can ignore these, because after all, it's not like humans or AGI or superintelligences would ever do crazy stuff like "plan" or "reason" or "search"'.

* He'd've probably been surprised to see people just... using it for stuff like DoTA2 on fully-differentiable BPTT RNNs. I wonder if he's ever done any interviews on DL recently? AFAIK he's still alive.

** Specifically, in the case of Transformers, it seems to be by self-attention doing gradient descent steps on an abstracted version of a problem; gradient descent itself isn't a very smart algorithm, but if the abstract version is a model that encodes the correct sufficient statistics of the broader meta-problem, then it can be very easy to make Bayes-optimal predictions/choices for any specific problem.

† my paper-of-the-day website feature yesterday popped up "Learning few-shot imitation as cultural transmission", Bhoopchand et al 2023 (excerpts) which is a nice example because they show clearly how history+diverse-environments+simple-priors-of-an-evolvable-sort elicit 'inner' model-like imitation learning starting from the initial 'outer' model-free RL algorithm (MPO, an actor-critic).

'reward is not the optimization target!* *except when it is in these annoying exceptions like AlphaZero, but fortunately, we can ignore these, because after all, it's not like humans or AGI or superintelligences would ever do crazy stuff like "plan" or "reason" or "search"'.

If you're going to mock me, at least be correct when you do it!

I think that reward is still not the optimization target in AlphaZero (the way I'm using the term, at least). Learning a leaf node evaluator on a given reinforcement signal, and then bootstrapping the leaf node evaluator via MCTS on that leaf node evaluator, does not mean that the aggregate trained system

- directly optimizes for the reinforcement signal, or

- "cares" about that reinforcement signal,

- or "does its best" to optimize the reinforcement signal (as opposed to some historical reinforcement correlate, like winning or capturing pieces or something stranger).

If most of the "optimization power" were coming from e.g. MCTS on direct reward signal, then yup, I'd agree that the reward signal is the primary optimization target of this system. That isn't the case here.

You might use the phrase "reward as optimization target" differently than I do, but if we're just using words differently, then it wouldn't be appropriate to describe me as "ignoring planning."

Learning a leaf node evaluator on a given reinforcement signal, and then bootstrapping the leaf node evaluator via MCTS on that leaf node evaluator, does not mean that the aggregate trained system

directly optimizes for the reinforcement signal, or "cares" about that reinforcement signal, or "does its best" to optimize the reinforcement signal (as opposed to some historical reinforcement correlate, like winning or capturing pieces or something stranger).

Yes, it does mean all of that, because MCTS is asymptotically optimal (unsurprisingly, given that it's a tree search on the model), and will eg. happily optimize the reinforcement signal rather than proxies like capturing pieces as it learns through search that capturing pieces in particular states is not as useful as usual. If you expand out the search tree long enough (whether or not you use the AlphaZero NN to make that expansion more efficient by evaluating intermediate nodes and then back-propagating that through the current tree), then it converges on the complete, true, ground truth game tree, with all leaves evaluated with the true reward, with any imperfections in the leaf evaluator value estimate washed out. It directly optimizes the reinforcement signal, cares about nothing else, and is very pleased to lose if that results in a higher reward or not capture pieces if that results in a higher reward.*

All the NN is, is a cache or an amortization of the search algorithm. Caches are important and life would be miserable without them, but it would be absurd to say that adding a cache to a function means "that function doesn't compute the function" or "the range is not the target of the function".

I'm a little baffled by this argument that because the NN is not already omniscient and might mis-estimate the value of a leaf node, that apparently it's not optimizing for the reward and that's not the goal of the system and the system doesn't care about reward, no matter how much it converges toward said reward as it plans/searches more, or gets better at acquiring said reward as it fixes those errors.
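The convergence claim can be illustrated on a toy game. The sketch below is not MCTS and not AlphaZero: it swaps in plain depth-limited negamax on a small take-away game (remove 1 to 3 stones, whoever takes the last stone wins), with a deliberately wrong leaf evaluator standing in for an imperfect value network. The game, the evaluator, and all names are assumptions chosen for illustration.

```python
def true_value(stones):
    """Ground-truth reward for the player to move: +1 for a win, -1 for a loss."""
    return -1 if stones % 4 == 0 else 1


def flawed_evaluator(stones):
    """Hypothetical bad value estimate: always mildly optimistic."""
    return 0.5


def search(stones, depth, evaluator):
    """Depth-limited negamax; falls back to the evaluator at the search horizon."""
    if stones == 0:
        return -1.0  # the opponent took the last stone, so the player to move lost
    if depth == 0:
        return evaluator(stones)
    return max(
        -search(stones - take, depth - 1, evaluator)
        for take in (1, 2, 3)
        if take <= stones
    )


shallow = search(8, 0, flawed_evaluator)  # pure evaluator guess: 0.5 (wrong sign)
deep = search(8, 8, flawed_evaluator)     # every line reaches a terminal state: -1.0
print(shallow, deep, true_value(8))
```

Once the depth is at least the pile size, every path ends in a terminal state, so the evaluator is never consulted and its errors cannot affect the result. In this toy setting the evaluator only matters as a shortcut for shallow search, which is the "cache" role described above.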

If most of the "optimization power" were coming from e.g. MCTS on direct reward signal, then yup, I'd agree that the reward signal is the primary optimization target of this system.

The reward signal is in fact the primary optimization target, because it is where the neural net's value estimates derive from, and the 'system' corrects them eventually and converges. The dog wags the tail, sooner or later.

* I think I've noted this elsewhere, and mentioned my Kelly coinflip trajectories as a nice visualization of how model-based RL will behave, but to repeat: MCTS algorithms in Go/chess were noted for that sort of behavior, especially for sacrificing pieces or territory while they were ahead, in order to 'lock down' the game and maximize the probability of victory, rather than the margin of victory; and vice-versa, for taking big risks when they were behind. Because the tree didn't back-propagate any rewards on 'margin', just on 0/1 rewards from victory, and didn't care about proxy heuristics like 'pieces captured' if the tree search found otherwise.
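A small sketch of the difference between backing up a 0/1 victory reward and backing up the margin. The two positions, their moves, and the outcome probabilities are invented numbers for illustration, not taken from any real Go or chess engine.

```python
def expected(outcomes, reward):
    """Expected reward over (probability, final_margin) outcomes."""
    return sum(p * reward(margin) for p, margin in outcomes)


def win_reward(margin):
    return 1.0 if margin > 0 else 0.0  # 0/1 reward from victory only


def margin_reward(margin):
    return float(margin)  # proxy: size of the win or loss


def best_move(moves, reward):
    return max(moves, key=lambda name: expected(moves[name], reward))


# Hypothetical position where we are ahead: "safe" locks in a narrow win.
ahead = {"safe": [(1.0, 1)], "risky": [(0.6, 10), (0.4, -5)]}
# Hypothetical position where we are behind: only a gamble can still win.
behind = {"safe": [(1.0, -1)], "risky": [(0.2, 1), (0.8, -20)]}

print(best_move(ahead, win_reward), best_move(ahead, margin_reward))
print(best_move(behind, win_reward), best_move(behind, margin_reward))
```

With these numbers the 0/1 maximizer plays safe when ahead and gambles when behind, while the margin maximizer does the opposite in both positions.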

How many alignment techniques presuppose an AI being motivated by the training signal (e.g. AI Safety via Debate)

It would be good to get a definitive response from @Geoffrey Irving or @paulfchristiano, but I don't think AI Safety via Debate presupposes an AI being motivated by the training signal. Looking at the paper again, there is some theoretical work that assumes "each agent maximizes their probability of winning" but I think the idea is sufficiently well-motivated (at least as a research approach) even if you took that section out, and simply view Debate as a way to do RL training on an AI that is superhumanly capable (and hence hard or unsafe to do straight RLHF on).

BTW what is your overall view on "scalable alignment" techniques such as Debate and IDA? (I guess I'm getting the vibe from this quote that you don't like them, and want to get clarification so I don't mislead myself.)

I certainly do think that debate is motivated by modeling agents as being optimized to increase their reward, and debate is an attempt at writing down a less hackable reward function. But I also think RL can be sensibly described as trying to increase reward, and generally don't understand the section of the document that says it obviously is not doing that. And then if the RL algorithm is trying to increase reward, and there is a meta-learning phenomenon that causes agents to learn algorithms, then the agents will be trying to increase reward.

Reading through the section again, it seems like the claim is that my first sentence "debate is motivated by agents being optimized to increase reward" is categorically different than "debate is motivated by agents being themselves motivated to increase reward". But these two cases seem separated only by a capability gap to me: sufficiently strong agents will be stronger if they record algorithms that adapt to increase reward in different cases.

The post defending the claim is Reward is not the optimization target. Iirc, TurnTrout has described it as one of his most important posts on LW.

but I don't think AI Safety via Debate presupposes an AI being motivated by the training signal

This seems right to me.

I often imagine debate (and similar techniques) being applied in the (low-stakes/average-case/non-concentrated) control setting. The control setting is the case where you are maximally conservative about the AI's motivations and then try to demonstrate safety via making the AI incapable of causing catastrophic harm.

If you make pessimistic assumptions about AI motivations like this, then you have to worry about concerns like exploration hacking (or even gradient hacking), but it's still plausible that debate adds considerable value regardless.

We could also less conservatively assume that AIs might be misaligned (including seriously misaligned with problematic long-range goals), but won't necessarily prefer colluding with other AIs over working with humans (e.g. because humanity offers payment for labor). In this case, techniques like debate seem quite applicable and the situation could be very dangerous in the absence of good enough approaches (at least if AIs are quite superhuman).

More generally, debate could be applicable to any type of misalignment which you think might cause problems over a large number of independently assessable actions.

I am not covering training setups where we purposefully train an AI to be agentic and autonomous. I just think it's not plausible that we just keep scaling up networks, run pretraining + light RLHF, and then produce a schemer.[2]

Like Ryan, I'm interested in how much of this claim is conditional on "just keep scaling up networks" being insufficient to produce relevantly-superhuman systems (i.e. systems capable of doing scientific R&D better and faster than humans, without humans in the intellectual part of the loop). If it's "most of it", then my guess is that accounts for a good chunk of the disagreement.

I don't expect the current paradigm will be insufficient (though it seems totally possible). Off the cuff I expect 75% that something like the current paradigm will be sufficient, with some probability that something else happens first. (Note that "something like the current paradigm" doesn't just involve scaling up networks.)

(Disclaimer: Nothing in this comment is meant to disagree with “I just think it's not plausible that we just keep scaling up [LLM] networks, run pretraining + light RLHF, and then produce a schemer.” I’m agnostic about that, maybe leaning towards agreement, although that’s related to skepticism about the capabilities that would result.)

It is simply not true that "[RL approaches] typically involve creating a system that seeks to maximize a reward signal."

I agree that Bostrom was confused about RL. But I also think there are some vaguely-similar claims to the above that are sound, in particular:

- RL approaches may involve inference-time planning / search / lookahead, and if they do, then that inference-time planning process can generally be described as “seeking to maximize a learned value function / reward model / whatever” (which need not be identical to the reward signal in the RL setup).

- And if we compare Bostrom’s incorrect “seeking to maximize the actual reward signal” to the better “seeking at inference time to maximize a learned value function / reward model / whatever to the best of its current understanding”, then…

- We should feel better about wireheading—under Bostrom’s assumptions, the AI will absolutely 100% be trying to wirehead, whereas in the corrected version, the AI might or might not be trying to wirehead.

- We should have mixed updates about power-seeking. On the plus side, it’s at least possible for the learned value function to wind up incorporating complex “conceptual” and deontological motivations like being helpful, corrigible, following rules and norms, etc., whereas a reward function can’t (easily) do that. On the minus side, the AI’s motivations become generally harder to reason about; e.g. a myopic reward signal can give rise to a non-myopic learned value function.

- RL approaches historically have typically involved the programmer wanting to get a maximally high reward signal, and creating a training setup such that the resulting trained model does stuff that gets as high a reward signal as possible. And this continues to be a very important lens for understanding why RL algorithms work the way they work. Like, if I were teaching an RL class, and needed to explain the formulas for TD learning or PPO or whatever, I think I would struggle to explain the formulas without saying something like “let’s pretend that you the programmer are interested in producing trained models that score maximally highly according to the reward function. How would you update the model parameters in such-and-such situation…?” Right?

- Related to the previous bullet, I think many RL approaches have a notion of “global optimum” and “training to convergence” (e.g. given infinite time in a finite episodic environment). And if a model is “trained to convergence”, then it will behaviorally “seek to maximize a reward signal”. I think that’s important to have in mind, although it might or might not be relevant in practice.
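As a hedged illustration of the last two bullets, here is a tabular TD-style (Q-learning) update run to convergence on a tiny finite episodic environment. The two-state environment, the reward values, and the constants are assumptions invented for this sketch, and sweeping every state-action pair deterministically is a simplification of how RL agents actually gather experience.

```python
GAMMA, ALPHA = 0.9, 0.5

# TRANSITIONS[state][action] = (reward, next_state); next_state None ends the episode.
# Hypothetical environment: grab a small reward now, or wait for a larger one.
TRANSITIONS = {
    0: {"grab": (1.0, None), "wait": (0.0, 1)},
    1: {"grab": (5.0, None), "wait": (0.0, None)},
}


def td_sweep(q):
    """Apply the update Q <- Q + ALPHA * (target - Q) once to every state-action pair."""
    for state, actions in TRANSITIONS.items():
        for action, (reward, nxt) in actions.items():
            bootstrap = 0.0 if nxt is None else max(q[nxt].values())
            target = reward + GAMMA * bootstrap
            q[state][action] += ALPHA * (target - q[state][action])


q = {s: {a: 0.0 for a in acts} for s, acts in TRANSITIONS.items()}
for _ in range(100):
    td_sweep(q)

greedy = {s: max(q[s], key=q[s].get) for s in q}
print(q, greedy)
```

At convergence the greedy policy waits in state 0 and grabs in state 1, which is the reward-maximizing behavior in this toy setup. Whether anything like convergence holds in practice is exactly the open question raised in the bullet above.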

I bet people would care a lot less about “reward hacking” if RL’s reinforcement signal hadn’t ever been called “reward.”

In the context of model-based planning, there’s a concern that the AI will come upon a plan which from the AI’s perspective is a “brilliant out-of-the-box solution to a tricky problem”, but from the programmer’s perspective is “reward-hacking, or Goodharting the value function (a.k.a. exploiting an anomalous edge-case in the value function), or whatever”. Treacherous turns would probably be in this category.

There’s a terminology problem where if I just say “the AI finds an out-of-the-box solution”, it conveys the positive connotation but not the negative one, and if I just say “reward-hacking” or “Goodharting the value function” it conveys the negative part without the positive.

The positive part is important. We want our AIs to find clever out-of-the-box solutions! If AIs are not finding clever out-of-the-box solutions, people will presumably keep improving AI algorithms until they do.

Ultimately, we want to be able to make AIs that think outside of some of the boxes but definitely stay inside other boxes. But that’s tricky, because the whole idea of “think outside the box” is that nobody is ever aware of which boxes they are thinking inside of.

Anyway, this is all a bit abstract and weird, but I guess I’m arguing that I think the words “reward hacking” are generally pointing towards a very important AGI-safety-relevant phenomenon, whatever we want to call it.

The strongest argument for reward-maximization which I’m aware of is: Human brains do RL and often care about some kind of tight reward-correlate, to some degree. Humans are like deep learning systems in some ways, and so that’s evidence that “learning setups which work in reality” can come to care about their own training signals.

Isn't there a similar argument for "plausible that we just keep scaling up networks, run pretraining + light RLHF, and then produce a schemer"? Namely the way we train our kids seems pretty similar to "pretraining + light RLHF" and we often do end up with scheming/deceptive kids. (I'm speaking partly from experience.) ETA: On second thought, maybe it's not that similar? In any case, I'd be interested in an explanation of what the differences are and why one type of training produces schemers and the other is very unlikely to.

Also, in this post you argue against several arguments for high risk of scheming/deception from this kind of training but I can't find where you talk about why you think the risk is so low ("not plausible"). You just say 'Undo the update from the “counting argument”, however, and the probability of scheming plummets substantially.' but why is your prior for it so low? I would be interested in whatever reasons/explanations you can share. The same goes for others who have indicated agreement with Alex's assessment of this particular risk being low.