Square visualization of probabilities on two events

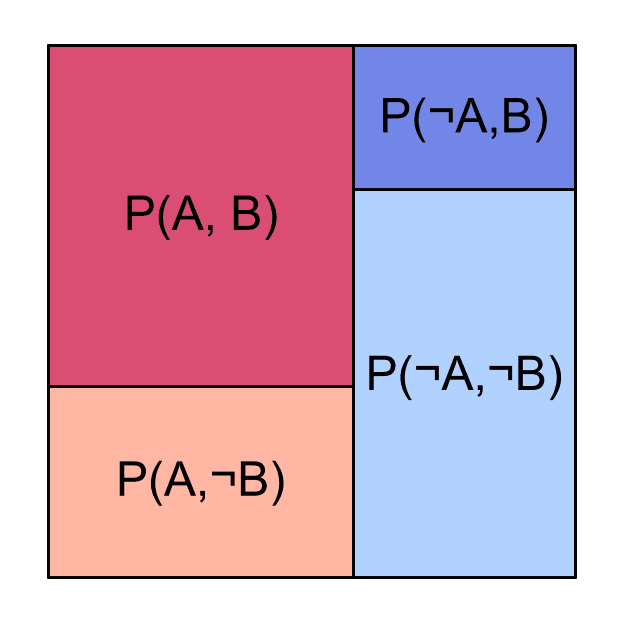

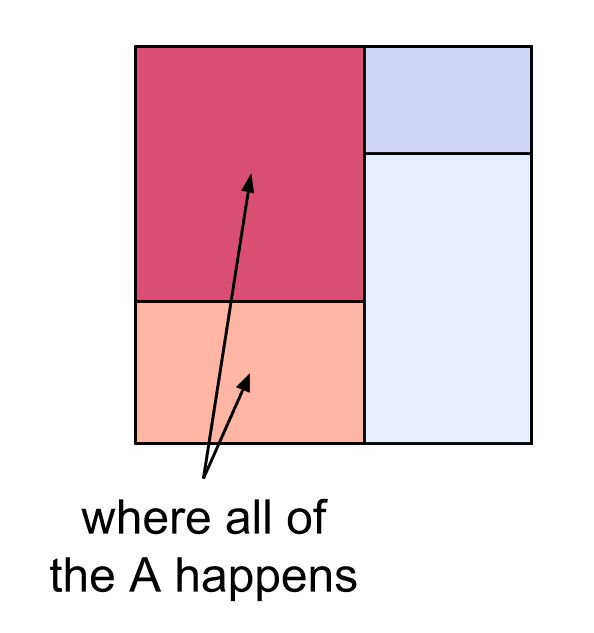

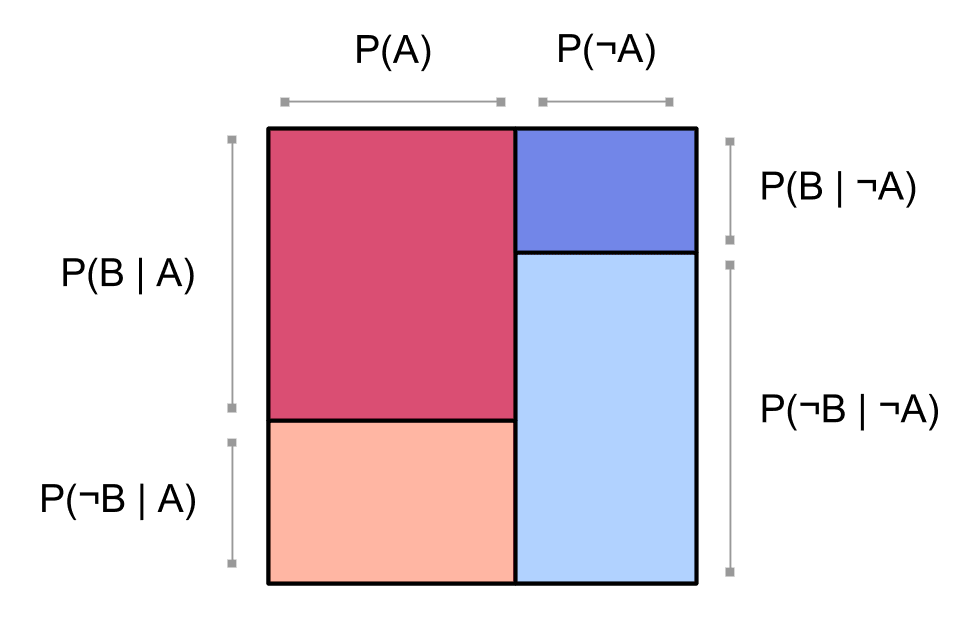

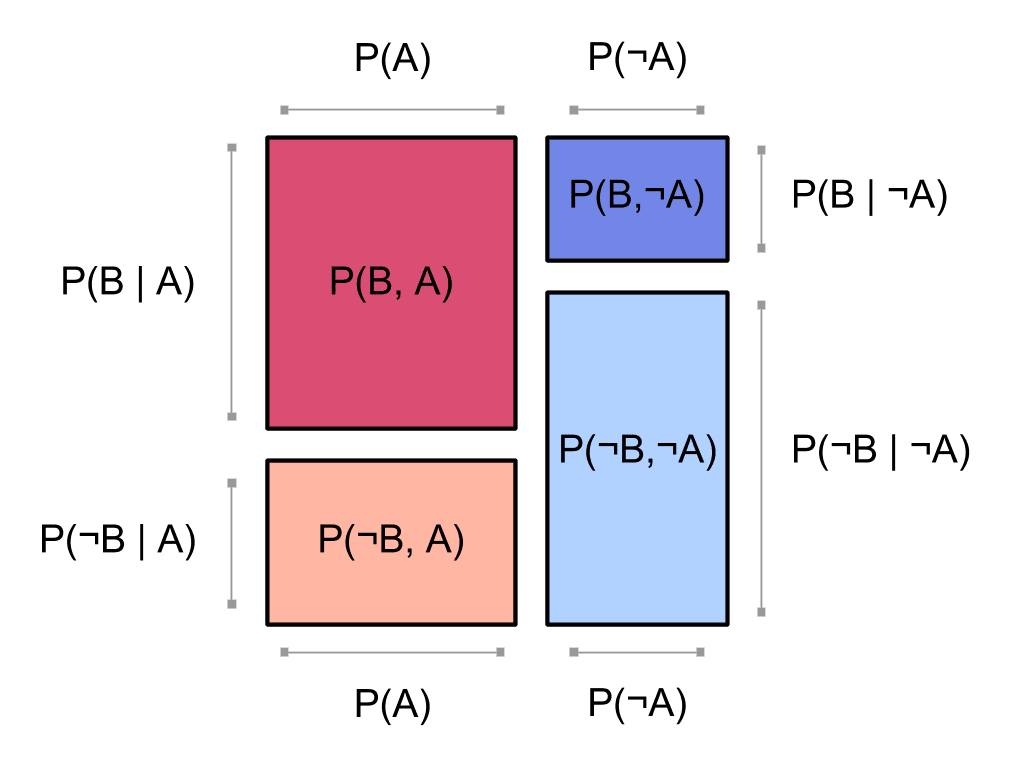

Say we have two events, $A$ and $B$, and a probability distribution $\mathbb P$ over whether or not they happen. We can represent $\mathbb P$ as a square:

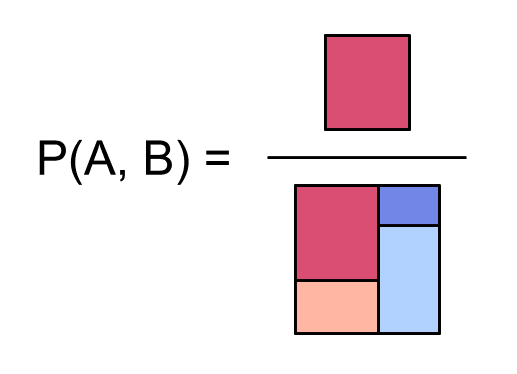

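So, for example, the probability $\mathbb P(A, B)$ that both $A$ and $B$ occur is the ratio of the area of the dark red region to the area of the entire square:

$$\mathbb P(A, B) = \frac{\text{area of the dark red region}}{\text{area of the entire square}}.$$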
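To make the area picture concrete, here is a minimal Python sketch that stores each block of the square as a (width, height) rectangle and reads off $\mathbb P(A, B)$ as an area ratio. The numbers are hypothetical, chosen only for illustration (the figures don't state exact values), and `blocks` and `area` are illustrative names. Exact fractions from the standard library keep the arithmetic free of rounding.

```python
from fractions import Fraction

# Hypothetical numbers for illustration only.
# Each block is a rectangle (width, height) inside a unit square.
# Keys are (A happens, B happens).
blocks = {
    (True, True): (Fraction(2, 5), Fraction(3, 4)),    # A and B (dark red)
    (True, False): (Fraction(2, 5), Fraction(1, 4)),   # A and not B (light red)
    (False, True): (Fraction(3, 5), Fraction(1, 2)),   # not A and B (dark blue)
    (False, False): (Fraction(3, 5), Fraction(1, 2)),  # not A and not B (light blue)
}

def area(rect):
    """Area of a (width, height) rectangle."""
    width, height = rect
    return width * height

# The four blocks tile the whole square.
square_area = sum(area(rect) for rect in blocks.values())
# P(A, B) = area of the dark red block / area of the whole square.
p_a_and_b = area(blocks[(True, True)]) / square_area

print(square_area)  # 1
print(p_a_and_b)    # 3/10
```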

Visualizing probabilities in a square is neat because we can draw simple pictures that highlight interesting facts about our probability distribution.

Below are some pictures illustrating:

independent events (What happens if the columns and the rows in our square both line up?)

marginal probabilities (If we're looking at a square of probabilities, where's the probability of $A$, or the probability $\mathbb P(\neg B)$?)

conditional probabilities (Can we find in the square the probability $\mathbb P(B \mid A)$ of $B$ if we condition on seeing $A$? What about the conditional probability $\mathbb P(A \mid B)$?)

factoring a distribution (Can we always write $\mathbb P$ as a square? Why do the columns line up but not the rows?)

the process of computing joint probabilities from factored probabilities

Independent events

Here's a picture of the joint distribution of two independent events $A$ and $B$:

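Now the rows for $B$ and $\neg B$ line up across the two columns. This is because $\mathbb P(B \mid A) = \mathbb P(B) = \mathbb P(B \mid \neg A)$. When $A$ and $B$ are independent, updating on $A$ or $\neg A$ doesn't change the probability of $B$.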
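Here is a minimal Python sketch of why the rows line up. It builds a hypothetical joint distribution for independent events by multiplying $\mathbb P(A = a)$ by $\mathbb P(B = b)$, then checks that the share of $B$ inside each column equals $\mathbb P(B)$. The helper names and the numbers are made up for illustration.

```python
from fractions import Fraction

def independent_joint(p_a, p_b):
    """Joint distribution of independent events: P(A=a, B=b) = P(A=a) * P(B=b)."""
    pa = {True: p_a, False: 1 - p_a}
    pb = {True: p_b, False: 1 - p_b}
    return {(a, b): pa[a] * pb[b] for a in (True, False) for b in (True, False)}

def p_b_given_a(joint, a):
    """Share of column A=a taken up by the B block, i.e. P(B | A=a)."""
    column = joint[(a, True)] + joint[(a, False)]
    return joint[(a, True)] / column

# Hypothetical inputs: P(A) = 2/5, P(B) = 3/5.
joint = independent_joint(Fraction(2, 5), Fraction(3, 5))
p_b = joint[(True, True)] + joint[(False, True)]

# The rows line up: the B / not-B split is the same in both columns.
print(p_b_given_a(joint, True), p_b, p_b_given_a(joint, False))  # 3/5 3/5 3/5
```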

For more on this visualization of independent events, see the aptly named Two independent events: Square visualization.

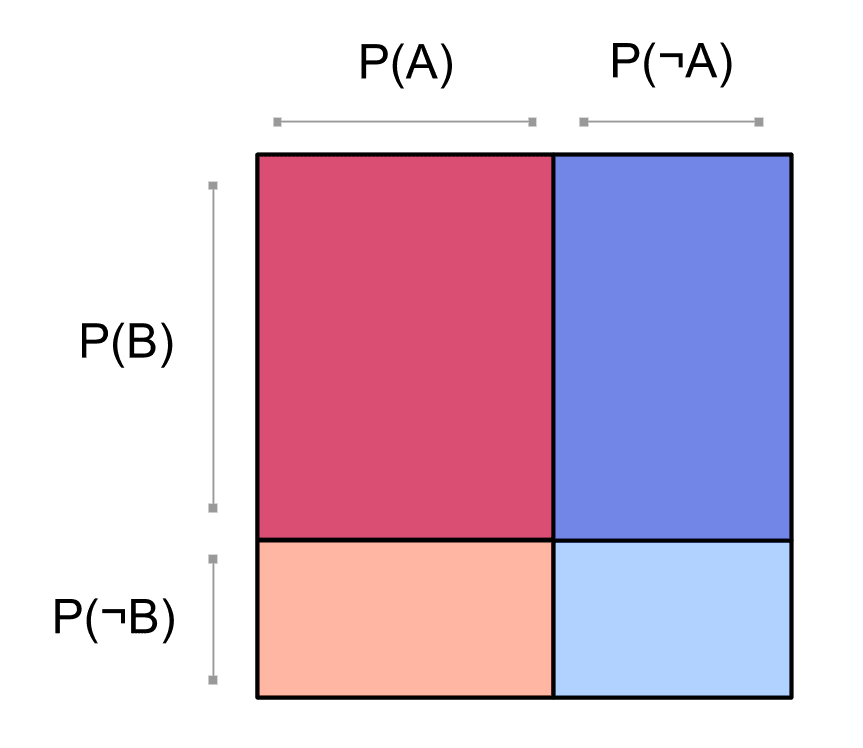

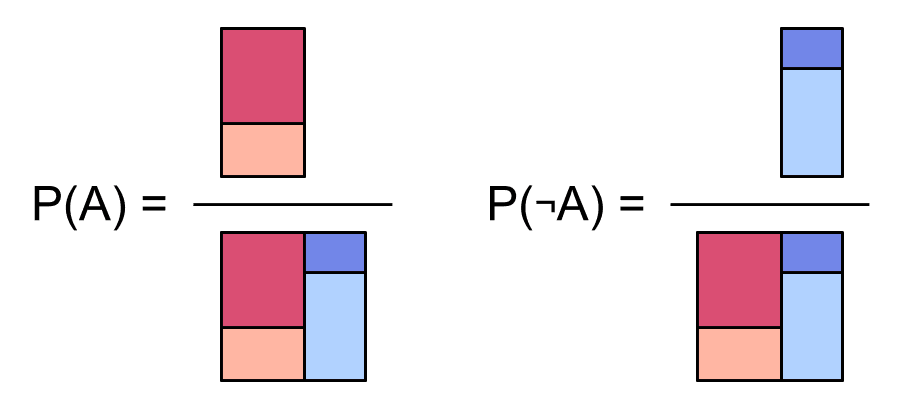

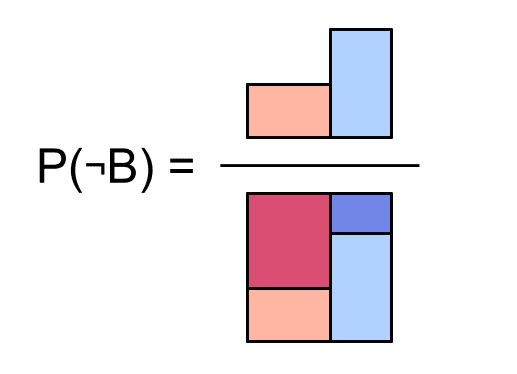

Marginal probabilities

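We can see the marginal probabilities of $A$ and $B$ by looking at some of the blocks in our square. For example, to find the probability $\mathbb P(\neg A)$ that $A$ doesn't occur, we just need to add up all the blocks where $\neg A$ happens: $\mathbb P(\neg A) = \mathbb P(\neg A, B) + \mathbb P(\neg A, \neg B)$.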
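Adding up blocks can be sketched in Python as summing the joint probabilities that match one variable's value. The joint distribution below is hypothetical (it matches the illustrative numbers used elsewhere in these sketches), and `marginal_a` and `marginal_b` are illustrative names.

```python
from fractions import Fraction

# Hypothetical joint distribution; keys are (A happens, B happens).
joint = {
    (True, True): Fraction(3, 10),
    (True, False): Fraction(1, 10),
    (False, True): Fraction(3, 10),
    (False, False): Fraction(3, 10),
}

def marginal_a(joint, a):
    """P(A=a): add up the blocks in the column where A=a."""
    return sum(p for (a_val, _), p in joint.items() if a_val == a)

def marginal_b(joint, b):
    """P(B=b): add up the blocks, from both columns, where B=b."""
    return sum(p for (_, b_val), p in joint.items() if b_val == b)

print(marginal_a(joint, False))  # 3/5, i.e. P(not A, B) + P(not A, not B)
print(marginal_a(joint, True))   # 2/5
print(marginal_b(joint, False))  # 2/5
```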

Here's the probability $\mathbb P(A)$ of $A$, and the probability $\mathbb P(\neg A)$ of $\neg A$:

Here's the probability $\mathbb P(\neg B)$ of $\neg B$:

In these pictures we're dividing by the area of the whole square. Since the probability of anything at all happening is 1, we could just leave it out, but it'll be helpful for comparison while we think about conditionals next.

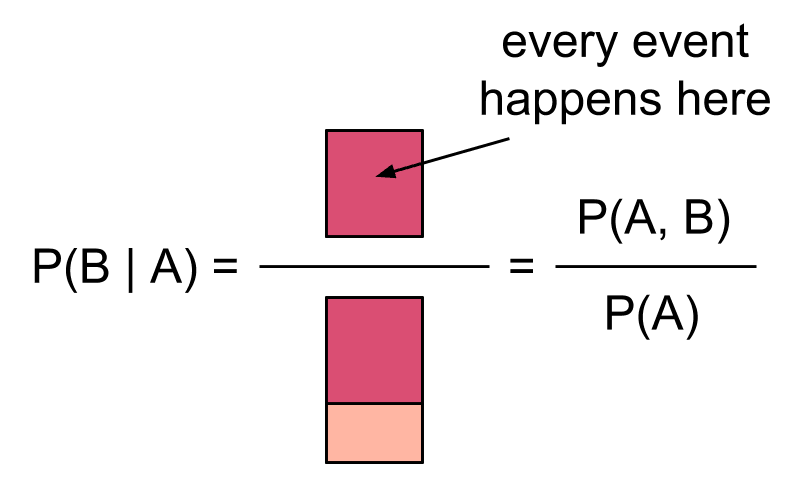

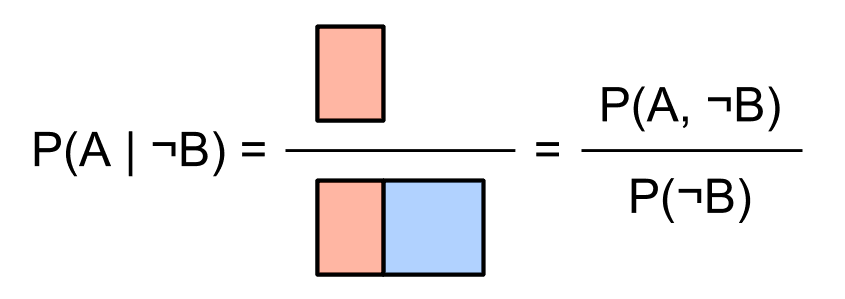

Conditional probabilities

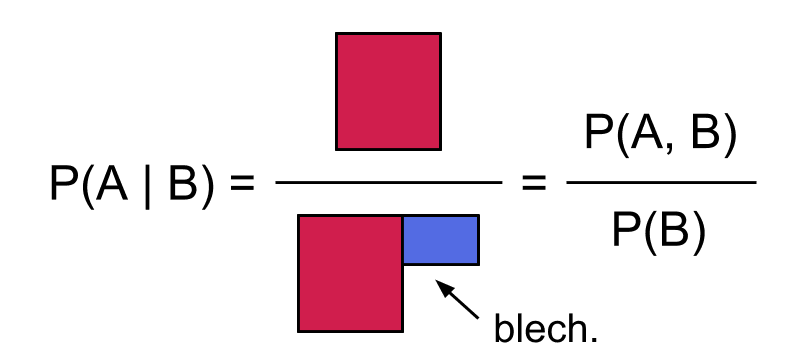

We can start with some probability $\mathbb P(B)$, and then assume that $A$ is true to get a conditional probability $\mathbb P(B \mid A)$ of $B$. Conditioning on $A$ being true is like restricting our whole attention to just the possible worlds where $A$ happens:

Then the conditional probability of $B$ given $A$ is the proportion of these $A$ worlds where $B$ also happens:

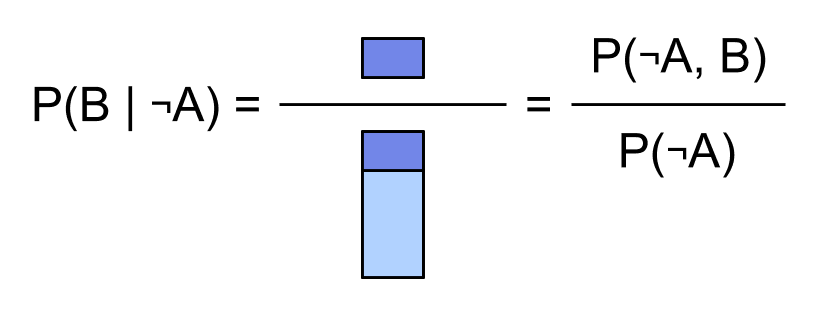
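Conditioning can be sketched in Python as throwing away every block outside one column and rescaling what's left so it sums to 1. The joint distribution below is hypothetical, and `condition_on_a` is an illustrative name, not a library function.

```python
from fractions import Fraction

# Hypothetical joint distribution; keys are (A happens, B happens).
joint = {
    (True, True): Fraction(3, 10),
    (True, False): Fraction(1, 10),
    (False, True): Fraction(3, 10),
    (False, False): Fraction(3, 10),
}

def condition_on_a(joint, a):
    """Keep only the worlds where A=a, then rescale so they sum to 1."""
    kept = {b: p for (a_val, b), p in joint.items() if a_val == a}
    total = sum(kept.values())  # this is P(A=a), the width of the column
    return {b: p / total for b, p in kept.items()}

given_a = condition_on_a(joint, True)
given_not_a = condition_on_a(joint, False)
print(given_a[True])      # P(B | A) = 3/4
print(given_not_a[True])  # P(B | not A) = 1/2
```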

If instead we condition on $\neg A$, we get:

So our square visualization gives a nice way to see, at a glance, the conditional probabilities of $B$ given $A$ or given $\neg A$:

We don't get such nice pictures for $\mathbb P(A \mid B)$:
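The reason is visible in a small Python sketch: the blocks where $B$ happens sit in two different columns, so $\mathbb P(A \mid B)$ has to gather pieces from both before dividing. The joint distribution is hypothetical and `p_a_given_b` is an illustrative name.

```python
from fractions import Fraction

# Hypothetical joint distribution; keys are (A happens, B happens).
joint = {
    (True, True): Fraction(3, 10),
    (True, False): Fraction(1, 10),
    (False, True): Fraction(3, 10),
    (False, False): Fraction(3, 10),
}

def p_a_given_b(joint, b):
    """P(A | B=b): the B=b blocks lie in different columns, so collect them first."""
    a_and_b = joint[(True, b)]                      # piece from the A column
    total_b = joint[(True, b)] + joint[(False, b)]  # pieces from both columns
    return a_and_b / total_b

print(p_a_given_b(joint, True))   # 1/2
print(p_a_given_b(joint, False))  # 1/4
```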

Factoring a distribution

Recall the square showing our joint distribution $\mathbb P$:

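Notice that in the above square, the reddish blocks for $\mathbb P(A, B)$ and $\mathbb P(A, \neg B)$ are the same width and form a column; and likewise the bluish blocks for $\mathbb P(\neg A, B)$ and $\mathbb P(\neg A, \neg B)$. This is because we chose to factor our probability distribution starting with $A$:

$$\mathbb P(A, B) = \mathbb P(A)\, \mathbb P(B \mid A).$$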

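Let's use the equivalence between events and binary random variables, so if we say $\mathbb P(B = \text{true})$ we mean $\mathbb P(B)$. For any choice of truth values $t_A \in \{\text{true}, \text{false}\}$ and $t_B \in \{\text{true}, \text{false}\}$, we have

$$\mathbb P(A = t_A, B = t_B) = \mathbb P(A = t_A)\, \mathbb P(B = t_B \mid A = t_A).$$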

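The first factor $\mathbb P(A = t_A)$ tells us how wide to make the red column ($\mathbb P(A = \text{true})$) relative to the blue column ($\mathbb P(A = \text{false})$). Then the second factor $\mathbb P(B = t_B \mid A = t_A)$ tells us the proportions of dark ($B = \text{true}$) and light ($B = \text{false}$) within the column for $A = t_A$.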
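Here is a minimal Python sketch of how the two factors lay out the square: $\mathbb P(A = a)$ sets each column's width and $\mathbb P(B = b \mid A = a)$ sets each block's share of its column's height. The joint distribution is hypothetical, the function names are illustrative, and measuring the offsets `x` and `y` from one corner of the square is an assumed drawing convention.

```python
from fractions import Fraction

# Hypothetical joint distribution; keys are (A happens, B happens).
joint = {
    (True, True): Fraction(3, 10),
    (True, False): Fraction(1, 10),
    (False, True): Fraction(3, 10),
    (False, False): Fraction(3, 10),
}

def factor_by_a(joint):
    """Split P(A=a, B=b) into P(A=a) and P(B=b | A=a)."""
    p_a = {a: joint[(a, True)] + joint[(a, False)] for a in (True, False)}
    p_b_given_a = {(a, b): p / p_a[a] for (a, b), p in joint.items()}
    return p_a, p_b_given_a

def layout_by_a(joint):
    """Place each block as (x, y, width, height) in a unit square, one column per value of A."""
    p_a, p_b_given_a = factor_by_a(joint)
    rects = {}
    x = Fraction(0)
    for a in (True, False):
        width = p_a[a]  # first factor: how wide the column is
        y = Fraction(0)
        for b in (True, False):
            height = p_b_given_a[(a, b)]  # second factor: share of the column's height
            rects[(a, b)] = (x, y, width, height)
            y += height
        x += width
    return rects

rects = layout_by_a(joint)
for key, (x, y, width, height) in rects.items():
    # Each block's area equals its joint probability.
    print(key, x, y, width, height, width * height == joint[key])
```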

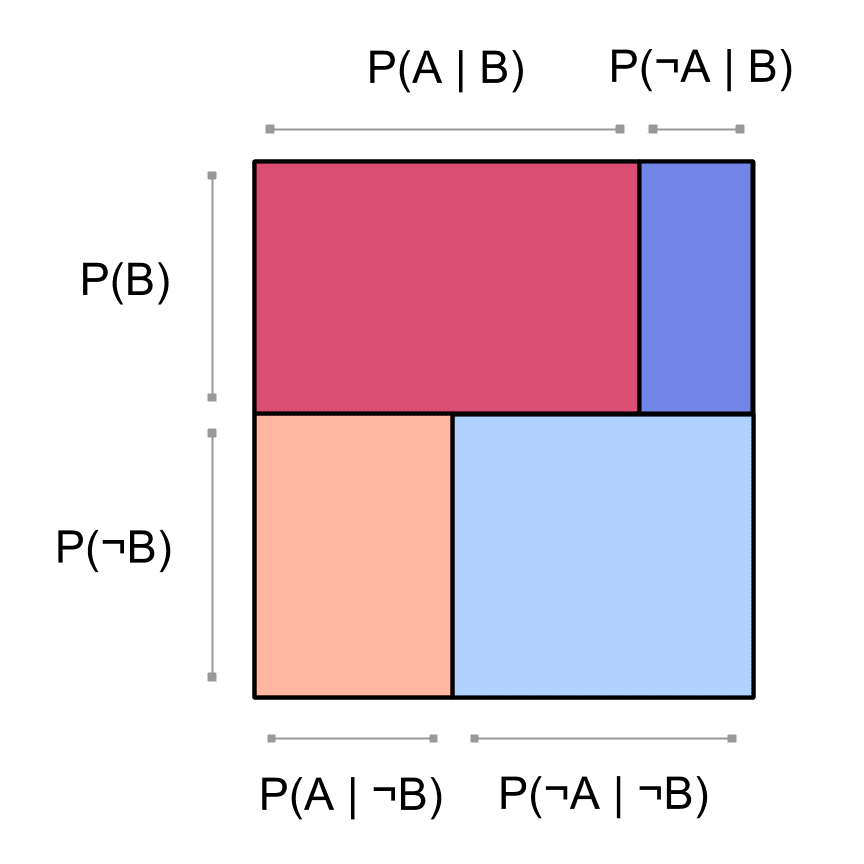

We could just as well have factored by $B$ first:

$$\mathbb P(A = t_A, B = t_B) = \mathbb P(B = t_B)\, \mathbb P(A = t_A \mid B = t_B).$$

Then we'd draw a picture like this:

By the way, earlier when we factored by $A$ first, we got simple pictures of the probabilities for $B$ conditioned on $A$. Now that we're factoring by $B$ first, we have simple pictures for the conditional probability $\mathbb P(A \mid B)$:

and for the conditional probability $\mathbb P(A \mid \neg B)$:
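The same split with $B$ first can be sketched in Python: $\mathbb P(B = b)$ now sizes each $B$ strip, and $\mathbb P(A = a \mid B = b)$ divides each strip between $A$ and $\neg A$. The joint distribution is hypothetical and `factor_by_b` is an illustrative name.

```python
from fractions import Fraction

# Hypothetical joint distribution; keys are (A happens, B happens).
joint = {
    (True, True): Fraction(3, 10),
    (True, False): Fraction(1, 10),
    (False, True): Fraction(3, 10),
    (False, False): Fraction(3, 10),
}

def factor_by_b(joint):
    """Split P(A=a, B=b) into P(B=b) and P(A=a | B=b)."""
    p_b = {b: joint[(True, b)] + joint[(False, b)] for b in (True, False)}
    p_a_given_b = {(a, b): p / p_b[b] for (a, b), p in joint.items()}
    return p_b, p_a_given_b

p_b, p_a_given_b = factor_by_b(joint)
print(p_b[True], p_a_given_b[(True, True)])    # 3/5 1/2
print(p_b[False], p_a_given_b[(True, False)])  # 2/5 1/4

# Multiplying the two factors gives back the joint, just as with factoring by A.
print(all(p_b[b] * p_a_given_b[(a, b)] == joint[(a, b)] for (a, b) in joint))  # True
```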

Computing joint probabilities from factored probabilities

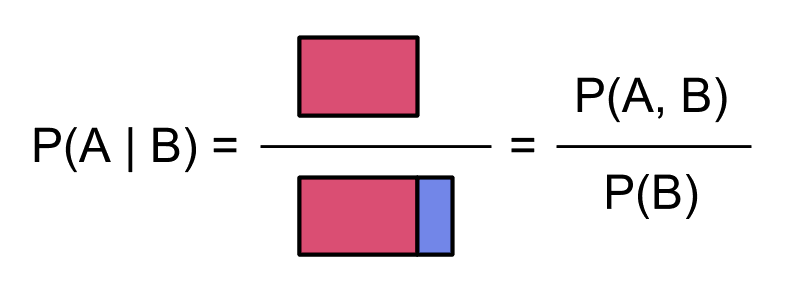

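Let's say we know the factored probabilities for $A$ and $B$, factoring by $A$. That is, we know $\mathbb P(A = \text{true})$, and we also know $\mathbb P(B = \text{true} \mid A = \text{true})$ and $\mathbb P(B = \text{true} \mid A = \text{false})$. How can we recover the joint probability $\mathbb P(A = t_A, B = t_B)$ that $A = t_A$ is the case and also $B = t_B$ is the case?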

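Since

$$\mathbb P(B = t_B \mid A = t_A) = \frac{\mathbb P(A = t_A, B = t_B)}{\mathbb P(A = t_A)},$$

we can multiply the prior $\mathbb P(A)$ by the conditional $\mathbb P(B \mid A)$ to get the joint $\mathbb P(A, B)$:

$$\mathbb P(A = t_A)\, \mathbb P(B = t_B \mid A = t_A) = \mathbb P(A = t_A, B = t_B).$$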

If we do this at the same time for all the possible truth values $t_A$ and $t_B$, we get back the full joint distribution:
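The multiplication can be sketched in Python as a function that takes exactly the three known numbers, $\mathbb P(A = \text{true})$, $\mathbb P(B = \text{true} \mid A = \text{true})$ and $\mathbb P(B = \text{true} \mid A = \text{false})$, and returns all four joint probabilities. The function name and the example inputs are hypothetical; the missing "false" probabilities come from taking complements, $1 - p$.

```python
from fractions import Fraction

def joint_from_factors(p_a_true, p_b_true_given_a_true, p_b_true_given_a_false):
    """Recover P(A=t_A, B=t_B) = P(A=t_A) * P(B=t_B | A=t_A) for all four (t_A, t_B)."""
    p_a = {True: p_a_true, False: 1 - p_a_true}
    p_b_true_given = {True: p_b_true_given_a_true, False: p_b_true_given_a_false}
    joint = {}
    for t_a in (True, False):
        for t_b in (True, False):
            # Conditional P(B=t_B | A=t_A), using the complement when t_B is false.
            p_b = p_b_true_given[t_a] if t_b else 1 - p_b_true_given[t_a]
            joint[(t_a, t_b)] = p_a[t_a] * p_b  # prior times conditional
    return joint

# Hypothetical inputs: P(A) = 2/5, P(B | A) = 3/4, P(B | not A) = 1/2.
joint = joint_from_factors(Fraction(2, 5), Fraction(3, 4), Fraction(1, 2))
for (t_a, t_b), p in joint.items():
    print(f"P(A={t_a}, B={t_b}) = {p}")
```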