
I don't think it's the most important or original or interesting thing I've done, but I'm proud of the ideas in here nevertheless. Basically, other researchers have now actually done many of the relevant experiments to explore the part of the tech tree I was advocating for in this post. See e.g. https://www.alignmentforum.org/posts/HuoyYQ6mFhS5pfZ4G/paper-output-supervision-can-obfuscate-the-cot

I'm very happy that those researchers are doing that research, and moreover, very happy that the big AI companies have sorta come together to agree on the importance of CoT monitorability! https://arxiv.org/abs/2507.11473

OK but are these ideas promising? How do they fit into the bigger picture?

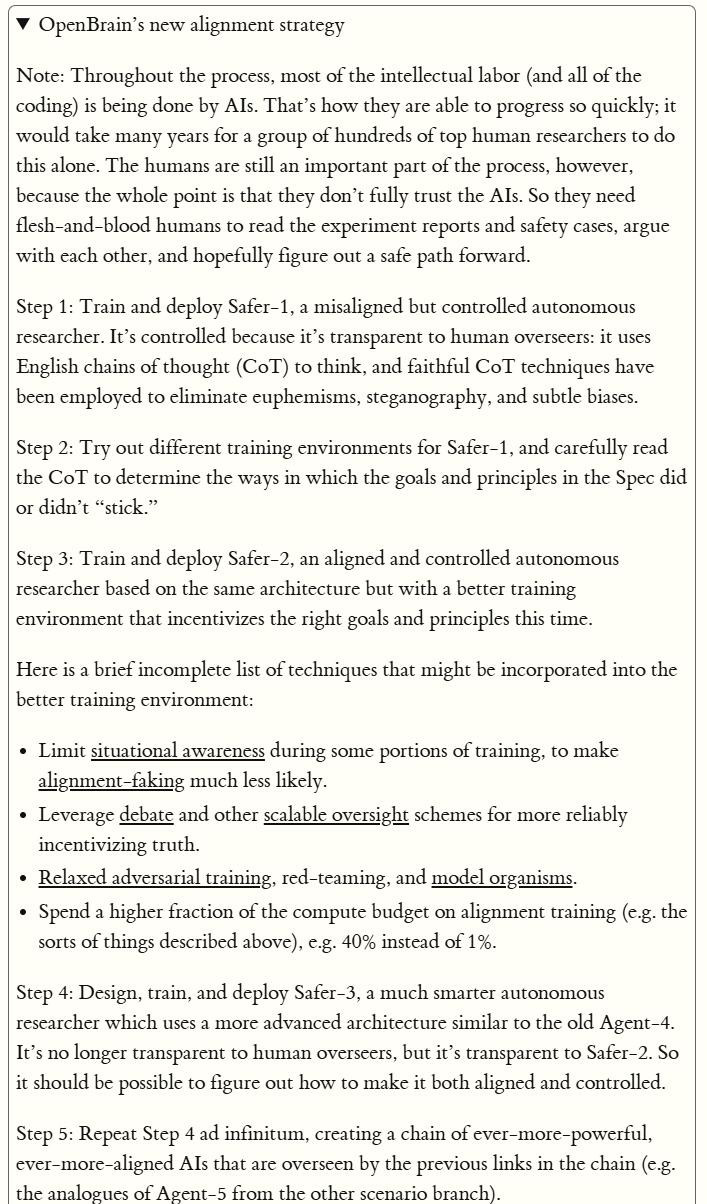

Conveniently, my answer to those questions is illustrated in the AI 2027 scenario:

It's not the only answer, though. I think that improving the monitorability of CoT is just amaaaazing for building up the science of AI and the science of AI alignment, and also for raising awareness about how AIs think and work, etc.

Another path to impact: if neuralese finally arrives and we have no more CoTs to look at, (a) some of the techniques for making good neuralese interpreters might benefit from the ideas developed for keeping CoT faithful, and (b) having previously studied all sorts of examples of misaligned CoTs, we'll find it easier to argue to people that there might be misaligned cognition happening in the neuralese.

(Oh also, to be clear, I'm not taking credit for all these ideas. Other people, like Fabian and Tamera for example, got into CoT monitorability before I did, as did MIRI arguably. I think of myself as having just picked up the torch and run with it for a bit, or more like, shouted at people from the sidelines to do so.)

Overall, do I recommend this for the review? Well, idk, I don't think it's THAT important or great. I like it, but I don't think it's super groundbreaking or anything. Also, it has low production value; it was a very low-effort dialogue that we banged out real quick after some good conversations.